Nonparametric methods don’t always get the credit they deserve. Methods like k-nearest neighbors (k-NN) and kernel density estimators are often dismissed as simple or old-fashioned, but their real strength lies in estimating conditional relationships directly from data, without imposing a fixed functional form. This flexibility makes them interpretable and powerful, especially when data are limited or when we want to incorporate domain knowledge.

In this article, I’ll show how nonparametric methods provide a unified foundation for conditional inference, covering regression, classification, and even synthetic data generation. Using the classic Iris dataset as a running example, I’ll illustrate how to estimate conditional distributions in practice and how they can support a wide range of data science tasks.

Estimating Conditional Distributions

The key idea is simple: instead of predicting just a single number or class label, we estimate the full range of possible outcomes for a variable given some other information. In other words, rather than focusing only on the expected value, we capture the entire probability distribution of outcomes that could occur under similar conditions.

To do this, we look at data points close to the situation we’re interested in; that is, those whose conditioning variables lie near our query point in feature space. Each point contributes to the estimate, with its influence weighted by similarity: points closer to the query have more influence, while more distant points count less. By aggregating these weighted contributions, we obtain a smooth, data-driven estimate of how the target variable behaves across different contexts.
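A minimal sketch of this similarity weighting, assuming Euclidean distance and a Gaussian kernel with one bandwidth shared by all features (both are illustrative choices, and `similarity_weights` is a hypothetical helper, not a library function):

```python
import numpy as np

def similarity_weights(X, query, bandwidth=1.0):
    """Normalized Gaussian-kernel weights of each row of X relative to `query`.

    Assumes Euclidean distance and one bandwidth shared by all features.
    """
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]                      # treat a 1-D array as n points with one feature
    q = np.atleast_1d(np.asarray(query, dtype=float))
    d2 = np.sum((X - q) ** 2, axis=1)       # squared Euclidean distances to the query
    # Subtracting the minimum avoids underflow; it cancels after normalization
    w = np.exp(-0.5 * (d2 - d2.min()) / bandwidth ** 2)
    return w / w.sum()                      # closer points get larger weights; sum is 1

# Three sepal-length-like values; the query sits next to the first one
X = np.array([5.0, 6.0, 7.5])
w = similarity_weights(X, query=5.1, bandwidth=0.5)
print(np.round(w, 3))
```

The bandwidth controls how quickly influence decays with distance: a small value behaves much like a k-NN neighborhood, while a large value averages over most of the data.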

This approach lets us go beyond point predictions to a richer understanding of uncertainty, variability, and structure in the data.

Continuous Target: Conditional Density Estimation

To make this concrete, let’s take two continuous variables from the Iris dataset: sepal length (x1) as the conditioning variable and petal length (y) as the target. For each value of x1, we look at nearby data points and form a density over their y-values by centering small, weighted kernels on them, with weights reflecting proximity in sepal length. The result is a smooth estimate of the conditional density p(y ∣ x1).
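Below is a minimal NumPy sketch of this conditional density estimate, assuming Gaussian kernels in both x1 and y with fixed, untuned bandwidths `hx` and `hy`. The function name and the synthetic stand-in data are hypothetical; this is not the code behind Figure 1.

```python
import numpy as np

def conditional_density(y_grid, x_query, x, y, hx=0.3, hy=0.3):
    """Kernel estimate of p(y | x = x_query), evaluated on y_grid.

    Each observation (x_i, y_i) contributes a Gaussian kernel of width hy
    centered on y_i, weighted by a Gaussian similarity in x of width hx.
    """
    x, y = np.asarray(x, float), np.asarray(y, float)
    y_grid = np.asarray(y_grid, float)
    # Similarity weights computed in the conditioning variable only
    d2 = (x - x_query) ** 2
    w = np.exp(-0.5 * (d2 - d2.min()) / hx ** 2)
    w /= w.sum()
    # Weighted mixture of normalized Gaussian kernels over the y-values
    z = (y_grid[:, None] - y[None, :]) / hy
    kernels = np.exp(-0.5 * z ** 2) / (hy * np.sqrt(2 * np.pi))
    return kernels @ w

# Hypothetical stand-in data with a roughly linear x1-y relationship
rng = np.random.default_rng(0)
x1 = rng.uniform(4.5, 8.0, 300)               # plays the role of sepal length
y = 1.5 * x1 - 5.0 + rng.normal(0, 0.3, 300)  # plays the role of petal length
y_grid = np.linspace(-5, 15, 4001)
dens = conditional_density(y_grid, 6.0, x1, y)
mode = y_grid[np.argmax(dens)]                # peak of p(y | x1 = 6.0)
```

With scikit-learn installed, `sklearn.datasets.load_iris().data[:, 0]` and `[:, 2]` give the actual sepal and petal lengths; evaluating `conditional_density` over a grid of x1 values yields a color map of the kind described next.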

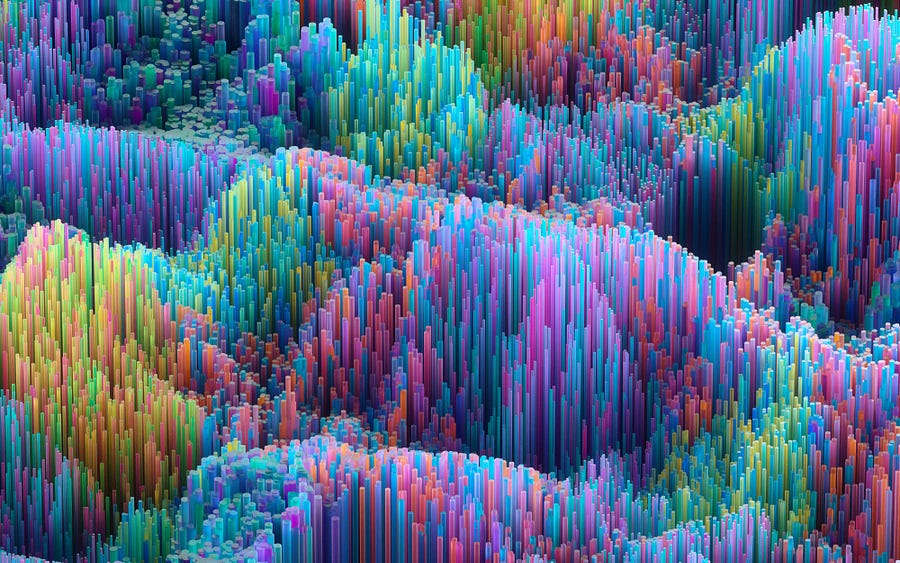

Figure 1 shows the resulting conditional distribution. At each value of x1, a vertical slice through the color map represents p(y ∣ x1). From this distribution we can compute statistics such as the mean or mode; we can also sample a random value, a key step for synthetic data generation. The figure also shows the mode regression curve, which passes through the peaks of these conditional distributions. Unlike a traditional least-squares fit, which targets the conditional mean, this curve comes directly from the local conditional distributions, naturally adapting to nonlinearity, skew, and even multimodal patterns.
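Here is a hedged sketch of how the mean, the mode, a random draw, and a mode regression curve can be read off a weighted Gaussian kernel estimate. It assumes Gaussian kernels in x and y; all helper names and the two-branch data are hypothetical. Sampling picks a data point with probability equal to its weight and then adds kernel noise, which draws exactly from the weighted kernel mixture.

```python
import numpy as np

def _weights(x, x_query, hx):
    """Normalized Gaussian similarity weights in the conditioning variable."""
    d2 = (np.asarray(x, float) - x_query) ** 2
    w = np.exp(-0.5 * (d2 - d2.min()) / hx ** 2)
    return w / w.sum()

def conditional_mean(x_query, x, y, hx=0.3):
    # The mean of a weighted Gaussian KDE is the weighted mean of the y_i
    return float(_weights(x, x_query, hx) @ np.asarray(y, float))

def conditional_sample(x_query, x, y, rng, hx=0.3, hy=0.3, size=1):
    # Exact draw from the weighted KDE: pick a point by weight, then add kernel noise
    w = _weights(x, x_query, hx)
    idx = rng.choice(len(w), size=size, p=w)
    return np.asarray(y, float)[idx] + rng.normal(0.0, hy, size)

def mode_regression(x_grid, x, y, y_grid, hx=0.3, hy=0.3):
    # For each x on the grid, return the y at which the estimated p(y | x) peaks
    z = (np.asarray(y_grid, float)[:, None] - np.asarray(y, float)[None, :]) / hy
    K = np.exp(-0.5 * z ** 2)  # kernel normalization does not change the argmax
    return np.array([y_grid[np.argmax(K @ _weights(x, xq, hx))] for xq in x_grid])

# Hypothetical two-branch data: about 70% of points near y = x, 30% near y = x + 4
rng = np.random.default_rng(1)
x = rng.uniform(0, 10, 1000)
upper = rng.random(1000) < 0.3
y = x + 4.0 * upper + rng.normal(0, 0.2, 1000)

y_grid = np.linspace(-2, 16, 1801)
mean_at_5 = conditional_mean(5.0, x, y)
mode_curve = mode_regression(np.array([2.0, 5.0, 8.0]), x, y, y_grid)
draws = conditional_sample(5.0, x, y, rng, size=2000)
```

Because most points lie on the lower branch, the mode at x = 5 stays on that branch, whereas the conditional mean lands between the two branches in a region with almost no data. The random draws, by contrast, reproduce both branches in roughly their observed proportions.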

What if we have more than one conditioning variable? For example, suppose we want to estimate p(y ∣ x1, x2).

Rather than treating (x1, x2) as a single joint input and applying a two-dimensional kernel, we can construct this distribution sequentially:

p(y ∣ x1, x2) ∝ p(y ∣ x2) p(x2 ∣ x1),

which effectively assumes that once x2 is known, y depends mainly on x2 rather than directly on x1. This step-by-step approach builds up the conditional structure gradually: dependencies among the predictors are modeled first, and these are then linked to the target.
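One way to see where the proportionality comes from, assuming y is conditionally independent of x1 given x2 (the simplifying assumption just stated):

$$
\begin{aligned}
p(y, x_2 \mid x_1) &= p(y \mid x_1, x_2)\, p(x_2 \mid x_1) && \text{(chain rule)} \\
&= p(y \mid x_2)\, p(x_2 \mid x_1) && (\text{if } y \perp x_1 \mid x_2) \\
p(y \mid x_1, x_2) &= \frac{p(y, x_2 \mid x_1)}{p(x_2 \mid x_1)} \propto p(y \mid x_2)\, p(x_2 \mid x_1)
\end{aligned}
$$

The proportionality is in y with x1 and x2 held fixed: the denominator p(x2 ∣ x1) does not depend on y, so it only affects the normalizing constant.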

Similarity weights are always computed in the subspace of the relevant conditioning variables. For example, if we were estimating p(x3 ∣ x1, x2), similarity would be determined using x1 and x2 only. This ensures that each conditional distribution adapts precisely to its chosen predictors.
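A minimal sketch of computing weights only in the conditioning subspace, here for p(x3 ∣ x1, x2) with the three variables stored as columns of one array. The helper names, the Gaussian kernel, the bandwidths, and the data are illustrative assumptions.

```python
import numpy as np

def subspace_weights(data, cond_cols, query, bandwidth=0.5):
    """Gaussian similarity weights computed on the conditioning columns only."""
    Z = np.asarray(data, float)[:, cond_cols]
    d2 = np.sum((Z - np.asarray(query, float)) ** 2, axis=1)
    w = np.exp(-0.5 * (d2 - d2.min()) / bandwidth ** 2)
    return w / w.sum()

def sample_conditional(data, target_col, cond_cols, query, rng, bandwidth=0.5, hy=0.2):
    """Draw one value of column `target_col` given the conditioning values in `query`."""
    w = subspace_weights(data, cond_cols, query, bandwidth)
    i = rng.choice(len(w), p=w)                  # neighbor chosen by similarity
    return float(data[i, target_col] + rng.normal(0.0, hy))

# Hypothetical data: x3 depends on both x1 and x2
rng = np.random.default_rng(2)
x1, x2 = rng.uniform(0, 4, 2000), rng.uniform(0, 4, 2000)
x3 = x1 + x2 + rng.normal(0, 0.2, 2000)
data = np.column_stack([x1, x2, x3])

# Estimate p(x3 | x1 = 1, x2 = 2): similarity uses columns 0 and 1 only
draws = [sample_conditional(data, 2, [0, 1], [1.0, 2.0], rng) for _ in range(1000)]
```

Because `subspace_weights` reads only the conditioning columns, the values in the target column never influence which neighbors are considered similar.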

Categorical Target: Conditional Class Probabilities

We can apply the same principle of conditional estimation when the target variable is categorical. For example, suppose we want to predict the species y of an Iris flower given its sepal length (x1) and petal length (x2). For each class y = c, we use sequential estimation to estimate the joint distribution p(x1, x2 ∣ y = c). These joint distributions are then combined using Bayes’ theorem to obtain the conditional probabilities p(y = c ∣ x1, x2), which can be used for classification or stochastic sampling.
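The sequential class-conditional estimate and the Bayes step can be sketched as follows, assuming Gaussian kernels with one shared bandwidth and class priors taken from class frequencies. The three synthetic clusters only loosely mimic the Iris species, and every name here is hypothetical.

```python
import numpy as np

def _gauss(u, h):
    """Normalized 1-D Gaussian kernel."""
    return np.exp(-0.5 * (u / h) ** 2) / (h * np.sqrt(2 * np.pi))

def class_conditional_density(q1, q2, x1, x2, h=0.3):
    """Sequential estimate p(x1, x2 | c) = p(x1 | c) * p(x2 | x1, c) for one class."""
    k1 = _gauss(q1 - x1, h)
    if k1.sum() == 0.0:                     # query is far from every point of this class
        return 0.0
    p_x1 = k1.mean()                        # 1-D KDE of p(x1 | c)
    w = k1 / k1.sum()                       # similarity weights in x1
    p_x2_given_x1 = w @ _gauss(q2 - x2, h)  # weighted KDE of p(x2 | x1, c)
    return p_x1 * p_x2_given_x1

def class_posterior(q1, q2, x1, x2, labels, h=0.3):
    """Bayes' theorem: p(c | x1, x2) is proportional to p(c) * p(x1, x2 | c)."""
    classes = np.unique(labels)
    scores = np.array([
        np.mean(labels == c)                # prior p(c) from class frequencies
        * class_conditional_density(q1, q2, x1[labels == c], x2[labels == c], h)
        for c in classes
    ])
    total = scores.sum()
    if total == 0.0:                        # no class has support here: fall back to uniform
        return classes, np.full(len(classes), 1.0 / len(classes))
    return classes, scores / total

# Hypothetical clusters loosely resembling three species (sepal length, petal length)
rng = np.random.default_rng(3)
centers = [(5.0, 1.5), (5.9, 4.3), (6.6, 5.5)]
x1 = np.concatenate([rng.normal(c1, 0.35, 50) for c1, _ in centers])
x2 = np.concatenate([rng.normal(c2, 0.35, 50) for _, c2 in centers])
labels = np.repeat([0, 1, 2], 50)

classes, post_a = class_posterior(5.0, 1.5, x1, x2, labels)   # deep inside class 0
classes, post_b = class_posterior(6.25, 4.9, x1, x2, labels)  # where classes 1 and 2 overlap
predicted = classes[np.argmax(post_b)]                        # deterministic classification
sampled = rng.choice(classes, p=post_b)                       # stochastic label draw
```

Near a cluster center the posterior is close to one-hot, while in the overlap region it is split between classes; that split is what makes the class boundaries soft rather than abrupt.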

Panels 1–3 of Figure 2 show the estimated joint distributions for each species. From these, we can classify by selecting the most probable species or generate random samples according to the estimated probabilities. The fourth panel displays the predicted class boundaries, which appear smooth rather than abrupt, reflecting uncertainty where species overlap.

Synthetic Data Generation

Nonparametric conditional distributions do more than support regression or classification. They also let us generate entirely new datasets that preserve the structure of the original data. In the sequential approach, we model each variable conditional on the ones that come before it, then draw values from these estimated conditional distributions to build synthetic records. Repeating this process gives us a full synthetic dataset that maintains the relationships among all the attributes.

The process works as follows:

- Start with one variable and sample from its marginal distribution.

- For each subsequent variable, estimate its conditional distribution given the variables already sampled.

- Draw a value from this conditional distribution.

- Repeat until all variables have been sampled, forming a complete synthetic record.
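The four steps above can be sketched as follows, assuming continuous columns, standardization so that a single bandwidth fits every column, Gaussian similarity weights, and a small Gaussian jitter around each selected value. `generate_synthetic` is a hypothetical name, and the variable ordering is simply the column order.

```python
import numpy as np

def generate_synthetic(data, n_samples, rng, bandwidth=0.3, jitter=0.1):
    """Sequentially sample synthetic records, one column at a time.

    Column 0 is drawn from its marginal; column j is drawn from an estimate of
    p(x_j | x_0, ..., x_{j-1}) whose similarity weights use only those earlier
    columns. Columns are standardized (assumes none is constant) so that one
    bandwidth suits all of them.
    """
    data = np.asarray(data, float)
    mu, sd = data.mean(axis=0), data.std(axis=0)
    Z = (data - mu) / sd
    n, d = Z.shape
    out = np.empty((n_samples, d))
    for s in range(n_samples):
        # Step 1: sample the first variable from its (kernel-smoothed) marginal
        out[s, 0] = Z[rng.integers(n), 0] + rng.normal(0.0, jitter)
        for j in range(1, d):
            # Step 2: similarity weights in the subspace of columns already sampled
            d2 = np.sum((Z[:, :j] - out[s, :j]) ** 2, axis=1)
            w = np.exp(-0.5 * (d2 - d2.min()) / bandwidth ** 2)
            # Step 3: draw x_j from the weighted kernel estimate
            i = rng.choice(n, p=w / w.sum())
            out[s, j] = Z[i, j] + rng.normal(0.0, jitter)
    # Step 4 is the loop over records; finally return to the original units
    return out * sd + mu

# Hypothetical correlated data with three continuous columns
rng = np.random.default_rng(4)
a = rng.normal(0, 1, 300)
b = 0.8 * a + 0.6 * rng.normal(0, 1, 300)
c = -b + 0.3 * rng.normal(0, 1, 300)
original = np.column_stack([a, b, c])
synthetic = generate_synthetic(original, 400, rng)
corr_orig = np.corrcoef(original, rowvar=False)
corr_syn = np.corrcoef(synthetic, rowvar=False)
```

Comparing `corr_orig` with `corr_syn` is a quick sanity check that the dependencies survive. Kernel smoothing tends to weaken correlations somewhat, more so as the bandwidth grows.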

Figure 3 shows the original (left) and synthetic (right) Iris datasets in the original measurement space. Only three of the four continuous attributes are displayed, to fit the 3D visualization. The synthetic dataset closely reproduces the patterns and relationships in the original, showing that nonparametric conditional distributions can effectively capture multivariate structure.

Although we’ve illustrated the approach with the small, low-dimensional Iris dataset, this nonparametric framework extends naturally to much larger and more complex datasets, including those with a mix of numerical and categorical variables. By estimating conditional distributions step by step, it captures rich relationships among many features, making it broadly useful across modern data science tasks.

Handling Mixed Attributes

So far, our examples have considered conditional estimation with continuous conditioning variables, even though the target may be either continuous or categorical. In these cases, Euclidean distance works well as a measure of similarity. In practice, however, we often need to condition on mixed attributes, which requires a suitable distance metric. For such datasets, measures like Gower distance can be used. With an appropriate similarity metric, the nonparametric framework applies seamlessly to heterogeneous data, retaining its ability to estimate conditional distributions and generate realistic synthetic samples.
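A simplified Gower distance between two records might look like this. It assumes the caller supplies each numeric attribute's range (typically computed from the training data) and omits the missing-value handling of Gower’s full definition; the function name and the example range are hypothetical.

```python
def gower_distance(a, b, is_categorical, ranges):
    """Simplified Gower distance between two records with mixed attribute types.

    Numeric attribute k contributes |a_k - b_k| / ranges[k]; a categorical
    attribute contributes 0 if the values match and 1 otherwise. The distance
    is the average contribution, so it lies in [0, 1] for in-range values.
    """
    total = 0.0
    for ak, bk, cat, rk in zip(a, b, is_categorical, ranges):
        if cat:
            total += 0.0 if ak == bk else 1.0
        elif rk:                              # skip numeric attributes with zero or missing range
            total += abs(ak - bk) / rk
    return total / len(a)

# Records of (sepal length, species); the range 3.6 is an assumed value
d = gower_distance((5.1, "setosa"), (6.3, "virginica"),
                   is_categorical=(False, True), ranges=(3.6, None))
```

Here the numeric part contributes 1.2 / 3.6 and the species mismatch contributes 1, giving an average of 2/3. A distance like this can stand in for Euclidean distance inside the similarity weights.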

Benefits of the Sequential Method

An alternative to sequential estimation is to model distributions jointly over all conditioning variables. This can be done using multidimensional kernels centered on the data points, or through a mixture model, for example representing the distribution with N Gaussians, where N is much smaller than the number of data points. While this works in low dimensions (it would work for the Iris dataset), it quickly becomes data-hungry and computationally expensive, and the data become sparse as the number of variables increases, especially when predictors include both numeric and categorical types. The sequential approach sidesteps these issues by modeling dependencies step by step and computing similarity only in the relevant subspace, improving efficiency, scalability, and interpretability.
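To make the sparsity point concrete, the sketch below counts what fraction of uniformly scattered points fall within a fixed radius of a query as the number of dimensions grows. It is a generic illustration of the curse of dimensionality, not a benchmark of the sequential method; the radius, sample size, and function name are arbitrary choices.

```python
import numpy as np

def neighbor_fraction(dim, radius=0.25, n=20000, seed=0):
    """Fraction of uniform points in [0, 1]^dim lying within `radius` of the cube's center."""
    rng = np.random.default_rng(seed)
    pts = rng.random((n, dim))
    dist = np.linalg.norm(pts - 0.5, axis=1)   # Euclidean distance to the center query
    return float(np.mean(dist < radius))

fractions = {dim: neighbor_fraction(dim) for dim in (1, 2, 4, 8)}
for dim, frac in fractions.items():
    print(f"{dim} dimensions: {frac:.4f} of points are neighbors")
```

The fraction drops from about one half in one dimension to essentially zero by eight dimensions, so a joint kernel with a fixed bandwidth finds very few neighbors unless the dataset grows rapidly.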

Conclusion

Nonparametric methods are flexible, interpretable, and efficient, making them ideal for estimating conditional distributions and generating synthetic data. By focusing on local neighborhoods in the conditioning space, they capture complex dependencies directly from the data without relying on strict parametric assumptions. You can also bring in domain knowledge in subtle ways, such as adjusting similarity measures or weighting schemes to emphasize important features or known relationships. This keeps the model primarily data-driven while guided by prior insights, producing more realistic results.
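As a hedged illustration of one such adjustment, the sketch below scales each feature’s contribution to the squared distance by a weight that could come from domain knowledge. The function name, the toy records, and the weight values are hypothetical.

```python
import numpy as np

def weighted_similarity(X, query, feature_weights, bandwidth=1.0):
    """Gaussian similarity with per-feature weights in the squared distance.

    A larger feature_weights[k] makes differences in feature k count more,
    a simple way to encode which features domain knowledge says matter.
    """
    X = np.asarray(X, float)
    diff2 = (X - np.asarray(query, float)) ** 2
    d2 = diff2 @ np.asarray(feature_weights, float)   # weighted squared distance per row
    w = np.exp(-0.5 * (d2 - d2.min()) / bandwidth ** 2)
    return w / w.sum()

X = np.array([[0.0, 1.0],    # matches the query on feature 0 only
              [1.0, 0.0]])   # matches the query on feature 1 only
query = [0.0, 0.0]
equal = weighted_similarity(X, query, [1.0, 1.0])
emphasize_f0 = weighted_similarity(X, query, [5.0, 1.0])
```

With equal weights both records are equally similar to the query; emphasizing feature 0 shifts most of the weight to the record that agrees on that feature.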

💡 Interested in seeing these ideas in action? I’ll be sharing a short LinkedIn post in the coming days with key examples and insights. Connect with me here: https://www.linkedin.com/in/andrew-skabar/