typically use Mean Reciprocal Rank (MRR) and Mean Average Precision (MAP) to evaluate the quality of their rankings. In this post, we’ll discuss why MAP and MRR are poorly aligned with modern user behavior in search ranking. We then look at two metrics that serve as better alternatives to MRR and MAP.

What are MRR and MAP?

Mean Reciprocal Rank (MRR)

Mean Reciprocal Rank (MRR) is the mean, over all queries, of the reciprocal of the rank at which the first relevant item occurs. For a single query, the reciprocal rank is

$$\mathrm{RR} = \frac{1}{\text{rank of first relevant item}}$$

In e-commerce, the first relevant rank can be the rank of the first item clicked in response to a query.

As an example, assume the first relevant item is the second item in the list. This means:

$$\text{Reciprocal Rank} = \frac{1}{2}$$

Reciprocal rank is calculated for all the queries in the evaluation set. To get a single metric for all the queries, we take the mean of the reciprocal ranks to get the Mean Reciprocal Rank:

$$\text{Mean Reciprocal Rank} = \frac{1}{N}\sum_{i=1}^{N} \frac{1}{\text{rank of first relevant item for query } i}$$

where \(N\) is the number of queries. From this definition, we can see that MRR focuses on getting one relevant result early. It doesn’t measure what happens after the first relevant result.
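
The definition above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names and the three sample queries are hypothetical, and scoring a query with no relevant result as 0 is an assumed convention that the text does not specify.

```python
from typing import Sequence


def reciprocal_rank(relevance: Sequence[int]) -> float:
    """Return 1 / rank of the first relevant item, or 0.0 if none is relevant."""
    for rank, rel in enumerate(relevance, start=1):
        if rel > 0:
            return 1.0 / rank
    return 0.0  # assumed convention for queries with no relevant result


def mean_reciprocal_rank(queries: Sequence[Sequence[int]]) -> float:
    """Average the reciprocal rank over all queries."""
    return sum(reciprocal_rank(q) for q in queries) / len(queries)


# Three hypothetical queries: first relevant item at rank 2, rank 1, and rank 4.
queries = [[0, 1, 0], [1, 0, 0], [0, 0, 0, 1]]
mrr = mean_reciprocal_rank(queries)
print(round(mrr, 3))  # (1/2 + 1 + 1/4) / 3 = 0.583
```

Note that nothing after the first relevant position influences the result, which is exactly the limitation discussed later.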

Mean Average Precision (MAP)

Mean Average Precision (MAP) measures how well the system retrieves relevant items and how early they’re shown. We begin by calculating the Average Precision (AP) for each query. We define AP as

$$\mathrm{AP} = \frac{1}{|R|}\sum_{k=1}^{K}\mathrm{Precision@}k \cdot \mathbf{1}[\text{item at } k \text{ is relevant}]$$

where \(|R|\) is the number of relevant items for the query and \(K\) is the cutoff, i.e. the number of ranked results considered.

\(\mathrm{MAP}\) is the mean of \(\mathrm{AP}\) across queries.

The above equation looks like a lot, but it’s actually simple. Let’s use an example to break it down. Assume a query has 3 relevant items, and our model predicts the following order:

Rank: 1 2 3 4 5

Item: R N R N R (R = relevant, N = not relevant)

To compute the AP, we compute the precision at each relevant position:

- @1: Precision = 1/1 = 1.0

- @3: Precision = 2/3 ≈ 0.667

- @5: Precision = 3/5 = 0.6

$$\mathrm{AP} = \frac{1}{3}(1.0 + 0.667 + 0.6) \approx 0.756$$

We calculate the above for all the queries and average them to get the MAP. The AP formula has two important components:

- Precision@k: Since we use precision, retrieving relevant items earlier yields higher precision values. If the model ranks relevant items later, Precision@k drops because the same number of hits is divided by a larger k

- Averaging the precisions: We average the precisions over the total number of relevant items. If the system never retrieves an item or retrieves it beyond the cutoff, the item contributes nothing to the numerator while still counting in the denominator, which reduces AP and MAP.
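
The worked example and the two components above can be checked with a short Python sketch. The function names and the `num_relevant` parameter are illustrative assumptions; `num_relevant` stands in for \(|R|\) when some relevant items were never retrieved.

```python
from typing import Optional, Sequence


def average_precision(relevance: Sequence[int], num_relevant: Optional[int] = None) -> float:
    """AP for one query from binary labels listed in ranked order.

    num_relevant is |R|; pass it explicitly when some relevant items were
    never retrieved, otherwise it defaults to the relevant items in the list.
    """
    total_relevant = sum(relevance) if num_relevant is None else num_relevant
    if total_relevant == 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for k, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            precision_sum += hits / k  # Precision@k at a relevant position
    return precision_sum / total_relevant


def mean_average_precision(queries: Sequence[Sequence[int]]) -> float:
    """MAP: the mean of AP across queries."""
    return sum(average_precision(q) for q in queries) / len(queries)


example = [1, 0, 1, 0, 1]  # R N R N R
ap = average_precision(example)
print(round(ap, 3))  # 0.756

# Same list, but a fourth relevant item was never retrieved: |R| = 4.
ap_missing = average_precision(example, num_relevant=4)
print(round(ap_missing, 3))  # 0.567
```

The second call shows the denominator effect: the missing item adds nothing to the sum but still counts in \(|R|\), so AP falls from 0.756 to 0.567.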

Why MAP and MRR are Bad for Search Ranking

Now that we’ve covered the definitions, let’s understand why MAP and MRR are not well suited to ranking search results.

Relevance is Graded, not Binary

When we compute MRR, we take the rank of the first relevant item. MRR treats all relevant items the same: it makes no difference which relevant item shows up first. In reality, different items tend to have different degrees of relevance.

Similarly, MAP uses binary relevance: we simply look for the next relevant item. Again, MAP makes no distinction between the relevance scores of the items. In real cases, relevance is graded, not binary.

Item : 1 2 3

Relevance: 3 1 0

MAP and MRR both ignore how good the relevant item is. They fail to quantify the relevance.
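
To make this concrete, here is a small Python sketch that scores the graded labels [3, 1, 0] and a hypothetical reordering [1, 3, 0] with binary metrics. The helper names are illustrative, and treating any label above 0 as relevant is an assumed binarization rule.

```python
from typing import List, Sequence


def binarize(labels: Sequence[int]) -> List[int]:
    """Collapse graded labels to binary: any label above 0 counts as relevant."""
    return [1 if label > 0 else 0 for label in labels]


def reciprocal_rank(binary: Sequence[int]) -> float:
    """1 / rank of the first relevant item, or 0.0 if there is none."""
    for rank, rel in enumerate(binary, start=1):
        if rel:
            return 1.0 / rank
    return 0.0


def average_precision(binary: Sequence[int]) -> float:
    """AP over the relevant items present in the list."""
    hits, precision_sum = 0, 0.0
    for k, rel in enumerate(binary, start=1):
        if rel:
            hits += 1
            precision_sum += hits / k
    return precision_sum / hits if hits else 0.0


best_first = [3, 1, 0]   # graded labels from the example
best_second = [1, 3, 0]  # hypothetical reordering: the weaker item is shown first

for labels in (best_first, best_second):
    b = binarize(labels)
    print(labels, reciprocal_rank(b), average_precision(b))
# Both orderings print RR = 1.0 and AP = 1.0
```

Once the labels are binarized, the two orderings are indistinguishable, even though one of them shows the weaker item first.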

Users Scan Multiple Results

This is more specific to MRR. In the MRR computation, we record the rank of the first relevant item and ignore everything after it. That can be fine for lookups, question answering, and similar tasks, but it is a poor fit for recommendations, product search, and the like.

During search, users don’t stop at the first relevant result (except in cases where there is only one correct response). Users scan multiple results, all of which contribute to overall search relevance.

MAP overemphasizes recall

MAP computes

$$\mathrm{AP} = \frac{1}{|R|}\sum_{k=1}^{K}\mathrm{Precision@}k \cdot \mathbf{1}[\text{item at } k \text{ is relevant}]$$

As a consequence, every relevant item contributes to the score, and missing any relevant item hurts it. When users search, they are not interested in finding all the relevant items. They are interested in finding the best few options. Optimizing for MAP pushes the model to learn the long tail of relevant items, even when their relevance contribution is low and users never scroll that far. Hence, MAP overemphasizes recall.

MAP Decays Linearly

Consider the example below. We place a single relevant item at three different positions and compute the AP.

| Rank | Precision@k | AP |
|---|---|---|
| 1 | 1/1 = 1.0 | 1.0 |
| 3 | 1/3 ≈ 0.33 | 0.33 |
| 30 | 1/30 ≈ 0.033 | 0.033 |

AP at rank 30 is worse than at rank 3, which is worse than at rank 1. With a single relevant item, AP equals 1/rank, so the score shrinks in proportion to the rank: rank 30 scores exactly 10x lower than rank 3. In reality, rank 3 vs rank 30 is more than a 10x difference. It’s more like seen vs not seen.

MAP is position-sensitive, but only weakly. Its position sensitivity comes from Precision@k, whose decay with rank is proportional. This doesn’t reflect how user behavior changes with position, and in particular how sharply user attention drops in search results.
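
The table can be reproduced with a tiny Python sketch. The function name is hypothetical, and it covers only the special case used here: a query with exactly one relevant item.

```python
def ap_single_relevant(rank: int) -> float:
    """AP when the only relevant item sits at `rank`: Precision@rank = 1 / rank."""
    return 1.0 / rank


for rank in (1, 3, 30):
    print(rank, round(ap_single_relevant(rank), 3))

# The score ratio equals the rank ratio: 30 / 3 = 10
ratio = ap_single_relevant(3) / ap_single_relevant(30)
print(round(ratio, 6))  # 10.0
```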

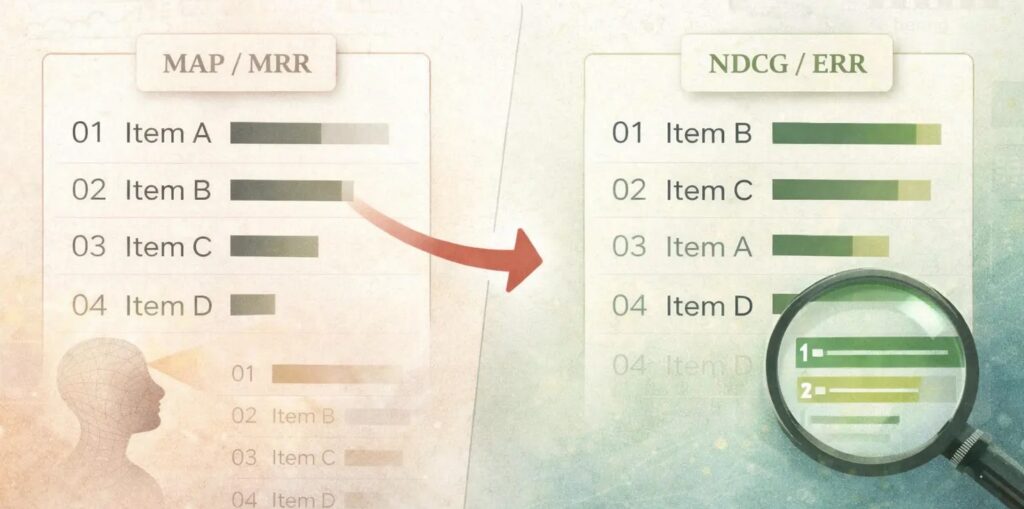

NDCG and ERR are Better Choices

For search results ranking, NDCG and ERR are better choices. They fix the issues that MRR and MAP suffer from.

Expected Reciprocal Rank (ERR)

Expected Reciprocal Rank (ERR) assumes a cascade user model in which a user does the following:

- Scans the list from top to bottom

- At each rank \(i\):

  - With probability \(R_i\), the user is satisfied and stops

  - With probability \(1 - R_i\), the user continues looking ahead

ERR computes the expected reciprocal rank of the stopping position where the user is satisfied:

$$\mathrm{ERR} = \sum_{r=1}^{n} \frac{1}{r} \cdot R_r \cdot \prod_{i=1}^{r-1}(1-R_i)$$

where \(R_i = \frac{2^{l_i}-1}{2^{l_m}}\), \(l_i\) is the relevance label of the item at rank \(i\), and \(l_m\) is the maximum possible label value.

Let’s understand how ERR is different from MRR.

- ERR uses \(R_i = \frac{2^{l_i}-1}{2^{l_m}}\), which is graded relevance, so a result can partially satisfy a user

- ERR allows multiple relevant items to contribute. Early high-quality items reduce the contribution of later items

Example 1

We’ll take a toy example to understand how ERR and MRR differ. The labels are on a 0-3 scale, so \(l_m = 3\).

Rank : 1 2 3

Relevance: 2 3 0

- MRR = 1 (a relevant item is in first place)

- ERR ≈ 0.648:

  - \(R_1 = \frac{2^2 - 1}{2^3} = \frac{3}{8}\)

  - \(R_2 = \frac{2^3 - 1}{2^3} = \frac{7}{8}\)

  - \(R_3 = \frac{2^0 - 1}{2^3} = 0\)

  - \(\mathrm{ERR} = \frac{1}{1} \cdot R_1 + \frac{1}{2} \cdot R_2 (1 - R_1) + \frac{1}{3} \cdot R_3 (1 - R_1)(1 - R_2) \approx 0.648\)

- MRR says the ranking is perfect. ERR says it is not, because a higher-relevance item appears later
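
The cascade formula can be sketched in Python and checked against the toy list [2, 3, 0]. The function name and the `max_label` parameter (standing in for \(l_m\)) are illustrative assumptions. The running variable `p_reach` carries the product term, i.e. the probability that no earlier result satisfied the user.

```python
from typing import Sequence


def expected_reciprocal_rank(labels: Sequence[int], max_label: int) -> float:
    """ERR under the cascade model for graded labels listed in ranked order."""
    err = 0.0
    p_reach = 1.0  # probability that the user reaches the current rank
    for rank, label in enumerate(labels, start=1):
        p_satisfied = (2 ** label - 1) / (2 ** max_label)  # R_i
        err += p_reach * p_satisfied / rank
        p_reach *= 1.0 - p_satisfied  # user was not satisfied, keeps scanning
    return err


err = expected_reciprocal_rank([2, 3, 0], max_label=3)
print(round(err, 3))  # 0.648

# Putting the more relevant item first raises ERR, while the reciprocal rank stays at 1.
err_swapped = expected_reciprocal_rank([3, 2, 0], max_label=3)
print(round(err_swapped, 3))  # 0.898
```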

Example 2

Let’s take another example to see how a change in ranking affects the ERR contribution of an item. We’ll place a highly relevant item at different positions in a list and compute the ERR contribution for that item. Consider the cases below, with labels on a 0-8 scale (\(l_m = 8\)):

- Ranking 1: \([8, 4, 4, 4, 4]\)

- Ranking 2: \([4, 4, 4, 4, 8]\)

Let’s compute the satisfaction probability \(R(l) = \frac{2^l - 1}{2^8}\) for each label:

| Relevance l | 2^l − 1 | R(l) |
|---|---|---|
| 4 | 15 | 0.0586 |
| 8 | 255 | 0.9961 |

Using this to compute the full ERR for both rankings, we get:

- Ranking 1: ERR ≈ 0.996

- Ranking 2: ERR ≈ 0.27

If we look specifically at the contribution of the item with the relevance score of 8, we see that it drops by 6.37x. At rank 5 its term is \(\frac{1}{5} \cdot 0.9961 \cdot (1 - 0.0586)^4 \approx 0.1565\), because each of the four items above it had a chance to satisfy the user first. If the penalty came only from the \(1/r\) position factor, the drop would be 5x.

| Scenario | Contribution of relevance-8 item |
|---|---|
| Rank 1 | 0.9961 |
| Rank 5 | 0.1565 |
| Drop factor | 6.37x |
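
The per-item contributions can be reproduced with a short Python sketch that returns each rank's term of the ERR sum instead of only the total. The function name is hypothetical, and `max_label=8` is the label ceiling implied by the \(R(l)\) values in the table.

```python
from typing import List, Sequence


def err_contributions(labels: Sequence[int], max_label: int) -> List[float]:
    """Per-rank ERR terms: (1/r) * R_r * prod_{i<r} (1 - R_i)."""
    terms = []
    p_reach = 1.0  # probability that no earlier item satisfied the user
    for rank, label in enumerate(labels, start=1):
        p_satisfied = (2 ** label - 1) / (2 ** max_label)
        terms.append(p_reach * p_satisfied / rank)
        p_reach *= 1.0 - p_satisfied
    return terms


ranking_1 = err_contributions([8, 4, 4, 4, 4], max_label=8)
ranking_2 = err_contributions([4, 4, 4, 4, 8], max_label=8)

# Full ERR of each ranking is the sum of its terms.
print(round(sum(ranking_1), 3), round(sum(ranking_2), 3))

# Contribution of the relevance-8 item at rank 1 vs rank 5.
top_first, top_last = ranking_1[0], ranking_2[4]
print(round(top_first, 4), round(top_last, 4))  # 0.9961 0.1565

# Drop factor: larger than the 5x that the 1/r factor alone would give.
print(round(top_first / top_last, 2))
```

The extra penalty beyond 5x comes entirely from `p_reach`: by rank 5, some users have already been satisfied by the four items ranked above.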

Normalized Discounted Cumulative Gain (NDCG)

Normalized Discounted Cumulative Gain (NDCG) is another great choice that is well suited to ranking search results. NDCG is built on two main ideas:

- Gain: Items with higher relevance scores are worth much more

- Discount: Items appearing later are worth much less, since users pay less attention to later items

NDCG combines the ideas of gain and discount to create a score. It also normalizes the score to allow comparison between different queries. Formally, gain and discount are defined as

- \(\mathrm{Gain} = 2^{l_r}-1\)

- \(\mathrm{Discount} = \log_2(1+r)\)

where \(l_r\) is the relevance label of the item at position \(r\).

Gain has an exponential form, which rewards higher relevance. This ensures that items with a higher relevance score contribute much more. The logarithmic discount penalizes relevant items that are ranked later. Combining the two and summing over the ranked list, we get the Discounted Cumulative Gain:

$$\mathrm{DCG@K} = \sum_{r=1}^{K} \frac{2^{l_r}-1}{\log_2(1+r)}$$

for a given ranked list \(l_1, l_2, l_3, \ldots, l_K\). DCG@K is useful, but the relevance labels can vary in scale across queries, which makes comparing raw DCG@K values unfair. So we need a way to normalize the DCG@K values.

We do that by computing IDCG@K, the ideal discounted cumulative gain. IDCG is the maximum possible DCG for the same items, obtained by sorting them by relevance in descending order:

$$\mathrm{IDCG@K} = \sum_{r=1}^{K} \frac{2^{l^*_r}-1}{\log_2(1+r)}$$

where \(l^*_r\) is the label at position \(r\) after sorting. IDCG represents a perfect ranking. To normalize DCG@K, we compute

$$\mathrm{NDCG@K} = \frac{\mathrm{DCG@K}}{\mathrm{IDCG@K}}$$

NDCG@K has the following properties:

- Bounded between 0 and 1

- Comparable throughout queries

- 1 is an ideal ordering

Example: Perfect vs Slightly Worse Ordering

In this example, we’ll take two different rankings of the same list and compare their NDCG values. Assume we have items with relevance labels on a 0-3 scale.

Ranking A

Rank : 1 2 3

Relevance: 3 2 1

Ranking B

Rank : 1 2 3

Relevance: 2 3 1

Computing the NDCG components, we get:

| Rank | Discount log₂(1 + r) | A gain (2^l − 1) | A contrib | B gain (2^l − 1) | B contrib |
|---|---|---|---|---|---|
| 1 | 1.00 | 7 | 7.00 | 3 | 3.00 |
| 2 | 1.58 | 3 | 1.89 | 7 | 4.42 |
| 3 | 2.00 | 1 | 0.50 | 1 | 0.50 |

- DCG(A) = 9.39

- DCG(B) = 7.92

- IDCG = 9.39

- NDCG(A) = 9.39 / 9.39 = 1.0

- NDCG(B) = 7.92 / 9.39 = 0.84

Thus, moving the most relevant item away from rank 1 causes a big drop: NDCG falls from 1.0 to 0.84.
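
The DCG, IDCG, and NDCG formulas can be sketched in Python and checked against this example. The function names are illustrative. Ranking B is taken as the label order [2, 3, 1], an assumption based on the contributions in the table (3.00, 4.42, 0.50), which correspond to that order.

```python
import math
from typing import Optional, Sequence


def dcg_at_k(labels: Sequence[int], k: Optional[int] = None) -> float:
    """DCG@K with exponential gain (2^l - 1) and log2(1 + r) discount."""
    top = labels if k is None else labels[:k]
    return sum((2 ** label - 1) / math.log2(1 + rank)
               for rank, label in enumerate(top, start=1))


def ndcg_at_k(labels: Sequence[int], k: Optional[int] = None) -> float:
    """NDCG@K: DCG of the given order divided by DCG of the ideal order."""
    ideal = sorted(labels, reverse=True)
    idcg = dcg_at_k(ideal, k)
    return dcg_at_k(labels, k) / idcg if idcg > 0 else 0.0


ranking_a = [3, 2, 1]
ranking_b = [2, 3, 1]  # assumed order for Ranking B (matches the contribution table)

print(round(dcg_at_k(ranking_a), 2), round(ndcg_at_k(ranking_a), 2))  # 9.39 1.0
print(round(dcg_at_k(ranking_b), 2), round(ndcg_at_k(ranking_b), 2))  # 7.92 0.84
```

Returning 0.0 when IDCG is zero (a query with no relevant items) is one possible convention; some evaluation setups skip such queries instead.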

NDCG: Additional Discussion

The discount that forms the denominator of the DCG computation is logarithmic in nature. It increases much more slowly than linearly, so the weight given to each position decays slowly:

$$\mathrm{discount}(r)=\frac{1}{\log_2(1+r)}$$

Let’s see how this compares with the linear decay:

| Rank (r) | Linear (1/r) | Logarithmic (1 / log₂(1 + r)) |
|---|---|---|
| 1 | 1.00 | 1.00 |
| 2 | 0.50 | 0.63 |
| 5 | 0.20 | 0.39 |
| 10 | 0.10 | 0.29 |
| 50 | 0.02 | 0.18 |

- \(1/r\) decays faster

- \(1/\log_2(1+r)\) decays slower
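
The comparison table can be regenerated with a few lines of Python. The helper names are hypothetical, and `linear_discount` is simply the \(1/r\) weight that this post refers to as linear decay.

```python
import math


def linear_discount(r: int) -> float:
    """The 1/r position weight (called linear decay in this post)."""
    return 1.0 / r


def log_discount(r: int) -> float:
    """The NDCG position weight 1 / log2(1 + r)."""
    return 1.0 / math.log2(1 + r)


for r in (1, 2, 5, 10, 50):
    print(f"{r:>4} {linear_discount(r):.2f} {log_discount(r):.2f}")

# Weight left at rank 50 relative to rank 1 under each discount.
linear_50 = linear_discount(50)
log_50 = log_discount(50)
print(round(linear_50, 2), round(log_50, 2))  # 0.02 0.18
```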

A logarithmic discount penalizes later ranks less aggressively than a linear discount. The difference between ranks 1 and 2 is large, but the difference between ranks 10 and 50 is small.

Because the logarithm is concave, each further step down the list adds a smaller penalty than the one before. This prevents NDCG from becoming a top-heavy metric where ranks 1-3 dominate the score. A linear penalty would all but ignore reasonable choices lower down.

A logarithmic discount also reflects the fact that user attention drops sharply at the top of the list and then flattens out instead of decreasing linearly with rank.

Conclusion

MAP and MRR are useful information retrieval metrics, but they are poorly suited to modern search ranking systems. While MAP focuses on finding all the relevant documents, MRR reduces a ranking problem to a single position. Both ignore the graded relevance of items in a search and treat them as binary: relevant or not relevant.

NDCG and ERR better reflect real user behavior by accounting for multiple positions and allowing items to have non-binary scores, while giving higher importance to top positions. For search ranking systems, these position-sensitive metrics are not just a better choice; they are necessary.

Additional Studying

- LambdaMART (good rationalization)

- Learning To Rank (extremely advocate studying this. It’s lengthy and thorough, and likewise the inspiration for this text!)