of this series [1], [2], and [3], we have observed:

- interpretation of multiplication of a matrix by a vector,

- the physical meaning of matrix-matrix multiplication,

- the behavior of several special-type matrices, and

- visualization of matrix transpose.

In this story, I want to share my perspective on what lies beneath matrix inversion, why different formulas related to inversion are the way they are, and finally, why calculating the inverse can be done much more easily for matrices of several special types.

Here are the definitions that I use throughout the stories of this series:

- Matrices are denoted with uppercase letters (like ‘A’, ‘B’), while vectors and scalars are denoted with lowercase letters (like ‘x’, ‘y’ or ‘m’, ‘n’).

- |x| – is the length of vector ‘x’,

- Aᵀ – is the transpose of matrix ‘A’,

- B⁻¹ – is the inverse of matrix ‘B’.

Definition of the inverse matrix

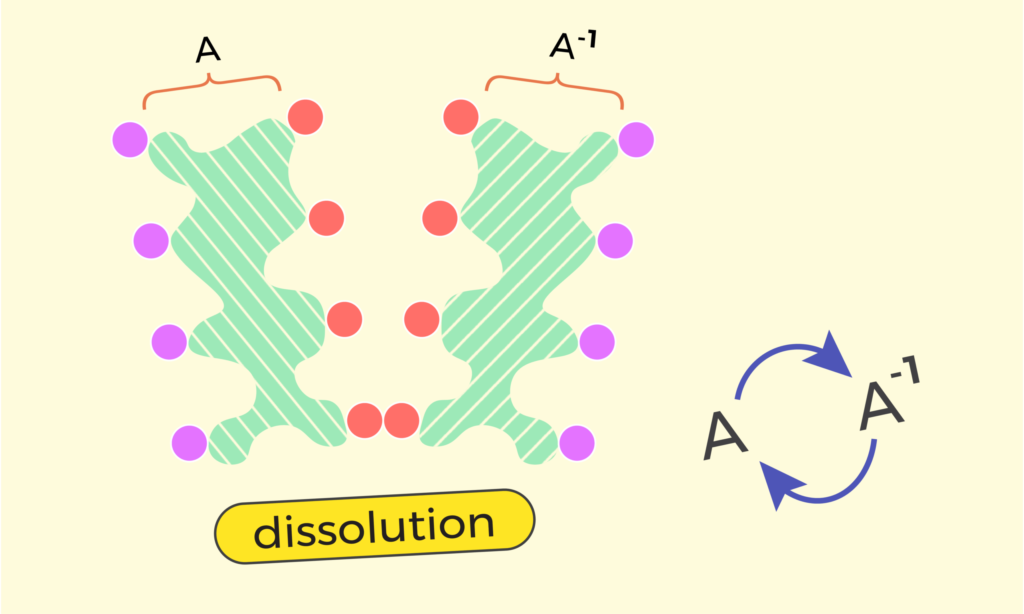

From part 1 of this series – “matrix-vector multiplication” [1], we remember that a certain matrix “A”, when multiplied by a vector ‘x’ as “y = Ax”, can be treated as a transformation of the input vector ‘x’ into the output vector ‘y’. If so, then the inverse matrix A⁻¹ should do the reverse transformation – it should transform vector ‘y’ back to ‘x’:

\[\begin{equation*}
x = A^{-1}y
\end{equation*}\]

Substituting “y = Ax” there will give us:

\[\begin{equation*}
x = A^{-1}y = A^{-1}(Ax) = (A^{-1}A)x
\end{equation*}\]

which implies that the product of the original matrix and its inverse, A⁻¹A, should be a matrix that does no transformation to any input vector ‘x’. In other words:

\[\begin{equation*}
(A^{-1}A) = E
\end{equation*}\]

where “E” is the identity matrix.

The first question that may arise here is: is it always possible to reverse the impact of a certain matrix “A”? The answer is that it is possible only if no 2 different input vectors x₁ and x₂ are transformed by “A” into the same output vector ‘y’. In other words, the inverse matrix A⁻¹ exists only if, for any output vector ‘y’, there exists exactly one input vector ‘x’ which is transformed by “A” into it:

\[\begin{equation*}
y = Ax
\end{equation*}\]
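As a small illustration of this condition, consider a 2×2 matrix whose second row is twice its first row. This is a sketch with a matrix I picked for the purpose; it is not one from the series. The helper `mat_vec` is a hypothetical name for plain matrix-vector multiplication written in pure Python:

```python
def mat_vec(A, x):
    """Multiply matrix A (given as a list of rows) by vector x: y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


# Illustrative matrix: its second row is twice its first row.
A = [[1, 2],
     [2, 4]]

x1 = [2, 0]
x2 = [0, 1]

print(mat_vec(A, x1))  # [2, 4]
print(mat_vec(A, x2))  # [2, 4]: a different input, the same output
```

Both inputs produce y = (2, 4). From ‘y’ alone there is no way to tell which ‘x’ it came from, so no inverse A⁻¹ can exist for this matrix. Consistently, its determinant 1·4 - 2·2 equals zero.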

In this series, I don’t want to dive too deeply into the formal part of definitions and proofs. Instead, I want to observe several cases where it is actually possible to invert the given matrix “A”, and we will see how the inverse matrix A⁻¹ is calculated for each of those cases.

Inverting chains of matrices

An important formula related to the matrix inverse is:

\[\begin{equation*}
(AB)^{-1} = B^{-1}A^{-1}
\end{equation*}\]

which states that the inverse of a product of matrices is equal to the product of the inverse matrices, but in reverse order. Let’s understand why the order of the matrices is reversed.

What is the physical meaning of the inverse (AB)⁻¹? It should be a matrix that undoes the impact of the matrix (AB). So if:

\[\begin{equation*}
y = (AB)x,
\end{equation*}\]

then we should have:

\[\begin{equation*}
x = (AB)^{-1}y.
\end{equation*}\]

Now the transformation “y = (AB)x” happens in 2 steps. First, we do:

\[\begin{equation*}
Bx = t,
\end{equation*}\]

which gives an intermediate vector ‘t’, and then that ‘t’ is multiplied by “A”:

\[\begin{equation*}
y = At = A(Bx).
\end{equation*}\]

So the matrix “A” influenced the vector after it was already influenced by “B”. In this case, to undo such a sequential impact, we should first undo the impact of “A” by multiplying A⁻¹ over ‘y’, which will give us:

\[\begin{equation*}
A^{-1}y = A^{-1}(ABx) = (A^{-1}A)Bx = EBx = Bx = t,
\end{equation*}\]

which is exactly the intermediate vector ‘t’ produced a bit above.

Note that the vector ‘t’ participates here twice.

Then, after getting back the intermediate vector ‘t’, to restore ‘x’ we should also reverse the impact of matrix “B”. That is done by multiplying B⁻¹ over ‘t’:

\[\begin{equation*}
B^{-1}t = B^{-1}(Bx) = (B^{-1}B)x = Ex = x,
\end{equation*}\]

or, writing it all out in an expanded way:

\[\begin{equation*}
x = B^{-1}(A^{-1}A)Bx = (B^{-1}A^{-1})(AB)x,
\end{equation*}\]

which explicitly shows that to undo the impact of the matrix (AB) we should use (B⁻¹A⁻¹).

Note that both vectors ‘x’ and ‘t’ participate here twice.

This is why, in the inverse of a product of matrices, their order is reversed:

\[\begin{equation*}
(AB)^{-1} = B^{-1}A^{-1}
\end{equation*}\]

The same principle applies when we have more matrices in a chain, like:

\[\begin{equation*}
(ABC)^{-1} = C^{-1}B^{-1}A^{-1}
\end{equation*}\]
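The reversed order can also be checked numerically. Below is a minimal pure-Python sketch with two arbitrarily chosen, non-commuting 2×2 matrices. `mat_mul` and `inv_2x2` are hypothetical helpers written only for this illustration, and `Fraction` from the standard library keeps the arithmetic exact:

```python
from fractions import Fraction


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def inv_2x2(M):
    """Inverse of a 2x2 matrix via the adjugate formula (assumes det != 0)."""
    (a, b), (c, d) = M
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det],
            [-c / det, a / det]]


A = [[1, 2],
     [0, 1]]
B = [[2, 0],
     [1, 1]]

AB = mat_mul(A, B)
lhs = inv_2x2(AB)                          # (AB)^-1
rhs = mat_mul(inv_2x2(B), inv_2x2(A))      # B^-1 A^-1, reversed order
wrong = mat_mul(inv_2x2(A), inv_2x2(B))    # A^-1 B^-1, original order

print(lhs == rhs)    # True
print(lhs == wrong)  # False
```

Multiplying the inverses in the original order (A⁻¹B⁻¹) gives a different matrix here, because these particular A and B do not commute.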

Inversion of several special matrices

Now, with the notion of what lies beneath matrix inversion, let’s see how matrices of several special types are inverted.

Inverse of a cyclic-shift matrix

A cyclic-shift matrix is a matrix “V” which, when multiplied by an input vector ‘x’, produces an output vector “y = Vx” where all values of ‘x’ are cyclically shifted by some ‘k’ positions. To achieve that, the cyclic-shift matrix “V” has 2 lines of ‘1’s, which lie parallel to its main diagonal, while all its other cells are ‘0’s.

\[\begin{equation*}
\begin{pmatrix}
y_1 \\ y_2 \\ y_3 \\ y_4 \\ y_5
\end{pmatrix}
= y = Vx =
\begin{bmatrix}
0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 1 \\
1 & 0 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 & 0
\end{bmatrix}
*
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
=
\begin{pmatrix}
x_3 \\ x_4 \\ x_5 \\ x_1 \\ x_2
\end{pmatrix}
\end{equation*}\]

Now, how should we undo the transformation of the cyclic-shift matrix “V”? Obviously, we should apply another cyclic-shift matrix V⁻¹, which now cyclically shifts all the values of ‘y’ downwards by ‘k’ positions (remember, “V” was shifting all the values of ‘x’ upwards).

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= x = V^{-1}Vx =
\begin{bmatrix}
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 1 \\
1 & 0 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 & 0
\end{bmatrix}
\begin{bmatrix}
0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 1 \\
1 & 0 & 0 & 0 & 0 \\
0 & 1 & 0 & 0 & 0
\end{bmatrix}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= V^{-1}y
\end{equation*}\]

This is why the inverse of a cyclic-shift matrix is another cyclic-shift matrix:

\[\begin{equation*}
V_1^{-1} = V_2
\end{equation*}\]

Moreover, we can note that the X-diagram of V⁻¹ is actually the horizontal flip of the X-diagram of “V”. From the previous part of this series – “transpose of a matrix” [3], we remember that the horizontal flip of an X-diagram corresponds to the transpose of that matrix. This is why the inverse of a cyclic-shift matrix is equal to its transpose:

\[\begin{equation*}
V^{-1} = V^T
\end{equation*}\]
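These observations can be checked numerically with a pure-Python sketch. The helper names `cyclic_shift`, `transpose`, `mat_mul` and `mat_vec` are my own. The sketch assumes the convention used above, where “V” shifts values upwards by ‘k’ positions. In other words, row i of “V” has its ‘1’ in column (i + k) mod n:

```python
def cyclic_shift(n, k):
    """n x n matrix V such that y = Vx has y[i] = x[(i + k) % n] (upward shift by k)."""
    return [[1 if j == (i + k) % n else 0 for j in range(n)] for i in range(n)]


def transpose(M):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def mat_vec(A, x):
    """Matrix-vector product y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


n, k = 5, 2
V = cyclic_shift(n, k)
identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]

x = [1, 2, 3, 4, 5]
y = mat_vec(V, x)
print(y)                                # [3, 4, 5, 1, 2]

V_inv = transpose(V)                    # candidate inverse: the transpose of V
print(mat_vec(V_inv, y))                # [1, 2, 3, 4, 5]
print(mat_mul(V_inv, V) == identity)    # True
print(V_inv == cyclic_shift(n, n - k))  # True: shifting down by k = shifting up by n - k
```

The last line restates the previous formula in code: the inverse (and transpose) is again a cyclic shift, this time by n - k positions upwards.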

Inverse of an exchange matrix

An exchange matrix, often denoted by “J”, is a matrix which, when multiplied by an input vector ‘x’, produces an output vector ‘y’ having all the values of ‘x’, but in reverse order. To achieve that, “J” has ‘1’s on its anti-diagonal, while all other cells are ‘0’s.

\[\begin{equation*}
\begin{pmatrix}
y_1 \\ y_2 \\ y_3 \\ y_4 \\ y_5
\end{pmatrix}
= y = Jx =
\begin{bmatrix}
0 & 0 & 0 & 0 & 1 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 1 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0
\end{bmatrix}
*
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
=
\begin{pmatrix}
x_5 \\ x_4 \\ x_3 \\ x_2 \\ x_1
\end{pmatrix}
\end{equation*}\]

Obviously, to undo such a transformation, we should apply one more exchange matrix:

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= x = J^{-1}Jx =
\begin{bmatrix}
0 & 0 & 0 & 0 & 1 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 1 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0
\end{bmatrix}
\begin{bmatrix}
0 & 0 & 0 & 0 & 1 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 1 & 0 & 0 \\
0 & 1 & 0 & 0 & 0 \\
1 & 0 & 0 & 0 & 0
\end{bmatrix}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= J^{-1}y
\end{equation*}\]

This is why the inverse of an exchange matrix is the exchange matrix itself:

\[\begin{equation*}
J^{-1} = J
\end{equation*}\]
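A quick check in the same spirit, as a pure-Python sketch (the helper `exchange` is a hypothetical name). Applying “J” twice returns the original vector, and “J” is also equal to its own transpose:

```python
def exchange(n):
    """n x n exchange matrix J: ones on the anti-diagonal, zeros elsewhere."""
    return [[1 if i + j == n - 1 else 0 for j in range(n)] for i in range(n)]


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def mat_vec(A, x):
    """Matrix-vector product y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


n = 5
J = exchange(n)
identity = [[1 if i == j else 0 for j in range(n)] for i in range(n)]

x = [1, 2, 3, 4, 5]
y = mat_vec(J, x)
print(y)                                  # [5, 4, 3, 2, 1]
print(mat_vec(J, y))                      # [1, 2, 3, 4, 5]
print(mat_mul(J, J) == identity)          # True: J undoes itself
print(J == [list(c) for c in zip(*J)])    # True: J is symmetric, so J^-1 = J = J^T
```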

Inverse of a permutation matrix

A permutation matrix is a matrix “P” which, when multiplied by an input vector ‘x’, rearranges its values in a different order. To achieve that, an n×n-sized permutation matrix “P” has ‘n’ 1s, arranged in such a way that no two 1s appear in the same row or the same column. All other cells of “P” are 0s.

\[\begin{equation*}
\begin{pmatrix}
y_1 \\ y_2 \\ y_3 \\ y_4 \\ y_5
\end{pmatrix}
= y = Px =
\begin{bmatrix}
0 & 0 & 1 & 0 & 0 \\
1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 1 \\
0 & 1 & 0 & 0 & 0
\end{bmatrix}
*
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
=
\begin{pmatrix}
x_3 \\ x_1 \\ x_4 \\ x_5 \\ x_2
\end{pmatrix}
\end{equation*}\]

Now, what kind of matrix should be the inverse of a permutation matrix? In other words, how do we undo the transformation of a permutation matrix “P”? Obviously, we need another rearrangement, which acts in reverse order. For example, if the input value x₃ was moved by “P” to the output value y₁, then the inverse permutation matrix P⁻¹ should move the input value y₁ back to the output value x₃. This means that when drawing the X-diagrams of the permutation matrices “P⁻¹” and “P”, one will be the reflection of the other.

Similarly to the case of an exchange matrix, in the case of a permutation matrix we can visually note that the X-diagrams of “P” and P⁻¹ differ only by a horizontal flip. That is why the inverse of any permutation matrix “P” is equal to its transpose:

\[\begin{equation*}
P^{-1} = P^T
\end{equation*}\]

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= x = P^{-1}Px =
\begin{bmatrix}
0 & 1 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 1 \\
1 & 0 & 0 & 0 & 0 \\
0 & 0 & 1 & 0 & 0 \\
0 & 0 & 0 & 1 & 0
\end{bmatrix}
\begin{bmatrix}
0 & 0 & 1 & 0 & 0 \\
1 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 1 & 0 \\
0 & 0 & 0 & 0 & 1 \\
0 & 1 & 0 & 0 & 0
\end{bmatrix}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3 \\ x_4 \\ x_5
\end{pmatrix}
= P^{-1}y
\end{equation*}\]
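The same reasoning can be expressed with index lists. In this pure-Python sketch, the helper names `perm_matrix` and `inverse_perm` are my own. A permutation is stored as a list `perm` meaning y[i] = x[perm[i]], with 0-based indices, so the example matrix above corresponds to `perm = [2, 0, 3, 4, 1]`. The inverse permutation sends each value back to where it came from, and its matrix turns out to be exactly the transpose of “P”:

```python
def perm_matrix(perm):
    """Permutation matrix P such that y = Px has y[i] = x[perm[i]]."""
    n = len(perm)
    return [[1 if j == perm[i] else 0 for j in range(n)] for i in range(n)]


def inverse_perm(perm):
    """Index list that undoes perm: the value taken from x[perm[i]] goes back there."""
    inv = [0] * len(perm)
    for i, p in enumerate(perm):
        inv[p] = i
    return inv


def transpose(M):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def mat_vec(A, x):
    """Matrix-vector product y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


perm = [2, 0, 3, 4, 1]            # y = (x3, x1, x4, x5, x2), written 0-based
P = perm_matrix(perm)
P_inv = perm_matrix(inverse_perm(perm))

x = [10, 20, 30, 40, 50]
y = mat_vec(P, x)
print(y)                          # [30, 10, 40, 50, 20]
print(mat_vec(P_inv, y))          # [10, 20, 30, 40, 50]
print(P_inv == transpose(P))      # True
```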

Inverse of a rotation matrix

A rotation matrix on the 2D plane is a matrix “R” which, when multiplied by a vector (x₁, x₂), rotates the point “x = (x₁, x₂)” counter-clockwise by a certain angle “θ” around the origin. Its formula is:

\[\begin{equation*}
\begin{pmatrix}
y_1 \\ y_2
\end{pmatrix}
= y = Rx =
\begin{bmatrix}
\cos(\theta) & -\sin(\theta) \\
\sin(\theta) & \phantom{+}\cos(\theta)
\end{bmatrix}
*
\begin{pmatrix}
x_1 \\ x_2
\end{pmatrix}
\end{equation*}\]

Now, what should be the inverse of a rotation matrix? How do we undo the rotation produced by a matrix “R”? Obviously, that should be another rotation matrix, this time with an angle “-θ” (or “360°-θ”):

\[\begin{equation*}
R^{-1} =
\begin{bmatrix}
\cos(-\theta) & -\sin(-\theta) \\
\sin(-\theta) & \phantom{+}\cos(-\theta)
\end{bmatrix}
=
\begin{bmatrix}
\phantom{+}\cos(\theta) & \sin(\theta) \\
-\sin(\theta) & \cos(\theta)
\end{bmatrix}
=
R^T
\end{equation*}\]

This is why the inverse of a rotation matrix is another rotation matrix. We also see that the inverse R⁻¹ is equal to the transpose of the original matrix “R”.
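The same can be verified numerically with a pure-Python sketch. The helper `rotation` and the 30° angle are illustrative choices of mine. Because of floating-point rounding, the restored point matches the original only approximately, so the comparisons use `math.isclose`:

```python
import math


def rotation(theta):
    """2x2 matrix rotating a point counter-clockwise by theta radians around the origin."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s],
            [s, c]]


def transpose(M):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def mat_vec(A, x):
    """Matrix-vector product y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


theta = math.radians(30)
R = rotation(theta)

x = [1.0, 2.0]
y = mat_vec(R, x)                             # the rotated point
back_transpose = mat_vec(transpose(R), y)     # undo with R^T
back_minus = mat_vec(rotation(-theta), y)     # undo with a rotation by -theta

print(all(math.isclose(a, b) for a, b in zip(back_transpose, x)))  # True
print(all(math.isclose(a, b) for a, b in zip(back_minus, x)))      # True
```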

Inverse of a triangular matrix

An upper-triangular matrix is a square matrix that has zeros below its main diagonal. Because of that, there are no arrows directed downwards in its X-diagram.

The horizontal arrows correspond to the cells of the diagonal, while the arrows directed upwards correspond to the cells above the diagonal.

Similarly, a lower-triangular matrix is defined as one that has zeros above its main diagonal. In this article, we will focus only on upper-triangular matrices, as inversion is performed in a similar way for lower-triangular ones.

For simplicity, let’s first address inverting a 2×2-sized upper-triangular matrix ‘A’.

Once ‘A’ is multiplied by an input vector ‘x’, the result vector “y = Ax” has the following form:

\[\begin{equation*}
y =
\begin{pmatrix}
y_1 \\ y_2
\end{pmatrix}
=
\begin{bmatrix}
a_{1,1} & a_{1,2} \\
0 & a_{2,2}
\end{bmatrix}
\begin{pmatrix}
x_1 \\ x_2
\end{pmatrix}
=
\begin{pmatrix}
\begin{aligned}
a_{1,1}x_1 + a_{1,2}x_2 \\
a_{2,2}x_2
\end{aligned}
\end{pmatrix}
\end{equation*}\]

Now, when calculating the inverse matrix A⁻¹, we want it to act in the reverse direction.

How should we restore (x₁, x₂) from (y₁, y₂)? The first and simplest step is to restore x₂ using only y₂, because y₂ was originally affected only by x₂. We don’t need the value of y₁ for that.

Next, how should we restore x₁? This time, we can’t use only y₁, because the value “y₁ = a₁,₁x₁ + a₁,₂x₂” is a mixture of x₁ and x₂. But we can restore x₁ by using both y₁ and y₂ properly: y₂ will help to filter out the impact of x₂, so that the pure value of x₁ can be restored.
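Written out as equations, these two steps give the following. This is a worked sketch in the notation of the matrix above, assuming a₁,₁ and a₂,₂ are non-zero so that the divisions are possible:

\[\begin{equation*}
x_2 = \frac{1}{a_{2,2}} y_2, \hspace{1cm}
x_1 = \frac{y_1 - a_{1,2}x_2}{a_{1,1}} = \frac{1}{a_{1,1}} y_1 - \frac{a_{1,2}}{a_{1,1}a_{2,2}} y_2
\end{equation*}\]

Collecting the coefficients of y₁ and y₂ into a matrix gives:

\[\begin{equation*}
A^{-1} =
\begin{bmatrix}
\frac{1}{a_{1,1}} & -\frac{a_{1,2}}{a_{1,1}a_{2,2}} \\[0.2cm]
0 & \frac{1}{a_{2,2}}
\end{bmatrix}
\end{equation*}\]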

We now see that the inverse A⁻¹ of the upper-triangular matrix “A” is also an upper-triangular matrix.

What about triangular matrices of larger sizes? This time, let’s take a 3×3-sized matrix and find its inverse analytically.

The values of the output vector ‘y’ are now obtained from ‘x’ in the following way:

\[\begin{equation*}
y =
\begin{pmatrix}
y_1 \\ y_2 \\ y_3
\end{pmatrix}
= Ax =
\begin{bmatrix}
a_{1,1} & a_{1,2} & a_{1,3} \\
0 & a_{2,2} & a_{2,3} \\
0 & 0 & a_{3,3}
\end{bmatrix}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3
\end{pmatrix}
=
\begin{pmatrix}
\begin{aligned}
a_{1,1}x_1 + a_{1,2}x_2 + a_{1,3}x_3 \\
a_{2,2}x_2 + a_{2,3}x_3 \\
a_{3,3}x_3
\end{aligned}
\end{pmatrix}
\end{equation*}\]

As we are interested in building the inverse matrix A⁻¹, our aim is to find (x₁, x₂, x₃) given the values of (y₁, y₂, y₃):

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3
\end{pmatrix}
= A^{-1}y =
\begin{bmatrix}
\text{?} & \text{?} & \text{?} \\
\text{?} & \text{?} & \text{?} \\
\text{?} & \text{?} & \text{?}
\end{bmatrix}
*
\begin{pmatrix}
y_1 \\ y_2 \\ y_3
\end{pmatrix}
\end{equation*}\]

In other words, we must solve the system of linear equations shown above.

Doing that will first restore the value of x₃ as:

\[\begin{equation*}
y_3 = a_{3,3}x_3, \hspace{1cm} x_3 = \frac{1}{a_{3,3}} y_3
\end{equation*}\]

which will clarify the cells of the last row of A⁻¹:

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3
\end{pmatrix}
= A^{-1}y =
\begin{bmatrix}
\text{?} & \text{?} & \text{?} \\
\text{?} & \text{?} & \text{?} \\
0 & 0 & \frac{1}{a_{3,3}}
\end{bmatrix}
*
\begin{pmatrix}
y_1 \\ y_2 \\ y_3
\end{pmatrix}
\end{equation*}\]

Having figured out x₃, we can move all its occurrences to the left side of the system:

\[\begin{equation*}
\begin{pmatrix}
y_1 - a_{1,3}x_3 \\
y_2 - a_{2,3}x_3 \\
y_3 - a_{3,3}x_3
\end{pmatrix}
=
\begin{pmatrix}
\begin{aligned}
a_{1,1}x_1 + a_{1,2}x_2 \\
a_{2,2}x_2 \\
0
\end{aligned}
\end{pmatrix}
\end{equation*}\]

which will allow us to calculate x₂ as:

\[\begin{equation*}
y_2 - a_{2,3}x_3 = a_{2,2}x_2, \hspace{1cm}
x_2 = \frac{y_2 - a_{2,3}x_3}{a_{2,2}} = \frac{y_2 - (a_{2,3}/a_{3,3})y_3}{a_{2,2}}
\end{equation*}\]

This already clarifies the cells of the second row of A⁻¹:

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3
\end{pmatrix}
= A^{-1}y =
\begin{bmatrix}
\text{?} & \text{?} & \text{?} \\[0.2cm]
0 & \frac{1}{a_{2,2}} & -\frac{a_{2,3}}{a_{2,2}a_{3,3}} \\[0.2cm]
0 & 0 & \frac{1}{a_{3,3}}
\end{bmatrix}
*
\begin{pmatrix}
y_1 \\ y_2 \\ y_3
\end{pmatrix}
\end{equation*}\]

Finally, having figured out the values of x₃ and x₂, we can do the same trick, this time moving x₂ to the left side of the system:

\[\begin{equation*}
\begin{pmatrix}
\begin{aligned}
y_1 - a_{1,3}x_3 & - a_{1,2}x_2 \\
y_2 - a_{2,3}x_3 & - a_{2,2}x_2 \\
y_3 - a_{3,3}x_3 &
\end{aligned}
\end{pmatrix}
=
\begin{pmatrix}
a_{1,1}x_1 \\
0 \\
0
\end{pmatrix}
\end{equation*}\]

from which x₁ will be derived as:

\[\begin{equation*}
\begin{aligned}
& y_1 - a_{1,3}x_3 - a_{1,2}x_2 = a_{1,1}x_1, \\
& x_1
= \frac{y_1 - a_{1,3}x_3 - a_{1,2}x_2}{a_{1,1}}
= \frac{y_1 - (a_{1,3}/a_{3,3})y_3 - a_{1,2}\frac{y_2 - (a_{2,3}/a_{3,3})y_3}{a_{2,2}}}{a_{1,1}}
\end{aligned}
\end{equation*}\]

so the first row of matrix A⁻¹ will also be clarified:

\[\begin{equation*}
\begin{pmatrix}
x_1 \\ x_2 \\ x_3
\end{pmatrix}
= A^{-1}y =
\begin{bmatrix}
\frac{1}{a_{1,1}} & -\frac{a_{1,2}}{a_{1,1}a_{2,2}} & \frac{a_{1,2}a_{2,3} - a_{1,3}a_{2,2}}{a_{1,1}a_{2,2}a_{3,3}} \\[0.2cm]
0 & \frac{1}{a_{2,2}} & -\frac{a_{2,3}}{a_{2,2}a_{3,3}} \\[0.2cm]
0 & 0 & \frac{1}{a_{3,3}}
\end{bmatrix}
*
\begin{pmatrix}
y_1 \\ y_2 \\ y_3
\end{pmatrix}
\end{equation*}\]

After deriving A⁻¹ analytically, we can see that it is also an upper-triangular matrix.

Paying attention to the sequence of actions that we used here to calculate A⁻¹, we can now say for sure that the inverse of any invertible upper-triangular matrix ‘A’ (i.e., one with no zeros on its main diagonal) is also an upper-triangular matrix.

Similar reasoning shows that the inverse of a lower-triangular matrix is another lower-triangular matrix.
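The back-substitution procedure used above generalizes to any size. Here is a minimal pure-Python sketch; the function name `upper_triangular_inverse` and the sample matrix are my own, not from the series. It assumes all diagonal entries are non-zero. It builds A⁻¹ one column at a time, and for each column it restores the last unknown first, just as x₃, then x₂, then x₁ were restored above:

```python
from fractions import Fraction


def upper_triangular_inverse(A):
    """Invert an upper-triangular matrix by back substitution.

    Column j of the inverse solves A x = e_j (the j-th unit vector).
    Assumes every diagonal entry A[i][i] is non-zero.
    """
    n = len(A)
    inv = [[Fraction(0)] * n for _ in range(n)]
    for j in range(n):
        e = [1 if i == j else 0 for i in range(n)]   # plays the role of 'y'
        x = [Fraction(0)] * n
        for i in range(n - 1, -1, -1):
            # move the already known unknowns x[i+1:] to the left side, then divide
            rest = sum(A[i][t] * x[t] for t in range(i + 1, n))
            x[i] = (e[i] - rest) / Fraction(A[i][i])
        for i in range(n):
            inv[i][j] = x[i]
    return inv


def mat_mul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[2, 1, 3],
     [0, 4, 5],
     [0, 0, 6]]
A_inv = upper_triangular_inverse(A)

for row in A_inv:
    print(" ".join(str(v) for v in row))
# 1/2 -1/8 -7/48
# 0 1/4 -5/24
# 0 0 1/6

print(mat_mul(A, A_inv) == [[1, 0, 0], [0, 1, 0], [0, 0, 1]])  # True
```

For this sample matrix, the top-right entry comes out as -7/48. That agrees with the formula (a₁,₂a₂,₃ - a₁,₃a₂,₂)/(a₁,₁a₂,₂a₃,₃) = (1·5 - 3·4)/(2·4·6). The zeros below the diagonal of the result also appear without any special handling.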

A numerical example of inverting a chain of matrices

Let’s take another look at why the order of matrices is reversed when a chain of matrices is inverted. Recalling the formula:

\[\begin{equation*}
(AB)^{-1} = B^{-1}A^{-1}
\end{equation*}\]

This time, we will take matrices of specific types for both ‘A’ and ‘B’. The first matrix, “A = V”, will be a cyclic-shift matrix.

Let’s recall here that to restore the input vector ‘x’, the inverse V⁻¹ should do the opposite – cyclically shift the values of the argument vector ‘y’ downwards.

The second matrix, “B = S”, will be a diagonal matrix with different values on its main diagonal.

To restore the original vector ‘x’, the inverse S⁻¹ of such a scale matrix must halve only the first 2 values of its argument vector ‘y’.

Now, what kind of behavior will the product matrix “VS” have? When calculating “y = VSx”, it will double only the first 2 values of the input vector ‘x’, and then cyclically shift the entire result upwards.

We already know that after the output vector “y = VSx” is calculated, to reverse the impact of the product matrix “VS” and restore the input vector ‘x’, we should do:

\[\begin{equation*}
x = (VS)^{-1}y = S^{-1}V^{-1}y
\end{equation*}\]

In other words, the order of the matrices ‘V’ and ‘S’ should be reversed during inversion.

And what will happen if we try to invert the impact of “VS” improperly, without reversing the order of the matrices, i.e., assuming that V⁻¹S⁻¹ is what should be used for it?

We see that the original vector (x₁, x₂, x₃, x₄) from the right side is now not restored on the left side. Instead, we have the vector (2x₁, x₂, 0.5x₃, x₄) there. One reason for this is that the value x₃ should not be halved on its path. It actually gets halved, because at the moment when matrix S⁻¹ is applied, x₃ appears at the second position from the top. The same applies to the path of the value x₁: it was doubled by “S” but is never halved back, because when S⁻¹ is applied it sits at the bottom position. All of that results in an altered vector on the left side.
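This experiment can be reproduced numerically with a pure-Python sketch on 4-element vectors. The shift amount of one position is my assumption: it is the only upward shift that yields the vector (2x₁, x₂, 0.5x₃, x₄) described above. `S` doubles the first 2 values, as implied by S⁻¹ halving them. The helper names are my own:

```python
from fractions import Fraction


def cyclic_shift(n, k):
    """n x n matrix V such that y = Vx has y[i] = x[(i + k) % n] (upward shift by k)."""
    return [[1 if j == (i + k) % n else 0 for j in range(n)] for i in range(n)]


def transpose(M):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*M)]


def mat_vec(A, x):
    """Matrix-vector product y = Ax."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]


n = 4
V = cyclic_shift(n, 1)      # assumption: upward cyclic shift by one position
V_inv = transpose(V)        # downward cyclic shift by one position
S = [[2, 0, 0, 0],
     [0, 2, 0, 0],
     [0, 0, 1, 0],
     [0, 0, 0, 1]]          # doubles only the first 2 values
half = Fraction(1, 2)
S_inv = [[half, 0, 0, 0],
         [0, half, 0, 0],
         [0, 0, 1, 0],
         [0, 0, 0, 1]]      # halves only the first 2 values

x = [1, 2, 3, 4]
y = mat_vec(V, mat_vec(S, x))                  # y = VSx

correct = mat_vec(S_inv, mat_vec(V_inv, y))    # S^-1 V^-1 y, reversed order
wrong = mat_vec(V_inv, mat_vec(S_inv, y))      # V^-1 S^-1 y, order not reversed

print(correct == x)                                         # True
print(wrong == [2 * x[0], x[1], Fraction(x[2], 2), x[3]])   # True
```

With x = (1, 2, 3, 4), the improper order returns (2, 2, 3/2, 4), which is exactly (2x₁, x₂, 0.5x₃, x₄).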

Conclusion

In this story, we have looked at the matrix inversion operation A⁻¹ as something that undoes the transformation of the given matrix “A”. We have observed why inverting a chain of matrices like (ABC)⁻¹ actually reverses the order of multiplication, resulting in C⁻¹B⁻¹A⁻¹. We also got a visual perspective on why inverting several special types of matrices results in another matrix of the same type.

Thanks for reading!

This is probably the last part of my “Understanding Matrices” series. I hope you enjoyed reading all 4 parts! If so, feel free to follow me on LinkedIn, as hopefully other articles will be coming soon, and I will post updates there!

My gratitude to:

– Asya Papyan, for the precise design of all the illustrations used ( behance.net/asyapapyan ).

– Roza Galstyan, for the careful review of the draft and helpful suggestions ( linkedin.com/in/roza-galstyan-a54a8b352/ ).

If you enjoyed reading this story, feel free to connect with me on LinkedIn ( linkedin.com/in/tigran-hayrapetyan-cs/ ).

All images used, unless otherwise noted, were designed at the request of the author.

References:

[1] – Understanding matrices | Part 1: Matrix-Vector Multiplication

[2] – Understanding matrices | Part 2: Matrix-Matrix Multiplication