were first introduced for images, and for images they are usually easy to understand.

A filter slides over pixels and detects edges, shapes, or textures. You can read the article I wrote earlier to see how CNNs work for images in Excel.

For text, the idea is the same.

Instead of pixels, we slide filters over words.

Instead of visual patterns, we detect linguistic patterns.

And many important patterns in text are very local. Let’s take these very simple examples:

- “good” is positive

- “bad” is negative

- “not good” is negative

- “not bad” is often positive

In my previous article, we saw how to represent words as numbers using embeddings.

We also saw a key limitation: when we used a global average, word order was completely ignored.

From the model’s point of view, “not good” and “good not” looked exactly the same.
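The order problem can be checked in a few lines of Python. This is only a minimal sketch: the two-value vectors in `EMB` and the helper `average_embedding` are illustrative assumptions, not the embeddings used in the previous article.

```python
# Toy word vectors; the values are assumptions chosen for illustration.
EMB = {"good": (1.0, 0.0), "not": (0.0, 1.0)}

def average_embedding(words):
    """Global average of the word vectors: position plays no role."""
    vectors = [EMB.get(w, (0.0, 0.0)) for w in words]
    n = len(vectors)
    return tuple(sum(v[d] for v in vectors) / n for d in range(2))

a = average_embedding(["not", "good"])
b = average_embedding(["good", "not"])
print(a, b, a == b)  # both averages are (0.5, 0.5)
```

Any model that only receives this average cannot tell the two word orders apart.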

So the next challenge is clear: we want the model to take word order into account.

A 1D Convolutional Neural Network is a natural tool for this, because it scans a sentence with small sliding windows and reacts when it recognizes familiar local patterns.

1. Understanding a 1D CNN for Text: Architecture and Depth

1.1. Building a 1D CNN for text in Excel

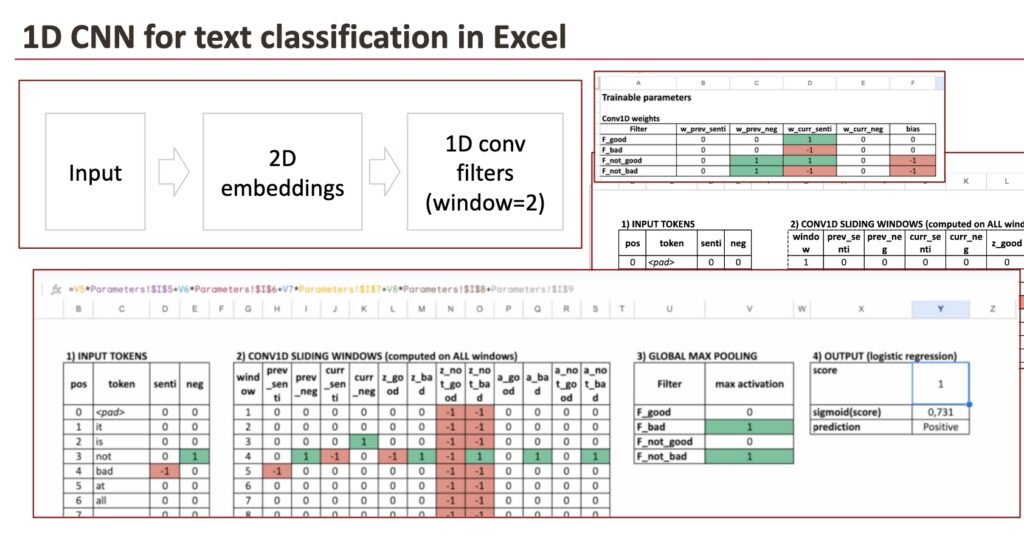

In this article, we build a 1D CNN architecture in Excel with the following components:

- Embedding dictionary

We use a 2-dimensional embedding, because one dimension is not enough for this task. One dimension encodes sentiment, and the second dimension encodes negation.

- Conv1D layer

This is the core component of a CNN architecture. It consists of filters that slide across the sentence with a window length of 2 words. We choose 2 words to keep things simple.

- ReLU and global max pooling

These steps keep only the strongest matches detected by the filters. We will also discuss the fact that ReLU is optional.

- Logistic regression

This is the final classification layer, which combines the detected patterns into a probability.

This pipeline corresponds to a standard CNN text classifier.

The only difference here is that we explicitly write and visualize the forward pass in Excel.

1.2. What “deep learning” means in this architecture

Before going further, let us take a step back.

Yes, I know, I do this often, but having a global view of models really helps to understand them.

The definition of deep learning is often blurred.

For many people, deep learning simply means “many layers”.

Here, I’ll take a slightly different point of view.

What really characterizes deep learning is not the number of layers, but the depth of the transformation applied to the input data.

With this definition, even a model with a single convolution layer can be considered deep learning, because the input is transformed into a more structured and abstract representation.

On the other hand, taking raw input data, applying one-hot encoding, and stacking many fully connected layers does not necessarily make a model deep in a meaningful sense.

In theory, if no transformation of the input is needed, one layer is enough.

In CNNs, the presence of multiple layers has a very concrete motivation.

Consider a sentence like:

This movie is not very good

With a single convolution layer and a small window, we can detect simple local patterns such as: “very + good”

But we cannot yet detect higher-level patterns such as: “not + (very good)”

This is why CNNs are often stacked:

- the first layer detects simple local patterns,

- the second layer combines them into more complex ones.

In this article, we deliberately focus on one convolution layer.

This makes every step visible and easy to understand in Excel, while keeping the logic identical to deeper CNN architectures.

2. Turning words into embeddings

Let us start with some simple words. We want to detect negation, so we will use the following terms, surrounded by other words that we will not model:

- “good”

- “bad”

- “not good”

- “not bad”

We keep the representation intentionally small so that every step is visible.

We will only use a dictionary of three words: good, bad, and not.

All other words get the zero vector as their embedding.

2.1 Why one dimension is not enough

In a previous article on sentiment detection, we used a single dimension.

That worked for “good” versus “bad”.

But now we want to handle negation.

One dimension can only represent one concept well.

So we need two dimensions:

- senti: sentiment polarity

- neg: negation marker

2.2 The embedding dictionary

Each word becomes a 2D vector:

- good → (senti = +1, neg = 0)

- bad → (senti = -1, neg = 0)

- not → (senti = 0, neg = +1)

- any other word → (0, 0)

This is not how real embeddings look. Real embeddings are learned, high-dimensional, and not directly interpretable.

But for understanding how Conv1D works, this toy embedding is perfect.

In Excel, this is just a lookup table.

In a real neural network, this embedding matrix would be trainable.
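Outside Excel, the same lookup table can be sketched as a Python dictionary. The names `EMBEDDINGS`, `tokenize`, and `embed` are hypothetical, and the regular-expression tokenizer is an assumption made for this sketch (it lowercases the text and keeps only runs of letters).

```python
import re

# (senti, neg) for the three known words, as in the dictionary above.
EMBEDDINGS = {
    "good": (1, 0),
    "bad": (-1, 0),
    "not": (0, 1),
}
UNKNOWN = (0, 0)  # every other word maps to the zero vector

def tokenize(sentence):
    """Lowercase the sentence and keep only alphabetic tokens."""
    return re.findall(r"[a-z]+", sentence.lower())

def embed(sentence):
    """Replace each word by its 2D vector."""
    return [EMBEDDINGS.get(word, UNKNOWN) for word in tokenize(sentence)]

print(embed("it is not bad at all"))
# [(0, 0), (0, 0), (0, 1), (-1, 0), (0, 0), (0, 0)]
```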

3. Conv1D filters as sliding pattern detectors

Now we arrive at the core idea of a 1D CNN.

A Conv1D filter is nothing mysterious. It is just a small set of weights plus a bias that slides over the sentence.

Because:

- each word embedding has 2 values (senti, neg)

- our window contains 2 words

every filter has:

- 4 weights (2 dimensions × 2 positions)

- 1 bias

That’s all.

You can think of a filter as repeatedly asking the same question at every position:

“Do these two neighboring words match a pattern I care about?”
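In code, that question is a weighted sum. The sketch below assumes the weight order (previous senti, previous neg, current senti, current neg); the helper `apply_filter` and the example weights are illustrative choices, not values taken from the spreadsheet.

```python
def apply_filter(window, weights, bias):
    """Score one window of two (senti, neg) vectors with 4 weights and 1 bias."""
    flat = [value for vector in window for value in vector]  # 4 inputs
    return sum(w * x for w, x in zip(weights, flat)) + bias

# Hypothetical filter: reacts to neg(previous word) and senti(current word).
weights = [0, 1, 1, 0]
bias = -1

z = apply_filter([(0, 1), (1, 0)], weights, bias)  # window "not good"
print(z)  # 1
```

The same `weights` and `bias` are reused for every window of the sentence, which is what makes it a convolution.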

3.1 Sliding windows: how Conv1D sees a sentence

Consider this sentence:

it is not bad at all

We choose a window size of 2 words.

That means the model looks at every adjacent pair:

- (it, is)

- (is, not)

- (not, bad)

- (bad, at)

- (at, all)

Important point:

The filters slide everywhere, even when both words are neutral (all zeros).
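The list of pairs above can be generated mechanically. A minimal sketch, assuming no padding at the sentence boundaries; the helper name `sliding_windows` is hypothetical.

```python
def sliding_windows(words, size=2):
    """Return every run of `size` consecutive words, in reading order."""
    return [tuple(words[i:i + size]) for i in range(len(words) - size + 1)]

words = "it is not bad at all".split()
pairs = sliding_windows(words)
print(pairs)
# [('it', 'is'), ('is', 'not'), ('not', 'bad'), ('bad', 'at'), ('at', 'all')]
```

A sentence of n words gives n - 1 windows of size 2, so a single-word input produces no window at all unless padding is added.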

3.2 Four intuitive filters

To make the behavior easy to understand, we use 4 filters.

Filter 1 – “I see GOOD”

This filter looks only at the sentiment of the current word.

Plain-text equation for one window:

z = senti(current_word)

If the word is “good”, z = 1

If the word is “bad”, z = -1

If the word is neutral, z = 0

After ReLU, negative values become 0. ReLU is optional, though, as discussed in section 4.3.

Filter 2 – “I see BAD”

This one is symmetric.

z = -senti(current_word)

So:

- “bad” → z = 1

- “good” → z = -1 → ReLU → 0

Filter 3 – “I see NOT GOOD”

This filter looks at two things at the same time:

- neg(previous_word)

- senti(current_word)

Equation:

z = neg(previous_word) + senti(current_word) - 1

Why the “-1”?

It acts like a threshold so that both conditions must be true.

Results:

- “not good” → 1 + 1 - 1 = 1 → activated

- “is good” → 0 + 1 - 1 = 0 → not activated

- “not bad” → 1 - 1 - 1 = -1 → ReLU → 0

Filter 4 – “I see NOT BAD”

Same idea, with the opposite sign on the sentiment:

z = neg(previous_word) + (-senti(current_word)) - 1

Results:

- “not bad” → 1 + 1 - 1 = 1

- “not good” → 1 - 1 - 1 = -1 → 0

This is a very important intuition:

A CNN filter can behave like a local logical rule, learned from data.
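The four equations can be written as four weight vectors plus a bias. This is a sketch under one assumption: the weights are ordered as (previous senti, previous neg, current senti, current neg). The names `FILTERS` and `z_values` are hypothetical.

```python
EMB = {"good": (1, 0), "bad": (-1, 0), "not": (0, 1)}

# name: ((w_prev_senti, w_prev_neg, w_cur_senti, w_cur_neg), bias)
FILTERS = {
    "good":     ((0, 0,  1, 0),  0),   # z = senti(current)
    "bad":      ((0, 0, -1, 0),  0),   # z = -senti(current)
    "not_good": ((0, 1,  1, 0), -1),   # z = neg(previous) + senti(current) - 1
    "not_bad":  ((0, 1, -1, 0), -1),   # z = neg(previous) - senti(current) - 1
}

def z_values(previous_word, current_word):
    """Raw score (before ReLU) of each filter on one two-word window."""
    x = EMB.get(previous_word, (0, 0)) + EMB.get(current_word, (0, 0))
    return {
        name: sum(w * v for w, v in zip(weights, x)) + bias
        for name, (weights, bias) in FILTERS.items()
    }

print(z_values("not", "good"))
# {'good': 1, 'bad': -1, 'not_good': 1, 'not_bad': -1}
```

In this toy setup the weights are written by hand; in a trained network they would be found by gradient descent.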

3.3 Final result of the sliding windows

Here are the final results of these 4 filters.

4. ReLU and max pooling: from local to global

4.1 ReLU

After computing z for each window, we apply ReLU:

ReLU(z) = max(0, z)

This means:

- negative evidence is ignored

- positive evidence is kept

Each filter becomes a presence detector.

By the way, ReLU is an activation function of the neural network. So a neural network is not that difficult after all.

4.2 Global max pooling

Then comes global max pooling.

For each filter, we keep only:

max activation over all windows

Interpretation:

“I don’t care where the pattern appears, only whether it appears strongly somewhere.”

At this point, the whole sentence is summarized by 4 numbers:

- strongest “good” signal

- strongest “bad” signal

- strongest “not good” signal

- strongest “not bad” signal
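As a sketch of this step, the snippet below applies ReLU and global max pooling to the sentence “it is not bad at all”. The z values are computed by hand from the four filter equations of section 3.2, one value per window in reading order; the names `relu`, `global_max_pool`, and `z_by_filter` are hypothetical.

```python
def relu(z):
    return max(0, z)

def global_max_pool(activations):
    """Keep the strongest activation, wherever it occurs in the sentence."""
    return max(activations)

# Windows: (it, is), (is, not), (not, bad), (bad, at), (at, all)
z_by_filter = {
    "good":     [0, 0, -1, 0, 0],
    "bad":      [0, 0, 1, 0, 0],
    "not_good": [-1, -1, -1, -1, -1],
    "not_bad":  [-1, -1, 1, -1, -1],
}

summary = {
    name: global_max_pool([relu(z) for z in zs])
    for name, zs in z_by_filter.items()
}
print(summary)  # {'good': 0, 'bad': 1, 'not_good': 0, 'not_bad': 1}
```

Both the “bad” and the “not bad” detectors fire here; deciding which one matters more is left to the final layer.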

4.3 What happens if we remove ReLU?

Without ReLU:

- negative values stay negative

- max pooling may select negative values

This mixes two ideas:

- absence of a pattern

- opposite of a pattern

The filter stops being a clean detector and becomes a signed score.

The model could still work mathematically, but interpretation becomes harder.
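A small comparison makes the difference concrete. This is a sketch using the “good” filter (z = senti(current_word)) on two one-window inputs; the helper `global_max` and the example inputs are assumptions made for illustration.

```python
def global_max(z_per_window, use_relu=True):
    """Pool one filter's scores, optionally applying ReLU first."""
    if use_relu:
        z_per_window = [max(0, z) for z in z_per_window]
    return max(z_per_window)

z_is_bad = [-1]  # single window (is, bad): the opposite of "good"
z_is_ok = [0]    # single window (is, ok): "good" is simply absent

with_relu = (global_max(z_is_bad), global_max(z_is_ok))
without_relu = (global_max(z_is_bad, use_relu=False),
                global_max(z_is_ok, use_relu=False))
print(with_relu)     # (0, 0)
print(without_relu)  # (-1, 0)
```

With ReLU, the feature only answers “is the pattern present?”. Without it, the pooled value can go negative, so the same feature also carries information about the opposite pattern.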

5. The final layer is logistic regression

Now we combine these signals.

We compute a score using a linear combination:

score = 2 × F_good - 2 × F_bad - 3 × F_not_good + 3 × F_not_bad - bias

Then we convert the score into a probability:

probability = 1 / (1 + exp(-score))

That is exactly logistic regression.

So yes:

- the CNN extracts features: this step can be seen as feature engineering, right?

- logistic regression makes the final decision: it is a classic machine learning model we know well
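The final layer can be sketched in a few lines. Two assumptions are made here: the weight on F_not_bad is taken as positive, so that “not bad” pushes the score toward positive sentiment as in the worked examples, and the bias is set to 0 because its value is not given in the text. The names `WEIGHTS`, `BIAS`, and `predict_probability` are hypothetical.

```python
import math

# Assumed weights of the linear layer (see the lead-in for the sign choice).
WEIGHTS = {"good": 2, "bad": -2, "not_good": -3, "not_bad": 3}
BIAS = 0.0  # assumption: the text does not give a value

def predict_probability(features):
    """Linear score followed by the logistic (sigmoid) function."""
    score = sum(WEIGHTS[name] * features[name] for name in WEIGHTS) - BIAS
    return 1 / (1 + math.exp(-score))

p = predict_probability({"good": 1, "bad": 1, "not_good": 0, "not_bad": 1})
print(round(p, 3))  # 0.953
```

A probability above 0.5 is read as positive sentiment, below 0.5 as negative.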

6. Full examples with sliding filters

Example 1

“it is bad, so it is not good at all”

The sentence contains:

After max pooling:

- F_good = 1 (because “good” exists)

- F_bad = 1

- F_not_good = 1

- F_not_bad = 0

The final score becomes strongly negative.

Prediction: negative sentiment.

Example 2

“it is good. yes, not bad.”

The sentence contains:

After max pooling:

- F_good = 1

- F_bad = 1 (because the word “bad” appears)

- F_not_good = 0

- F_not_bad = 1

The final linear layer learns that “not bad” should outweigh “bad”.

Prediction: positive sentiment.

This also shows something important: max pooling keeps all strong signals.

The final layer decides how to combine them.
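Both examples can be reproduced with a compact end-to-end forward pass. This is a sketch rather than a copy of the spreadsheet: the filter weight layout, the positive weight on F_not_bad, a bias of 0, and the letters-only tokenizer are all assumptions, and the function name `forward` is hypothetical.

```python
import math
import re

EMB = {"good": (1, 0), "bad": (-1, 0), "not": (0, 1)}
# name: ((w_prev_senti, w_prev_neg, w_cur_senti, w_cur_neg), bias)
FILTERS = {
    "good": ((0, 0, 1, 0), 0),
    "bad": ((0, 0, -1, 0), 0),
    "not_good": ((0, 1, 1, 0), -1),
    "not_bad": ((0, 1, -1, 0), -1),
}
# Assumed final-layer weights; the bias is taken as 0 in this sketch.
OUT_WEIGHTS = {"good": 2, "bad": -2, "not_good": -3, "not_bad": 3}

def forward(sentence):
    """Embedding -> Conv1D (window 2) -> ReLU -> global max -> sigmoid."""
    words = re.findall(r"[a-z]+", sentence.lower())
    vectors = [EMB.get(w, (0, 0)) for w in words]
    features = {}
    for name, (weights, bias) in FILTERS.items():
        activations = [
            max(0, sum(w * v for w, v in zip(weights, prev + cur)) + bias)
            for prev, cur in zip(vectors, vectors[1:])
        ]
        features[name] = max(activations, default=0)
    score = sum(OUT_WEIGHTS[n] * features[n] for n in features)
    return features, 1 / (1 + math.exp(-score))

f1, p1 = forward("it is bad, so it is not good at all")
f2, p2 = forward("it is good. yes, not bad.")
print(f1, round(p1, 3))  # score -3, probability 0.047
print(f2, round(p2, 3))  # score +3, probability 0.953
```

The pooled features match the two lists above, and the two probabilities fall on opposite sides of 0.5.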

Example 3: a limitation that explains why CNNs get deeper

Try this sentence:

“it is not very bad”

With a window of size 2, the model sees:

- (it, is)

- (is, not)

- (not, very)

- (very, bad)

It never sees (not, bad), so the “not bad” filter never fires.

This explains why real models use:

- larger windows

- multiple convolution layers

- or other architectures for longer dependencies
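The effect of the window size can be checked directly. A minimal sketch, with a hypothetical helper `windows` and no padding assumed:

```python
def windows(words, size):
    """Return every run of `size` consecutive words."""
    return [tuple(words[i:i + size]) for i in range(len(words) - size + 1)]

words = "it is not very bad".split()
pairs = windows(words, 2)
triples = windows(words, 3)

print(("not", "bad") in pairs)             # False
print(("not", "very", "bad") in triples)   # True
```

A window of size 3 does contain both “not” and “bad”, but the filter would then need 6 weights (3 positions × 2 dimensions) set so that it reacts to this pattern.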

Conclusion

The strength of Excel is visibility.

You can see:

- the embedding dictionary

- all filter weights and biases

- every sliding window

- every ReLU activation

- the max pooling result

- the logistic regression parameters

Training is simply the process of adjusting these numbers.

Once you see that, CNNs stop being mysterious.

They become what they really are: structured, trainable pattern detectors that slide over data.