Some disillusionment was inevitable. When OpenAI released a free web app called ChatGPT in late 2022, it changed the course of an entire industry, and of several world economies. Tens of millions of people started talking to their computers, and their computers started talking back. We were enchanted, and we expected more.

We got it. Technology companies scrambled to stay ahead, putting out rival products that outdid one another with each new release: voice, images, video. With nonstop one-upmanship, AI companies have presented each new product drop as a major breakthrough, reinforcing a widespread faith that this technology would just keep getting better. Boosters told us that progress was exponential. They posted charts plotting how far we’d come since last year’s models: Look how the line goes up! Generative AI could do anything, it seemed.

Well, 2025 has been a year of reckoning.

This story is part of MIT Technology Review’s Hype Correction package, a series that resets expectations about what AI is, what it makes possible, and where we go next.

For a start, the heads of the top AI companies made promises they couldn’t keep. They told us that generative AI would replace the white-collar workforce, bring about an age of abundance, make scientific discoveries, and help find new cures for disease. FOMO across the world’s economies, at least in the Global North, made CEOs tear up their playbooks and try to get in on the action.

That’s when the shine started to come off. The technology may have been billed as a universal multitool that could revamp outdated business processes and cut costs. But a number of studies published this year suggest that firms are failing to make the AI pixie dust work its magic. Surveys and trackers from a range of sources, including the US Census Bureau and Stanford University, have found that business uptake of AI tools is stalling. And when the tools do get tried out, many projects stay stuck in the pilot stage. Without broad buy-in across the economy, it is not clear how the big AI companies will ever recoup the incredible amounts they’ve already spent on this race.

At the same time, updates to the core technology are no longer the step changes they once were.

The highest-profile example of this was the botched launch of GPT-5 in August. Here was OpenAI, the firm that had ignited (and to a large extent sustained) the current boom, set to release a brand-new generation of its technology. OpenAI had been hyping GPT-5 for months: “PhD-level expert in anything,” CEO Sam Altman crowed. On another occasion Altman posted, without comment, an image of the Death Star from Star Wars, which OpenAI stans took to be a symbol of ultimate power: Coming soon! Expectations were huge.

And yet, when it landed, GPT-5 seemed to be... more of the same? What followed was the biggest vibe shift since ChatGPT first appeared three years ago. “The era of boundary-breaking advancements is over,” Yannic Kilcher, an AI researcher and popular YouTuber, announced in a video posted two days after GPT-5 came out: “AGI is not coming. It seems very much that we’re in the Samsung Galaxy era of LLMs.”

A lot of people (me included) have made the analogy with phones. For a decade or so, smartphones were the most exciting consumer tech in the world. Today, new products drop from Apple or Samsung with little fanfare. While superfans pore over small upgrades, to most people this year’s iPhone now looks and feels a lot like last year’s iPhone. Is that where we are with generative AI? And is it a problem? Sure, smartphones have become the new normal. But they changed the way the world works, too.

To be clear, the last few years have been filled with genuine “Wow” moments. There have been stunning leaps in the quality of video generation models, the problem-solving chops of so-called reasoning models, and the world-class competition wins of the latest coding and math models. But this remarkable technology is only a few years old, and in many ways it’s still experimental. Its successes come with big caveats.

Perhaps we need to readjust our expectations.

The big reset

Let’s be careful here: The pendulum from hype to anti-hype can swing too far. It would be rash to dismiss this technology just because it has been oversold. The knee-jerk response when AI fails to live up to its hype is to say that progress has hit a wall. But that misunderstands how research and innovation in tech work. Progress has always moved in fits and starts. There are ways over, around, and under walls.

Take a step back from the GPT-5 launch. It came hot on the heels of a series of remarkable models that OpenAI had shipped in the previous months. These included o1 and o3, first-of-their-kind reasoning models that introduced the industry to a whole new paradigm, and Sora 2, which raised the bar for video generation once again. That doesn’t sound like hitting a wall to me.

AI is really good! Look at Nano Banana Pro, the new image generation model from Google DeepMind that can turn a book chapter into an infographic, and much more. It’s just there, for free, on your phone.

And yet you can’t help but wonder: When the wow factor is gone, what’s left? How will we view this technology a year or five from now? Will we think it was worth the colossal costs, both financial and environmental?

With that in mind, here are four ways to think about the state of AI at the end of 2025: the start of a much-needed hype correction.

01: LLMs aren’t everything

In some ways, it’s the hype around large language models, not AI as a whole, that needs correcting. It has become obvious that LLMs aren’t the doorway to artificial general intelligence, or AGI, a hypothetical technology that some insist will one day be able to do any (cognitive) task a human can.

Even an AGI evangelist like Ilya Sutskever now highlights the limitations of LLMs, a technology he had a huge hand in creating. Sutskever is chief scientist and cofounder at the AI startup Safe Superintelligence, and former chief scientist and cofounder at OpenAI. In an interview with Dwarkesh Patel in November, he said that LLMs are very good at learning how to do a lot of specific tasks, but they don’t seem to learn the principles behind those tasks.

It’s the difference between learning how to solve a thousand different algebra problems and learning how to solve any algebra problem. “The thing which I think is the most fundamental is that these models somehow just generalize dramatically worse than people,” Sutskever said.

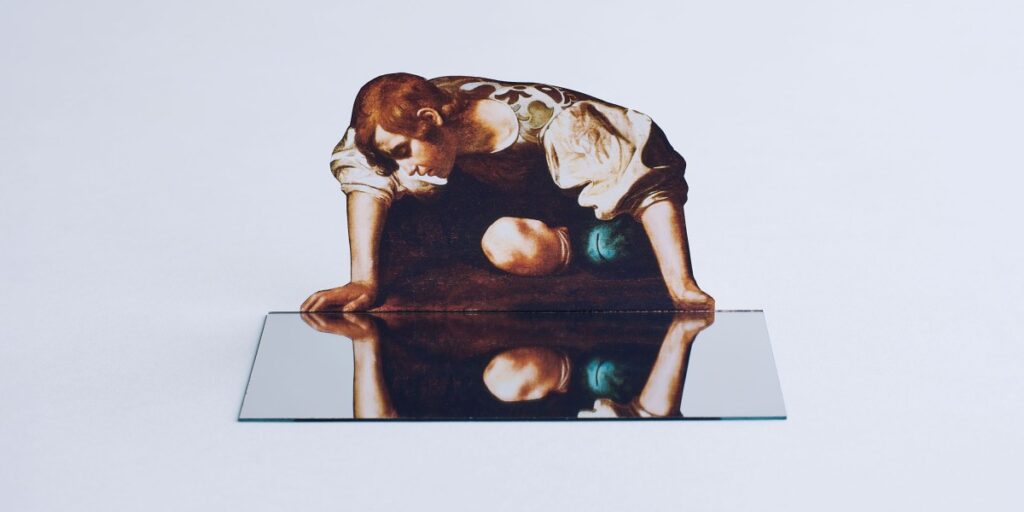

It’s easy to imagine that LLMs can do anything because their use of language is so compelling. It’s astonishing how well this technology can mimic the way people write and speak. And we’re hardwired to see intelligence in things that behave in certain ways, whether it’s there or not. In other words, we have built machines with humanlike behavior and cannot resist seeing a humanlike mind behind them.

That’s understandable. LLMs have been part of mainstream life for only a few years. But in that time, marketers have preyed on our shaky sense of what the technology can really do, pumping up expectations and turbocharging the hype. As we live with this technology and come to understand it better, those expectations should fall back down to earth.

02: AI is not a quick fix to all your problems

In July, researchers at MIT published a study that became a tentpole talking point in the disillusionment camp. The headline result was that a whopping 95% of businesses that had tried using AI had found zero value in it.

The general thrust of that claim was echoed by other research, too. In November, a study by researchers at Upwork, a company that runs an online marketplace for freelancers, found that agents powered by top LLMs from OpenAI, Google DeepMind, and Anthropic failed to complete many straightforward workplace tasks by themselves.

This is miles off Altman’s prediction: “We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies,” he wrote on his personal blog in January.

But what gets missed in that MIT study is that the researchers’ measure of success was pretty narrow. That 95% failure rate accounts for companies that had tried to implement bespoke AI systems but had not yet scaled them beyond the pilot stage after six months. It shouldn’t be too surprising that a lot of experiments with experimental technology don’t pan out right away.

That number also doesn’t include the use of LLMs by employees outside of official pilots. The MIT researchers found that around 90% of the companies they surveyed had a kind of AI shadow economy, in which workers were using personal chatbot accounts. But the value of that shadow economy was not measured.

When the Upwork study looked at how well agents completed tasks together with people who knew what they were doing, success rates shot up. The takeaway seems to be that a lot of people are figuring out for themselves how AI might help them with their jobs.

That fits with something noted by Andrej Karpathy, the AI researcher and influencer who coined the term “vibe coding.” Chatbots are better than the average human at a lot of different things, such as giving legal advice, fixing bugs, or doing high school math. But they are not better than an expert human. Karpathy suggests this may be why chatbots have proved popular with individual consumers, helping non-experts with everyday questions and tasks. They have not upended the economy, however, because that would require outperforming skilled employees at their jobs.

That may change. For now, don’t be surprised that AI has not (yet) had the impact on jobs that boosters said it would. AI is not a quick fix, and it cannot replace humans. But there’s a lot to play for. The ways in which AI could be integrated into everyday workflows and business pipelines are still being tried out.

03: Are we in a bubble? (If so, what kind of bubble?)

If AI is a bubble, is it like the subprime mortgage bubble of 2008 or the internet bubble of 2000? Because there’s a big difference.

The subprime bubble wiped out a big part of the economy, because when it burst it left nothing behind except debt and overvalued real estate. The dot-com bubble wiped out a lot of companies, which sent ripples around the world. But it left behind the infant internet: a global network of cables and a handful of startups, like Google and Amazon, that became the tech giants of today.

Then again, maybe we’re in a bubble unlike either of those. After all, there’s no real business model for LLMs right now. We don’t yet know what the killer app will be, or if there will even be one.

And many economists are concerned about the unprecedented amounts of money being sunk into the infrastructure required to build capacity and serve the projected demand. But what if that demand doesn’t materialize? Add to that the weird circularity of many of these deals, with Nvidia paying OpenAI to pay Nvidia, and so on. It’s no surprise everybody’s got a different take on what’s coming.

Some investors remain sanguine. In November, Glenn Hutchins gave a few reasons not to worry in an interview with the Technology Business Programming Network podcast. Hutchins is cofounder of Silver Lake Partners, a major international private equity firm. “Every one of these data centers, almost all of them, has a solvent counterparty that is contracted to take all the output they’re built to suit,” he said. In other words, it’s not a case of “Build it and they’ll come.” The customers are already locked in.

And, he pointed out, one of the biggest of those solvent counterparties is Microsoft. “Microsoft has the world’s best credit rating,” Hutchins said. “If you sign a deal with Microsoft to take the output from your data center, Satya is good for it.”

Many CEOs will be looking back at the dot-com bubble and trying to learn its lessons. Here’s one way to see it: The companies that went bust back then didn’t have the money to go the distance. Those that survived the crash thrived.

With that lesson in mind, AI companies today are trying to pay their way through what may or may not be a bubble. Stay in the race; don’t get left behind. Even so, it’s a desperate gamble.

But there’s another lesson too. Companies that might look like sideshows can turn into unicorns fast. Take Synthesia, which makes avatar generation tools for businesses. Nathan Benaich, cofounder of the VC firm Air Street Capital, first heard about the company a few years ago, back when fear of deepfakes was rife. He admits that at the time he wasn’t sure what its tech was for and thought there was no market for it.

“We didn’t know who would pay for lip-synching and voice cloning,” he says. “Turns out there’s a lot of people who wanted to pay for it.” Synthesia now has around 55,000 corporate customers and brings in around $150 million a year. In October, the company was valued at $4 billion.

04: ChatGPT was not the beginning, and it won’t be the end

ChatGPT was the culmination of a decade’s worth of progress in deep learning, the technology that underpins all of modern AI. The seeds of deep learning itself were planted in the 1980s. The field as a whole goes back at least to the 1950s. If progress is measured against that backdrop, generative AI has barely got going.

Meanwhile, research is at a fever pitch. There are more high-quality submissions to the world’s top AI conferences than ever before. This year, organizers of some of those conferences resorted to turning down papers that reviewers had already approved, just to manage numbers. (At the same time, preprint servers like arXiv have been flooded with AI-generated research slop.)

“It’s back to the age of research again,” Sutskever said in that Dwarkesh interview, talking about the current bottleneck with LLMs. That’s not a setback; that’s the start of something new.

“There’s always a lot of hype beasts,” says Benaich. But he thinks there’s an upside to that: Hype attracts the money and talent needed to make real progress. “You know, it was only like two or three years ago that the people who built these models were basically research nerds that just happened on something that kind of worked,” he says. “Now everybody who’s good at anything in technology is working on this.”

Where do we go from here?

The relentless hype hasn’t come just from companies drumming up business for their vastly expensive new technologies. There’s a large cohort of people, inside and outside the industry, who want to believe in the promise of machines that can read, write, and think. It’s a wild decades-old dream.

But the hype was never sustainable, and that’s a good thing. We now have a chance to reset expectations and see this technology for what it really is. We can assess its true capabilities, understand its flaws, and take the time to learn how to apply it in valuable (and beneficial) ways. “We’re still trying to figure out how to invoke certain behaviors from this insanely high-dimensional black box of information and skills,” says Benaich.

This hype correction was long overdue. But know that AI isn’t going anywhere. We don’t even fully understand what we’ve built so far, let alone what’s coming next.