and medium companies succeed in building data and ML platforms, building AI platforms is now profoundly difficult. This post discusses three key reasons why you should be cautious about building AI platforms and proposes my thoughts on promising directions instead.

Disclaimer: This is based on personal views and does not apply to cloud providers and data/ML SaaS companies. They should instead double down on the research of AI platforms.

Where I’m Coming From

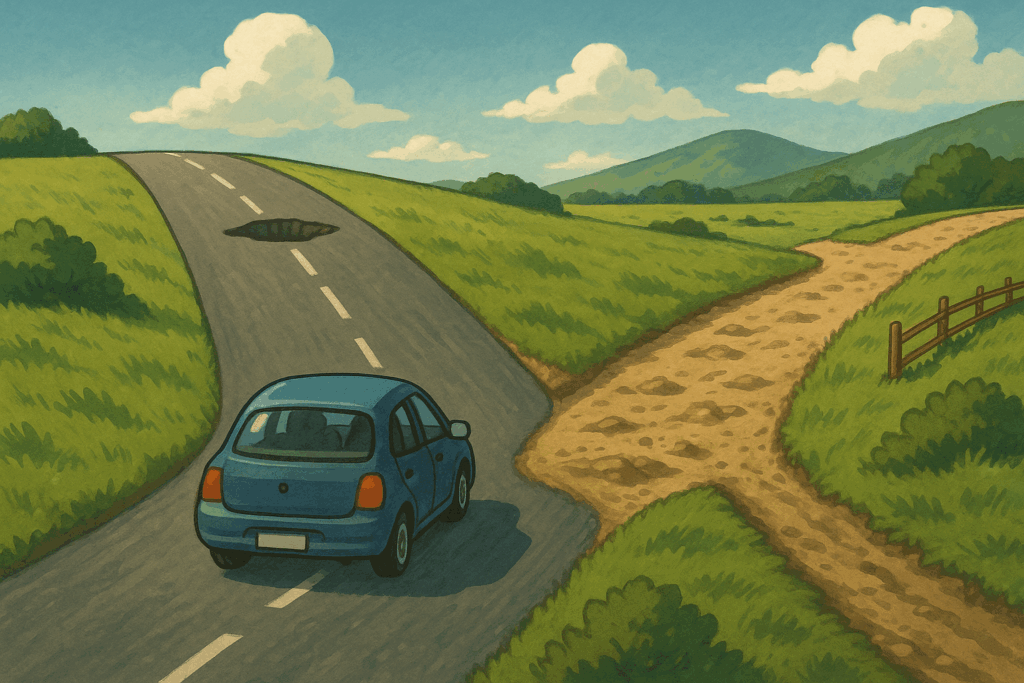

In my previous article From Data Platform to ML Platform in Towards Data Science, I shared how a data platform evolves into an ML platform. This journey applies to most small and medium-sized companies. However, there is no clear path yet for small and medium-sized companies to continue developing their platforms into AI platforms. Leveling up to AI platforms, the path forks into two directions:

- AI Infrastructure: The “New Electricity” (AI inference) is more efficient when centrally generated. It’s a game for Big Tech and large model providers.

- AI Applications Platform: You can’t build the “beach house” (AI platform) on constantly shifting ground. Evolving AI capabilities and newly emerging development paradigms make lasting standardization hard to find.

However, there are still directions that are likely to remain important even as AI models continue to evolve. They are covered at the end of this post.

High Barrier of AI Infrastructure

While Databricks is maybe only several times better than your own Spark jobs, DeepSeek could be 100x more efficient than you at LLM inference. Training and serving an LLM requires significantly more investment in infrastructure and, just as importantly, control over the model’s structure.

In this series, I briefly shared the infrastructure for LLM training, which includes parallel training strategies, topology designs, and training accelerations. On the hardware side, besides high-performance GPUs and TPUs, a significant portion of the cost goes to networking setup and high-performance storage services. Clusters require an additional RDMA network to enable non-blocking, point-to-point connections for data exchange between instances. The orchestration services must support complex job scheduling, failover strategies, hardware issue detection, and GPU resource abstraction and pooling. The training SDK needs to facilitate asynchronous checkpointing, data processing, and model quantization.

When it comes to model serving, model providers usually build inference efficiency into the model development stages. Model providers likely have better model quantization strategies, which can produce the same model quality with a significantly smaller model size. They are also likely to develop a better model parallel strategy because of the control they have over the model structure. This can increase the batch size during LLM inference, which effectively increases GPU utilization. Additionally, large LLM players have logistical advantages that give them access to cheaper routers, mainframes, and GPU chips. More importantly, stronger control over model structure and better model parallel capability mean model providers can leverage cheaper GPU devices. For model consumers relying on open-source models, GPU deprecation could be a bigger concern.
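To see why smaller weights translate into larger batches, here is a rough sketch under loudly stated assumptions: a hypothetical dense transformer with grouped-query attention (the config numbers are illustrative and are not DeepSeek’s architecture), a hypothetical 160 GB memory budget, a KV cache kept at 16 bits, and activations and runtime overhead ignored. Whatever memory quantized weights free up can hold KV cache for more concurrent sequences.

```python
from dataclasses import dataclass


@dataclass
class ModelConfig:
    """Hypothetical dense transformer config (illustrative numbers only)."""
    n_params: float   # total parameter count
    n_layers: int
    n_kv_heads: int   # key/value heads (grouped-query attention)
    head_dim: int


def weight_bytes(cfg: ModelConfig, bits_per_weight: int) -> float:
    # Memory needed just to hold the weights at a given precision.
    return cfg.n_params * bits_per_weight / 8


def kv_cache_bytes_per_seq(cfg: ModelConfig, seq_len: int, kv_bits: int = 16) -> float:
    # Each layer caches one K and one V vector per KV head per token.
    return 2 * cfg.n_layers * cfg.n_kv_heads * cfg.head_dim * seq_len * kv_bits / 8


def max_batch_size(cfg: ModelConfig, mem_bytes: float, bits_per_weight: int, seq_len: int) -> int:
    # Memory left after loading the weights is spent on KV cache, one slot per sequence.
    # Simplification: activations, fragmentation, and framework overhead are ignored.
    free = mem_bytes - weight_bytes(cfg, bits_per_weight)
    if free <= 0:
        return 0
    return int(free // kv_cache_bytes_per_seq(cfg, seq_len))


cfg = ModelConfig(n_params=70e9, n_layers=80, n_kv_heads=8, head_dim=128)
budget = 160e9  # hypothetical memory budget in bytes
for bits in (16, 8, 4):
    print(f"{bits}-bit weights -> max batch {max_batch_size(cfg, budget, bits, seq_len=4096)}")
```

With these assumed numbers, the loop prints maximum batch sizes of 14, 67, and 93 for 16-, 8-, and 4-bit weights. The hard part, which the sketch does not capture, is quantizing without losing model quality; that is where control over the model structure pays off.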

Take DeepSeek R1 as an example. Let’s say you’re using a p5e.48xlarge AWS instance, which provides 8 H200 chips connected with NVLink. It will cost you $35 per hour. Assume you do as well as Nvidia and achieve 151 tokens/second. To generate 1 million output tokens, it will cost you $64 (1 million / (151 * 3600) * $35). How much does DeepSeek charge per million tokens? Only $2! DeepSeek can achieve roughly 60 times the efficiency of your cloud deployment (assuming a 50% margin for DeepSeek).
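The back-of-the-envelope arithmetic above can be reproduced in a few lines. The inputs are the ones quoted in this post ($35/hour, 151 tokens/second, $2 per million tokens, an assumed 50% margin); the function names are illustrative, and real prices and throughput vary with region, batch size, and serving stack.

```python
SECONDS_PER_HOUR = 3600


def self_hosted_cost_per_million(hourly_price_usd: float, tokens_per_second: float) -> float:
    # Hours needed to generate 1M output tokens, times the instance's hourly price.
    hours = 1_000_000 / (tokens_per_second * SECONDS_PER_HOUR)
    return hours * hourly_price_usd


def efficiency_ratio(self_cost: float, provider_price: float, provider_margin: float) -> float:
    # Back out the provider's own cost from its list price and an assumed margin.
    provider_cost = provider_price * (1 - provider_margin)
    return self_cost / provider_cost


cost = self_hosted_cost_per_million(hourly_price_usd=35, tokens_per_second=151)
ratio = efficiency_ratio(cost, provider_price=2, provider_margin=0.5)
print(f"self-hosted: ${cost:.2f} per 1M tokens, ~{ratio:.0f}x the provider's cost")
```

This prints about $64.39 per million tokens and a ratio of about 64x, which the post rounds to 60 times.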

So, LLM inference power is indeed like electricity. The analogy reflects the variety of applications that LLMs can power; it also implies that inference is most efficient when centrally generated. However, you should still self-host LLM services for privacy-sensitive use cases, just as hospitals keep their own electricity generators for emergencies.

Constantly Shifting Ground

Investing in AI infrastructure is a bold game, and building lightweight platforms for AI applications comes with its own hidden pitfalls. With AI model capabilities evolving rapidly, there is no agreed-upon paradigm for AI applications; as a result, there is no solid foundation on which to build an AI applications platform.

The simple answer to that is: be patient.

If we take a holistic view of data and ML platforms, development paradigms emerge only when the capabilities of algorithms converge.

| Domain | Algorithms Emerge | Solutions Emerge | Large Platforms Emerge |
|---|---|---|---|
| Data Platform | 2004: MapReduce (Google) | 2010–2015: Spark, Flink, Presto, Kafka | 2020–Now: Databricks, Snowflake |
| ML Platform | 2012: ImageNet (AlexNet, CNN breakthrough) | 2015–2017: TensorFlow, PyTorch, Scikit-learn | 2018–Now: SageMaker, MLflow, Kubeflow, Databricks ML |
| AI Platform | 2017: Transformers (Attention Is All You Need) | 2020–2022: ChatGPT, Claude, Gemini, DeepSeek | 2023–Now: ?? |

After several years of fierce competition, a few large model players remain standing in the arena. However, AI capabilities have not yet converged. As models keep advancing, any development paradigm built today may quickly become obsolete. Big players have just started to take their stab at agent development platforms, and new solutions are popping up like popcorn in an oven. I believe winners will eventually emerge. For now, building their own agent standards is a tricky call for small and medium-sized companies.

Path Dependency of Old Success

Another challenge of building an AI platform is rather subtle. It concerns the mindset of platform builders: whether they carry path dependency from their earlier success in building data and ML platforms.

As shared above, since 2017 the data and ML development paradigms have been well aligned, and the most critical task for the ML platform has been standardization and abstraction. However, the development paradigm for AI applications is not yet established. If the team follows the earlier success story of building a data and ML platform, they might end up prioritizing standardization at the wrong time. Possible directions are:

- Build an AI Model Gateway: Provide centralised audit and logging of requests to LLMs.

- Build an AI Agent Framework: Develop an in-house SDK for creating AI agents with better connectivity to the internal ecosystem.

- Standardise RAG Practices: Build a standard data indexing flow to lower the bar for engineers building knowledge services.
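To make the first direction concrete, here is a minimal sketch of what “centralised audit and logging” could mean: every caller goes through one entry point that records who called which model, request and response sizes, status, and latency. The `ModelGateway` class, the backend signature, and the fake `echo-model` backend are all hypothetical; a real gateway would call a provider SDK, handle auth and rate limits, and write to a durable log store.

```python
import time
import uuid
from typing import Callable, Dict, List

# A backend takes (model_name, prompt) and returns the completion text.
ModelBackend = Callable[[str, str], str]
# An audit sink receives one record per request (e.g. a log shipper or DB writer).
AuditSink = Callable[[dict], None]


class ModelGateway:
    """Hypothetical single entry point that audits every dispatched LLM request."""

    def __init__(self, backends: Dict[str, ModelBackend], sink: AuditSink):
        self._backends = backends
        self._sink = sink

    def complete(self, caller: str, model: str, prompt: str) -> str:
        # Unknown models are rejected up front, before any record is created.
        if model not in self._backends:
            raise KeyError(f"unknown model: {model}")
        record = {
            "request_id": str(uuid.uuid4()),
            "caller": caller,
            "model": model,
            "prompt_chars": len(prompt),
            "timestamp": time.time(),
        }
        start = time.perf_counter()
        try:
            completion = self._backends[model](model, prompt)
            record.update(status="ok", completion_chars=len(completion))
            return completion
        except Exception as exc:
            record.update(status="error", error=repr(exc))
            raise
        finally:
            # Runs on success and failure, so every dispatched request is audited.
            record["latency_s"] = time.perf_counter() - start
            self._sink(record)


# Usage with a fake backend and an in-memory audit log.
audit_log: List[dict] = []
gateway = ModelGateway({"echo-model": lambda model, prompt: prompt.upper()}, audit_log.append)
result = gateway.complete(caller="search-team", model="echo-model", prompt="hello")
print(result, audit_log[0]["status"])
```

The value of such a gateway depends entirely on callers actually routing through it; a team calling a provider directly leaves no audit trail.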

These initiatives can indeed be valuable. But the ROI really depends on the scale of your company. Regardless, you are going to face the following challenges:

- Keeping up with the latest AI developments.

- Winning adoption when it is easy for customers to bypass your abstraction.

If builders of data and ML platforms are like “Closet Organizers”, AI builders should now act like “Fashion Designers”. That requires embracing new ideas, running rapid experiments, and even accepting a degree of imperfection.

My Thoughts on Promising Directions

Even though many challenges lie ahead, remember that it is still rewarding to work on AI platforms right now, because you have substantial leverage that wasn’t there before:

- The transformative power of AI is greater than that of data and machine learning.

- The motivation to adopt AI is stronger than ever.

If you pick the right path and strategy, the transformation you can bring to your organisation is significant. Here are some of my thoughts on directions that might face less disruption as AI models scale further. I think they are as important as AI platformisation:

- High-quality, semantically rich data products: Data products with high accuracy and accountability, rich descriptions, and trustworthy metrics will “radiate” more impact as AI models grow.

- Multi-modal Data Serving: A scalable knowledge service behind an MCP server may require several types of databases (OLTP, OLAP, NoSQL, and Elasticsearch) to support high-performance data serving. Maintaining both a single source of truth and good performance with constant reverse ETL jobs is challenging.

- AI DevOps: AI-centric software development, maintenance, and analytics. Code-generation accuracy has increased greatly over the past year.

- Experimentation and Monitoring: Given the increased uncertainty of AI applications, evaluating and monitoring them is even more critical.

These are my thoughts on building AI platforms. Please let me know yours as well. Cheers!