to start studying LLMs with all this content over the internet, and new things are coming up every day. I’ve read some guides from Google, OpenAI, and Anthropic and noticed how each focuses on different aspects of Agents and LLMs. So, I decided to consolidate these concepts here and add other important ideas that I think are essential if you’re starting to study this field.

This post covers key concepts with code examples to make things concrete. I’ve prepared a Google Colab notebook with all the examples so you can practice the code while reading the article. To use it, you’ll need an API key; check section 5 of my previous article if you don’t know how to get one.

While this guide gives you the essentials, I recommend reading the full articles from these companies to deepen your understanding.

I hope this helps you build a solid foundation as you start your journey with LLMs!

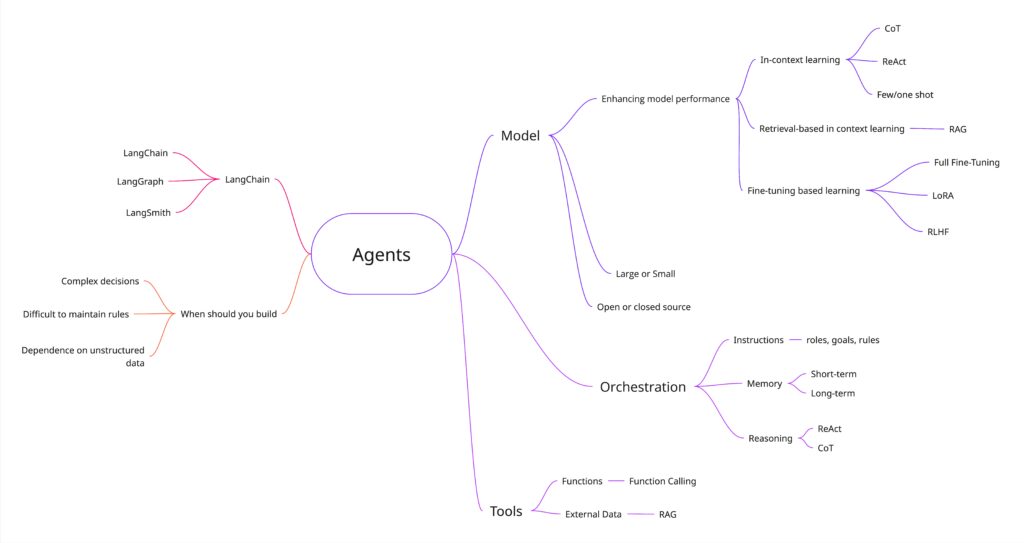

In this MindMap, you can check a summary of this article’s content.

What’s an agent?

“Agent” can be defined in several ways. Each company whose guide I’ve read defines agents differently. Let’s examine these definitions and compare them:

“Agents are systems that independently accomplish tasks on your behalf.” (OpenAI)

“In its most fundamental form, a Generative AI agent can be defined as an application that attempts to achieve a goal by observing the world and acting upon it using the tools that it has at its disposal. Agents are autonomous and can act independently of human intervention, especially when provided with proper goals or objectives they are meant to achieve. Agents can also be proactive in their approach to reaching their goals. Even in the absence of explicit instruction sets from a human, an agent can reason about what it should do next to achieve its ultimate goal.” (Google)

“Some customers define agents as fully autonomous systems that operate independently over extended periods, using various tools to accomplish complex tasks. Others use the term to describe more prescriptive implementations that follow predefined workflows. At Anthropic, we categorize all these variations as agentic systems, but draw an important architectural distinction between workflows and agents:

– Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

– Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.” (Anthropic)

The three definitions emphasize different aspects of an agent. However, they all agree that agents:

- Operate autonomously to perform tasks

- Make decisions about what to do next

- Use tools to achieve goals

An agent consists of three main components:

- Model

- Instructions/Orchestration

- Tools

First, I’ll define each component in a simple sentence so you have an overview. Then, in the following sections, we’ll dive into each component.

- Model: a language model that generates the output.

- Instructions/Orchestration: explicit guidelines defining how the agent behaves.

- Tools: allow the agent to interact with external data and services.

Model

Model refers to the language model (LM). In simple terms, it predicts the next word or sequence of words based on the words it has already seen.
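To make "predicting the next word" concrete, here is a toy sketch that counts which word follows which in a tiny made-up corpus. It is only an illustration of the idea: real language models use neural networks over tokens, not word-pair counts, and the names `train_bigram` and `predict_next` are hypothetical helpers.

```python
from collections import Counter, defaultdict

def train_bigram(text: str) -> dict:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def predict_next(follows: dict, word: str):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

# Made-up training text, just for the illustration
corpus = "the cat sat on the mat . the cat ate the fish . the cat slept"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # cat
print(predict_next(model, "sat"))  # on
```

The prediction depends entirely on what was seen during "training", which is the same limitation that tools and retrieval try to work around for real models.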

If you want to understand how these models work behind the black box, here is a video from 3Blue1Brown that explains it.

Agents vs models

Agents and models are not the same. The model is a component of an agent, and it is used by it. While models are limited to predicting a response based on their training data, agents extend this functionality by acting independently to achieve specific goals.

Here is a summary of the main differences between Models and Agents from Google’s paper.

Large Language Models

The other L in LLM stands for “Large”, which mainly refers to the number of parameters the model has. These models can have hundreds of billions or even trillions of parameters. They are trained on huge amounts of data and need heavy computing power to train.

Examples of LLMs are GPT-4o, Gemini 2.0 Flash, Gemini 2.5 Pro, and Claude 3.7 Sonnet.

Small Language Models

We also have Small Language Models (SLMs). They are used for simpler tasks where you need less data and fewer parameters, are lighter to run, and are easier to control.

SLMs have fewer parameters (typically under 10 billion), dramatically reducing the computational costs and energy usage. They focus on specific tasks and are trained on smaller datasets. This maintains a balance between performance and resource efficiency.

Examples of SLMs are Llama 3.1 8B (Meta), Gemma 2 9B (Google), and Mistral 7B (Mistral AI).

Open Source vs Closed Source

These models can be open source or closed. Being open source means that the code (and sometimes the model weights and training data, too) is publicly available for anyone to use freely, understand how it works internally, and adjust for specific tasks.

A closed model means that the code isn’t publicly available. Only the company that developed it can control its use, and users can only access it through APIs or paid services. Sometimes, they have a free tier, as Gemini does.

Here, you can check some open source models on Hugging Face.

Those with * in size mean this information is not publicly available, but there are rumors of hundreds of billions or even trillions of parameters.

Instructions/Orchestration

Instructions are explicit guidelines and guardrails defining how the agent behaves. In its most fundamental form, an agent would consist of just “Instructions” for this component, as defined in OpenAI’s guide. However, the agent could have more than just “Instructions” to handle more complex scenarios. In Google’s paper, they call this component “Orchestration” instead, and it involves three layers:

- Instructions

- Memory

- Model-based Reasoning/Planning

Orchestration follows a cyclical pattern. The agent gathers information, processes it internally, and then uses those insights to determine its next move.

Instructions

The instructions could be the model’s goals, profile, roles, rules, and any information you think is important to enhance its behavior.

Here is an example:

system_prompt = """
You are a friendly programming tutor.
Always explain concepts in a simple and clear way, using examples when possible.
If the user asks something unrelated to programming, politely bring the conversation back to programming topics.
"""

In this example, we told the LLM its role, the expected behavior, how we wanted the output (simple and with examples when possible), and set limits on what it’s allowed to talk about.

Model-based Reasoning/Planning

Some reasoning techniques, such as ReAct and Chain-of-Thought, give the orchestration layer a structured way to take in information, perform internal reasoning, and produce informed decisions.

Chain-of-Thought (CoT) is a prompt engineering technique that enables reasoning capabilities through intermediate steps. It is a way of prompting a language model to generate a step-by-step explanation or reasoning process before arriving at a final answer. This method helps the model break down the problem and not skip any intermediate tasks, which avoids reasoning failures.

Prompting example:

system_prompt = """
You are the assistant for a tiny candle shop.
Step 1: Check whether the user mentions either of our candles:
• Forest Breeze (woodsy scent, 40 h burn, $18)
• Vanilla Glow (warm vanilla, 35 h burn, $16)
Step 2: List any assumptions the user makes
(e.g. "Vanilla Glow lasts 50 h" or "Forest Breeze is unscented").
Step 3: If an assumption is wrong, correct it politely.
Then answer the question in a friendly tone.
Mention only the two candles above; we don't sell anything else.
Use exactly this output format:
Step 1: <your reasoning>
Step 2: <your reasoning>
Step 3: <your reasoning>
Response to user: <final answer>
"""

Here is an example of the model output for the user query: “Hi! I’d like to buy the Vanilla Glow. Is it $10?”. You can see the model following our guidelines from each step to build the final answer.

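Because the prompt asks for a fixed "Step N: ... / Response to user: ..." format, the reasoning and the final answer can be separated in code, for example to show only the final answer to the customer while logging the steps. The sketch below assumes the model followed the format exactly. `parse_cot_output` is a hypothetical helper, and `sample_output` is a hand-written stand-in, not a real model response.

```python
import re

def parse_cot_output(text: str) -> dict:
    """Split a response in the 'Step N: ... / Response to user: ...' format."""
    pattern = re.compile(r"^(Step \d+|Response to user):\s*", re.MULTILINE)
    parts = pattern.split(text)
    # re.split with one capture group yields [preamble, label1, body1, label2, body2, ...]
    labels, bodies = parts[1::2], parts[2::2]
    return {label: body.strip() for label, body in zip(labels, bodies)}

# Hand-written text in the requested format (not actual model output)
sample_output = """Step 1: The user mentions Vanilla Glow.
Step 2: The user assumes Vanilla Glow costs $10.
Step 3: That is incorrect; Vanilla Glow costs $16.
Response to user: Thanks for your interest! Vanilla Glow is $16, not $10."""

parsed = parse_cot_output(sample_output)
print(parsed["Response to user"])
```

If the model drifts from the format, the dictionary will simply lack the expected keys, so production code would want to check for that.
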
ReAct is another prompt engineering technique that combines reasoning and acting. It provides a thought process strategy for language models to reason and take action on a user query. The agent continues in a loop until it accomplishes the task. This technique overcomes weaknesses of reasoning-only methods like CoT, such as hallucination, because it grounds its reasoning in external information obtained through actions.

Prompting example:

system_prompt = """You are an agent that can call two tools:
1. CurrencyAPI:
• input: {base_currency (3-letter code), quote_currency (3-letter code)}
• returns: exchange rate (float)
2. Calculator:
• input: {arithmetic_expression}
• returns: result (float)
Follow **strictly** this response format:
Thought: <your reasoning>
Action: <ToolName>[<arguments>]
Observation: <tool result>
… (repeat Thought/Action/Observation as needed)
Answer: <final answer for the user>
Never output anything else. If no tool is needed, skip directly to Answer.
"""

Here, I haven’t implemented the functions (the model is hallucinating to get the currency), so it’s just an example of the reasoning trace:

These techniques are good to use when you need transparency and control over what answer the agent is giving, or what action it is taking, and why. They help you debug your system, and if you analyze the traces, they can provide signals for improving prompts.
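To show what the ReAct loop looks like once the tools actually run, here is a minimal sketch that uses a scripted stand-in instead of a real LLM, so it executes without an API key. Everything in it is an assumption for illustration: the tool functions, the made-up exchange rate, and the canned replies. A real agent would send the growing transcript to the model on each turn instead of reading from `SCRIPTED_REPLIES`.

```python
import re

# Hypothetical tools; the exchange rate is a made-up number, not real data.
def currency_api(args: str) -> float:
    base, quote = [part.strip() for part in args.split(",")]
    fake_rates = {("USD", "BRL"): 5.0}
    return fake_rates[(base, quote)]

def calculator(expression: str) -> float:
    # Only digits, whitespace, and basic arithmetic symbols are allowed to reach eval().
    if not re.fullmatch(r"[\d\s.+\-*/()]+", expression):
        raise ValueError(f"Unsupported expression: {expression!r}")
    return float(eval(expression))

TOOLS = {"CurrencyAPI": currency_api, "Calculator": calculator}

# Scripted stand-in for the LLM: one canned reply per turn.
SCRIPTED_REPLIES = iter([
    "Thought: I need the USD to BRL rate.\nAction: CurrencyAPI[USD, BRL]",
    "Thought: Now I multiply 20 by the rate.\nAction: Calculator[20 * 5.0]",
    "Thought: I have the result.\nAnswer: 20 USD is about 100.0 BRL.",
])

def fake_model(transcript: str) -> str:
    # A real model would read the transcript; this stand-in ignores it.
    return next(SCRIPTED_REPLIES)

def run_react(question: str, max_turns: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_turns):
        reply = fake_model(transcript)
        transcript += "\n" + reply
        answer = re.search(r"^Answer:\s*(.*)$", reply, re.MULTILINE)
        if answer:
            return answer.group(1)
        action = re.search(r"^Action:\s*(\w+)\[(.*)\]$", reply, re.MULTILINE)
        if action is None:
            raise ValueError("Model reply had neither Action nor Answer")
        tool_name, tool_args = action.groups()
        # Run the requested tool and feed the result back as an Observation.
        observation = TOOLS[tool_name](tool_args)
        transcript += f"\nObservation: {observation}"
    raise RuntimeError("No answer within max_turns")

final_answer = run_react("How much is 20 USD in BRL?")
print(final_answer)
```

The key point is that the Observation lines come from code you run, not from the model, which is what grounds the reasoning in external information.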

If you want to read more, these techniques were proposed by Google’s researchers in the papers Chain of Thought Prompting Elicits Reasoning in Large Language Models and REACT: SYNERGIZING REASONING AND ACTING IN LANGUAGE MODELS.

Memory

LLMs don’t have memory built in. This “Memory” is some content you pass inside your prompt to give the model context. We can refer to two types of memory: short-term and long-term.

- Short-term memory refers to the immediate context the model has access to during an interaction. This could be the latest message, the last N messages, or a summary of previous messages. The amount may vary based on the model’s context limitations: once you hit that limit, you could drop older messages to make room for new ones.

- Long-term memory involves storing important information beyond the model’s context window for future use. To work around the context limit, you could summarize past conversations or extract key information and save it externally, typically in a vector database. When needed, the relevant information is retrieved using Retrieval-Augmented Generation (RAG) techniques to refresh the model’s understanding. We’ll talk about RAG in the following section.
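The "last N messages" strategy for short-term memory can be sketched in a few lines. This is a minimal illustration that counts messages, whereas real context limits are measured in tokens; `trim_history` is a hypothetical helper name.

```python
def trim_history(history: list, max_messages: int) -> list:
    """Keep only the most recent `max_messages` entries."""
    if max_messages <= 0:
        return []  # guard: history[-0:] would return the whole list
    return history[-max_messages:]

chat_history = []
for turn in range(1, 6):
    chat_history.append({"role": "user", "content": f"question {turn}"})
    chat_history.append({"role": "assistant", "content": f"answer {turn}"})
    # Drop the oldest messages once the window is full
    chat_history = trim_history(chat_history, max_messages=4)

print([message["content"] for message in chat_history])
# ['question 4', 'answer 4', 'question 5', 'answer 5']
```

With this window the model would no longer "remember" turns 1 to 3, which is where summaries or long-term memory come in.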

Here is just a simple example of managing short-term memory manually. You can check the Google Colab notebook for this code execution and a more detailed explanation.

import os
from google import genai

# Gemini client (expects the GEMINI_API_KEY environment variable to be set)
client = genai.Client(api_key=os.getenv("GEMINI_API_KEY"))

# System prompt
system_prompt = """
You are the assistant for a tiny candle shop.
Step 1: Check whether the user mentions either of our candles:
• Forest Breeze (woodsy scent, 40 h burn, $18)
• Vanilla Glow (warm vanilla, 35 h burn, $16)
Step 2: List any assumptions the user makes
(e.g. "Vanilla Glow lasts 50 h" or "Forest Breeze is unscented").
Step 3: If an assumption is wrong, correct it politely.
Then answer the question in a friendly tone.
Mention only the two candles above; we don't sell anything else.
Use exactly this output format:
Step 1: <your reasoning>
Step 2: <your reasoning>
Step 3: <your reasoning>
Response to user: <final answer>
"""

# Start a chat_history
chat_history = []

# First message
user_input = "I would like to buy 1 Forest Breeze. Can I pay $10?"
full_content = f"System instructions: {system_prompt}\n\n Chat History: {chat_history} \n\n User message: {user_input}"
response = client.models.generate_content(
    model="gemini-2.0-flash",
    contents=full_content
)

# Append to chat history
chat_history.append({"role": "user", "content": user_input})
chat_history.append({"role": "assistant", "content": response.text})

# Second message
user_input = "What did I say I wanted to buy?"
full_content = f"System instructions: {system_prompt}\n\n Chat History: {chat_history} \n\n User message: {user_input}"
response = client.models.generate_content(
    model="gemini-2.0-flash",
    contents=full_content
)

# Append to chat history
chat_history.append({"role": "user", "content": user_input})
chat_history.append({"role": "assistant", "content": response.text})

print(response.text)

What we actually pass to the model is the variable full_content, composed of system_prompt (containing instructions and reasoning guidelines), the memory (chat_history), and the new user_input.

In summary, you can combine instructions, reasoning guidelines, and memory in your prompt to get better results. All of this combined forms one of an agent’s components: Orchestration.

Tools

Models are really good at processing information; however, they are limited by what they have learned from their training data. With access to tools, the models can interact with external systems and access information beyond their training data.

Functions and Function Calling

Functions are self-contained modules of code that accomplish a specific task. They are reusable code that you can use over and over again.

When implementing function calling, you connect a model with functions. You provide a set of predefined functions, and the model determines when to use each function and which arguments are required based on the function’s specifications.

The model doesn’t execute the function itself. It tells you which functions should be called and passes the parameters (inputs) to use with that function based on the user query, and you have to write the code that executes the function. However, if we build an agent, then we can program its workflow to execute the function and answer based on that, or we can use LangChain, which abstracts this code so that you just pass the functions to a pre-built agent. Remember that an agent is a composition of (model + instructions + tools).

In this way, you extend your agent’s capabilities to use external tools, such as calculators, and take actions, such as interacting with external systems using APIs.

Here, I’ll first show you an LLM and a basic function call so you can understand what is happening. It’s great to use LangChain because it simplifies your code, but you should understand what is happening beneath the abstraction. At the end of the post, we’ll build an agent using LangChain.

The process of creating a function call:

- Define the function and a function declaration, which describes the function’s name, parameters, and purpose to the model.

- Call the LLM with the function declarations. In addition, you can pass multiple functions and define whether the model can choose any function you specified, is forced to call exactly one specific function, or can’t use them at all.

- Execute the function code.

- Answer the user.

import os
from typing import List

from google import genai
from google.genai import types

# Shopping list
shopping_list: List[str] = []

# Functions
def add_shopping_items(items: List[str]):
    """Add one or more items to the shopping list."""
    for item in items:
        shopping_list.append(item)
    return {"status": "ok", "added": items}

def list_shopping_items():
    """Return all items currently in the shopping list."""
    return {"shopping_list": shopping_list}

# Function declarations
add_shopping_items_declaration = {
    "name": "add_shopping_items",
    "description": "Add one or more items to the shopping list",
    "parameters": {
        "type": "object",
        "properties": {
            "items": {
                "type": "array",
                "items": {"type": "string"},
                "description": "A list of shopping items to add"
            }
        },
        "required": ["items"]
    }
}

list_shopping_items_declaration = {
    "name": "list_shopping_items",
    "description": "List all current items in the shopping list",
    "parameters": {
        "type": "object",
        "properties": {},
        "required": []
    }
}

# Configuration Gemini
client = genai.Client(api_key=os.getenv("GEMINI_API_KEY"))
tools = types.Tool(function_declarations=[
    add_shopping_items_declaration,
    list_shopping_items_declaration
])
config = types.GenerateContentConfig(tools=[tools])

# User input
user_input = (
    "Hey there! I'm planning to bake a chocolate cake later today, "
    "but I realized I'm out of flour and chocolate chips. "
    "Could you please add these items to my shopping list?"
)

# Send the user input to Gemini
response = client.models.generate_content(
    model="gemini-2.0-flash",
    contents=user_input,
    config=config,
)

print("Model Output Function Call")
print(response.candidates[0].content.parts[0].function_call)
print("\n")

# Execute Function
tool_call = response.candidates[0].content.parts[0].function_call

if tool_call is None:
    # The model answered in plain text instead of requesting a function
    print(response.candidates[0].content.parts[0].text)
elif tool_call.name == "add_shopping_items":
    result = add_shopping_items(**tool_call.args)
    print(f"Function execution result: {result}")
elif tool_call.name == "list_shopping_items":
    result = list_shopping_items()
    print(f"Function execution result: {result}")
else:
    print(f"Unknown function requested: {tool_call.name}")

In this code, we are creating two functions: add_shopping_items and list_shopping_items. We defined the function and the function declaration, configured Gemini, and created a user input. The model had two functions available, but as you can see, it chose add_shopping_items and got the args={'items': ['flour', 'chocolate chips']}, which was exactly what we were expecting. Finally, we executed the function based on the model output, and those items were added to the shopping_list.
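As the number of functions grows, the chain of if/elif branches gets harder to maintain. One common alternative, sketched here as an assumption rather than something from the original notebook, is a dictionary that maps function names to callables. `TOOL_REGISTRY` and `execute_tool_call` are hypothetical names, and the call at the bottom uses hand-written values in place of a real model response so the example runs without an API key.

```python
from typing import Callable, Dict, List

shopping_list: List[str] = []

def add_shopping_items(items: List[str]) -> dict:
    """Add one or more items to the shopping list."""
    shopping_list.extend(items)
    return {"status": "ok", "added": items}

def list_shopping_items() -> dict:
    """Return all items currently in the shopping list."""
    return {"shopping_list": shopping_list}

# Map the function names the model may return to the Python callables.
TOOL_REGISTRY: Dict[str, Callable[..., dict]] = {
    "add_shopping_items": add_shopping_items,
    "list_shopping_items": list_shopping_items,
}

def execute_tool_call(name: str, args: dict) -> dict:
    """Look up the requested function and call it with the model's arguments."""
    if name not in TOOL_REGISTRY:
        return {"status": "error", "message": f"Unknown function: {name}"}
    return TOOL_REGISTRY[name](**args)

# Hand-written stand-in for the name and args of a model function call
result = execute_tool_call("add_shopping_items", {"items": ["flour", "chocolate chips"]})
print(result)
print(execute_tool_call("list_shopping_items", {}))
```

Adding a new tool then means adding one function and one registry entry, with no change to the dispatch logic.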

External data

Sometimes, your model doesn’t have the right information to answer properly or do a task. Access to external data allows us to provide additional data to the model, beyond the foundational training data, eliminating the need to train the model or fine-tune it on this additional data.

Examples of the data:

- Website content

- Structured Data in formats like PDF, Word Docs, CSV, Spreadsheets, etc.

- Unstructured Data in formats like HTML, PDF, TXT, etc.

One of the most common uses of a data store is the implementation of RAGs.

Retrieval Augmented Generation (RAG)

Retrieval Augmented Generation (RAG) means:

- Retrieval -> When the user asks the LLM a question, the RAG system will search an external source to retrieve relevant information for the query.

- Augmented -> The relevant information will be incorporated into the prompt.

- Generation -> The LLM then generates a response based on both the original prompt and the additional context retrieved.

Here, I’ll show you the steps of a standard RAG. We have two pipelines, one for storing and the other for retrieving.

First, we have to load the documents, split them into smaller chunks of text, embed each chunk, and store them in a vector database.

Important:

- Breaking down large documents into smaller chunks is important because it makes retrieval more focused, and LLMs also have context window limits.

- Embeddings create numerical representations for pieces of text. The embedding vector tries to capture the meaning, so text with similar content will have similar vectors.

The second pipeline retrieves the relevant information based on a user query. First, embed the user query and retrieve relevant chunks from the vector store using some calculation, such as basic semantic similarity or maximum marginal relevance (MMR), between the embedded chunks and the embedded user query. Afterward, you can combine the most relevant chunks before passing them into the final LLM prompt. Finally, add this combination of chunks to the LLM instructions, and it can generate an answer based on this new context and the original prompt.
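The two pipelines can be sketched end to end in plain Python. This is a toy under loud assumptions: the "embedding" is just a word-count vector (real systems use a trained embedding model), the "vector store" is a list, similarity is plain cosine similarity rather than MMR, and the shipping-policy document is made up. It stops at building the augmented prompt and does not call an LLM.

```python
import math
import re
from collections import Counter

def chunk_text(text: str, chunk_size: int = 20) -> list:
    """Split text into chunks of at most `chunk_size` words."""
    words = text.split()
    return [" ".join(words[i:i + chunk_size]) for i in range(0, len(words), chunk_size)]

def embed(text: str) -> Counter:
    """Toy 'embedding': word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[word] * b[word] for word in a)
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Storing pipeline: load -> chunk -> embed -> store
documents = [
    "Forest Breeze is a woodsy candle that burns for 40 hours and costs 18 dollars.",
    "Vanilla Glow is a warm vanilla candle that burns for 35 hours and costs 16 dollars.",
    "Orders ship within two business days and returns are accepted for 30 days.",  # made up
]
vector_store = [(chunk, embed(chunk)) for doc in documents for chunk in chunk_text(doc)]

# Retrieval pipeline: embed the query -> rank chunks -> build the augmented prompt
def retrieve(query: str, k: int = 1) -> list:
    query_vector = embed(query)
    ranked = sorted(vector_store,
                    key=lambda item: cosine_similarity(query_vector, item[1]),
                    reverse=True)
    return [chunk for chunk, _ in ranked[:k]]

query = "How long does Vanilla Glow burn?"
context = retrieve(query, k=1)
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {query}"
print(prompt)
```

Note that word counts only match exact words ("burn" does not match "burns"), which is exactly the weakness that learned embeddings are meant to fix.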

In summary, you can give your agent more knowledge and the ability to take action with tools.

Enhancing model performance

Now that we have seen each component of an agent, let’s talk about how we could enhance the model’s performance.

There are some strategies for enhancing model performance:

- In-context learning

- Retrieval-based in-context learning

- Fine-tuning based learning

In-context learning

In-context learning means you “teach” the model how to perform a task by giving examples directly in the prompt, without changing the model’s underlying weights.

This method provides a generalized approach with a prompt, tools, and few-shot examples at inference time, allowing the model to learn “on the fly” how and when to use those tools for a specific task.
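The difference between zero-shot, one-shot, and few-shot prompting is just how many worked examples you place in the prompt. A minimal sketch of a prompt builder makes this visible. `build_few_shot_prompt` is a hypothetical helper, and the second example pair is invented for illustration.

```python
def build_few_shot_prompt(instruction: str, examples: list, query: str) -> str:
    """Assemble instruction + worked examples + the new query into one prompt."""
    parts = [instruction.strip(), ""]
    for number, (transcript, actions) in enumerate(examples, start=1):
        parts += [f"Example {number}", f"Transcript: {transcript}", "Actions:", actions, ""]
    parts += ["Now you", f"Transcript: {query}", "Actions:"]
    return "\n".join(parts)

instruction = "Extract action items (person, task, due date) from the meeting transcript."
examples = [
    ("John will draft the budget by Friday.", "- John: Draft budget (due Friday)"),
    ("Ana books the room tomorrow.", "- Ana: Book room (tomorrow)"),  # invented example
]
query = "Carlos to set up the server by Tuesday."

few_shot = build_few_shot_prompt(instruction, examples, query)      # two examples
zero_shot = build_few_shot_prompt(instruction, [], query)           # no examples
print(few_shot)
```

Passing a single pair gives a one-shot prompt. The model's weights never change; only the text it sees does.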

There are some types of in-context learning:

We already saw examples of Zero-shot, CoT, and ReAct in the previous sections, so now here is an example of one-shot learning:

user_query = "Carlos to set up the server by Tuesday, Maria will finalize the design specs by Thursday, and let's schedule the demo for the following Monday."

system_prompt = f"""You are a helpful assistant that reads a block of meeting transcript and extracts clear action items.
For each item, list the person responsible, the task, and its due date or timeframe in bullet-point form.

Example 1
Transcript:
'John will draft the budget by Friday. Sarah volunteers to review the marketing deck next week. We need to send invites for the kickoff.'
Actions:
- John: Draft budget (due Friday)
- Sarah: Review marketing deck (next week)
- Team: Send kickoff invites

Now you
Transcript: {user_query}
Actions:
"""

# Send the user input to Gemini
response = client.models.generate_content(
    model="gemini-2.0-flash",
    contents=system_prompt,
)

print(response.text)

Here is the output based on your query and the example:

Retrieval-based in-context learning

Retrieval-based in-context learning means the system retrieves external context (like documents) and adds this relevant content to the model’s prompt at inference time to enhance its response.

RAGs are important because they reduce hallucinations and enable LLMs to answer questions about specific domains or private data (like a company’s internal documents) without needing to be retrained.

If you missed it, go back to the last section, where I explained RAG in detail.

Fine-tuning-based learning

Fine-tuning-based learning means you train the model further on a specific dataset to “internalize” new behaviors or knowledge. The model’s weights are updated to reflect this training. This method helps the model understand when and how to apply certain tools before receiving user queries.

There are some common techniques for fine-tuning. Here are a few examples so you can search to study further.

Analogy to compare the three strategies

Imagine you are training a tour guide to receive a group of people in Iceland.

- In-Context Learning: you give the tour guide a few handwritten notes with some examples like “If someone asks about Blue Lagoon, say this. If they ask about local food, say that”. The guide doesn’t know the city deeply, but they can follow your examples as long as the tourists stay within those topics.

- Retrieval-Based Learning: you equip the guide with a phone + map + access to Google search. The guide doesn’t need to memorize everything but knows how to look up information immediately when asked.

- Fine-Tuning: you give the guide months of immersive training in the city. The knowledge is already in their head when they start giving tours.

Where does LangChain come in?

LangChain is a framework designed to simplify the development of applications powered by large language models (LLMs).

Within the LangChain ecosystem, we have:

- LangChain: The basic framework for working with LLMs. It allows you to switch between providers or combine components when building applications without altering the underlying code. For example, you could switch between Gemini and GPT models easily. It also makes the code simpler. In the next section, I’ll compare the code we built in the section on function calling with how we could do the same thing with LangChain.

- LangGraph: For building, deploying, and managing agent workflows.

- LangSmith: For debugging, testing, and monitoring your LLM applications.

While these abstractions simplify development, understanding their underlying mechanics by checking the documentation is essential: the convenience these frameworks provide comes with hidden implementation details that can impact performance, debugging, and customization options if not properly understood.

Beyond LangChain, you might also consider OpenAI’s Agents SDK or Google’s Agent Development Kit (ADK), which offer different approaches to building agent systems.

Let’s build one agent using LangChain

Here, unlike the code in the “Function Calling” section, we don’t have to create function declarations manually. Using the @tool decorator above our functions, LangChain automatically converts them into structured descriptions that are passed to the model behind the scenes.

ChatPromptTemplate organizes information in your prompt, creating consistency in how information is presented to the model. It combines system instructions + the user’s query + agent’s working memory. This way, the LLM always gets information in a format it can easily work with.

The MessagesPlaceholder component reserves a spot in the prompt template, and the agent_scratchpad is the agent’s working memory. It contains the history of the agent’s thoughts, tool calls, and the results of those calls. This allows the model to see its previous reasoning steps and tool outputs, enabling it to build on past actions and make informed decisions.

Another key difference is that we don’t have to implement the logic with conditional statements to execute the functions. The create_openai_tools_agent function creates an agent that can reason about which tools to use and when. In addition, the AgentExecutor orchestrates the process, managing the conversation between the user, agent, and tools. The agent determines which tool to use through its reasoning process, and the executor takes care of the function execution and handling the result.

# Imports (assumed here for the LangChain 0.x package layout; check the notebook for the exact ones)
from typing import List

from langchain.agents import AgentExecutor, create_openai_tools_agent
from langchain_core.prompts import ChatPromptTemplate, MessagesPlaceholder
from langchain_core.tools import tool
from langchain_google_genai import ChatGoogleGenerativeAI

# Shopping list
shopping_list = []

# Functions
@tool
def add_shopping_items(items: List[str]):
    """Add one or more items to the shopping list."""
    for item in items:
        shopping_list.append(item)
    return {"status": "ok", "added": items}

@tool
def list_shopping_items():
    """Return all items currently in the shopping list."""
    return {"shopping_list": shopping_list}

# Configuration
llm = ChatGoogleGenerativeAI(
    model="gemini-2.0-flash",
    temperature=0
)
tools = [add_shopping_items, list_shopping_items]
prompt = ChatPromptTemplate.from_messages([
    ("system", "You are a helpful assistant that helps manage shopping lists. "
               "Use the available tools to add items to the shopping list "
               "or list the current items when requested by the user."),
    ("human", "{input}"),
    MessagesPlaceholder(variable_name="agent_scratchpad")
])

# Create the Agent
agent = create_openai_tools_agent(llm, tools, prompt)
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# User input
user_input = (
    "Hey there! I'm planning to bake a chocolate cake later today, "
    "but I realized I'm out of flour and chocolate chips. "
    "Could you please add these items to my shopping list?"
)

# Send the user input to Gemini
response = agent_executor.invoke({"input": user_input})

When we use verbose=True, we can see the reasoning and actions while the code is being executed.

And the final result:

When should you build an agent?

Remember that we discussed agents’ definitions in the first section and saw that they operate autonomously to perform tasks. It’s cool to create agents, even more so because of the hype. However, building an agent is not always the most efficient solution, and a deterministic solution may suffice.

A deterministic solution means that the system follows clear and predefined rules without interpretation. This approach is better when the task is well-defined, stable, and benefits from clarity. In addition, it is easier to test and debug, and it’s good when you need to know exactly what is happening given an input, with no “black box”. Anthropic’s guide shows many different LLM workflows where LLMs and tools are orchestrated through predefined code paths.
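As a small illustration of a deterministic solution, here is a sketch that answers candle price questions with a plain lookup, with no model involved. It reuses the candle names and prices from the earlier prompt example; `quote_price` is a hypothetical helper, and a real shop would need more robust matching.

```python
# Prices taken from the candle shop example used earlier in this post
CANDLES = {"forest breeze": 18, "vanilla glow": 16}

def quote_price(message: str) -> str:
    """Answer a price question using fixed rules: same input, same output."""
    text = message.lower()
    for name, price in CANDLES.items():
        if name in text:
            return f"{name.title()} costs ${price}."
    return "Sorry, we only sell Forest Breeze and Vanilla Glow."

print(quote_price("Hi! I'd like to buy the Vanilla Glow. Is it $10?"))
# Vanilla Glow costs $16.
```

This is trivial to unit test and never hallucinates a price, but it cannot handle phrasing or requests outside its rules, which is the trade-off to weigh before reaching for an agent.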

The best practices guides for building agents from OpenAI and Anthropic recommend first finding the simplest solution possible and only increasing the complexity if needed.

When you are evaluating whether you should build an agent, consider the following:

- Complex decisions: when dealing with processes that require nuanced judgment, handling exceptions, or making decisions that rely heavily on context, such as determining whether a customer is eligible for a refund.

- Difficult-to-maintain rules: if you have workflows built on complicated sets of rules that are constantly changing and are difficult to update or maintain without the risk of making errors.

- Dependence on unstructured data: if you have tasks that require understanding written or spoken language, getting insights from documents (PDFs, emails, images, audio, HTML pages, and so on), or chatting with users naturally.

Conclusion

We saw that agents are systems designed to accomplish tasks on a human’s behalf independently. These agents are composed of instructions, the model, and tools to access external data and take actions. There are some ways we could enhance our model: improving the prompt with examples, using RAG to give more context, or fine-tuning it. When building an agent or LLM workflow, LangChain can help simplify the code, but you should understand what the abstractions are doing. Always keep in mind that simplicity is the best way to build agentic systems, and only follow a more complex approach if needed.

Next Steps

If you are new to this content, I recommend that you digest all of this first, read it a few times, and also read the full articles I recommended so you have a solid foundation. Then, try to start building something, like a simple application, to start practicing and creating the bridge between this theoretical content and practice. Beginning to build is the best way to learn these concepts.

As I told you before, I have a simple step-by-step guide for creating a chat in Streamlit and deploying it. There is also a video on YouTube explaining this guide in Portuguese. It is a good starting point if you haven’t done anything before.

I hope you enjoyed this tutorial.

You can find all the code for this project on my GitHub or Google Colab.


Sources

Building effective agents – Anthropic

Agents – Google

A practical guide to building agents – OpenAI

Chain of Thought Prompting Elicits Reasoning in Large Language Models – Google Research

REACT: SYNERGIZING REASONING AND ACTING IN LANGUAGE MODELS – Google Research

Small Language Models: A Guide With Examples – DataCamp