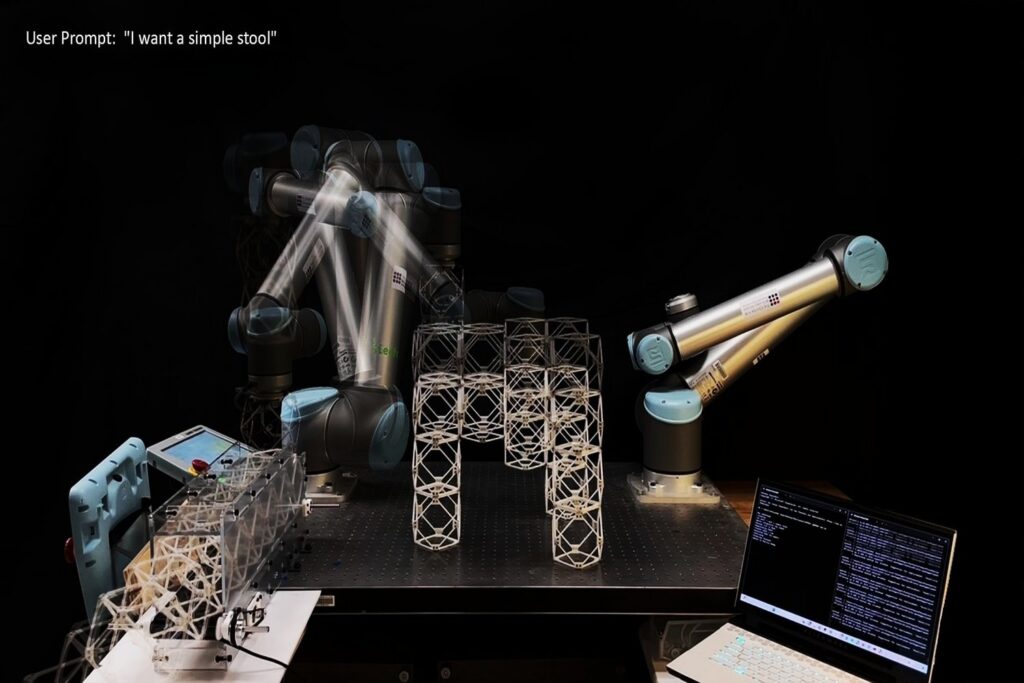

Generative AI and robotics are moving us ever closer to the day when we can ask for an object and have it created within a few minutes. In fact, MIT researchers have developed a speech-to-reality system, an AI-driven workflow that allows them to provide input to a robotic arm and “speak objects into existence,” creating things like furniture in as little as five minutes.

With the speech-to-reality system, a robotic arm mounted on a table is able to receive spoken input from a human, such as “I want a simple stool,” and then construct the object out of modular components. To date, the researchers have used the system to create stools, shelves, chairs, a small table, and even decorative items such as a dog statue.

“We’re connecting natural language processing, 3D generative AI, and robotic assembly,” says Alexander Htet Kyaw, an MIT graduate student and Morningside Academy for Design (MAD) fellow. “These are rapidly advancing areas of research that haven’t been brought together before in a way that you can actually make physical objects just from a simple speech prompt.”

Speech to Reality: On-Demand Production using 3D Generative AI, and Discrete Robotic Assembly

The idea started when Kyaw, a graduate student in the departments of Architecture and Electrical Engineering and Computer Science, took Professor Neil Gershenfeld’s course, “How to Make Almost Anything.” In that class, he built the speech-to-reality system. He continued working on the project at the MIT Center for Bits and Atoms (CBA), directed by Gershenfeld, collaborating with graduate students Se Hwan Jeon of the Department of Mechanical Engineering and Miana Smith of CBA.

The speech-to-reality system begins with speech recognition that processes the user’s request using a large language model, followed by 3D generative AI that creates a digital mesh representation of the object, and a voxelization algorithm that breaks down the 3D mesh into assembly components.
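To make the voxelization step concrete, here is a minimal Python sketch. It is not the researchers’ algorithm, which the article does not describe. It assumes the object is already available as a cloud of sample points, and it simply bins each point into a cubic cell. The function name `voxelize_points` and the `voxel_size` parameter are hypothetical.

```python
import math
from typing import Iterable, Set, Tuple

Voxel = Tuple[int, int, int]


def voxelize_points(
    points: Iterable[Tuple[float, float, float]], voxel_size: float
) -> Set[Voxel]:
    """Map sample points to the set of integer grid cells they occupy.

    Assumption: the object is given as sample points rather than a mesh;
    each cell is a cube with edge length `voxel_size`.
    """
    if voxel_size <= 0:
        raise ValueError("voxel_size must be positive")
    return {
        (
            math.floor(x / voxel_size),
            math.floor(y / voxel_size),
            math.floor(z / voxel_size),
        )
        for x, y, z in points
    }


# Illustrative data only: three sample points, 10 cm cells.
sample_points = [(0.01, 0.02, 0.03), (0.04, 0.04, 0.04), (0.12, 0.0, 0.0)]
occupied = voxelize_points(sample_points, voxel_size=0.1)
print(sorted(occupied))
```

Each occupied cell would then correspond to one modular component for the robot to place. A real pipeline would also need to sample the mesh surface and interior densely enough that no cell is missed.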

After that, geometric processing modifies the AI-generated assembly to account for fabrication and physical constraints associated with the real world, such as the number of components, overhangs, and connectivity of the geometry. This is followed by creation of a feasible assembly sequence and automated path planning for the robotic arm to assemble physical objects from user prompts.
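The checks named above can be illustrated with a short Python sketch. It rests on assumptions the article does not confirm: components are unit cubes on an integer grid, cubes attach only face to face, `z == 0` is the table surface, and the build order is a simple greedy bottom-up pass. All function names are hypothetical, and this is not the team’s published method.

```python
from collections import deque
from typing import List, Set, Tuple

Voxel = Tuple[int, int, int]
FACE_NEIGHBORS = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]


def is_connected(voxels: Set[Voxel]) -> bool:
    """Breadth-first search: True if all voxels form one face-connected part."""
    if not voxels:
        return True
    start = next(iter(voxels))
    seen = {start}
    queue = deque([start])
    while queue:
        x, y, z = queue.popleft()
        for dx, dy, dz in FACE_NEIGHBORS:
            neighbor = (x + dx, y + dy, z + dz)
            if neighbor in voxels and neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    return len(seen) == len(voxels)


def find_overhangs(voxels: Set[Voxel]) -> List[Voxel]:
    """Voxels above the table with no voxel directly beneath them."""
    return sorted(
        v for v in voxels if v[2] > 0 and (v[0], v[1], v[2] - 1) not in voxels
    )


def assembly_sequence(voxels: Set[Voxel]) -> List[Voxel]:
    """Greedy order: lowest layers first, and every voxel must rest on the
    table or touch an already placed voxel when it is added."""
    remaining = sorted(voxels, key=lambda v: (v[2], v[1], v[0]))
    placed: Set[Voxel] = set()
    order: List[Voxel] = []
    while remaining:
        for i, (x, y, z) in enumerate(remaining):
            touches_placed = any(
                (x + dx, y + dy, z + dz) in placed for dx, dy, dz in FACE_NEIGHBORS
            )
            if z == 0 or touches_placed:
                placed.add((x, y, z))
                order.append((x, y, z))
                del remaining[i]
                break
        else:
            raise ValueError("no attachable voxel: structure floats or is disconnected")
    return order


# Illustrative data only: two legs with a three-cube seat across the top.
stool = {(0, 0, 0), (2, 0, 0), (0, 0, 1), (1, 0, 1), (2, 0, 1)}
print(is_connected(stool), find_overhangs(stool), assembly_sequence(stool))
```

In this toy stool the middle seat cube is reported as an overhang, yet the sequence stays feasible because that cube attaches sideways to a neighbor already in place. Path planning for the arm itself is outside the scope of this sketch.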

By leveraging natural language, the system makes design and manufacturing more accessible to people without expertise in 3D modeling or robotic programming. And, unlike 3D printing, which can take hours or days, this system builds within minutes.

“This project is an interface between humans, AI, and robots to co-create the world around us,” Kyaw says. “Imagine a scenario where you say ‘I want a chair,’ and within five minutes a physical chair materializes in front of you.”

The team has immediate plans to improve the weight-bearing capability of the furniture by changing the means of connecting the cubes from magnets to more robust connections.

“We’ve also developed pipelines for converting voxel structures into feasible assembly sequences for small, distributed mobile robots, which could help translate this work to structures at any size scale,” Smith says.

The purpose of using modular components is to eliminate the waste that goes into making physical objects by disassembling and then reassembling them into something different, for instance turning a sofa into a bed when you no longer need the sofa.

Because Kyaw also has experience using gesture recognition and augmented reality to interact with robots in the fabrication process, he is currently working on incorporating both speech and gestural control into the speech-to-reality system.

Leaning into his memories of the replicator in the “Star Trek” franchise and the robots in the animated film “Big Hero 6,” Kyaw explains his vision.

“I want to increase access for people to make physical objects in a fast, accessible, and sustainable manner,” he says. “I’m working toward a future where the very essence of matter is truly in your control. One where reality can be generated on demand.”

The team presented their paper “Speech to Reality: On-Demand Production using Natural Language, 3D Generative AI, and Discrete Robotic Assembly” at the Association for Computing Machinery (ACM) Symposium on Computational Fabrication (SCF ’25) held at MIT on Nov. 21.