that linear models can be… well, stiff. Have you ever looked at a scatter plot and realized a straight line simply isn’t going to cut it? We’ve all been there.

Real-world data is always tricky. Most of the time, it feels like the exception is the rule. The data you get in your job is nothing like those beautiful linear datasets that we used during years of training in academia.

For example, you’re looking at something like “Energy Demand vs. Temperature.” It’s not a line; it’s a curve. Usually, our first instinct is to reach for Polynomial Regression. But that’s a trap!

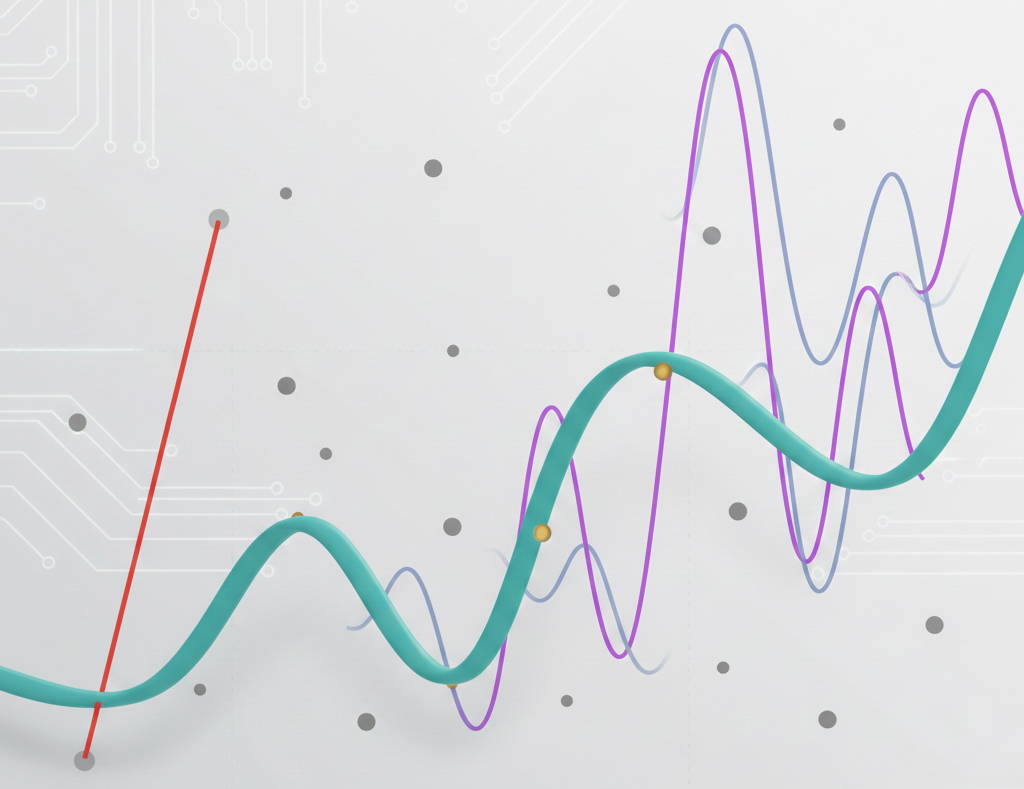

If you’ve ever seen a model curve go wild at the edges of your graph, you’ve witnessed the “Runge Phenomenon.” High-degree polynomials are like a toddler with a crayon: they’re too flexible and have no discipline.

That’s why I’m going to show you this option called Splines. They’re a neat solution: more flexible than a line, but far more disciplined than a polynomial.

Splines are mathematical functions defined piecewise by polynomials, and used to fit a smooth curve.

Instead of trying to fit one complex equation to your entire dataset, you break the data into segments at points called knots. Each segment gets its own simple polynomial, and they’re all stitched together so smoothly you can’t even see the seams.

The Problem with Polynomials

Imagine we have a non-linear trend, and we apply a polynomial x² or x³ to it. It looks okay locally, but then we look at the edges of our data, and the curve goes way off. This is Runge’s Phenomenon [2]: high-degree polynomials tend to oscillate strongly near the edges of the interval. And because a polynomial is one global equation, one weird data point at one end can pull the entire curve out of whack at the other end.
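To make the edge problem concrete, here is a minimal sketch using the classic textbook function 1 / (1 + 25x²) on [-1, 1]. The function, the degrees, and all variable names are illustrative assumptions and do not come from the dataset used later in this article. It interpolates the function at evenly spaced points and measures the worst-case error as the degree grows.

```python
import numpy as np
from numpy.polynomial import Polynomial


def runge(x):
    """Classic test function used to illustrate Runge's phenomenon."""
    return 1.0 / (1.0 + 25.0 * x**2)


# Dense grid used only to measure how far the polynomial strays from the truth
x_dense = np.linspace(-1, 1, 1001)

max_errors = {}
for degree in (4, 8, 16):
    # degree + 1 evenly spaced points -> the polynomial passes through all of them
    x_nodes = np.linspace(-1, 1, degree + 1)
    poly = Polynomial.fit(x_nodes, runge(x_nodes), deg=degree)
    max_errors[degree] = np.max(np.abs(poly(x_dense) - runge(x_dense)))
    print(f"degree={degree:2d}  max abs error={max_errors[degree]:.3f}")
```

The error gets worse, not better, as the degree increases, and the largest deviations sit near the two ends of the interval.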

Why Splines are the “Just Right” Choice

Splines don’t try to fit one giant equation to everything. Instead, they divide your data into segments using points called knots. Using knots has some advantages.

- Local Control: What happens in one segment stays in that segment. Because these chunks are local, a weird data point at one end of your graph won’t break the fit at the other end.

- Smoothness: They use “B-splines” (Basis splines) to ensure that where segments meet, the curve is perfectly smooth.

- Stability: Unlike polynomials, they don’t go wild at the boundaries.

Okay. Enough talk, now let’s implement this solution.

Implementing it with Scikit-Learn

Scikit-Learn’s SplineTransformer is the go-to choice for this. It turns a single numeric feature into multiple basis features that a simple linear model can then use to learn complex, non-linear shapes.

Let’s import some modules.

import numpy as np

import matplotlib.pyplot as plt

from sklearn.preprocessing import SplineTransformer

from sklearn.linear_model import Ridge

from sklearn.pipeline import make_pipeline

from sklearn.model_selection import GridSearchCV

Next, we create some curved oscillating data.

# 1. Create some 'wiggly' synthetic data (e.g., seasonal sales)

rng = np.random.RandomState(42)

X = np.sort(rng.rand(100, 1) * 10, axis=0)

y = np.sin(X).ravel() + rng.normal(0, 0.1, X.shape[0])

# Plot the data

plt.figure(figsize=(12, 5))

plt.scatter(X, y, color='gray', alpha=0.5, label='Data')

plt.legend()

plt.title("Data")

plt.show()

Okay. Now we will create a pipeline that runs the SplineTransformer with the default settings, followed by a Ridge Regression.

# 2. Build a pipeline: Splines + Linear Model

# n_knots=5 (default) creates 4 segments; degree=3 makes it a cubic spline

model = make_pipeline(

    SplineTransformer(n_knots=5, degree=3),

    Ridge(alpha=0.1)

)

Next, we will tune the number of knots for our model. We use GridSearchCV to run multiple versions of the model, testing different knot counts until it finds the one that performs best on our data.

# We tune 'n_knots' to find the best fit

param_grid = {'splinetransformer__n_knots': range(3, 12)}

grid = GridSearchCV(model, param_grid, cv=5)

grid.fit(X, y)

print(f"Best knot count: {grid.best_params_['splinetransformer__n_knots']}")

Best knot count: 8

Then, we retrain our spline model with the best knot count, predict, and plot the data. Also, let us understand what we’re doing here with this quick breakdown of the SplineTransformer class arguments:

- `n_knots`: The number of joints in the curve. The more you have, the more flexible the curve gets.

- `degree`: This defines the “smoothness” of the segments. It refers to the degree of the polynomial used between knots (1 is a line; 2 is smoother; 3 is the default).

- `knots`: This one tells the model where to place the joints. For example, `'uniform'` separates the curve into equal spaces, while `'quantile'` allocates more knots where the data is denser.
  - Tip: Use `'quantile'` if your data is clustered.

- `extrapolation`: Tells the model what it should do when it encounters data outside the range it saw during training.
  - Tip: Use `'periodic'` for cyclic data, such as calendar or clock.

- `include_bias`: Whether to keep the implicit “bias” term. The B-spline basis columns sum to one for every sample, so together they already act like an intercept; setting this to `False` drops the last basis column. If you are using a `LinearRegression` or `Ridge` model later in your pipeline, these models usually have their own `fit_intercept=True`, so you can often set this to `False` to avoid redundancy.

# 2. Build the optimized Spline

model = make_pipeline(

    SplineTransformer(n_knots=8,

                      degree=3,

                      knots='uniform',

                      extrapolation='constant',

                      include_bias=False),

    Ridge(alpha=0.1)

).fit(X, y)

# 3. Predict and Visualize

y_plot = model.predict(X)

# Assumption: the degree-20 polynomial fit is not defined in this section;

# PolynomialFeatures + Ridge is one way to produce it for the comparison

from sklearn.preprocessing import PolynomialFeatures

poly_model = make_pipeline(PolynomialFeatures(degree=20), Ridge(alpha=0.1)).fit(X, y)

y_plot_poly = poly_model.predict(X)

# Plot

plt.figure(figsize=(12, 5))

plt.scatter(X, y, color='gray', alpha=0.5, label='Data')

plt.plot(X, y_plot, color='teal', linewidth=3, label='Spline Model')

plt.plot(X, y_plot_poly, color='purple', linewidth=2, label='Polynomial Fit (Degree 20)')

plt.legend()

plt.title("Splines: Flexible yet Disciplined")

plt.show()

Here is the result. With splines, we have better control and a smoother model, escaping the problem at the ends.

We are comparing a polynomial model of degree=20 with the spline model. One can argue that lower degrees can do a much better job of modeling this data, and they would be correct. I have tested up to the 13th degree, and it fits well with this dataset.

However, that is exactly the point of this article. When the model is not fitting the data well and we need to keep increasing the polynomial degree, we will certainly fall into the wild edges problem.

Real-Life Applications

Where would you actually use this in business?

- Time-Series Cycles: Use `extrapolation='periodic'` for features like “hour of day” or “month of year.” It ensures the model knows that 11:59 PM is right next to 12:01 AM. With this argument, we tell the `SplineTransformer` that the end of our cycle (hour 23) should wrap around and meet the beginning (hour 0). Thus, the spline ensures that the slope and value at the end of the day perfectly match the start of the next day.

- Dose-Response in Medicine: Modeling how a drug affects a patient. Most drugs follow a non-linear curve where the benefit eventually levels off (saturation) or, worse, turns into toxicity. Splines are the “gold standard” here because they can map these complex biological shifts without forcing the data into a rigid shape.

- Income vs. Experience: Salary usually grows quickly early on and then plateaus; splines capture this “bend” perfectly.

Before You Go

We’ve covered a lot here, from why polynomials can be a “wild” choice to how periodic splines solve the midnight gap. Here’s a quick wrap-up to keep in your back pocket:

- The Golden Rule: Use Splines when a straight line is too simple, but a high-degree polynomial starts oscillating and overfitting.

- Knots are Key: Knots are the “joints” of your model. Finding the right number via `GridSearchCV` is the difference between a smooth curve and a jagged mess.

- Periodic Power: For any feature that cycles (hours, days, months), use `extrapolation='periodic'`. It ensures the model understands that the end of the cycle flows perfectly back into the beginning.

- Feature Engineering > Complex Models: Often, a simple `Ridge` regression combined with `SplineTransformer` can match or outperform a complex “Black Box” model while remaining much easier to explain to your boss.

If you liked this content, find more about my work and my contacts on my website.

GitHub Repository

Here is the complete code of this exercise, and some extras.

https://github.com/gurezende/Studying/blob/master/Python/sklearn/SplineTransformer.ipynb

References

[1. SplineTransformer Documentation] https://scikit-learn.org/stable/modules/generated/sklearn.preprocessing.SplineTransformer.html

[2. Runge’s Phenomenon] https://en.wikipedia.org/wiki/Runge%27s_phenomenon

[3. Make Pipeline Docs] https://scikit-learn.org/stable/modules/generated/sklearn.pipeline.make_pipeline.html