I’ll give a brief introduction to the MapReduce programming model. Hopefully, after reading this, you’ll leave with a solid intuition of what MapReduce is, the role it plays in scalable data processing, and how to recognize when it can be applied to optimize a computational task.

Terminology & Helpful Background:

Below are some terms/concepts that may be useful to know before reading the rest of this article.

What’s MapReduce?

Introduced by a couple of developers at Google in the early 2000s, MapReduce is a programming model that enables large-scale data processing to be carried out in a parallel and distributed manner across a compute cluster consisting of many commodity machines.

The MapReduce programming model is ideal for optimizing compute tasks that can be broken down into independent transformations on distinct partitions of the input data. These transformations are typically followed by grouped aggregation.

The programming model breaks up the computation into the following two primitives:

- Map: given a partition of the input data to process, parse the input data for each of its individual records. For each record, apply some user-defined data transformation to extract a set of intermediate key-value pairs.

- Reduce: for each distinct key in the set of intermediate key-value pairs, aggregate the values in some manner to produce a smaller set of key-value pairs. Typically, the output of the reduce phase is a single key-value pair for each distinct key.

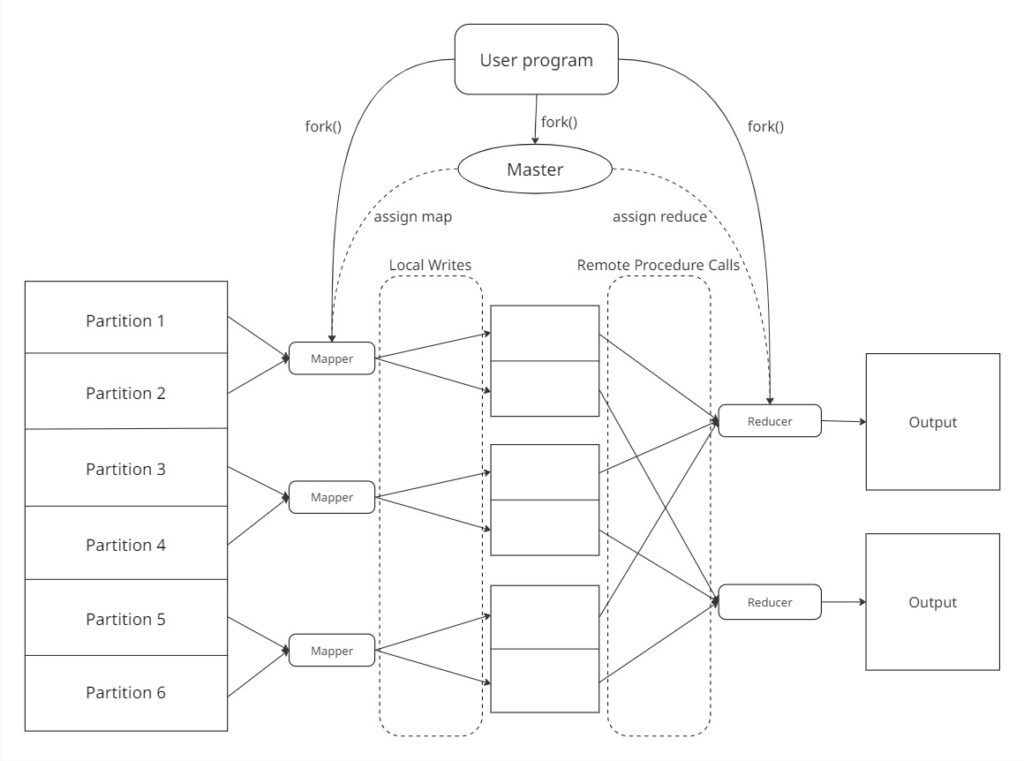
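To make the two primitives concrete, here is a minimal single-process sketch in plain Python that counts words. The names `map_fn`, `reduce_fn`, and `run_map_reduce` are made up for illustration and do not come from any MapReduce library; a real framework would run the map and reduce calls on different machines.

```python
from collections import defaultdict

def map_fn(record):
    """Map: turn one input record (a line of text) into intermediate (key, value) pairs."""
    for word in record.lower().split():
        yield word, 1

def reduce_fn(key, values):
    """Reduce: aggregate all values observed for one key into a single pair."""
    return key, sum(values)

def run_map_reduce(records):
    # Group intermediate values by key (the hand-off between the two phases).
    grouped = defaultdict(list)
    for record in records:
        for key, value in map_fn(record):
            grouped[key].append(value)
    # Apply reduce once per distinct key.
    return dict(reduce_fn(key, values) for key, values in grouped.items())

counts = run_map_reduce(["the quick fox", "the lazy dog", "The end"])
print(counts)
```

Only `map_fn` and `reduce_fn` are specific to word counting; the grouping logic in `run_map_reduce` is the part a framework would provide.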

In this MapReduce framework, computation is distributed among a compute cluster of N machines with homogeneous commodity hardware, where N may be in the hundreds or thousands in practice. One of these machines is designated as the master, and all the other machines are designated as workers.

- Master: handles task scheduling by assigning map and reduce tasks to available workers.

- Worker: handles the map and reduce tasks it’s assigned by the master.

Each of the tasks within the map or reduce phase may be executed in a parallel and distributed manner across the available workers in the compute cluster. However, the map and reduce phases are executed sequentially: all map tasks must complete before the reduce phase kicks off.
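The ordering constraint can be sketched with a thread pool standing in for the workers and the main thread standing in for the master. This is only an analogy under that assumption (threads in one process, not machines in a cluster), and `map_task` and `reduce_task` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor

def map_task(chunk):
    """One map task: emit a (word, 1) pair for each word in its chunk of input."""
    return [(word, 1) for word in chunk.split()]

def reduce_task(item):
    """One reduce task: sum the values collected for a single key."""
    key, values = item
    return key, sum(values)

chunks = ["a b", "b c", "a c c"]

with ThreadPoolExecutor(max_workers=3) as workers:
    # Map phase: tasks run in parallel; list() blocks until every one has finished.
    map_outputs = list(workers.map(map_task, chunks))

    # Group intermediate values by key before any reduce task is scheduled.
    grouped = {}
    for pairs in map_outputs:
        for key, value in pairs:
            grouped.setdefault(key, []).append(value)

    # Reduce phase: starts only after the map phase above is complete.
    results = dict(workers.map(reduce_task, grouped.items()))

print(results)
```

Tasks within a phase are independent of one another, which is what lets them run in parallel; the only synchronization point is the boundary between the two phases.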

That all probably sounds pretty abstract, so let’s go through some motivation and a concrete example of how the MapReduce framework can be applied to optimize common data processing tasks.

Motivation & Simple Example

The MapReduce programming model is typically best for large batch processing tasks that require executing independent data transformations on distinct groups of the input data, where each group is typically identified by a unique value of a keyed attribute.

You can think of this framework as an extension to the split-apply-combine pattern in the context of data analysis, where map encapsulates the split-apply logic and reduce corresponds with the combine. The critical difference is that MapReduce can be applied to achieve parallel and distributed implementations for generic computational tasks outside of data wrangling and statistical computing.

One of the motivating data processing tasks that inspired Google to create the MapReduce framework was to build indexes for its search engine.

We can express this task as a MapReduce job using the following logic:

- Divide the corpus to search through into separate partitions/documents.

- Define a map() function to apply to each document of the corpus, which will emit <word, documentID> pairs for every word that is parsed in the partition.

- For each distinct key in the set of intermediate <word, documentID> pairs produced by the mappers, apply a user-defined reduce() function that will combine the document IDs associated with each word to produce <word, list(documentIDs)> pairs.
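The indexing logic above can be sketched in a few lines of plain Python. The three-document corpus and the function names are invented for illustration, and the whole thing runs in one process rather than on a cluster.

```python
from collections import defaultdict

def map_fn(doc_id, text):
    """Emit a (word, doc_id) pair for every word parsed from one document."""
    for word in text.lower().split():
        yield word, doc_id

def reduce_fn(word, doc_ids):
    """Combine the document IDs seen for one word into a sorted, de-duplicated list."""
    return word, sorted(set(doc_ids))

def build_index(corpus):
    intermediate = defaultdict(list)
    for doc_id, text in corpus.items():
        for word, emitted_id in map_fn(doc_id, text):
            intermediate[word].append(emitted_id)
    return dict(reduce_fn(word, ids) for word, ids in intermediate.items())

# Hypothetical corpus: document ID -> document text.
corpus = {
    "doc1": "map reduce at scale",
    "doc2": "reduce the data",
    "doc3": "map the data",
}
index = build_index(corpus)
print(index["reduce"])
```

Looking up a word in `index` then returns every document that contains it, which is the basic operation a search engine needs.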

For additional examples of data processing tasks that fit well with the MapReduce framework, check out the original paper.

MapReduce Walkthrough

There are numerous other great resources that walk through how the MapReduce algorithm works. However, I don’t feel that this article would be complete without one. Of course, refer to the original paper for the “source of truth” of how the algorithm works.

First, some basic configuration is required to prepare for execution of a MapReduce job.

- Implement map() and reduce() to handle the data transformation and aggregation logic specific to the computational task.

- Configure the block size of the input partition passed to each map task. The MapReduce library will then determine the number of map tasks, M, that will be created and executed.

- Configure the number of reduce tasks, R, that will be executed. Additionally, the user may specify a deterministic partitioning function to control how key-value pairs are assigned to partitions. In practice, this partitioning function is typically a hash of the key (i.e. hash(key) mod R).

- Typically, it’s desirable to have fine task granularity. In other words, M and R should be much larger than the number of machines in the compute cluster. Since the master node in a MapReduce cluster assigns tasks to workers based on availability, partitioning the processing workload into many tasks decreases the chances that any single worker node will be overloaded.
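As a rough sketch of the partitioning function, the snippet below assigns keys to reduce tasks with hash(key) mod R. It uses `zlib.crc32` as the hash, which is my choice for the example and not something the original design prescribes; the value of `R` and the `user-…` keys are likewise made up.

```python
import zlib
from collections import Counter

def partition(key, num_reduce_tasks):
    """Deterministically assign a key to one of R reduce tasks: hash(key) mod R."""
    # zlib.crc32 is used instead of the built-in hash() because Python salts
    # string hashes per process, so hash() would not agree across workers.
    return zlib.crc32(key.encode("utf-8")) % num_reduce_tasks

R = 8  # assumed number of reduce tasks, for illustration only
keys = [f"user-{i}" for i in range(1000)]
assignments = Counter(partition(key, R) for key in keys)

for task_id in range(R):
    print(f"reduce task {task_id}: {assignments[task_id]} keys")
```

Because the function is deterministic, every mapper sends a given key to the same reduce task, so all values for that key end up in one place.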

Once the required configuration steps are completed, the MapReduce job can be executed. The execution process of a MapReduce job can be broken down into the following steps:

- Partition the input data into M partitions, where each partition is associated with a map worker.

- Each map worker applies the user-defined map() function to its partition of the data. The execution of each of these map() functions on each map worker may be carried out in parallel. The map() function will parse the input records from its data partition and extract all key-value pairs from each input record.

- The map worker will sort these key-value pairs in increasing key order. Optionally, if there are multiple key-value pairs for a single key, the values for the key may be combined into a single key-value pair.

- These key-value pairs are then written to R separate files stored on the local disk of the worker. Each file corresponds to a single reduce task. The locations of these files are registered with the master.

- When all the map tasks have finished, the master notifies the reducer workers of the locations of the intermediate files associated with each reduce task.

- Each reduce task uses remote procedure calls to read the intermediate files associated with the task, which are stored on the local disks of the mapper workers.

- The reduce task then iterates over the keys in the intermediate output and applies the user-defined reduce() function to each distinct key, along with its associated set of values.

- Once all the reduce workers have completed, the master notifies the user program that the MapReduce job is complete. The output of the MapReduce job will be available in the R output files stored in the distributed file system. Users may access these files directly, or pass them as input files to another MapReduce job for further processing.
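The steps above can be simulated in a single process. In this sketch, in-memory lists stand in for the intermediate files and for the remote reads, `zlib.crc32` stands in for the partitioning hash, and all function names are hypothetical; it is meant to show the data flow, not to reproduce the real implementation.

```python
import zlib
from itertools import groupby
from operator import itemgetter

def map_fn(record):
    for word in record.split():
        yield word, 1

def reduce_fn(key, values):
    return key, sum(values)

def split_input(records, num_map_tasks):
    """Divide the input into M partitions, one per map task."""
    return [records[i::num_map_tasks] for i in range(num_map_tasks)]

def run_map_task(partition_records, num_reduce_tasks):
    """Apply map(), sort the pairs by key, and bucket them into R 'files'."""
    buckets = [[] for _ in range(num_reduce_tasks)]
    pairs = sorted(kv for record in partition_records for kv in map_fn(record))
    for key, value in pairs:
        buckets[zlib.crc32(key.encode("utf-8")) % num_reduce_tasks].append((key, value))
    return buckets

def run_reduce_task(task_id, all_map_outputs):
    """Gather this task's bucket from every mapper, then reduce once per key."""
    pairs = sorted(kv for buckets in all_map_outputs for kv in buckets[task_id])
    return [reduce_fn(key, [value for _, value in group])
            for key, group in groupby(pairs, key=itemgetter(0))]

def run_job(records, num_map_tasks=3, num_reduce_tasks=2):
    map_outputs = [run_map_task(part, num_reduce_tasks)
                   for part in split_input(records, num_map_tasks)]
    # The reduce phase starts only after every map task has finished.
    return [run_reduce_task(r, map_outputs) for r in range(num_reduce_tasks)]

records = ["a b a", "b c", "a c c", "d"]
output_files = run_job(records)
print(output_files)
```

The result is a list of R output "files", and each distinct key appears in exactly one of them because the partitioning function is deterministic.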

Expressing a MapReduce Job in Code

Now let’s look at how we can use the MapReduce framework to optimize a common data engineering workload: cleaning/standardizing large amounts of raw data, or the transform stage of a typical ETL workflow.

Suppose that we’re in charge of managing data related to a user registration system. Our data schema may contain the following information:

- Name of user

- Date they joined

- State of residence

- Email address

A sample dump of raw data may look like this:

John Doe , 04/09/25, il, [email protected]

jane SMITH, 2025/04/08, CA, [email protected]

JOHN DOE, 2025-04-09, IL, [email protected]

Mary Jane, 09-04-2025, Ny, [email protected]

Alice Walker, 2025.04.07, tx, [email protected]

Bob Stone , 04/08/2025, CA, [email protected]

BOB STONE , 2025/04/08, CA, [email protected]

Before making this data available for analysis, we probably want to transform the data to a clean, standard format.

We’ll want to fix the following:

- Names and states have inconsistent case.

- Dates differ in format.

- Some fields contain redundant whitespace.

- There are duplicate entries for certain users (e.g., John Doe, Bob Stone).

We may want the final output to look like this:

alice walker,2025-04-07,TX,[email protected]

bob stone,2025-04-08,CA,[email protected]

jane smith,2025-04-08,CA,[email protected]

john doe,2025-09-04,IL,[email protected]

mary jane,2025-09-04,NY,[email protected]

The data transformations we want to carry out are straightforward, and we could write a simple program that parses the raw data and applies the desired transformation steps to each individual line in a serial manner. However, if we’re dealing with millions or billions of records, this approach may be quite time consuming.

Instead, we can use the MapReduce model to apply our data transformations to distinct partitions of the raw data, and then “aggregate” these transformed outputs by discarding any duplicate entries that appear in the intermediate result.

There are many libraries/frameworks available for expressing programs as MapReduce jobs. For our example, we’ll use the mrjob library to express our data transformation program as a MapReduce job in Python.

mrjob simplifies the process of writing MapReduce jobs, as the developer only needs to provide implementations for the mapper and reducer logic in a single Python class. Although it’s no longer under active development and may not achieve the same level of performance as other options that allow deployment of jobs on Hadoop (as it’s a Python wrapper around the Hadoop API), it’s a great way for anybody familiar with Python to start learning how to write MapReduce jobs and to recognize how to break up computation into map and reduce tasks.

Using mrjob, we can write a simple MapReduce job by subclassing the MRJob class and overriding the mapper() and reducer() methods.

Our mapper() will contain the data transformation/cleaning logic we want to apply to each record of input:

- Standardize names and states to lowercase and uppercase, respectively.

- Standardize dates to %Y-%m-%d format.

- Strip unnecessary whitespace around fields.

After applying these data transformations to each record, it’s possible that we may end up with duplicate entries for some users. Our reducer() implementation will eliminate any such duplicate entries.

    from mrjob.job import MRJob
    from mrjob.step import MRStep
    from datetime import datetime
    import csv
    import re


    class UserDataCleaner(MRJob):

        def mapper(self, _, line):
            """
            Given a record of input data (i.e. a line of csv input),
            parse the record for <Name, (Date, State, Email)> pairs and emit them.

            If this function is not implemented,
            by default, <None, line> will be emitted.
            """
            try:
                # returns row contents as a list of strings ("," delimited by default)
                row = next(csv.reader([line]))

                # if row contents don't follow schema, don't extract KV pairs
                if len(row) != 4:
                    return

                name, date_str, state, email = row

                # clean data
                # replace runs of whitespace with a single space, then strip leading/trailing whitespace
                name = re.sub(r'\s+', ' ', name).strip().lower()
                state = state.strip().upper()
                email = email.strip().lower()
                date = self.normalize_date(date_str)

                # emit cleaned KV pair
                if name and date and state and email:
                    yield name, (date, state, email)
            except Exception:
                pass  # skip bad records

        def reducer(self, key, values):
            """
            Given a Name and an iterator of (Date, State, Email) values associated with that key,
            return a set of (Date, State, Email) values for that Name.

            This will eliminate all duplicate <Name, (Date, State, Email)> entries.
            """
            seen = set()
            for value in values:
                value = tuple(value)  # values arrive as lists; tuples are hashable
                if value not in seen:
                    seen.add(value)
                    yield key, value

        def normalize_date(self, date_str):
            # formats are tried in order, so an ambiguous date such as 04/09/25
            # resolves to the first format in this list that parses it
            formats = ["%Y-%m-%d", "%m-%d-%Y", "%d-%m-%Y", "%d/%m/%y", "%m/%d/%Y", "%Y/%m/%d", "%Y.%m.%d"]
            for fmt in formats:
                try:
                    return datetime.strptime(date_str.strip(), fmt).strftime("%Y-%m-%d")
                except ValueError:
                    continue
            return ""  # no known format matched


    if __name__ == '__main__':
        UserDataCleaner.run()

This is just one example of a simple data transformation task that can be expressed using the mrjob framework. For more complex data-processing tasks that cannot be expressed with a single MapReduce job, mrjob allows developers to write multiple mapper() and reducer() methods and define a pipeline of mapper/reducer steps that results in the desired output.
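To illustrate the idea of chaining steps without depending on mrjob itself, here is a plain-Python sketch in which the output of one map/reduce round feeds the next. The helper `run_step`, the step functions, and the sample records are all hypothetical; this mirrors the concept of a multi-step pipeline, not mrjob's actual API.

```python
from collections import defaultdict

def run_step(pairs, mapper, reducer):
    """Run one map/reduce round over (key, value) pairs and return the output pairs."""
    grouped = defaultdict(list)
    for key, value in pairs:
        for out_key, out_value in mapper(key, value):
            grouped[out_key].append(out_value)
    output = []
    for out_key in sorted(grouped):
        output.extend(reducer(out_key, grouped[out_key]))
    return output

# Step 1: drop duplicate (name, state) records.
def dedupe_mapper(_, record):
    name, state = record
    yield (name, state), None

def dedupe_reducer(key, _values):
    name, state = key
    yield name, state

# Step 2: count the remaining users per state.
def count_mapper(_name, state):
    yield state, 1

def count_reducer(state, ones):
    yield state, sum(ones)

records = [
    (None, ("john doe", "IL")),
    (None, ("john doe", "IL")),
    (None, ("jane smith", "CA")),
    (None, ("bob stone", "CA")),
    (None, ("bob stone", "CA")),
]
unique_users = run_step(records, dedupe_mapper, dedupe_reducer)
users_per_state = run_step(unique_users, count_mapper, count_reducer)
print(users_per_state)
```

The second step only works because the first one has already removed duplicates, which is why such a computation cannot be expressed as a single map/reduce round.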

By default, mrjob executes your job in a single process, as this allows for friendly development, testing, and debugging. Of course, mrjob also supports the execution of MapReduce jobs on various platforms (Hadoop, Google Dataproc, Amazon EMR). It’s good to be aware that the overhead of initial cluster setup can be fairly significant (~5+ min, depending on the platform and various factors), but when executing MapReduce jobs on truly large datasets (10+ GB), deploying the job on one of these platforms would save a significant amount of time, as the initial setup overhead would be fairly small relative to the execution time on a single machine.

Check out the mrjob documentation if you want to explore its capabilities further 🙂

MapReduce: Contributions & Current State

MapReduce was a significant contribution to the development of scalable, data-intensive applications primarily for the following two reasons:

- The authors recognized that primitive operations originating from functional programming, map and reduce, can be pipelined together to accomplish many Big Data tasks.

- It abstracted away the difficulties that come with executing these operations on a distributed system.

MapReduce was not significant because it introduced new primitive concepts. Rather, MapReduce was so influential because it encapsulated these map and reduce primitives into a single library, which automatically handled challenges that come from managing distributed systems, such as task scheduling and fault tolerance. These abstractions allowed developers with little distributed programming experience to write parallel programs efficiently.

There were opponents from the database community who were skeptical about the novelty of the MapReduce framework: prior to MapReduce, there was existing research on parallel database systems investigating how to enable parallel and distributed execution of analytical SQL queries. However, MapReduce is typically integrated with a distributed file system with no requirement to impose a schema on the data, and it gives developers the freedom to implement custom data processing logic (e.g., machine learning workloads, image processing, network analysis) in map() and reduce() that may be impossible to express through SQL queries alone. These characteristics enable MapReduce to orchestrate parallel and distributed execution of general purpose programs, instead of being limited to declarative SQL queries.

All that being said, the MapReduce framework is no longer the go-to model for most modern large-scale data processing tasks.

It has been criticized for its somewhat restrictive nature of requiring computations to be translated into map and reduce phases, and requiring intermediate data to be materialized before transmitting it between mappers and reducers. Materializing intermediate results may result in I/O bottlenecks, as all mappers must complete their processing before the reduce phase begins. Additionally, complex data processing tasks may require many MapReduce jobs to be chained together and executed sequentially.

Modern frameworks, such as Apache Spark, have extended the original MapReduce design by opting for a more flexible DAG execution model. This DAG execution model allows the entire sequence of transformations to be optimized, so that dependencies between stages can be recognized and exploited to execute data transformations in memory and pipeline intermediate results, when appropriate.
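As a loose analogy for the difference between materializing and pipelining intermediate results, the sketch below runs the same two transformations both ways using Python generators. It is only an analogy: the function names are made up, and it says nothing about how Spark or any other framework is actually implemented.

```python
def parse(lines):
    """First transformation: normalize each line."""
    for line in lines:
        yield line.strip().lower()

def non_empty(rows):
    """Second transformation: drop rows that are empty after normalization."""
    for row in rows:
        if row:
            yield row

def materialized(lines):
    """Chained-jobs style: each stage stores its full output before the next starts."""
    stage1 = list(parse(lines))       # stands in for writing intermediate files
    stage2 = list(non_empty(stage1))  # the next job reads them back in full
    return stage2

def pipelined(lines):
    """Fused style: each record flows through both stages with no intermediate copy."""
    return non_empty(parse(lines))

lines = ["  Alpha ", "", "BETA", "   "]
print(materialized(lines))
print(list(pipelined(lines)))
```

Both versions produce the same records; the pipelined one simply never holds the full intermediate result, which is the kind of saving a planner can find when it sees the whole sequence of transformations.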

Nonetheless, MapReduce has had a significant influence on modern data processing frameworks (Apache Spark, Flink, Google Cloud Dataflow) due to the fundamental distributed programming concepts that it introduced, such as locality-aware scheduling, fault tolerance by re-execution, and scalability.

Wrap Up

If you made it this far, thanks for reading! There was a lot of content here, so let’s quickly recap what we discussed.

- MapReduce is a programming model used to orchestrate the parallel and distributed execution of programs across a large compute cluster of commodity hardware. Developers can write parallel programs using the MapReduce framework by simply defining the mapper and reducer logic specific to their task.

- Tasks that consist of applying transformations on independent partitions of the data followed by grouped aggregation are ideal fits to be optimized by MapReduce.

- We walked through how to express a common data engineering workload as a MapReduce job using the mrjob library.

- MapReduce as it was originally designed is no longer used for modern big data tasks, but its core components have played a significant role in the design of modern distributed programming frameworks.

If there are any important details about the MapReduce framework that are missing or deserve more attention here, I’d love to hear about them in the comments. Additionally, I did my best to include all of the great resources that I read while writing this article, and I highly recommend checking them out if you’re interested in learning further!

The author has created all images in this article.

Sources

MapReduce Fundamentals:

mrjob:

Related Background:

MapReduce Limitations & Extensions: