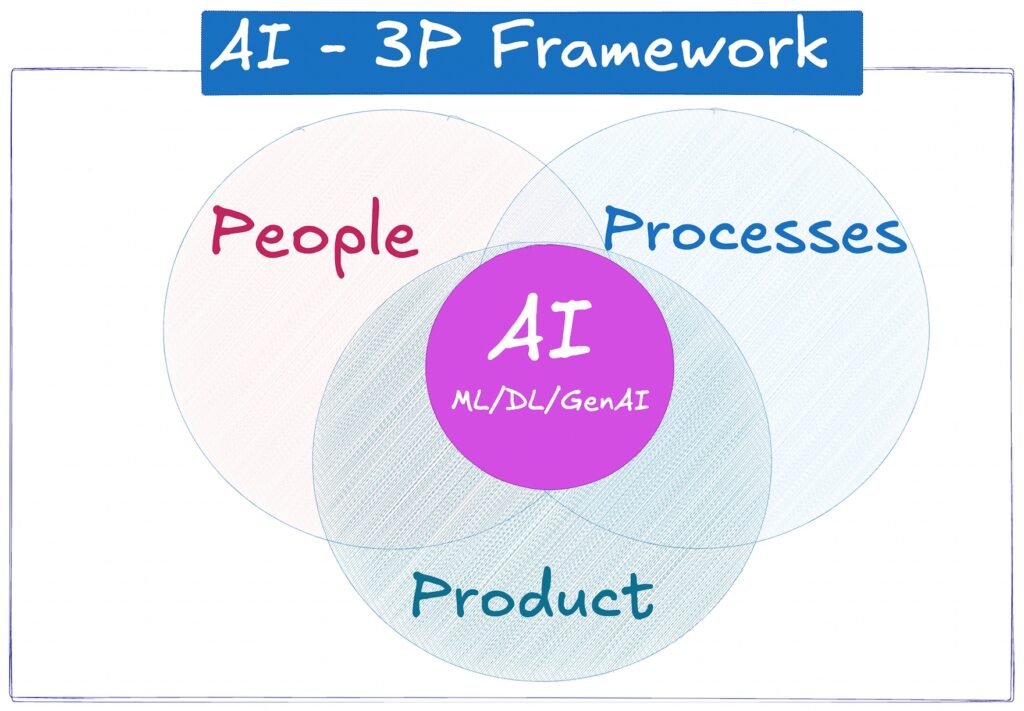

that most AI pilot projects fail not because of technical shortcomings, but because of the difficulty of aligning new technology with existing organizational structures. While implementing AI models may seem straightforward, the real obstacles often lie in integrating these solutions with the organization’s people, processes, and products. This concept, commonly known as the “3P” pillars of project management, provides a practical lens for assessing AI readiness.

In this article, I introduce a framework to help teams evaluate and prioritize AI projects by asking targeted, context-specific custom questions across these three pillars, ensuring that risks are identified and managed before implementation begins.

Whether you’re involved in the technical or the business side of decision-making in the AI process, the concepts I outline in this article are designed to cover both aspects.

The challenge of implementing AI use cases

Imagine being presented with a list of over 100 potential AI use cases from across a global enterprise. Furthermore, imagine that the list breaks down into a variety of specific departmental requests that the development team needs to deliver.

The marketing department wants a customer-facing chatbot. Finance wants to automate invoice processing. HR is asking for a tool to summarize thousands of resumes. Each request comes with a different sponsor, a different level of technical detail, and a different sense of urgency, often driven by pressure to deliver visible AI wins as soon as possible.

In this scenario, imagine that the delivery team decides to start with what appear to be the quickest wins, and they greenlight the marketing chatbot. However, after some initial momentum, the problems start.

First come the people problems. For example, the marketing chatbot stalls because two teams in the department can’t agree on who is responsible for it, freezing development.

After this issue is solved, process issues arise. For example, the chatbot needs live customer data, but getting approval from the legal and compliance teams takes months, and no one is available for the additional “admin” work.

Even when this gets resolved, the product itself hits a wall. For example, the team discovers that the “quick win” chatbot can’t easily integrate with the company’s core backend systems, leaving it unable to deliver real value to customers until this issue is sorted out.

Finally, after more than six months, budgets are exhausted, stakeholders are dissatisfied, and the initial excitement around AI has worn off. Fortunately, this outcome is precisely what the AI-3P framework is designed to prevent.

Before diving into the framework itself, let’s first look at what recent research reveals about why AI endeavors go off track.

Why do AI projects derail?

Enthusiasm around AI, or more precisely generative AI, keeps reaching new peaks, and so we read numerous stories about these project initiatives. But not all of them end with a positive outcome. Reflecting this reality, a recent MIT study from July 2025 prompted a headline in Fortune magazine that “95% of generative AI pilots at companies are failing.”

The part of the report relevant to our purpose concerns the reasons why these projects fail. To quote a Fortune post:

The biggest problem, the report found, was not that the AI models weren’t capable enough (although execs tended to think that was the problem.) Instead, the researchers discovered a “learning gap”: people and organizations simply did not understand how to use the AI tools properly or how to design workflows that could capture the benefits of AI while minimizing downside risks.

…

The report also found that companies which bought-in AI models and solutions were more successful than enterprises that tried to build their own systems. Purchasing AI tools succeeded 67% of the time, while internal builds panned out only one-third as often.

…

The overall thrust of the MIT report was that the problem was not the tech. It was how companies were using the tech.

…

With these reasons in mind, I want to emphasize the importance of understanding risks better before implementing AI use cases.

In other words, if most AI endeavors fail not because of the models themselves but because of issues around ownership, workflows, or change management, then we have pre-work to do when evaluating new initiatives. To achieve that, we can adapt the classic business pillars for technology adoption (people and processes) and add a focus on the end product.

This thinking led me to develop a practical scorecard around these three pillars for AI pre-development decisions: AI-3P with BYOQ (Bring Your Own Questions).

The overall idea of the framework is to prioritize AI use cases by supplying your own context-specific questions that qualify your AI opportunities and make risks visible before hands-on implementation begins.

Let’s begin by explaining the core of the framework.

Scoring BYOQ per 3P

As indicated earlier, the framework is based on reviewing each potential AI use case against the three pillars that determine success: people, process, and product.

For each pillar, we provide examples of BYOQ questions, grouped by category, that can be used to assess a specific AI request for implementation.

Questions are formulated so that the possible answer-score combinations are “No/Unknown” (= 0), “Partial” (= 1), and “Yes/Not applicable” (= 2).

After assigning a score to each question, we sum the total score for each pillar, and this number is used later in the weighted AI-3P readiness equation.
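To make the scoring rule concrete, here is a minimal Python sketch, assuming each answer is recorded as one of five labels. Keeping No and Unknown (and Yes and Not applicable) as separate labels even though they score the same is a design choice of this sketch, not part of the framework: it preserves information that becomes useful later, for example when counting Not applicable answers. The `Answer` enum and the helper names are hypothetical.

```python
from enum import Enum


class Answer(Enum):
    """Possible answers to a BYOQ question; No/Unknown and Yes/NA share a score."""
    NO = "No"
    UNKNOWN = "Unknown"
    PARTIAL = "Partial"
    YES = "Yes"
    NOT_APPLICABLE = "Not applicable"


# Points per answer, following the framework's 0/1/2 scale.
SCORES = {
    Answer.NO: 0,
    Answer.UNKNOWN: 0,
    Answer.PARTIAL: 1,
    Answer.YES: 2,
    Answer.NOT_APPLICABLE: 2,
}


def pillar_total(answers: list[Answer]) -> int:
    """Actual (raw) score of one pillar: the sum of its answer scores."""
    return sum(SCORES[a] for a in answers)


def pillar_max(answers: list[Answer]) -> int:
    """Maximum achievable score of one pillar: 2 points per question."""
    return 2 * len(answers)


# Hypothetical answers for a People pillar with five questions.
people = [Answer.YES, Answer.PARTIAL, Answer.UNKNOWN, Answer.YES, Answer.NOT_APPLICABLE]
print(pillar_total(people), "/", pillar_max(people))  # 7 / 10
```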

With this premise in mind, let’s break down how to think about each pillar.

Before we start thinking about models and code, we should make sure that the “human element” is ready for an AI initiative.

This means confirming that there is business buy-in (sponsorship) and an accountable owner who can champion the project through its inevitable hurdles. Success also depends on an honest assessment of the delivery team’s skills in areas such as machine learning operations (MLOps). But beyond these technical skills, AI initiatives can easily fail without a thoughtful plan for end-user adoption, which makes change management a non-negotiable part of the equation.

That’s why the objective of this pillar’s BYOQ is to prove that ownership, capability, and adoption exist before the build phase begins.

We can then group and score questions in the People pillar as follows:

Once we’re confident that we have asked the right questions and assigned each one a score on a scale from 0 to 2 (No/Unknown = 0, Partial = 1, Yes/Not applicable = 2), the next step is to check how the idea aligns with the organization’s daily operations, which brings us to the second pillar.

The Processes pillar is about ensuring that the AI use case solution fits into the operational fabric of our organization.

Common project stoppers, such as regulations and the internal qualification process for new technologies, are included here. In addition, questions related to Day 2 operations (running, monitoring, and maintaining the solution after launch) that support product resiliency are also evaluated.

In this way, the BYOQ list for this pillar is designed to surface risks in governance, compliance, and provisioning paths.

Once the scores for this pillar are finalized and we have a clear understanding of the status of the operational guardrails, we can turn to the product itself.

This is where we challenge our technical assumptions, making sure they’re grounded in the realities of our People and Processes pillars.

This starts with the fundamental “problem-to-tech” fit, where we need to determine the type of AI use case and whether to build a custom solution or buy an existing one. We also evaluate the stability, maturity, and scalability of the underlying platform. Beyond that, this pillar weighs questions about the end-user experience and the overall economic fit of the solution.

As a result, the questions for this pillar are designed to test the technical choices, the end-user experience, and the solution’s financial viability.
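The question lists themselves are up to each team. As a purely hypothetical illustration, a fixed question bank could be stored as a nested mapping from pillar to category to questions. The categories below are drawn from the three pillar descriptions, but the question wording is invented for this sketch and is not taken from the published template; the question count per pillar is what later determines each pillar’s maximum score (2 points per question).

```python
# Hypothetical question bank: pillar -> category -> questions.
# Category names follow the pillar descriptions; question wording is illustrative only.
QUESTION_BANK: dict[str, dict[str, list[str]]] = {
    "People": {
        "Sponsorship": ["Is there a business sponsor backing this use case?"],
        "Ownership": ["Is a single accountable owner named for the use case?"],
        "Skills": ["Does the delivery team have MLOps experience?"],
        "Adoption": ["Is there a change management plan for end users?"],
    },
    "Processes": {
        "Compliance": ["Have legal and compliance approved the required data access?"],
        "Qualification": ["Has the technology passed the internal qualification process?"],
        "Day 2 operations": ["Are monitoring, support, and incident processes defined?"],
    },
    "Product": {
        "Problem-to-tech fit": [
            "Is AI the right tool for this problem?",
            "Has the build-versus-buy option been evaluated?",
        ],
        "Platform": ["Is the underlying platform stable, mature, and scalable?"],
        "User experience": ["Has the end-user experience been validated?"],
        "Budget": ["Is funding secured, and is the solution financially viable at scale?"],
    },
}


def question_counts(bank: dict[str, dict[str, list[str]]]) -> dict[str, int]:
    """Number of questions per pillar; each pillar's maximum score is 2 x this count."""
    return {pillar: sum(len(qs) for qs in cats.values()) for pillar, cats in bank.items()}


counts = question_counts(QUESTION_BANK)
print(counts)  # {'People': 4, 'Processes': 3, 'Product': 5}
```

Keeping the bank in one versioned structure also supports the standardization point made later: every use case is scored against the same questions.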

Now that we’ve examined the what, the how, and the who, it’s time to bring it all together and turn these concepts into an actionable decision.

Bringing 3P together

After consolidating the scores from the 3Ps, the “ready/partially ready/not ready” decision is made, and the final table for a specific AI request looks like this:

As we can see from Table 4, the core logic of the framework lies in transforming qualitative answers into a quantitative AI readiness score.

To recap, here’s how the step-by-step approach works:

Step 1: We calculate a raw score, i.e., the Actual score per pillar, by answering a list of custom questions (BYOQs). Each answer gets a value:

- No/Unknown = 0 points. This is a red flag or a significant unknown.

- Partial = 1 point. There’s some progress, but it’s not fully resolved.

- Yes/Not applicable = 2 points. The requirement is met, or it isn’t relevant to this use case.

Step 2: We assign a specific weight to each pillar’s total score. In the example above, based on the findings from the MIT study, the weighting is deliberately biased toward the People pillar, and the assigned weights are 40 percent people, 35 percent processes, and 25 percent product.

After assigning weights, we calculate the Weighted score per pillar as follows:
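A minimal sketch of this step, assuming each pillar’s actual score is divided by its maximum possible score (2 points times the number of questions n_p in that pillar) before the pillar weight w_p, in percent, is applied. This normalization is what keeps the summed readiness score between 0 and 100:

```latex
S_p^{\text{weighted}} = \frac{S_p^{\text{actual}}}{S_p^{\max}} \times w_p,
\qquad S_p^{\max} = 2\,n_p,
\qquad p \in \{\text{People},\ \text{Processes},\ \text{Product}\}
```

For example, a People pillar with 10 questions and an actual score of 14 would contribute 14 / 20 × 40 = 28 points.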

Step 3: We sum the weighted scores to get the AI-3P Readiness score, a number from 0 to 100. This score places each AI initiative into one of three actionable tiers:

- 80–100: Build now. This is a green light. It implies that the key elements are in place, the risks are understood, and implementation can proceed under standard project guardrails.

- 60–79: Pilot with guardrails. Proceed with caution: the idea has merit, but some gaps could derail the project. The recommendation here is to fix the top three to five risks and then launch a time-boxed pilot to learn more about the use case’s feasibility before committing fully.

- 0–59: De-risk first. Stop and fix the identified gaps, which indicate a high risk of failure for the evaluated AI initiative.

In summary, the decision is the output of the AI-3P Readiness formula:
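Assuming pillar weights w_p in percent that sum to 100, and each pillar’s actual score normalized by its maximum of 2 points per question (n_p questions), the readiness score R and the tier rule can be sketched as below. Treating the tiers as half-open bands, so that a non-integer score such as 79.5 falls in the pilot tier, is an assumption of this sketch:

```latex
R = \sum_{p \in \{\text{People},\ \text{Processes},\ \text{Product}\}}
    \frac{S_p^{\text{actual}}}{2\,n_p}\, w_p,
\qquad \sum_p w_p = 100

\text{Decision}(R) =
\begin{cases}
  \text{Build now}             & \text{if } 80 \le R \le 100,\\
  \text{Pilot with guardrails} & \text{if } 60 \le R < 80,\\
  \text{De-risk first}         & \text{if } 0 \le R < 60.
\end{cases}
```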

That’s the process for scoring an individual AI request, with a focus on custom-built questions around people, processes, and products.
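The three steps for a single request fit in a short Python sketch. It assumes the normalization described in Step 2 (actual score divided by 2 points per question, then multiplied by the weight in percent) and half-open tier bands; the function names and the example scores are hypothetical.

```python
# Pillar weights from the article's example, in percent (they sum to 100).
WEIGHTS = {"People": 40, "Processes": 35, "Product": 25}


def weighted_score(actual: int, n_questions: int, weight: float) -> float:
    """Normalize a pillar's raw score by its maximum (2 points per question), then weight it."""
    return actual / (2 * n_questions) * weight


def readiness(raw: dict[str, tuple[int, int]], weights: dict[str, float]) -> float:
    """AI-3P Readiness score (0-100): the sum of the weighted pillar scores.

    raw maps each pillar to (actual score, number of questions).
    """
    return sum(weighted_score(actual, n, weights[pillar]) for pillar, (actual, n) in raw.items())


def tier(score: float) -> str:
    """Map a readiness score to the decision tiers, treated as half-open bands."""
    if score >= 80:
        return "Build now"
    if score >= 60:
        return "Pilot with guardrails"
    return "De-risk first"


# Hypothetical request: (actual score, number of questions) per pillar.
request = {"People": (14, 10), "Processes": (12, 8), "Product": (10, 6)}
score = readiness(request, WEIGHTS)
print(f"{score:.1f} -> {tier(score)}")
```

Here the People (28 of 40), Processes (26.25 of 35), and Product (about 20.8 of 25) contributions add up to about 75.1, which lands this hypothetical request in the “pilot with guardrails” tier.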

But what if we have a portfolio of AI requests? A straightforward adaptation of the framework for prioritizing them at the organizational level proceeds as follows:

- Create an inventory of AI use cases. Start by gathering all the proposed AI initiatives from across the business. Cluster them by department (marketing, finance, and so on), user journey, or business impact to spot overlaps and dependencies.

- Score individual AI requests with the team using a set of pre-defined questions. Bring the product owners, tech leads, data owners, champions, and risk/compliance owners (and other accountable individuals) into the same room, and score each AI request together as a team using the BYOQ.

- Sort all evaluated use cases by AI-3P score. Once the cumulative score per pillar and the weighted AI-3P Readiness measure have been calculated for every AI use case, rank all the AI initiatives. This results in an objective, risk-adjusted priority list. Finally, take the top n use cases that have cleared the threshold for a full build and conduct an additional risk-benefit check before investing resources in them.
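The ranking step can be sketched in a few lines of Python, assuming each use case already carries its readiness score. The `UseCase` type, the `prioritize` function, and the scores assigned to the three example requests are hypothetical; the 80-point default mirrors the “build now” tier.

```python
from dataclasses import dataclass


@dataclass
class UseCase:
    name: str
    department: str
    readiness: float  # AI-3P Readiness score (0-100), computed beforehand


def prioritize(cases: list[UseCase], top_n: int, build_threshold: float = 80.0) -> list[UseCase]:
    """Rank use cases by readiness and keep the top n that clear the build threshold."""
    ranked = sorted(cases, key=lambda c: c.readiness, reverse=True)
    return [c for c in ranked if c.readiness >= build_threshold][:top_n]


# Hypothetical portfolio; the scores are invented for illustration.
portfolio = [
    UseCase("Customer chatbot", "Marketing", 58.0),
    UseCase("Invoice processing", "Finance", 86.5),
    UseCase("Resume summarization", "HR", 81.0),
]
for case in prioritize(portfolio, top_n=2):
    print(case.name, case.readiness)
```

The shortlisted cases are the ones that then go through the additional risk-benefit check; everything below the threshold stays on the ranked list for piloting or de-risking.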

Now let’s look at some important details about how to use this framework effectively.

Customizing the framework

In this section, I share some notes on what to consider when customizing the AI-3P framework.

First, although the “Bring Your Own Questions” logic is built for flexibility, it still requires standardization. It’s important to create a fixed list of questions before you start using the framework, so that every AI use case gets a “fair shot” in evaluations carried out at different times.

Second, within the framework, a “Not applicable” (NA) answer scores 2 points per question (the same as a “Yes” answer), treating it as a non-issue for that use case. While this simplifies the calculation, it’s important to track the total number of NA answers for a given project. In theory, a high number of NAs can indicate a project with lower complexity; in reality, it can mean that the assessment has sidestepped many implementation hurdles. It would be prudent to report an NA ratio per pillar and cap the NA contribution at, say, 25 percent of a pillar’s maximum score to prevent “green” scores built on non-applicables.
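One way to implement the NA cap, sketched in Python under the assumption that the cap limits the combined points from NA answers to 25 percent of the pillar’s maximum score (2 points per question). The function name and the answer labels are hypothetical.

```python
def capped_pillar_score(answers: list[str], na_cap_share: float = 0.25) -> tuple[float, float]:
    """Return (capped actual score, NA ratio) for one pillar.

    Assumes answers are "Yes", "Partial", "No", "Unknown", or "NA". Each NA would
    normally earn 2 points; here the combined NA points are capped at
    na_cap_share of the pillar's maximum score (2 points per question).
    """
    points = {"Yes": 2, "Partial": 1, "No": 0, "Unknown": 0}
    max_score = 2 * len(answers)
    n_na = answers.count("NA")
    scored = sum(points[a] for a in answers if a != "NA")
    na_points = min(2 * n_na, na_cap_share * max_score)
    return scored + na_points, n_na / len(answers)


# Hypothetical pillar with 8 questions, half of them answered "NA".
answers = ["NA", "NA", "NA", "NA", "Yes", "Yes", "Partial", "Yes"]
score, na_ratio = capped_pillar_score(answers)
print(score, na_ratio)
```

Without the cap, this pillar would score 15 of 16 points; with it, the score drops to 11, and the 50 percent NA ratio is reported alongside it so reviewers can see why.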

The same applies to “Unknown” answers, which score 0 and represent a complete “blind spot.” They should probably be flagged for the “de-risk first” tier if the information is missing in specific categories such as “Ownership,” “Compliance,” or “Budget.”
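A minimal sketch of that override in Python, assuming each answer is tagged with its category and that any “Unknown” in the Ownership, Compliance, or Budget categories forces the “de-risk first” tier regardless of the numeric score. The function and the data layout are hypothetical.

```python
# Categories where an "Unknown" answer is treated as a blocker (the article's examples).
CRITICAL_CATEGORIES = {"Ownership", "Compliance", "Budget"}


def decide(readiness: float, answers: list[tuple[str, str]]) -> str:
    """Return the decision tier, forcing "De-risk first" on critical blind spots.

    answers is a list of (category, answer) pairs across all three pillars.
    """
    blind_spots = [cat for cat, ans in answers if ans == "Unknown" and cat in CRITICAL_CATEGORIES]
    if blind_spots or readiness < 60:
        return "De-risk first"
    return "Build now" if readiness >= 80 else "Pilot with guardrails"


answers = [("Ownership", "Yes"), ("Compliance", "Unknown"), ("Skills", "Partial"), ("Budget", "Yes")]
print(decide(84.0, answers))  # De-risk first, despite a "build now" score
```

An Unknown in a non-critical category (for example, Skills) still costs points through the 0 score but does not trigger the override.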

Third, the pillar weights (in the example above: 40 percent people, 35 percent processes, 25 percent product) should be treated as adjustable parameters that can be industry- or organization-specific. For instance, in heavily regulated industries like finance, the Processes pillar might carry more weight because of stringent compliance requirements. In this case, one might consider adjusting the weighting to 35 percent people / 45 percent processes / 20 percent product.

The same flexibility applies to the decision tiers (80–100, 60–79, 0–59). An organization with a high risk tolerance might lower the “build now” threshold to 75, while a more conservative one might raise it to 85. For this reason, it’s important to agree on the scoring logic before evaluating any AI use cases.
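To keep the weights and tier thresholds agreed on and fixed before scoring starts, they can be bundled into a single profile object. This Python sketch is hypothetical: the `ScoringProfile` name is invented, pillar scores are assumed to be already normalized to the 0 to 1 range (actual divided by maximum), and pairing the finance-style weights with the stricter 85-point threshold is only an illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScoringProfile:
    """Organization-specific scoring logic, agreed before any use case is evaluated."""
    weights: dict[str, float]  # pillar -> weight in percent; must sum to 100
    build_now: float = 80.0    # lower bound of the "build now" tier
    pilot: float = 60.0        # lower bound of the "pilot with guardrails" tier

    def __post_init__(self) -> None:
        if abs(sum(self.weights.values()) - 100) > 1e-9:
            raise ValueError("pillar weights must sum to 100")

    def readiness(self, normalized: dict[str, float]) -> float:
        """normalized maps each pillar to actual/max score in [0, 1]."""
        return sum(normalized[pillar] * w for pillar, w in self.weights.items())

    def tier(self, score: float) -> str:
        if score >= self.build_now:
            return "Build now"
        if score >= self.pilot:
            return "Pilot with guardrails"
        return "De-risk first"


baseline = ScoringProfile({"People": 40, "Processes": 35, "Product": 25})
regulated = ScoringProfile({"People": 35, "Processes": 45, "Product": 20}, build_now=85.0)

# Same hypothetical use case: strong people and product readiness, weaker processes.
normalized = {"People": 0.9, "Processes": 0.7, "Product": 0.9}
for name, profile in [("baseline", baseline), ("regulated", regulated)]:
    s = profile.readiness(normalized)
    print(name, round(s, 2), profile.tier(s))
```

With identical answers, the baseline profile gives 83.0 (build now), while the regulated profile gives 81.0, which falls short of its 85-point bar and lands in the pilot tier.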

Once these elements are in place, you have everything you need to start assessing your AI use case(s).

Thanks for reading. I hope this article helps you navigate the pressure for “quick AI wins” by providing a practical tool for identifying the initiatives that are ready for success.

I’m keen to learn from your experiences with the framework, so feel free to connect and share your feedback on my Medium or LinkedIn profiles.

The resources (tables with formulas) included in this article are available in the GitHub repo located here:

CassandraOfTroy/ai-3p-framework-template: An Excel template to implement the AI-3P Framework for assessing and de-risking AI projects before deployment.

Acknowledgments

This article was originally published in the Data Science at Microsoft Medium publication.

The BYOQ concept was inspired by my discussions with Microsoft colleagues Evgeny Minkevich and Sasa Juratovic. The AI-3P scorecard idea is influenced by the MEDDIC methodology, which was introduced to me by Microsoft colleague Dmitriy Nekrasov.

Special thanks to Casey Doyle and Ben Huberman for providing editorial reviews and helping to refine the clarity and structure of this article.