As you may have heard, DeepSeek-R1 is making waves. It’s all over the AI newsfeed, hailed as the first open-source reasoning model of its kind.

The buzz? Well-deserved.

The model? Powerful.

DeepSeek-R1 represents the current frontier in reasoning models, pushing the boundaries of what open-source AI can achieve. But here’s the part you won’t see in the headlines: working with it isn’t exactly straightforward.

Prototyping can be clunky. Deploying to production? Even trickier.

That’s where DataRobot comes in. We make it easier to develop with and deploy DeepSeek-R1, so you can spend less time wrestling with complexity and more time building real, enterprise-ready solutions.

Prototyping DeepSeek-R1 and bringing applications into production are critical to harnessing its full potential and delivering higher-quality generative AI experiences.

So, what exactly makes DeepSeek-R1 so compelling, and why is it sparking all this attention? Let’s take a closer look to see if all the hype is justified.

Could this be the model that outperforms OpenAI’s latest and greatest?

Beyond the hype: Why DeepSeek-R1 is worth your attention

DeepSeek-R1 isn’t just another generative AI model. It’s arguably the first open-source “reasoning” model: a generative text model specifically reinforced to generate text that approximates its reasoning and decision-making processes.

For AI practitioners, that opens up new possibilities for applications that require structured, logic-driven outputs.

What also stands out is its efficiency. Training DeepSeek-R1 reportedly cost a fraction of what it took to develop models like GPT-4o, thanks to reinforcement learning techniques published by DeepSeek AI. And because it’s fully open-source, it offers greater flexibility while allowing you to maintain control over your data.

Of course, working with an open-source model like DeepSeek-R1 comes with its own set of challenges, from integration hurdles to performance variability. But understanding its potential is the first step to making it work effectively in real-world applications and delivering more relevant and meaningful experiences to end users.

Using DeepSeek-R1 in DataRobot

Of course, potential doesn’t always equal easy. That’s where DataRobot comes in.

With DataRobot, you can host DeepSeek-R1 using NVIDIA GPUs for high-performance inference or access it through serverless predictions for fast, flexible prototyping, experimentation, and deployment.

No matter where DeepSeek-R1 is hosted, you can integrate it seamlessly into your workflows.

In practice, this means you can:

- Compare performance across models without the hassle, using built-in benchmarking tools to see how DeepSeek-R1 stacks up against others.

- Deploy DeepSeek-R1 in production with confidence, supported by enterprise-grade security, observability, and governance features.

- Build AI applications that deliver relevant, reliable results, without getting bogged down by infrastructure complexity.

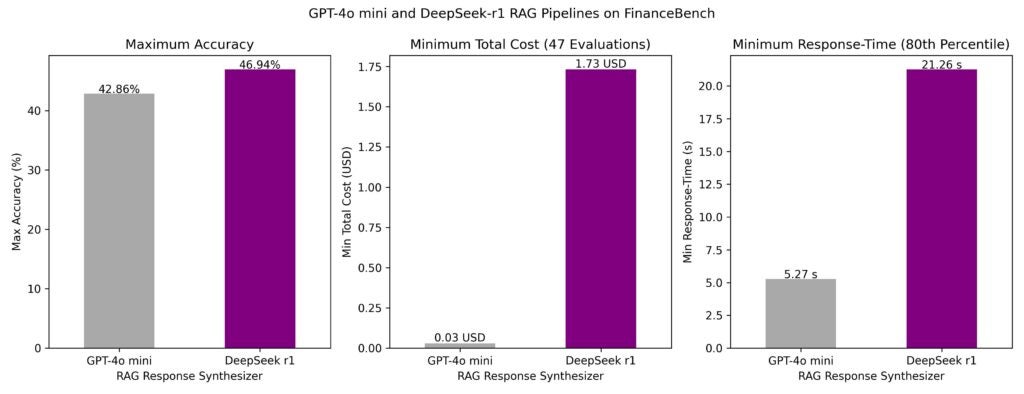

LLMs like DeepSeek-R1 are rarely used in isolation. In real-world production applications, they function as part of sophisticated workflows rather than standalone models. With this in mind, we evaluated DeepSeek-R1 within multiple retrieval-augmented generation (RAG) pipelines over the well-known FinanceBench dataset and compared its performance to GPT-4o mini.

So how does DeepSeek-R1 stack up in real-world AI workflows? Here’s what we found:

- Response time: Latency was notably lower for GPT-4o mini. The 80th percentile response time for the fastest pipelines was 5 seconds for GPT-4o mini and 21 seconds for DeepSeek-R1.

- Accuracy: The best generative AI pipeline using DeepSeek-R1 as the synthesizer LLM achieved 47% accuracy, outperforming the best pipeline using GPT-4o mini (43% accuracy).

- Cost: While DeepSeek-R1 delivered higher accuracy, its cost per call was significantly higher: about $1.73 per request compared to $0.03 for GPT-4o mini. Hosting choices impact these costs significantly.

While DeepSeek-R1 demonstrates impressive accuracy, its higher costs and slower response times may make GPT-4o mini the more efficient choice for many applications, especially when cost and latency are critical.
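One way to put the three numbers above on a common footing is to divide cost per request by accuracy, which gives a rough "cost per correct answer." The sketch below does that arithmetic in Python using only the figures reported above. The metric itself and the function names are our own illustration rather than part of the original evaluation, and it assumes every request is an independent attempt.

```python
# Figures reported in the comparison above. The derived metric is illustrative.
RESULTS = {
    "DeepSeek-R1": {"accuracy": 0.47, "cost_per_request": 1.73, "p80_latency_s": 21},
    "GPT-4o mini": {"accuracy": 0.43, "cost_per_request": 0.03, "p80_latency_s": 5},
}


def cost_per_correct_answer(accuracy: float, cost_per_request: float) -> float:
    """Expected spend per correct answer, assuming independent requests."""
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in the range (0, 1]")
    return cost_per_request / accuracy


def summarize(results: dict) -> dict:
    """Map each model name to its cost per correct answer, rounded to cents."""
    return {
        name: round(cost_per_correct_answer(r["accuracy"], r["cost_per_request"]), 2)
        for name, r in results.items()
    }


if __name__ == "__main__":
    for model, cost in summarize(RESULTS).items():
        print(f"{model}: ${cost:.2f} per correct answer")
```

Because hosting choices change the per-request cost, the inputs are worth recomputing for your own deployment before drawing conclusions.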

This analysis highlights the importance of evaluating models not just in isolation but within end-to-end AI workflows.

Raw performance metrics alone don’t tell the full story. Evaluating models within sophisticated agentic and non-agentic RAG pipelines offers a clearer picture of their real-world viability.

Using DeepSeek-R1’s reasoning in agents

DeepSeek-R1’s strength isn’t just in generating responses; it’s in how it reasons through complex scenarios. This makes it particularly valuable for agent-based systems that need to handle dynamic, multi-layered use cases.

For enterprises, this reasoning capability goes beyond simply answering questions. It can:

- Present a range of options rather than a single “best” response, helping users explore different outcomes.

- Proactively gather information ahead of user interactions, enabling more responsive, context-aware experiences.

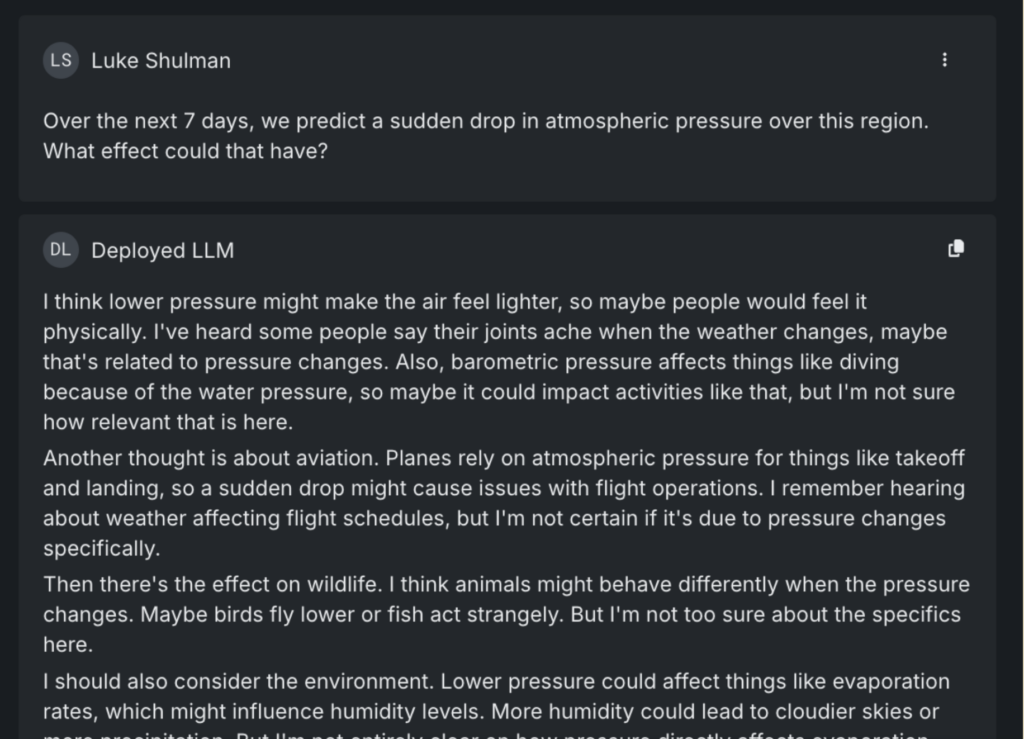

Here’s an example:

When asked about the effects of a sudden drop in atmospheric pressure, DeepSeek-R1 doesn’t just deliver a textbook answer. It identifies multiple ways the question could be interpreted, considering impacts on wildlife, aviation, and population health. It even notes less obvious consequences, like the potential for outdoor event cancellations due to storms.

In an agent-based system, this kind of reasoning can be applied to real-world scenarios, such as proactively checking for flight delays or upcoming events that might be disrupted by weather changes.

Interestingly, when the same question was posed to other leading LLMs, including Gemini and GPT-4o, none flagged event cancellations as a potential risk.

DeepSeek-R1 stands out in agent-driven applications for its ability to anticipate, not just react.

Compare DeepSeek-R1 to GPT-4o mini: What the data tells us

Too often, AI practitioners rely solely on an LLM’s answers to determine if it’s ready for deployment. If the responses sound convincing, it’s easy to assume the model is production-ready. But without deeper evaluation, that confidence can be misleading, as models that perform well in testing often struggle in real-world applications.

That’s why combining expert review with quantitative assessments is critical. It’s not just about what the model says, but how it gets there, and whether that reasoning holds up under scrutiny.

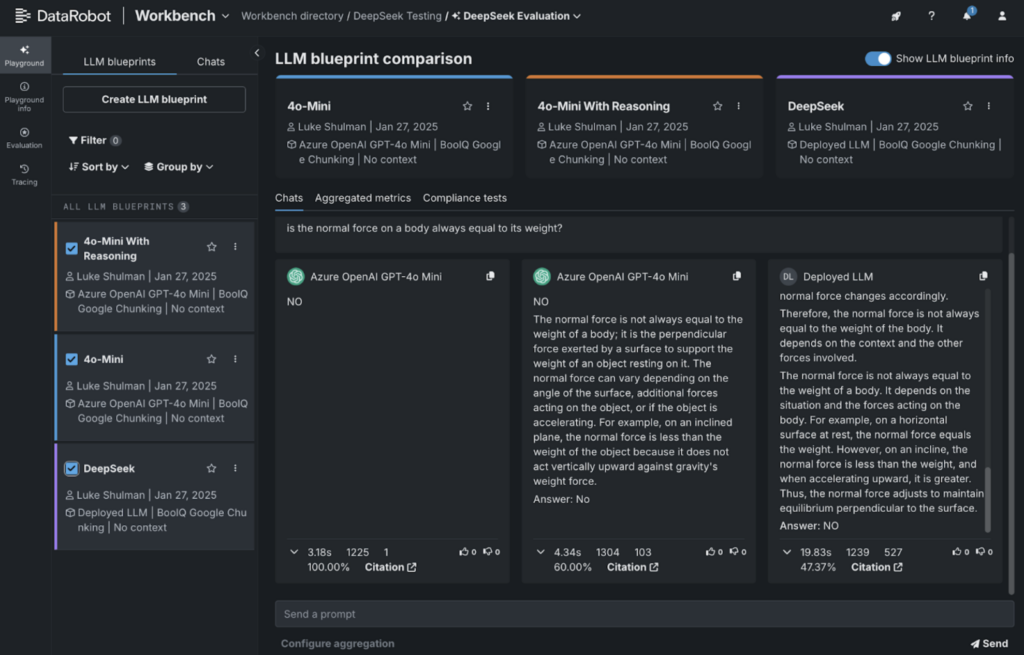

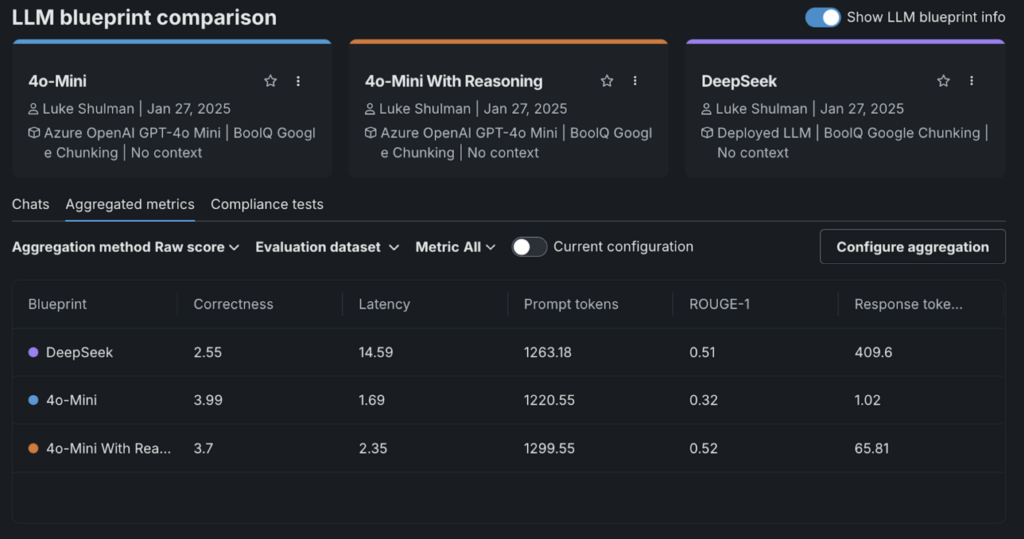

To illustrate this, we ran a quick evaluation using the Google BoolQ reading comprehension dataset. This dataset presents short passages followed by yes/no questions to test a model’s comprehension.

For GPT-4o mini, we used the following system prompt:

Try to answer with a clear YES or NO. You may also say TRUE or FALSE but be clear in your response.

In addition to your answer, include your reasoning behind this answer. Enclose this reasoning with the tag <think>.

For example, if the user asks “What color is a can of coke” you will say:

<think>A can of coke must refer to a coca-cola which I believe is always sold with a red can or label</think>

Answer: Red
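Responses that follow this prompt mix reasoning and answer in one string, so a grader usually needs to separate the two first. The following is a minimal Python sketch of that step, assuming the model wraps its reasoning in `<think>…</think>` as the prompt requests. The function names are hypothetical and this is not the parsing logic used in the evaluation described here.

```python
import re
from typing import Optional, Tuple

# Matches every <think>...</think> span, including multi-line reasoning.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL | re.IGNORECASE)


def split_reasoning(response: str) -> Tuple[str, str]:
    """Return (reasoning, answer): text inside the tags, and everything else."""
    reasoning = " ".join(part.strip() for part in THINK_RE.findall(response))
    answer = THINK_RE.sub("", response).strip()
    return reasoning, answer


def to_bool(answer: str) -> Optional[bool]:
    """Map the first YES/TRUE or NO/FALSE token to a boolean, else None.

    This is deliberately naive: it takes the first matching word, so a
    phrase such as "not true" would be read as True.
    """
    match = re.search(r"\b(yes|true|no|false)\b", answer, re.IGNORECASE)
    if match is None:
        return None
    return match.group(1).lower() in {"yes", "true"}


if __name__ == "__main__":
    raw = "<think>The passage states this directly.</think>\nAnswer: YES"
    reasoning, answer = split_reasoning(raw)
    print(reasoning, "|", answer, "|", to_bool(answer))
```

Returning `None` for unparseable answers keeps ambiguous responses visible instead of silently counting them as "no."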

Here’s what we found:

- Right: DeepSeek-R1’s output.

- On the far left: GPT-4o mini answering with a simple Yes/No.

- Center: GPT-4o mini with reasoning included.

We used DataRobot’s integration with LlamaIndex’s correctness evaluator to grade the responses. Interestingly, DeepSeek-R1 scored the lowest in this evaluation.

What stood out was how adding “reasoning” caused correctness scores to drop across the board.

This highlights an important takeaway: while DeepSeek-R1 performs well in some benchmarks, it may not always be the best fit for every use case. That’s why it’s critical to compare models side-by-side to find the right tool for the job.
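A side-by-side comparison on a yes/no dataset can be as simple as scoring each configuration against the same gold labels. The sketch below uses plain exact-match accuracy, which is a simpler stand-in for the LlamaIndex correctness evaluator used above, not a reproduction of it. The configuration names and labels in the usage example are toy placeholders, not results from this evaluation.

```python
from typing import Dict, List, Optional, Tuple


def accuracy(predictions: List[Optional[bool]], gold: List[bool]) -> float:
    """Fraction of predictions that match gold; None (unparseable) counts as wrong."""
    if len(predictions) != len(gold):
        raise ValueError("predictions and gold must have the same length")
    if not gold:
        raise ValueError("gold labels must not be empty")
    correct = sum(1 for p, g in zip(predictions, gold) if p is not None and p == g)
    return correct / len(gold)


def rank_configs(
    runs: Dict[str, List[Optional[bool]]], gold: List[bool]
) -> List[Tuple[str, float]]:
    """Score every configuration on the same labels, best first."""
    scores = {name: accuracy(preds, gold) for name, preds in runs.items()}
    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Toy placeholder data, for illustration only.
    gold = [True, False, True, True]
    runs = {
        "config_a": [True, False, False, True],
        "config_b": [True, None, False, False],
    }
    for name, score in rank_configs(runs, gold):
        print(f"{name}: {score:.0%}")
```

Scoring every configuration on identical examples is what makes the ranking meaningful. Comparing scores taken from different samples would not be.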

Hosting DeepSeek-R1 in DataRobot: A step-by-step guide

Getting DeepSeek-R1 up and running doesn’t have to be complicated. Whether you’re working with one of the base models (over 600 billion parameters) or a distilled version fine-tuned on smaller models like LLaMA-70B or LLaMA-8B, the process is straightforward. You can host any of these variants on DataRobot with just a few setup steps.

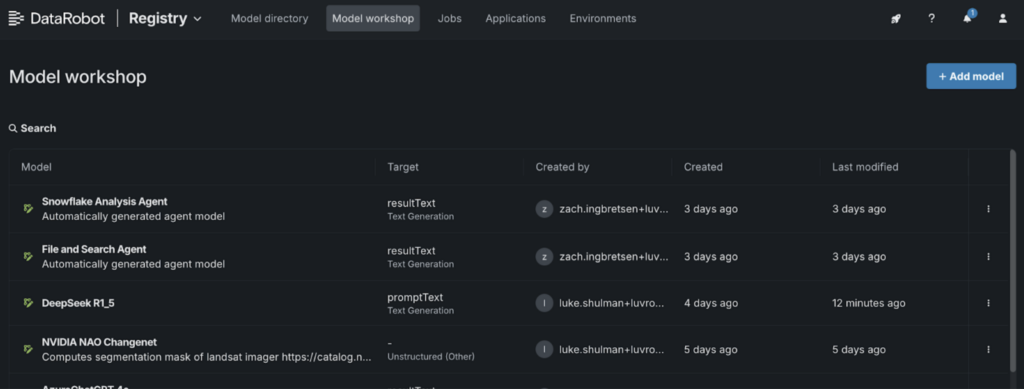

1. Go to the Model Workshop:

- Navigate to the “Registry” and select the “Model Workshop” tab.

2. Add a new model:

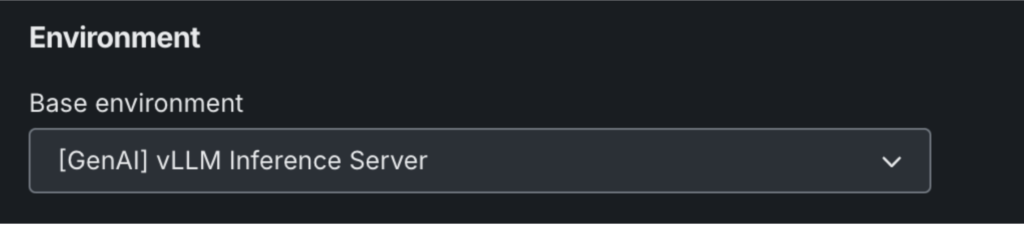

- Name your model and choose “[GenAI] vLLM Inference Server” under the environment settings.

- Click “+ Add Model” to open the Custom Model Workshop.

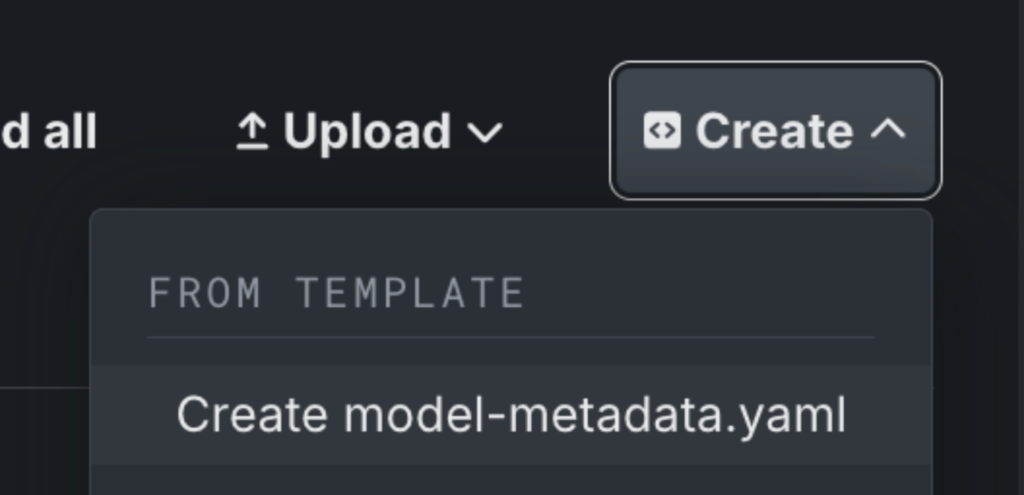

3. Set up your model metadata:

- Click “Create” to add a model-metadata.yaml file.

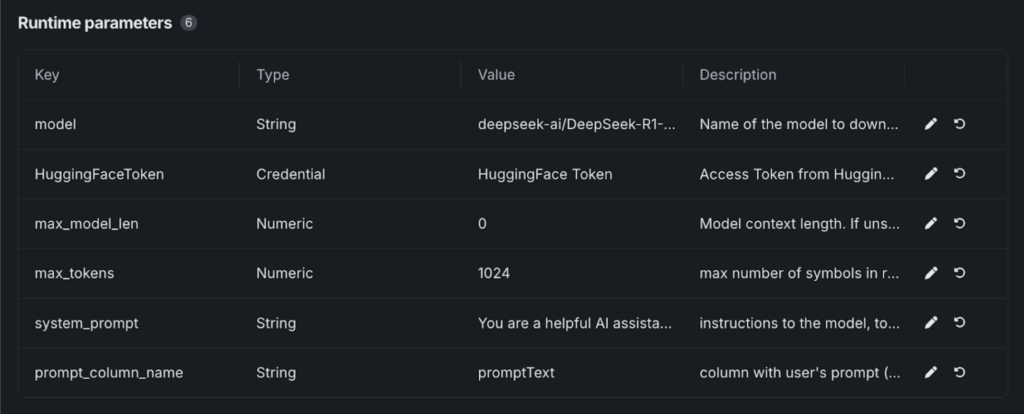

4. Edit the metadata file:

- Paste the required values from our GitHub template, which includes all the parameters needed to launch the model from Hugging Face.

- Save the file, and “Runtime Parameters” will appear.

5. Configure model details:

- Select your Hugging Face token from the DataRobot Credential Store.

- Under “model,” enter the variant you’re using. For example: deepseek-ai/DeepSeek-R1-Distill-Llama-8B.

6. Launch and deploy:

- Once saved, your DeepSeek-R1 model will be running.

- From here, you can test the model, deploy it to an endpoint, or integrate it into playgrounds and applications.
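Once the model is deployed to an endpoint, calling it from code might look like the Python sketch below. It relies on several assumptions that are not confirmed by this guide: that the deployment exposes an OpenAI-style chat completions route (vLLM itself ships an OpenAI-compatible server), that the route lives at `/chat/completions` under the base URL, and that a bearer token is accepted. The URL, token, and function name are placeholders. The sketch only builds the request and does not send it.

```python
import json
import urllib.request


def build_chat_request(
    base_url: str, api_token: str, model: str, question: str
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request.

    ASSUMPTION: the endpoint path and bearer-token auth are illustrative;
    check your deployment's documentation for the real values.
    """
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    request = build_chat_request(
        base_url="https://example.invalid/deployments/YOUR_DEPLOYMENT_ID",  # placeholder
        api_token="YOUR_API_TOKEN",  # placeholder
        model="deepseek-ai/DeepSeek-R1-Distill-Llama-8B",
        question="Is the sky blue? Answer YES or NO.",
    )
    print(request.get_method(), request.full_url)
    # To actually call the endpoint: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the payload easy to inspect and unit-test before any GPU time is spent.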

From DeepSeek-R1 to enterprise-ready AI

Accessing cutting-edge generative AI tools is just the start. The real challenge is evaluating which models fit your specific use case, and safely bringing them into production to deliver real value to your end users.

DeepSeek-R1 is just one example of what’s achievable when you have the flexibility to work across models, compare their performance, and deploy them with confidence.

The same tools and processes that simplify working with DeepSeek can help you get the most out of other models and power AI applications that deliver real impact.

See how DeepSeek-R1 compares to other AI models and deploy it in production with a free trial.

About the author

Nathaniel Daly is a Senior Product Manager at DataRobot specializing in AutoML and time series products. He’s focused on bringing advances in data science to users so that they can leverage this value to solve real-world business problems. He holds a degree in Mathematics from the University of California, Berkeley.

Luke Shulman, Technical Field Director: Luke has over 15 years of experience in data analytics and data science. Prior to joining DataRobot, Luke led implementations and was a director on the product management team at Arcadia.io, the leading healthcare SaaS analytics platform. He continued that role at N1Health. At DataRobot, Luke leads integrations across the AI/ML ecosystem. He’s also an active contributor to the DrX extensions to the DataRobot API client and MLFlow integration. An avid champion of data science, Luke has also contributed to projects across the data science ecosystem including Bokeh, Altair, and Zebras.js.