In my previous posts, I’ve walked you through putting together a very basic RAG pipeline in Python, as well as chunking large text documents. We’ve also looked into how documents are transformed into embeddings, allowing us to quickly search for similar documents within a vector database, along with how reranking is used to identify the most appropriate documents for answering the user’s query.

So, now that we’ve retrieved the relevant documents, it’s time to pass them into the LLM for the generation step. But before that, it is important to be able to tell whether the retrieval mechanism works well and can successfully identify relevant results. After all, retrieving the chunks that contain the answer to the user’s query is the very first step toward generating a meaningful answer.

Therefore, this is exactly what we’re going to explore in today’s post. In particular, we’re going to take a look at some of the most popular metrics for evaluating retrieval and reranking performance.

🍨DataCream is a newsletter offering stories and tutorials on AI, data, and tech. If you are interested in these topics, subscribe here.

. . .

Why care about measuring retrieval performance

So, our goal is to evaluate how well our embedding model and vector database bring back candidate text chunks. Essentially, what we’re trying to find out here is “Are the right documents somewhere in the top-k retrieved set?”, or does our vector search return complete garbage? 🙃 There are several different measures we can use to answer this question. Most of them originate from the Information Retrieval field.

Before we begin, it’s useful to distinguish between two types of measures: binary and graded relevance measures. More specifically, binary measures characterize a retrieved text chunk as either relevant or irrelevant for answering the user’s query; there is no in between. On the contrary, graded measures assign a relevance value to each retrieved text chunk, somewhere on a spectrum ranging from complete irrelevance to complete relevance.

Basically, binary measures characterize each chunk as relevant or irrelevant, a hit or a miss, a positive or a negative. As a result, when considering binary retrieval measures, we can end up in one of the following situations:

- True Positive ➔ A result is retrieved in the top k and is indeed relevant to the user’s query; it is correctly retrieved.

- False Positive ➔ A result is retrieved in the top k, but is in fact irrelevant; it is wrongly retrieved.

- True Negative ➔ A result is not retrieved in the top k and is indeed not relevant to the user’s query; it is correctly not retrieved.

- False Negative ➔ A result is not retrieved in the top k, but it was in fact relevant; it is wrongly not retrieved.
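To make the four cases concrete, here is a minimal sketch that counts them for a single query. It assumes chunks are identified by integer IDs and that the full set of chunk IDs in the knowledge base is known; the function name `confusion_counts` and the toy IDs are hypothetical, for illustration only.

```python
def confusion_counts(retrieved_ids, relevant_ids, corpus_ids, k):
    """Count TP, FP, TN, FN for one query at cutoff k (binary relevance)."""
    top_k = set(retrieved_ids[:k])        # what the retriever returned in the top k
    relevant = set(relevant_ids)          # ground-truth relevant chunks
    corpus = set(corpus_ids)              # every chunk in the knowledge base
    tp = len(top_k & relevant)            # retrieved and relevant
    fp = len(top_k - relevant)            # retrieved but irrelevant
    fn = len(relevant - top_k)            # relevant but not retrieved
    tn = len(corpus - top_k - relevant)   # neither retrieved nor relevant
    return {"TP": tp, "FP": fp, "TN": tn, "FN": fn}


# Hypothetical toy example: a 10-chunk corpus with chunk IDs 0..9
corpus = range(10)
relevant = [2, 5, 7]
retrieved = [5, 1, 2, 9, 3]  # ranked list returned by the retriever

print(confusion_counts(retrieved, relevant, corpus, k=3))
# {'TP': 2, 'FP': 1, 'TN': 6, 'FN': 1}
```

Note that the four counts always add up to the size of the corpus, which is why True Negatives dominate in any realistic knowledge base and are rarely used directly in retrieval metrics.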

As you can imagine, the True situations (True Positive and True Negative) are what we’re looking for. On the flip side, the False situations (False Negative and False Positive) are what we’re trying to minimize, but these are rather conflicting goals. More specifically, in order to include all the relevant results that exist (that is, minimize the False Negatives), we need to make our search more inclusive; but by making the search more inclusive, we also risk increasing the False Positives.

Another distinction we can make is between order-unaware and order-aware relevance measures. As their names indicate, order-unaware measures only express whether a relevant result exists in the top k retrieved text chunks or not. On the flip side, order-aware measures also take into account the rank at which a text chunk appears, rather than only whether it appears in the top k chunks at all.
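A small sketch can make the difference concrete. Below, two rankings contain the same three chunks in different orders: an order-unaware measure (precision over the top k) cannot tell them apart, while an order-aware one can. Reciprocal rank is used here purely as an example of an order-aware measure and is not otherwise covered in this post; the function names and chunk labels are hypothetical.

```python
def precision_at_k_ids(ranked_ids, relevant_ids, k):
    """Order-unaware: fraction of the top-k items that are relevant."""
    return sum(1 for item in ranked_ids[:k] if item in relevant_ids) / k


def reciprocal_rank(ranked_ids, relevant_ids):
    """Order-aware: 1 / rank of the first relevant item (0.0 if none is retrieved)."""
    for rank, item in enumerate(ranked_ids, start=1):
        if item in relevant_ids:
            return 1 / rank
    return 0.0


relevant = {"chunk_a"}
ranking_1 = ["chunk_a", "chunk_b", "chunk_c"]  # relevant chunk ranked first
ranking_2 = ["chunk_c", "chunk_b", "chunk_a"]  # same chunks, relevant one ranked last

# Same top-3 set, so the order-unaware score is identical...
print(precision_at_k_ids(ranking_1, relevant, 3), precision_at_k_ids(ranking_2, relevant, 3))
# ...but the order-aware score drops when the relevant chunk moves down the list.
print(reciprocal_rank(ranking_1, relevant), reciprocal_rank(ranking_2, relevant))
```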

All retrieval evaluation measures can be calculated for different values of k; thus, we denote them as ‘some measure’@k, like HitRate@k or Precision@k (duh!). Anyway, in the rest of this post, I’ll be exploring some basic binary, order-unaware retrieval evaluation metrics.

. . .

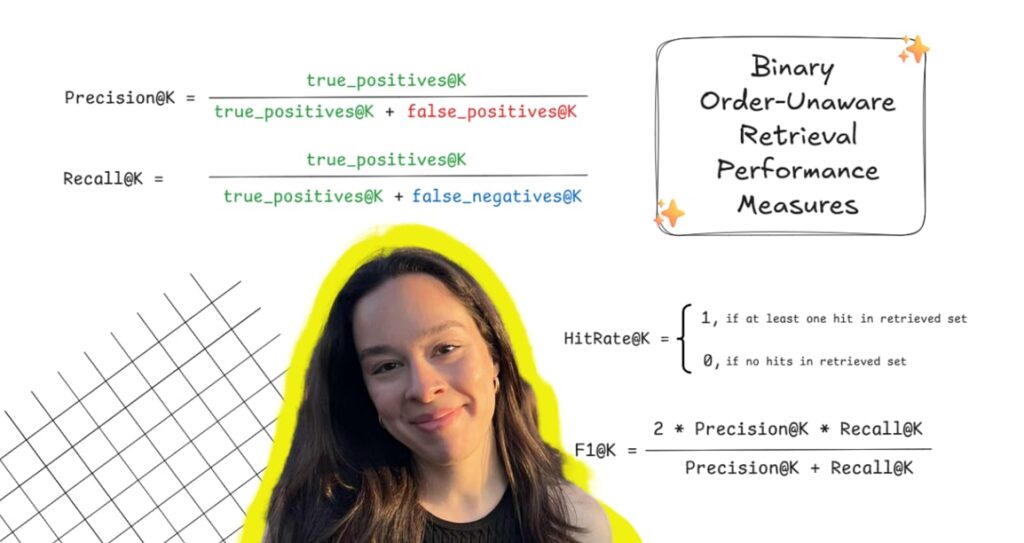

Some order-unaware, binary measures

Binary, order-unaware measures for evaluating retrieval are the most straightforward and intuitive to understand. Thus, they are a great starting point for getting our heads around what exactly we are trying to measure and evaluate. Some common and useful binary, order-unaware measures are HitRate@k, Recall@k, Precision@k, and F1@k. Let’s look at each of these in more detail.

🎯 HitRate@K

HitRate@K is the simplest of all measures for evaluating retrieval. It’s a binary measure indicating whether there is at least one relevant result in the top k retrieved chunks or not. Thus, it can only take two values: either 1 (if at least one relevant document exists in the retrieved set), or 0 (if none of the retrieved documents is actually relevant). It really is the most basic measure of success one can imagine: at least hitting the target with something. For a single query and its retrieved set of results, HitRate@k can be calculated as follows:
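Writing R_k for the set of top-k retrieved chunks and G for the set of chunks that are truly relevant to the query (notation assumed here for illustration), a standard way to write this is:

```latex
\mathrm{HitRate@}k =
\begin{cases}
1, & \text{if } |R_k \cap G| \geq 1 \\
0, & \text{otherwise}
\end{cases}
```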

In this way, we can calculate a separate hit rate for every query and its retrieved results in a test set, and finally, calculate the average HitRate@K over the entire test set.
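As a minimal sketch of that averaging step, assuming each query’s results are available as a ranked list of chunk IDs plus a set of ground-truth IDs (the function names and the three-query test set below are hypothetical, and ID matching stands in for the text matching used later in this post):

```python
def hit_at_k_ids(retrieved_ids, relevant_ids, k):
    """1 if at least one relevant chunk appears in the top k, else 0."""
    return int(any(doc_id in relevant_ids for doc_id in retrieved_ids[:k]))


def mean_hit_rate_at_k(results, k):
    """Average HitRate@k over a test set of (retrieved_ids, relevant_ids) pairs."""
    scores = [hit_at_k_ids(retrieved, relevant, k) for retrieved, relevant in results]
    return sum(scores) / len(scores)


# Hypothetical test set with three queries (chunk IDs are made up)
test_results = [
    ([4, 8, 1], {1, 9}),  # hit: chunk 1 is in the top 3
    ([3, 2, 7], {5}),     # miss
    ([6, 0, 2], {6}),     # hit: chunk 6 is ranked first
]

print(mean_hit_rate_at_k(test_results, k=3))  # 2 hits out of 3 queries
```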

Arguably, Hit Rate is the simplest, most straightforward, and easiest retrieval metric to calculate; thus, it provides a good starting point for evaluating the retrieval step of our RAG pipeline.

🎯 Recall@K

Recall@K expresses what fraction of all the relevant documents appear within the top k retrieved documents. In essence, it evaluates how well we did at avoiding False Negatives. Recall@k is calculated as follows:
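With R_k as the set of top-k retrieved chunks and G as the set of ground-truth relevant chunks for the query (notation assumed here), the standard definition is:

```latex
\mathrm{Recall@}k = \frac{|R_k \cap G|}{|G|}
```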

Thus, it can range from 0 to 1, with 0 indicating that none of the relevant documents made it into the top k, and 1 indicating that all of them did (no False Negatives). It’s like asking ‘Out of all the relevant items that existed, how many did we get?’. It indicates how many of all the truly relevant results were successfully retrieved in the top k.

Recall focuses on the quantity of the retrieved results: out of all the relevant results, how many did we manage to find? Thus, it works well as a retrieval measure for scenarios where we need to find as many relevant results as possible, even at the cost of retrieving some irrelevant ones along with them.

Thus, the higher the Recall@k we achieve, the more of the relevant documents that really exist we have retrieved with the vector search. On the contrary, retrieving documents with a poor Recall@k score is a rather bad start for the retrieval step of our RAG pipeline: if the appropriate documents and relevant information aren’t there in the first place, no magical reranking or LLM is going to fix the situation.

🎯 Precision@K

Precision@k indicates how many of the top k retrieved documents are indeed relevant. In essence, it evaluates how well we did at not including False Positives. Precision@k can be calculated as follows:
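With R_k as the set of top-k retrieved chunks and G as the set of ground-truth relevant chunks for the query (notation assumed here), the standard definition is below; dividing by k matches the `hits / k` computed by `precision_at_k` later in this post:

```latex
\mathrm{Precision@}k = \frac{|R_k \cap G|}{k}
```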

In other words, precision is the answer to the question “Out of the items that we retrieved, how many are correct?”. It indicates how many of the top k results are truly relevant. As a result, it can range from 0 to 1, with 0 indicating that we only retrieved irrelevant results, and 1 indicating that we only retrieved relevant results (no irrelevant results retrieved, so no False Positives).

Thereby, Precision@k mostly emphasizes every retrieved result being valid, rather than exhaustively finding every relevant result. In other words, Precision@k can serve well as a retrieval measure for scenarios that value the quality of retrieved results over their quantity. That is, retrieving results that we’re sure are relevant, even if this means we miss some relevant results along the way.

🎯 F1@K

But what if we need both correct and complete results? What if we need the retrieved set to score high in both Recall and Precision? To achieve this, Recall@K and Precision@K can be combined into a single measure called F1@K, giving us a score that simultaneously balances the validity and completeness of the retrieved results. In particular, F1@k can be calculated as follows:
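This is the harmonic mean of the two measures (conventionally set to 0 when both are 0), and it is the same expression implemented by `f1_at_k` later in this post:

```latex
\mathrm{F1@}k = 2 \cdot \frac{\mathrm{Precision@}k \cdot \mathrm{Recall@}k}{\mathrm{Precision@}k + \mathrm{Recall@}k}
```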

Again, F1@k can range from 0 to 1. An F1@k value close to 1 indicates that both Precision@k and Recall@k are high, meaning the retrieved results are both accurate and comprehensive. On the flip side, an F1@k value close to zero indicates that either Precision@k or Recall@k is low, or even both. In this way, F1@k serves as an effective single metric for evaluating balanced retrieval, since it will only be high when both precision and recall are high.
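To see why F1 is only high when both components are high, here is a small sketch comparing it against a plain arithmetic mean for a lopsided retriever (the precision and recall values are hypothetical, chosen only to illustrate the effect):

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are 0)."""
    return 2 * precision * recall / (precision + recall) if (precision + recall) > 0 else 0.0


def arithmetic_mean(precision, recall):
    """Simple average, shown only for comparison."""
    return (precision + recall) / 2


# Lopsided case: every retrieved chunk is relevant, but most relevant chunks are missed
p, r = 1.0, 0.1
print(round(arithmetic_mean(p, r), 3))  # 0.55
print(round(f1_score(p, r), 3))         # 0.182
```

The arithmetic mean would make this retriever look mediocre, whereas the harmonic mean is pulled down toward the weaker of the two scores.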

. . .

So, is our vector search any good?

So now let’s see how all these play out in the ‘War and Peace’ example by answering one more time my favorite question: ‘Who is Anna Pávlovna?’. As in my previous posts, I’ll once again be using the War and Peace text as an example, licensed as Public Domain and easily accessible through Project Gutenberg. Our code so far looks like this:

import os
import numpy as np
import torch
import faiss
from sentence_transformers import CrossEncoder
from langchain.chat_models import ChatOpenAI
from langchain.document_loaders import TextLoader
from langchain.embeddings import OpenAIEmbeddings
from langchain.vectorstores import FAISS
from langchain.text_splitter import RecursiveCharacterTextSplitter
from langchain.docstore.document import Document

api_key = "your_api_key"

#%%

# initialize LLM
llm = ChatOpenAI(openai_api_key=api_key, model="gpt-4o-mini", temperature=0.3)

# initialize cross-encoder model
cross_encoder = CrossEncoder('cross-encoder/ms-marco-TinyBERT-L-2', device='cuda' if torch.cuda.is_available() else 'cpu')

def rerank_with_cross_encoder(query, relevant_docs):
    pairs = [(query, doc.page_content) for doc in relevant_docs]  # (query, document) pairs for the cross-encoder
    scores = cross_encoder.predict(pairs)  # relevance scores from the cross-encoder model
    ranked_indices = np.argsort(scores)[::-1]  # sort documents by cross-encoder score (the higher, the better)
    ranked_docs = [relevant_docs[i] for i in ranked_indices]
    ranked_scores = [scores[i] for i in ranked_indices]
    return ranked_docs, ranked_scores

# initialize embeddings model
embeddings = OpenAIEmbeddings(openai_api_key=api_key)

# load documents to be used for RAG
text_folder = "RAG files"
documents = []
for filename in os.listdir(text_folder):
    if filename.lower().endswith(".txt"):
        file_path = os.path.join(text_folder, filename)
        loader = TextLoader(file_path)
        documents.extend(loader.load())

# split documents into overlapping chunks
splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=100)
split_docs = []
for doc in documents:
    chunks = splitter.split_text(doc.page_content)
    for chunk in chunks:
        split_docs.append(Document(page_content=chunk))
documents = split_docs

# normalize knowledge base embeddings
def normalize(vectors):
    vectors = np.array(vectors, dtype="float32")  # FAISS expects float32 arrays
    norms = np.linalg.norm(vectors, axis=1, keepdims=True)
    return vectors / norms

doc_texts = [doc.page_content for doc in documents]
doc_embeddings = embeddings.embed_documents(doc_texts)
doc_embeddings = normalize(doc_embeddings)

# FAISS index with inner product
dimension = doc_embeddings.shape[1]
index = faiss.IndexFlatIP(dimension)  # inner product index
index.add(doc_embeddings)

# create vector database with FAISS
vector_store = FAISS(embedding_function=embeddings, index=index, docstore=None, index_to_docstore_id=None)
vector_store.docstore = {i: doc for i, doc in enumerate(documents)}

def main():
    print("Welcome to the RAG Assistant. Type 'exit' to quit.\n")
    while True:
        user_input = input("You: ").strip()
        if user_input.lower() == "exit":
            print("Exiting…")
            break

        # embed + normalize query
        query_embedding = embeddings.embed_query(user_input)
        query_embedding = normalize([query_embedding])

        k_ = 10
        # search FAISS index
        D, I = index.search(query_embedding, k=k_)

        # get relevant documents
        relevant_docs = [vector_store.docstore[i] for i in I[0]]

        # rerank with our function
        reranked_docs, reranked_scores = rerank_with_cross_encoder(user_input, relevant_docs)

        # get top reranked chunks
        retrieved_context = "\n\n".join([doc.page_content for doc in reranked_docs[:5]])

        # D contains inner product scores == cosine similarities (since normalized)
        print("\nTop chunks and their cosine similarity scores:\n")
        for rank, (idx, score) in enumerate(zip(I[0], D[0]), start=1):
            print(f"Chunk {rank}:")
            print(f"Cosine similarity: {score:.4f}")
            print(f"Content:\n{vector_store.docstore[idx].page_content}\n{'-'*40}")

        # system prompt
        system_prompt = (
            "You are a helpful assistant. "
            "Use ONLY the following knowledge base context to answer the user. "
            "If the answer is not in the context, say you don't know.\n\n"
            f"Context:\n{retrieved_context}"
        )

        # messages for LLM
        messages = [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_input}
        ]

        # generate response
        response = llm.invoke(messages)
        assistant_message = response.content.strip()
        print(f"\nAssistant: {assistant_message}\n")

if __name__ == "__main__":
    main()

Let’s tweak it a little bit to calculate some retrieval measures.

First of all, we can add the following section at the beginning of our script in order to define the retrieval evaluation metrics we want to calculate:

#%% retrieval evaluation metrics

# function to normalize text (lowercase, collapse whitespace) before matching
def normalize_text(text):
    return " ".join(text.lower().split())

# Hit Rate @ K: True if at least one of the top k chunks matches a ground truth chunk
def hit_rate_at_k(retrieved_docs, ground_truth_texts, k):
    for doc in retrieved_docs[:k]:
        doc_norm = normalize_text(doc.page_content)
        if any(normalize_text(gt) in doc_norm or doc_norm in normalize_text(gt) for gt in ground_truth_texts):
            return True
    return False

# Precision @ K: fraction of the top k chunks that match some ground truth chunk
def precision_at_k(retrieved_docs, ground_truth_texts, k):
    hits = 0
    for doc in retrieved_docs[:k]:
        doc_norm = normalize_text(doc.page_content)
        if any(normalize_text(gt) in doc_norm or doc_norm in normalize_text(gt) for gt in ground_truth_texts):
            hits += 1
    return hits / k

# Recall @ K: fraction of ground truth chunks found among the top k chunks
def recall_at_k(retrieved_docs, ground_truth_texts, k):
    matched = set()
    for i, gt in enumerate(ground_truth_texts):
        gt_norm = normalize_text(gt)
        for doc in retrieved_docs[:k]:
            doc_norm = normalize_text(doc.page_content)
            if gt_norm in doc_norm or doc_norm in gt_norm:
                matched.add(i)
                break
    return len(matched) / len(ground_truth_texts) if ground_truth_texts else 0

# F1 @ K: harmonic mean of precision and recall
def f1_at_k(precision, recall):
    return 2 * precision * recall / (precision + recall) if (precision + recall) > 0 else 0

In order to calculate any of these evaluation metrics, we first need to define a set of queries and their truly relevant chunks. This is a rather laborious exercise; thus, I will only be demonstrating the process for one query (‘Who is Anna Pávlovna?’) and the relevant text chunks that should ideally be retrieved for it. In any case, this information, whether for just one query or for a real-life evaluation set, can be defined in the form of a ground truth dictionary, mapping the various test queries to their expected relevant text chunks.

In particular, we can consider that the relevant chunks that should be included in the ground truth dictionary for our query ‘Who is Anna Pávlovna?’ are the following:

- “It was in July, 1805, and the speaker was the well-known Anna Pávlovna Schérer, maid of honor and favorite of the Empress Márya Fëdorovna. With these words she greeted Prince Vasíli Kurágin, a man of high rank and importance, who was the first to arrive at her reception. Anna Pávlovna had had a cough for some days. She was, as she said, suffering from la grippe; grippe being then a new word in St. Petersburg, used only by the elite. All her invitations without exception, written in French, and delivered by a scarlet-liveried footman that morning, ran as follows: “If you have nothing better to do, Count (or Prince), and if the prospect of spending an evening with a poor invalid is not too terrible, I shall be very charmed to see you tonight between 7 and 10—Annette Schérer.””

- “Anna Pávlovna’s “At Home” was like the former one, only the novelty she offered her guests this time was not Mortemart, but a diplomatist fresh from Berlin with the very latest details of the Emperor Alexander’s visit to Potsdam, and of how the two august friends had pledged themselves in an indissoluble alliance to uphold the cause of justice against the enemy of the human race. Anna Pávlovna received Pierre with a shade of melancholy, evidently relating to the young man’s recent loss by the death of Count Bezúkhov (everyone constantly considered it a duty to assure Pierre that he was greatly afflicted by the death of the father he had hardly known), and her melancholy was just like the august melancholy she showed at the mention of her most august Majesty the Empress Márya Fëdorovna. Pierre felt flattered by this. Anna Pávlovna arranged the different groups in her drawing room with her habitual skill. The large group, in which were”

- “drawing room with her habitual skill. The large group, in which were Prince Vasíli and the generals, had the benefit of the diplomat. Another group was at the tea table. Pierre wished to join the former, but Anna Pávlovna—who was in the excited condition of a commander on a battlefield to whom thousands of new and brilliant ideas occur which there is hardly time to put in action—seeing Pierre, touched his sleeve with her finger, saying:”

In this way, we can define the ground truth for this one query, that is, the chunks containing the information that answers the question, as follows:

query = "Who is Anna Pávlovna?"

ground_truth_texts = [

"It was in July, 1805, and the speaker was the well-known Anna Pávlovna Schérer, maid of honor and favorite of the Empress Márya Fëdorovna. With these words she greeted Prince Vasíli Kurágin, a man of high rank and importance, who was the first to arrive at her reception. Anna Pávlovna had had a cough for some days. She was, as she said, suffering from la grippe; grippe being then a new word in St. Petersburg, used only by the elite. All her invitations without exception, written in French, and delivered by a scarlet-liveried footman that morning, ran as follows: “If you have nothing better to do, Count (or Prince), and if the prospect of spending an evening with a poor invalid is not too terrible, I shall be very charmed to see you tonight between 7 and 10—Annette Schérer.”",

"Anna Pávlovna’s “At Home” was like the former one, only the novelty she offered her guests this time was not Mortemart, but a diplomatist fresh from Berlin with the very latest details of the Emperor Alexander’s visit to Potsdam, and of how the two august friends had pledged themselves in an indissoluble alliance to uphold the cause of justice against the enemy of the human race. Anna Pávlovna received Pierre with a shade of melancholy, evidently relating to the young man’s recent loss by the death of Count Bezúkhov (everyone constantly considered it a duty to assure Pierre that he was greatly afflicted by the death of the father he had hardly known), and her melancholy was just like the august melancholy she showed at the mention of her most august Majesty the Empress Márya Fëdorovna. Pierre felt flattered by this. Anna Pávlovna arranged the different groups in her drawing room with her habitual skill. The large group, in which were",

"drawing room with her habitual skill. The large group, in which were Prince Vasíli and the generals, had the benefit of the diplomat. Another group was at the tea table. Pierre wished to join the former, but Anna Pávlovna—who was in the excited condition of a commander on a battlefield to whom thousands of new and brilliant ideas occur which there is hardly time to put in action—seeing Pierre, touched his sleeve with her finger, saying:"

]

Finally, we can also add the following section to our main() function in order to calculate and display the evaluation metrics:

        ...
        k_ = 10
        # search FAISS index
        D, I = index.search(query_embedding, k=k_)

        # get relevant documents
        relevant_docs = [vector_store.docstore[i] for i in I[0]]

        # rerank with our function
        reranked_docs, reranked_scores = rerank_with_cross_encoder(user_input, relevant_docs)

        # -- NEW SECTION --
        # evaluate reranked docs using the metrics
        top_k_docs = reranked_docs[:k_]  # or change k_ as needed
        precision = precision_at_k(top_k_docs, ground_truth_texts, k=k_)
        recall = recall_at_k(top_k_docs, ground_truth_texts, k=k_)
        f1 = f1_at_k(precision, recall)
        hit = hit_rate_at_k(top_k_docs, ground_truth_texts, k=k_)

        print("\n--- Retrieval Evaluation Metrics ---")
        print(f"Hit@{k_}: {hit}")
        print(f"Precision@{k_}: {precision:.2f}")
        print(f"Recall@{k_}: {recall:.2f}")
        print(f"F1@{k_}: {f1:.2f}")
        print("-" * 40)
        # -- NEW SECTION --

        # get top reranked chunks
        retrieved_context = "\n\n".join([doc.page_content for doc in reranked_docs[:2]])
        ...

Notice that we run the evaluation after reranking. Since the metrics we calculate (HitRate@k, Precision@k, Recall@k, and F1@k) are order-unaware, evaluating them on the top k retrieved chunks before or after reranking yields the same results, as long as the set of top k items remains the same.

So, for our question ‘Who is Anna Pávlovna?’ and @k = 10, we get the following scores:

But what do these scores mean?

- @k = 10, meaning that we calculate all evaluation metrics on the top 10 retrieved chunks.

- Hit@10 = True, meaning that at least one of the correct (ground truth) chunks was found in the top 10 retrieved chunks.

- Precision@10 = 0.20, meaning that out of the 10 retrieved chunks, only 2 were correct (0.20 = 2/10). In other words, the retriever also brought back irrelevant information; only 20% of what it retrieved was actually useful.

- Recall@10 = 0.67, meaning that we retrieved 67% of all the relevant chunks in the ground truth (2 of the 3) within the top 10 documents.

- F1@10 = 0.31, indicating the overall retrieval quality combining both precision and recall. An F1 score of 0.31 indicates moderate performance, and we know that this is due to decent recall but low precision.
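As a quick check, plugging the exact fractions behind these numbers (2 relevant chunks out of 10 retrieved, and 2 of the 3 ground truth chunks found) into the F1 formula reproduces the reported F1@10:

```latex
\mathrm{F1@}10 = 2 \cdot \frac{\frac{2}{10} \cdot \frac{2}{3}}{\frac{2}{10} + \frac{2}{3}}
= \frac{4/15}{13/15} = \frac{4}{13} \approx 0.31
```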

As mentioned earlier, we can calculate these metrics for any k, although it only makes sense to calculate them for values of k at least as large as the number of ground truth chunks for the query; otherwise, Recall@k can never reach 1. In this way, we can experiment with different values of k and understand how our retrieval system performs as we expand or narrow the scope of the retrieved results.
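A minimal sketch of such a sweep is shown below. It assumes chunks are identified by distinct integer IDs rather than matched by text as in the functions above, and both the ranked list and the ground truth set are hypothetical. With 3 ground truth chunks, k starts at 3.

```python
def precision_recall_at_k_ids(retrieved_ids, relevant_ids, k):
    """Precision@k and Recall@k for one query; assumes retrieved IDs are distinct."""
    hits = sum(1 for doc_id in retrieved_ids[:k] if doc_id in relevant_ids)
    precision = hits / k
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    return precision, recall


# Hypothetical ranked retrieval output and ground truth for one query
retrieved = [3, 10, 7, 15, 16, 17, 18, 19, 21, 20]
relevant = {3, 7, 21}

for k in (3, 5, 10):
    p, r = precision_recall_at_k_ids(retrieved, relevant, k)
    print(f"k={k:>2}  Precision@k={p:.2f}  Recall@k={r:.2f}")
# k= 3  Precision@k=0.67  Recall@k=0.67
# k= 5  Precision@k=0.40  Recall@k=0.67
# k=10  Precision@k=0.30  Recall@k=1.00
```

Widening k lets recall climb to 1.0 while precision keeps falling, which is the False Negative versus False Positive trade-off discussed at the start of this post.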

On my mind

While metrics like Precision@K, Recall@K, and F1@K can be computed for a single query and its corresponding set of retrieved chunks (like we did here), in a real-world evaluation they are typically computed over a set of queries, known as the test set. More precisely, each query in the test set is associated with its own set of ground truth relevant chunks. We then calculate the retrieval metrics individually for each query and average the results across all queries.
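A minimal sketch of this procedure follows, assuming chunk IDs rather than the text matching used above. The function names `evaluate_query` and `evaluate_test_set`, the query strings, and the IDs are all hypothetical. It macro-averages, so each query counts equally regardless of how many relevant chunks it has.

```python
from statistics import mean


def evaluate_query(retrieved_ids, relevant_ids, k):
    """Binary, order-unaware metrics for one query at cutoff k."""
    hits = len(set(retrieved_ids[:k]) & relevant_ids)
    precision = hits / k
    recall = hits / len(relevant_ids)
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return {"hit": float(hits > 0), "precision": precision, "recall": recall, "f1": f1}


def evaluate_test_set(ground_truth, retrieval_results, k):
    """Compute metrics per query, then average each metric across all queries."""
    per_query = [evaluate_query(retrieval_results[q], ground_truth[q], k) for q in ground_truth]
    return {metric: mean(scores[metric] for scores in per_query) for metric in per_query[0]}


# Hypothetical ground truth dictionary: query -> IDs of truly relevant chunks
ground_truth = {
    "query A": {1, 2},
    "query B": {7},
}
# Hypothetical retriever output: query -> ranked chunk IDs
retrieval_results = {
    "query A": [1, 5, 6, 2],
    "query B": [3, 4, 8, 9],
}

print(evaluate_test_set(ground_truth, retrieval_results, k=4))
```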

Ultimately, understanding what the various retrieval metrics mean is really important for effectively evaluating and fine-tuning a RAG pipeline. Most importantly, an effective retrieval mechanism (one that finds the right documents) is the foundation for generating meaningful answers with a RAG setup.

. . .

Loved this post? Let’s be friends! Join me on:

📰Substack 💌 Medium 💼LinkedIn ☕Buy me a coffee!

. . .

What about pialgorithms?

Looking to bring the power of RAG into your organization?

pialgorithms can do it for you 👉 book a demo today