It happens to every project manager: the stand-up meeting begins and suddenly a critical ticket is blocked, a developer calls in sick, a dependency slips, or a key feature is delayed. Instantly, your carefully planned timeline starts to fall apart, and you’re scrambling for solutions.

In this article, we’ll explore how a machine learning model predicted 41% of project delays before they hit the timeline, cutting costs and reducing last-minute firefighting.

The problem: 62% of IT projects miss their deadlines in 2025

As a Project Manager working with Agile teams, I’ve often dealt with delays and blockers; they quickly became part of everyday life. But when I came across the 2025 Wellington State of Project Management study revealing that 62% of IT projects miss their deadlines in 2025, it shocked me into action. That is an increase from the 2017 PMI Pulse of the Profession study, where the figure was 51%. Project delays are reaching a critical level.

I know delays are common, but I hadn’t imagined the rate would be that high. Today, however, we have tools to anticipate and better understand these risks. Using Python and data science, I built a model to predict project delays before they happened.

This statistic highlights two critical points: delays often stem from recurring causes, and they carry a huge business impact. In this article, we’ll explore how data-driven approaches can uncover these causes and help project managers anticipate them.

With this knowledge, we can choose the best course of action.

This is where data science comes in. Surprisingly, the 2020 Wellington State of Project Management report states that only 23% of companies use project management software to manage their projects, even though these tools generate a wealth of valuable data.

By analyzing information from project tickets, we can build predictive machine learning models that highlight potential risks before they escalate.

That’s exactly what I did: I analyzed more than 5,000 tickets, not only from my current project but also from past projects.

Project management software, it turns out, is an incredible source of data waiting to be leveraged.

The Data Gap in Project Management

In traditional project management, reporting plays a central role, yet few reports offer a comprehensive, detailed retrospective of the project as a whole.

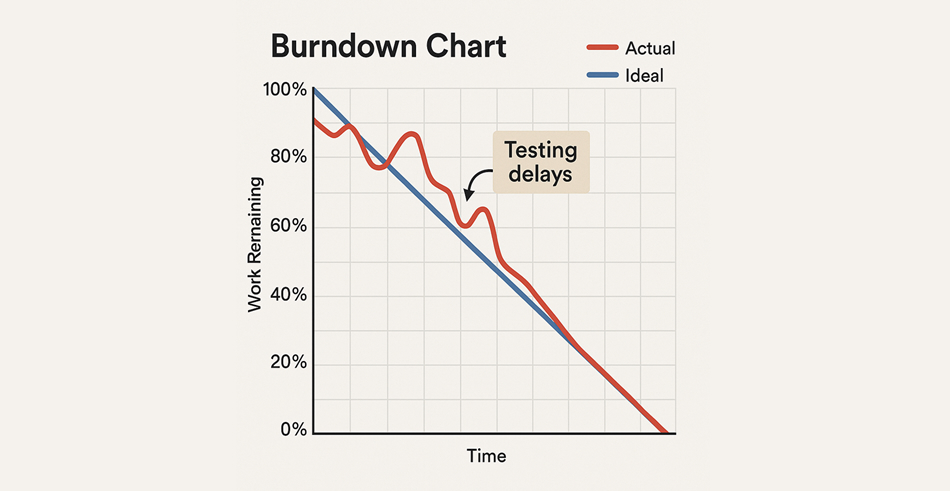

For example, in Scrum, we track our velocity, follow the trend of our burndown chart, and measure the number of story points completed.
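To make these standard Scrum metrics concrete, here is a minimal pandas sketch. The data is hypothetical: velocity is the sum of story points completed per sprint, and the burndown is the committed scope minus the cumulative points completed each day. Column names such as `sprint` and `story_points` are illustrative assumptions, not the author's schema.

```python
import pandas as pd

# Hypothetical closed tickets: one row per ticket, with the sprint it was completed in
done = pd.DataFrame({
    "sprint": [1, 1, 1, 2, 2, 3, 3, 3],
    "story_points": [3, 5, 2, 8, 3, 5, 5, 1],
})

# Velocity: story points completed per sprint, and the running average
velocity = done.groupby("sprint")["story_points"].sum()
avg_velocity = velocity.mean()

# Burndown for a single sprint: remaining points at the end of each day
committed = 20
completed_per_day = [0, 3, 5, 0, 4, 6]  # hypothetical daily completions
remaining = committed - pd.Series(completed_per_day).cumsum()

print(velocity)
print(f"Average velocity: {avg_velocity:.1f}")
print(remaining.tolist())
```

Metrics like these describe the pace of work. They don't explain why a particular ticket slipped, and that is the gap the rest of this article addresses.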

Traditional reporting still fails to give us the whole picture. Data science can.

As Project Managers, we may know from experience where the critical points lie, but validating these assumptions with data makes our decisions far more reliable.

Building the Dataset

To explore this idea, I analyzed 5,000 Jira tickets, one of the richest sources of project data available.

Since real project data can’t always be shared, I generated a synthetic dataset in Python that mirrors reality, including key variables such as priority, story points, team size, dependencies, and delay.

Code by author
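The author's generator isn't reproduced in the text. Below is a minimal sketch of how such a synthetic dataset could be built with NumPy and pandas. Every distribution, the `PROJ-` ticket keys, and the logistic delay mechanism (odds of delay rising with priority, story points, and dependencies) are assumptions chosen for illustration.

```python
import numpy as np
import pandas as pd

def make_synthetic_tickets(n: int = 5000, seed: int = 42) -> pd.DataFrame:
    """Hypothetical Jira-like tickets; all distributions and coefficients are assumptions."""
    rng = np.random.default_rng(seed)
    levels = np.array(["Low", "Medium", "High", "Critical"])
    priority_level = rng.choice([1, 2, 3, 4], size=n, p=[0.35, 0.40, 0.18, 0.07])
    story_points = rng.choice([1, 2, 3, 5, 8, 13], size=n, p=[0.15, 0.25, 0.25, 0.20, 0.10, 0.05])
    team_size = rng.integers(3, 9, size=n)        # 3 to 8 people
    dependencies = rng.poisson(lam=1.0, size=n)   # number of blocking tickets

    # Assumed logistic model: delay odds rise with priority, size and dependencies
    logit = -3.0 + 0.5 * priority_level + 0.08 * story_points + 0.3 * dependencies
    delayed = (rng.random(n) < 1.0 / (1.0 + np.exp(-logit))).astype(int)

    return pd.DataFrame({
        "ticket_id": [f"PROJ-{i + 1}" for i in range(n)],
        "priority": levels[priority_level - 1],
        "story_points": story_points,
        "team_size": team_size,
        "dependencies": dependencies,
        "delayed": delayed,
    })

tickets = make_synthetic_tickets()
print(tickets["priority"].value_counts())
print(f"Delay rate: {tickets['delayed'].mean():.1%}")
```

Because the delay mechanism is written into the generator, any pattern the later analysis "finds" partly reflects these assumptions. Keep that in mind when interpreting results on synthetic data.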

Having built a realistic dataset, we can now explore the different ticket profiles it contains. This sets the stage for our exploratory data analysis.

Most tickets are low or medium priority, which is consistent with how project backlogs are usually structured. This initial distribution already hints at where risks might accumulate, a point we’ll explore further in the EDA.

While high- and critical-priority tickets represent a smaller share of the total, they are disproportionately more likely to be delayed.

This bar plot confirms the phenomenon: high-priority tickets are strongly associated with delays. However, this may stem from two different dynamics:

- High-priority tickets are inherently more complex and therefore at greater risk of delay.

- Some tickets only become high priority because they were delayed in the first place, creating a vicious cycle of escalation.
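The bar plot is built on a simple aggregate: the share of delayed tickets within each priority level. Here is a minimal sketch on a hypothetical eight-ticket sample. The real dataset has about 5,000 rows, and the column names are assumptions.

```python
import pandas as pd

# Hypothetical toy tickets (1 = delayed)
tickets = pd.DataFrame({
    "priority": ["Low", "Low", "Medium", "Medium", "Medium", "High", "High", "Critical"],
    "delayed":  [0, 0, 0, 1, 0, 1, 1, 1],
})

order = ["Low", "Medium", "High", "Critical"]
# Mean of a 0/1 column = delay rate per priority level
delay_rate = tickets.groupby("priority")["delayed"].mean().reindex(order)
print(delay_rate)
```

If matplotlib is installed, `delay_rate.plot.bar()` draws the corresponding chart. Note that a cross-sectional rate like this cannot tell the two dynamics above apart. Separating them would require the ticket's priority history, for example its priority at creation versus when it was closed.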

With this simulated dataset, we now have a realistic snapshot of what happens in real projects: tickets vary in size, dependencies, and complexity, and some inevitably end up delayed. This reflects the everyday challenges project managers face.

The next step is to move beyond simple counts and uncover the patterns hidden in the data. Through Exploratory Data Analysis (EDA), we can test our assumptions: do higher priorities and more dependencies really increase the likelihood of delay? Let’s find out.

Exploratory Data Analysis (EDA)

Before moving to modeling, it’s important to step back and visualize how our variables interact. Exploratory Data Analysis (EDA) allows us to uncover patterns in:

- How delays vary with priority.

- The impact of dependencies.

- The distribution of story points.

- The typical team sizes handling the tickets.
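Before plotting, the remaining three checks can be summarized numerically. This sketch uses a hypothetical ten-ticket sample. It computes the delay rate by dependency count (with three or more grouped together, an arbitrary choice), the story point distribution, and the median team size.

```python
import pandas as pd

# Hypothetical toy tickets; column names mirror the variables described in the article
tickets = pd.DataFrame({
    "story_points": [1, 2, 3, 3, 5, 8, 2, 13, 1, 5],
    "team_size":    [5, 5, 4, 6, 5, 7, 5, 5, 3, 5],
    "dependencies": [0, 0, 1, 2, 0, 3, 1, 4, 0, 2],
    "delayed":      [0, 0, 0, 1, 0, 1, 1, 1, 0, 1],
})

# Delay rate by number of dependencies, grouping 3 or more into one bucket
dep_bucket = tickets["dependencies"].clip(upper=3)
delay_by_deps = tickets.groupby(dep_bucket)["delayed"].mean()

# Distribution of story points and the typical team size
sp_counts = tickets["story_points"].value_counts().sort_index()
typical_team = tickets["team_size"].median()

print(delay_by_deps)
print(sp_counts)
print(f"Median team size: {typical_team}")
```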

This chart confirms a key intuition: the higher the priority, the greater the likelihood of delay.

Dependencies amplify this effect: the more there are, the higher the chances of something slipping.

Once a delay, or a risk of delay, appears, escalation mechanisms push the priority even higher, creating a feedback loop.

Finally, ticket complexity also plays a role, adding another layer of uncertainty.

Most tickets fall into the medium-risk category. These demand the most attention from project managers: while not critical at first, their risk can quickly escalate and trigger delays.

High-risk tickets, though fewer, carry a disproportionate impact if not managed early.

Meanwhile, low-risk tickets usually require lighter monitoring, allowing managers to focus their time where it really matters.
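The article doesn't state how tickets were assigned to low, medium, and high risk. One simple approach is to bin a continuous risk score with `pd.cut`. In this sketch, the cut-offs (up to 0.3 is low, above 0.3 and up to 0.6 is medium, above 0.6 is high) and the scores themselves are hypothetical.

```python
import numpy as np
import pandas as pd

# Hypothetical risk scores, e.g. predicted delay probabilities in [0, 1]
scores = pd.Series([0.05, 0.22, 0.35, 0.41, 0.48, 0.55, 0.62, 0.81], name="risk_score")

labels = ["low", "medium", "high"]
# Assumed cut-offs; bins are right-inclusive: (-inf, 0.3], (0.3, 0.6], (0.6, inf)
risk_band = pd.cut(scores, bins=[-np.inf, 0.3, 0.6, np.inf], labels=labels)

# sort=False keeps the categories in low/medium/high order
counts = risk_band.value_counts(sort=False)
print(counts)
```

Cut-offs like these are a policy choice rather than a property of the model. A team might instead set them from monitoring capacity, for example "the top N tickets we can actually review each sprint".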

We also notice that most tickets have small story point sizes, and teams usually have around five members.

This suggests that agile practices are generally being followed.

Next, we’ll go further and look at the distribution of risk scores across tickets.

We see that only a small portion of tickets carry a very high risk, while most sit in the medium zone. This suggests that by focusing early on the riskiest tickets, Project Managers could prevent many delays.

To test this assumption, let’s now explore how complexity per person and priority interact with risk scores.

We can’t observe a clear trend here. The risk score doesn’t seem to depend strongly on either ticket complexity or priority, suggesting that other hidden factors may drive delays.
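A visual impression of "no clear trend" can be cross-checked with a rank correlation. This sketch computes Spearman correlations between a risk score and two drivers on hypothetical data. Spearman is used here as an assumption because it captures monotonic, not just linear, relationships. Values near zero would support the reading above.

```python
import pandas as pd

# Hypothetical scored tickets (risk_score would come from the model)
scored = pd.DataFrame({
    "complexity_per_person": [0.2, 0.4, 1.0, 0.6, 2.6, 0.5, 1.6, 0.25],
    "priority_level":        [1, 2, 3, 1, 4, 2, 3, 1],
    "risk_score":            [0.30, 0.55, 0.25, 0.60, 0.45, 0.35, 0.50, 0.40],
})

# Spearman = Pearson correlation on ranks; robust to non-linear monotonic links
rho = scored.corr(method="spearman")["risk_score"].drop("risk_score")
print(rho.round(2))
```

A weak pairwise correlation does not rule out interactions: a feature can matter only in combination with another, which is one motivation for the engineered features and the tree-based model that follow.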

Technical Deep Dive: Predictive Model

The raw data provides a solid foundation, but domain knowledge is essential to building a truly robust model. To better capture the dynamics of real-world projects, we engineered new features that reflect project management realities:

- Complexity per person = story points / team size.

- Has dependency = whether a ticket depends on others (dependencies > 0).

- Priority-story points interaction = priority level multiplied by story points.

Code by author
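The three engineered features translate directly into pandas column operations. In this sketch, the ordinal priority encoding (Low=1 to Critical=4) and the column names are assumptions, since the article doesn't say how priority was converted to a number.

```python
import pandas as pd

# Assumed ordinal encoding of priority
PRIORITY_LEVEL = {"Low": 1, "Medium": 2, "High": 3, "Critical": 4}

def add_features(tickets: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of `tickets` with the three engineered features added."""
    out = tickets.copy()
    out["complexity_per_person"] = out["story_points"] / out["team_size"]
    out["has_dependency"] = (out["dependencies"] > 0).astype(int)
    out["priority_level"] = out["priority"].map(PRIORITY_LEVEL)
    out["priority_sp_interaction"] = out["priority_level"] * out["story_points"]
    return out

tickets = pd.DataFrame({
    "priority": ["Low", "High", "Critical"],
    "story_points": [2, 5, 8],
    "team_size": [4, 5, 4],
    "dependencies": [0, 2, 1],
})
features = add_features(tickets)
print(features[["complexity_per_person", "has_dependency", "priority_sp_interaction"]])
```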

We chose a Random Forest model because it can handle non-linear relationships and provides insight into feature importance.

Our main focus is on recall for the positive class (1 = delayed). For instance, a recall of 0.6 would mean the model correctly identifies 60% of all truly delayed tickets.

The goal isn’t perfect precision but early detection. In project management, it’s better to flag potential delays, even with some false positives, than to miss critical issues that could derail the entire project.

Code by author
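Here is a minimal, self-contained sketch of the training step with scikit-learn. It uses hypothetical synthetic data, a stratified 70/30 split, and recall on the delayed class as the metric. The hyperparameters are assumptions, not the author's settings. This applies in particular to `class_weight="balanced"`, which nudges the forest toward the minority (delayed) class.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import recall_score
from sklearn.model_selection import train_test_split

# Hypothetical stand-in data; distributions and coefficients are assumptions
rng = np.random.default_rng(42)
n = 5000
df = pd.DataFrame({
    "priority_level": rng.choice([1, 2, 3, 4], size=n, p=[0.35, 0.40, 0.18, 0.07]),
    "story_points": rng.choice([1, 2, 3, 5, 8, 13], size=n),
    "team_size": rng.integers(3, 9, size=n),
    "dependencies": rng.poisson(1.0, size=n),
})
logit = -3.0 + 0.5 * df["priority_level"] + 0.08 * df["story_points"] + 0.3 * df["dependencies"]
df["delayed"] = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

# Engineered features described above
df["complexity_per_person"] = df["story_points"] / df["team_size"]
df["has_dependency"] = (df["dependencies"] > 0).astype(int)
df["priority_sp_interaction"] = df["priority_level"] * df["story_points"]

features = [c for c in df.columns if c != "delayed"]
X_train, X_test, y_train, y_test = train_test_split(
    df[features], df["delayed"], test_size=0.3, stratify=df["delayed"], random_state=42
)

# class_weight="balanced" is an assumed choice that up-weights the delayed class
model = RandomForestClassifier(
    n_estimators=200, class_weight="balanced", min_samples_leaf=5, random_state=42
)
model.fit(X_train, y_train)

recall = recall_score(y_test, model.predict(X_test))  # recall for class 1 (delayed)
print(f"Recall on delayed tickets: {recall:.2f}")
```

On this synthetic data the recall will not match the article's 0.41, because that figure depends on the author's own data and settings.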

The model achieved a recall of 0.41, meaning it successfully detected 41% of the delayed tickets.

This may seem modest. However, in a project management context, even this level of early warning is valuable. It gives Project Managers actionable signals to anticipate risks and prepare mitigations.

With further refinement, the model could be improved to anticipate more delays and help prevent issues before they materialize.
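The article doesn't describe a specific refinement. One common lever, offered here only as an illustration, is the decision threshold. A classifier's `predict` uses 0.5 on the predicted probability, and lowering that cut-off trades precision for recall. The probabilities below are hypothetical.

```python
import numpy as np
from sklearn.metrics import precision_score, recall_score

# Hypothetical predicted delay probabilities and true outcomes (1 = delayed)
proba = np.array([0.10, 0.35, 0.45, 0.55, 0.20, 0.40, 0.70, 0.30])
y_true = np.array([0, 1, 1, 1, 0, 0, 1, 0])

results = {}
for threshold in (0.5, 0.3):
    y_pred = (proba >= threshold).astype(int)  # flag as delayed above the cut-off
    results[threshold] = (recall_score(y_true, y_pred), precision_score(y_true, y_pred))
    print(f"threshold={threshold}: recall={results[threshold][0]:.2f}, "
          f"precision={results[threshold][1]:.2f}")
```

With a real model, the probabilities would come from `model.predict_proba(X)[:, 1]`. The threshold is best chosen on validation data, not on the test set used for final reporting.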

We’ll use a confusion matrix to better understand the model’s strengths and weaknesses.

Code by author
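For a binary problem, scikit-learn's `confusion_matrix` returns a 2×2 array with true labels as rows and predictions as columns. Flattening it with `ravel()` yields the counts in the order true negatives, false positives, false negatives, true positives. The labels in this sketch are hypothetical.

```python
from sklearn.metrics import confusion_matrix

# Hypothetical true outcomes and predictions (1 = delayed)
y_true = [0, 0, 1, 1, 0, 1, 0, 1, 0, 0]
y_pred = [0, 1, 1, 0, 0, 1, 1, 0, 0, 0]

# labels=[0, 1] fixes the row/column order: rows = actual, columns = predicted
tn, fp, fn, tp = confusion_matrix(y_true, y_pred, labels=[0, 1]).ravel()
print(f"TN={tn}, FP={fp}, FN={fn}, TP={tp}")
```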

The model correctly identifies 169 delays, but it also generates 373 false alarms: tasks flagged as delayed that actually finished on time. For a Project Manager, this trade-off is acceptable because it’s better to investigate some false positives than to miss a critical delay. This is part of risk management.

However, the model still misses 245 delayed tickets, meaning its predictions are far from perfect.
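The reported counts can be checked against the stated recall. Recall is TP / (TP + FN), and precision is TP / (TP + FP). True negatives aren't reported, so overall accuracy can't be recomputed from these figures.

```python
# Counts reported from the confusion matrix
tp, fp, fn = 169, 373, 245

recall = tp / (tp + fn)             # share of truly delayed tickets that were caught
precision = tp / (tp + fp)          # share of flagged tickets that were actually delayed
false_alarm_share = fp / (tp + fp)  # share of flags that turned out on time

print(f"recall={recall:.3f}, precision={precision:.3f}, "
      f"false alarms among flags={false_alarm_share:.3f}")
```

The 414 truly delayed tickets (169 + 245) give a recall of about 0.41, consistent with the figure above. Roughly two out of three flags are false alarms, which is the cost of favoring recall.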

Overall, this model works best as an early warning system. It provides valuable signals but still needs further training and refinement. Most importantly, it should be complemented by human expertise, the judgment and experience of Project Managers, to ensure a complete and reliable project overview.

Model Interpretability, Scoring, Business Impact, Dashboard & Model Validation

To truly understand why the model makes these predictions, we need to look under the hood. Which features drive the risk of delay the most? This is where model interpretability comes in.

Code by author
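A fitted scikit-learn Random Forest exposes `feature_importances_`, the mean impurity decrease attributed to each feature, normalized to sum to 1. This sketch trains on hypothetical data and ranks the features. The data-generating rule, in which delay is driven mainly by complexity and the priority × story points term, is an assumption.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(0)
n = 2000
X = pd.DataFrame({
    "complexity_per_person": rng.uniform(0.1, 3.0, n),
    "priority_sp_interaction": rng.integers(1, 53, n),
    "has_dependency": rng.integers(0, 2, n),
    "team_size": rng.integers(3, 9, n),
})
# Assumed ground truth: delay driven mostly by complexity and the interaction term
logit = -3 + 1.2 * X["complexity_per_person"] + 0.04 * X["priority_sp_interaction"]
y = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances, highest first
importances = pd.Series(model.feature_importances_, index=X.columns).sort_values(ascending=False)
print(importances.round(3))
```

Impurity-based importances tend to favor continuous, high-cardinality features. `sklearn.inspection.permutation_importance` on held-out data is a useful cross-check before drawing conclusions about what "drives" delays.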

We can observe that complexity and the priority-story points interaction are the strongest drivers of the model’s predictions.

Scoring Tickets: Identifying What’s Really at Risk

Why does this matter for Project Managers? Because we can go one step further.

We can calculate a risk score for every ticket.

This score highlights which tasks are most at risk, allowing PMs to focus their attention where it matters most and take preventive action before delays escalate.

Code by author
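A natural choice of risk score, assumed here, is the classifier's predicted probability of the delayed class, column 1 of `predict_proba`. This sketch fits a forest on hypothetical tickets, scores them, and lists the ten riskiest. In practice you would score open tickets the model hasn't been trained on. The `PROJ-` keys and all distributions are illustrative.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import RandomForestClassifier

rng = np.random.default_rng(1)
n = 1000
tickets = pd.DataFrame({
    "ticket_id": [f"PROJ-{i + 1}" for i in range(n)],  # hypothetical Jira-style keys
    "priority_level": rng.choice([1, 2, 3, 4], size=n, p=[0.35, 0.40, 0.18, 0.07]),
    "story_points": rng.choice([1, 2, 3, 5, 8, 13], size=n),
    "dependencies": rng.poisson(1.0, size=n),
})
logit = (-3.0 + 0.5 * tickets["priority_level"] + 0.08 * tickets["story_points"]
         + 0.3 * tickets["dependencies"])
tickets["delayed"] = (rng.random(n) < 1 / (1 + np.exp(-logit))).astype(int)

features = ["priority_level", "story_points", "dependencies"]
model = RandomForestClassifier(n_estimators=100, min_samples_leaf=10, random_state=1)
model.fit(tickets[features], tickets["delayed"])

# Risk score = predicted probability of class 1 (delayed)
tickets["risk_score"] = model.predict_proba(tickets[features])[:, 1]
top_risks = tickets.nlargest(10, "risk_score")[
    ["ticket_id", "priority_level", "story_points", "risk_score"]
]
print(top_risks)
```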

Business Impact Analysis

The tickets with the highest risk scores confirm the trend: the top-scoring tickets are all high- or critical-priority tasks.

This insight matters not only for managing the project timeline but also for its financial impact on the business. Delays don’t just slow down delivery; they increase costs, reduce client satisfaction, and consume valuable team resources.

To quantify this, we can estimate the business value of the predictions by simulating how much cost could be avoided when we anticipate risks and take preventive action.

Code by author

Our baseline shows that 27.6% of tickets are delayed. But what if Project Managers could focus only on the riskiest 20%? Let’s now simulate this targeted intervention and see how much impact it makes.

Code by author
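The targeting simulation can be sketched by selecting tickets at or above the 80th percentile of risk score and comparing a few ratios. The function name and the ten-ticket sample are hypothetical. The sketch separates two easily confused ratios: the flagged delayed tickets as a share of *all delays* versus as a share of *all tickets*.

```python
import pandas as pd

def top_share_summary(df: pd.DataFrame, share: float = 0.2) -> dict:
    """Hypothetical helper: summarize the riskiest `share` of tickets by risk_score."""
    cutoff = df["risk_score"].quantile(1 - share)
    flagged = df[df["risk_score"] >= cutoff]
    delayed_flagged = int(flagged["delayed"].sum())
    return {
        "baseline_delay_rate": df["delayed"].mean(),
        "n_flagged": len(flagged),
        "delay_rate_in_flagged": delayed_flagged / len(flagged),
        "share_of_all_delays": delayed_flagged / df["delayed"].sum(),
        "share_of_all_tickets": delayed_flagged / len(df),
    }

tickets = pd.DataFrame({
    "risk_score": [0.91, 0.85, 0.40, 0.35, 0.30, 0.22, 0.18, 0.15, 0.10, 0.05],
    "delayed":    [1, 0, 1, 0, 0, 1, 0, 0, 0, 0],
})
summary = top_share_summary(tickets)
print(summary)
```

Ties at the cut-off can push the flagged group slightly above exactly 20%. This may explain why a 20% target yields a count like 1,021 rather than a round number.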

We identified 1,021 high-risk tickets, representing about 20% of all tasks. Among them, 516 (50.5%) are actually delayed, nearly double the 27.6% baseline. In other words, these delayed high-risk tickets alone account for roughly 10% of all tickets in the project.

To make this more concrete, we translate the impact into business terms using a medium-size project valued at $100,000. By applying preventive actions to these high-risk tickets, we can estimate the potential cost savings.

Code by author
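The exact formula behind the savings estimate isn't shown. Here is one simple cost model, offered purely as an assumption. Delay cost is taken to scale with the share of tickets that are delayed, and preventive action avoids a fraction `prevention_rate` of the flagged delays. The function and its parameters are hypothetical, and it is not claimed to reproduce the article's figure.

```python
def estimated_savings(project_value: float, n_tickets: int,
                      delayed_flagged: int, prevention_rate: float) -> float:
    """Hypothetical cost model: savings = value * prevention_rate * (flagged delays / tickets)."""
    if not 0.0 <= prevention_rate <= 1.0:
        raise ValueError("prevention_rate must be between 0 and 1")
    if n_tickets <= 0:
        raise ValueError("n_tickets must be positive")
    return project_value * prevention_rate * delayed_flagged / n_tickets

# Illustrative inputs only; the prevention rate is an assumption
print(estimated_savings(100_000, 5_000, 516, prevention_rate=0.9))
```

The prevention rate dominates the result, so it is worth estimating from past interventions rather than guessing.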

By taking early action, we could save $9,270, nearly 10% of the total project cost. That’s not just risk mitigation; it’s a direct business advantage.

PM Dashboard

To make these insights actionable, we can also build a Project Management Dashboard. It provides a real-time view of sprint health, with all the key KPIs needed to track progress, anticipate risks, and maintain a complete project overview.

Code by author
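The dashboard's layout isn't described. The KPI layer underneath it can be sketched as a single function that reduces a sprint's tickets to a few numbers a dashboard could display. The column names (`status`, `risk_score`), the `"Done"` status value, and the 0.6 high-risk cut-off are all hypothetical.

```python
import pandas as pd

def sprint_kpis(sprint: pd.DataFrame, high_risk_cutoff: float = 0.6) -> dict:
    """Hypothetical KPI summary for one sprint's tickets."""
    done = sprint["status"] == "Done"
    return {
        "tickets": len(sprint),
        "completion_rate": done.mean(),
        "story_points_done": int(sprint.loc[done, "story_points"].sum()),
        "story_points_remaining": int(sprint.loc[~done, "story_points"].sum()),
        "delay_rate": sprint["delayed"].mean(),
        # Open tickets the model considers high risk: the PM's watch list
        "high_risk_open": int(((sprint["risk_score"] >= high_risk_cutoff) & ~done).sum()),
    }

sprint = pd.DataFrame({
    "status": ["Done", "Done", "In Progress", "To Do", "In Progress"],
    "story_points": [3, 5, 8, 2, 5],
    "delayed": [0, 0, 1, 0, 1],
    "risk_score": [0.10, 0.20, 0.75, 0.30, 0.65],
})
kpis = sprint_kpis(sprint)
print(kpis)
```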

Model Validation

We tested the robustness of the model with 5-fold cross-validation. Recall was chosen as the main metric because, in project management, it’s more important to catch potential delays than to maximize overall accuracy.

Code by author
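In scikit-learn, 5-fold cross-validation scored on recall can be written with `cross_val_score` and a `StratifiedKFold` splitter. Stratification keeps the share of delayed tickets similar in every fold. As a stand-in for the ticket data, this sketch uses `make_classification` with roughly 28% positives, an assumption chosen to resemble the 27.6% baseline.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold, cross_val_score

# Synthetic stand-in: about 28% positive ("delayed") class, with some label noise
X, y = make_classification(
    n_samples=2000, n_features=7, n_informative=4,
    weights=[0.72, 0.28], flip_y=0.1, random_state=0,
)

model = RandomForestClassifier(n_estimators=100, class_weight="balanced", random_state=0)
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=0)

# One recall score per fold (recall of the positive class)
scores = cross_val_score(model, X, y, cv=cv, scoring="recall")
print(scores.round(2), round(scores.mean(), 2))
```

A narrow spread of fold scores, like the 0.39 to 0.42 range reported below, indicates stable behavior across data splits. It says nothing about whether 40% recall is good enough.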

The recall scores across folds ranged from 0.39 to 0.42. The model is far from flawless, but it consistently flags around 40% of delays: a valuable early warning that helps project managers act before issues escalate.

Conclusion

In conclusion, this article showed how data science can help projects run more smoothly by providing a clearer understanding of the causes of delays.

Data doesn’t replace a Project Manager’s intuition, but it strengthens it, much like giving a pilot better instruments to navigate with precision and a clearer view of what’s happening.

By predicting risks and identifying at-risk tickets, we can reduce delays, prevent conflicts, and ultimately deliver more value.

Project Managers should embrace data science. Today, there are two types of PMs: traditional ones and data-driven ones. They don’t play in the same league.

Finally, these skills are not limited to project management. They extend to product management and business analysis. Learning SQL or Python enhances your ability to collaborate with developers, understand product performance, and communicate effectively across all levels of the business.

Lesson for Project Managers

How many of our project decisions are based on so-called “best practices” that are, in fact, unverified assumptions? Whether it’s meeting schedules, team structure, or communication methods, data can help us challenge our biases and uncover what really works.

Depending on the organization, the analysis can go even deeper: grouping tickets by project phase, topic, or stakeholder could reveal hidden bottlenecks and systemic issues.
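Grouping tickets this way is a single pandas `groupby` with named aggregations. In this sketch, the `phase` and `cycle_days` columns and their values are hypothetical. The same pattern works for a topic or stakeholder column if your tracker exports one.

```python
import pandas as pd

# Hypothetical tickets tagged with the phase in which they spent most time
tickets = pd.DataFrame({
    "phase": ["Dev", "Dev", "QA", "QA", "QA", "Release"],
    "cycle_days": [3, 5, 8, 6, 10, 2],
    "delayed": [0, 1, 1, 1, 0, 0],
})

by_phase = (
    tickets.groupby("phase")
    .agg(
        tickets=("delayed", "count"),
        delay_rate=("delayed", "mean"),
        median_cycle_days=("cycle_days", "median"),
    )
    .sort_values("delay_rate", ascending=False)  # worst phase first
)
print(by_phase)
```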

For example, velocity often drops during the QA phase. Is it because QA engineers underperform? Not at all. They do their job very well. The real issue is the constant back-and-forth with developers: clarifying tickets, figuring out how testing should be done, or asking for missing information.

To solve this, we introduced a simple process: developers now add clearer testing details to the ticket and spend five minutes on a quick handover call with QA. That small investment of time boosted team productivity and velocity by more than 15%.

Who am I?

I’m Yassin, an IT Project Manager who decided to learn Data Science, Python, and SQL to bridge the gap between business needs and technical solutions. This journey has taught me that the most valuable project insights come from combining domain expertise with data-driven approaches. Let’s connect on LinkedIn.