That’s a compelling, even comforting, thought for many people. “We’re in an era where other paths to material improvement of human lives and our societies seem to have been exhausted,” Vallor says.

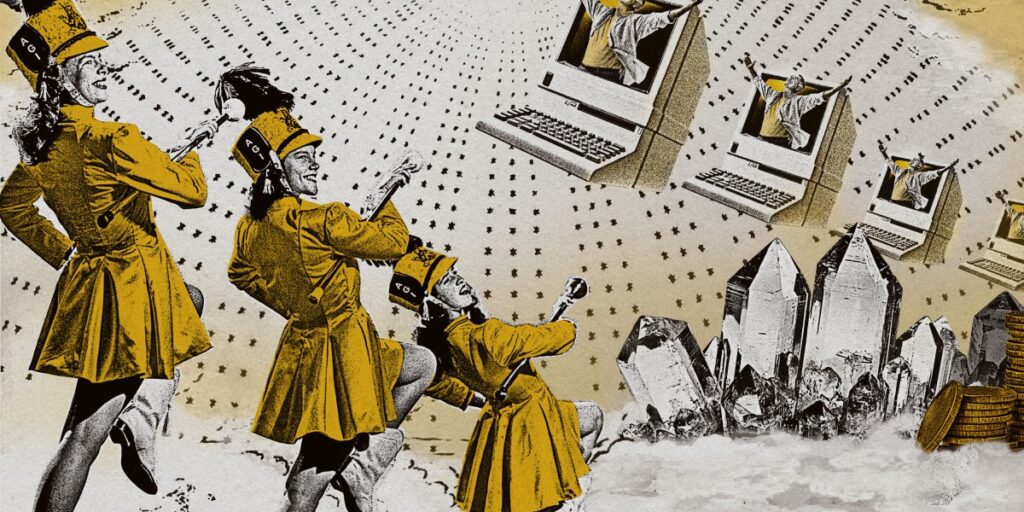

Technology once promised a path to a better future: Progress was a ladder that we’d climb toward human and social flourishing. “We’ve passed the peak of that,” says Vallor. “I think the one thing that gives many people hope and a return to that kind of optimism about the future is AGI.”

Push this idea to its conclusion and, again, AGI becomes a kind of god: one that can offer relief from earthly suffering, says Vallor.

Kelly Joyce, a sociologist at the University of North Carolina who studies how cultural, political, and economic beliefs shape the way we think about and use technology, sees all these wild predictions about AGI as something more banal: part of a long-term pattern of overpromising from the tech industry. “What’s interesting to me is that we get sucked in every time,” she says. “There’s a deep belief that technology is better than human beings.”

Joyce thinks that’s why, when the hype kicks in, people are predisposed to believe it. “It’s a religion,” she says. “We believe in technology. Technology is God. It’s really hard to push back against it. People don’t want to hear it.”

How AGI hijacked an industry

The fantasy of computers that can do almost anything a person can is seductive. But like many pervasive conspiracy theories, it has very real consequences. It has distorted the way we think about the stakes behind the current technology boom (and potential bust). It may even have derailed the industry, sucking resources away from more immediate, more practical applications of the technology. More than anything else, it gives us a free pass to be lazy. It fools us into thinking we might be able to avoid the actual hard work needed to solve intractable, world-spanning problems, problems that will require international cooperation, compromise, and expensive aid. Why bother with that when we’ll soon have machines to figure it all out for us?

Consider the resources being sunk into this grand project. Just last month, OpenAI and Nvidia announced an up-to-$100 billion partnership that would see the chip giant supply at least 10 gigawatts of power to feed ChatGPT’s insatiable demand. That’s more than nuclear power plant numbers. A bolt of lightning might release that much energy. The flux capacitor inside Dr. Emmett Brown’s DeLorean time machine only required 1.2 gigawatts to send Marty back to the future. And then, only two weeks later, OpenAI announced a second partnership with chipmaker AMD for another six gigawatts of power.

Promoting the Nvidia deal on CNBC, Altman, straight-faced, claimed that without this kind of data center buildout, people would have to choose between a cure for cancer and free education. “No one wants to make that choice,” he said. (Just a few weeks later, he announced that erotic chats would be coming to ChatGPT.)

Add to those costs the loss of investment in more immediate technology that could change lives today and tomorrow and the next day. “To me it’s a huge missed opportunity,” says Lirio’s Symons, “to put all these resources into solving something nebulous when we already know there’s real problems that we could solve.”

But that’s not how the likes of OpenAI need to operate. “With people throwing so much money at these companies, they don’t have to do that,” Symons says. “If you’ve got hundreds of billions of dollars, you don’t have to focus on a practical, solvable project.”

Despite his steadfast belief that AGI is coming, Krueger also thinks the industry’s single-minded pursuit of it means that potential solutions to real problems, such as better health care, are being ignored. “This AGI stuff, it’s nonsense, it’s a distraction, it’s hype,” he tells me.