Silicon’s mid-life crisis

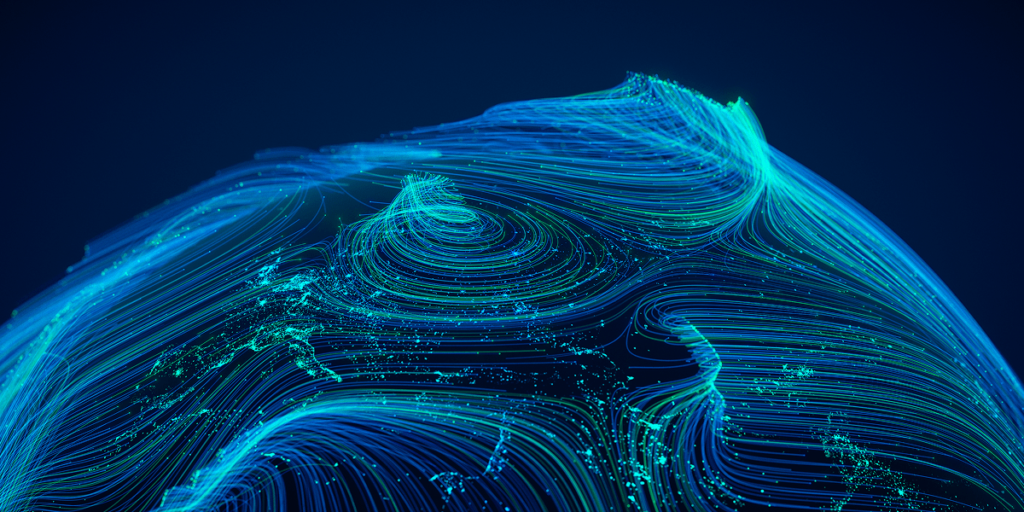

AI has evolved from classical ML to deep learning to generative AI. The most recent chapter, which took AI mainstream, hinges on two phases, training and inference, that are data- and energy-intensive in terms of computation, data movement, and cooling. At the same time, Moore’s Law, the observation that the number of transistors on a chip doubles every two years, is reaching a physical and economic plateau.

For the last 40 years, silicon chips and digital technology have nudged each other forward: every step ahead in processing capability frees the imagination of innovators to envision new products, which require yet more power to run. That is happening at light speed in the AI age.

As models become more readily available, deployment at scale puts the spotlight on inference and the application of trained models for everyday use cases. This transition requires the appropriate hardware to handle inference tasks efficiently. Central processing units (CPUs) have managed general computing tasks for decades, but the broad adoption of ML introduced computational demands that stretched the capabilities of traditional CPUs. This has led to the adoption of graphics processing units (GPUs) and other accelerator chips for training complex neural networks, because their parallel execution capabilities and high memory bandwidth allow large-scale mathematical operations to be processed efficiently.

But CPUs are already the most widely deployed processors and can be companions to processors like GPUs and tensor processing units (TPUs). AI developers are also hesitant to adapt software to fit specialized or bespoke hardware, and they favor the consistency and ubiquity of CPUs. Chip designers are unlocking performance gains through optimized software tooling, adding novel processing features and data types specifically to serve ML workloads, integrating specialized units and accelerators, and advancing silicon chip innovations, including custom silicon. AI itself is a helpful aid for chip design, creating a positive feedback loop in which AI helps optimize the chips that it needs to run. These enhancements and strong software support mean modern CPUs are a good choice to handle a range of inference tasks.

Beyond silicon-based processors, disruptive technologies are emerging to address growing AI compute and data demands. The unicorn start-up Lightmatter, for instance, introduced photonic computing solutions that use light for data transmission to generate significant improvements in speed and energy efficiency. Quantum computing represents another promising area in AI hardware. While still years or even decades away, the integration of quantum computing with AI could further transform fields like drug discovery and genomics.

Understanding models and paradigms

The developments in ML theories and network architectures have significantly enhanced the efficiency and capabilities of AI models. Today, the industry is moving from monolithic models to agent-based systems characterized by smaller, specialized models that work together to complete tasks more efficiently at the edge, on devices like smartphones or modern vehicles. This allows them to extract increased performance gains, like faster model response times, from the same or even less compute.

Researchers have developed techniques, including few-shot learning, to train AI models using smaller datasets and fewer training iterations. AI systems can learn new tasks from a limited number of examples to reduce dependency on large datasets and lower energy demands. Optimization techniques like quantization, which lowers memory requirements by selectively reducing precision, are helping reduce model sizes without sacrificing performance.
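To make the quantization idea concrete, here is a minimal sketch, assuming the simplest variant: symmetric, per-tensor rounding of 32-bit floats to 8-bit integers with a single scale factor. The function names and the sample weights are hypothetical, chosen only for illustration; production toolchains use more elaborate schemes (per-channel scales, calibration data, mixed precision).

```python
from array import array


def quantize_int8(weights):
    """Map floats to int8 values plus one shared scale (symmetric, per-tensor)."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor; fall back to 1.0 for an all-zero tensor.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range.
    q = array("b", (max(-127, min(127, round(w / scale))) for w in weights))
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from the int8 representation."""
    return [v * scale for v in q]


# Hypothetical weights, stored as 32-bit floats.
weights = array("f", [0.12, -0.5, 0.33, 0.9, -0.07, 0.0])
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

fp32_bytes = weights.itemsize * len(weights)  # 4 bytes per value
int8_bytes = q.itemsize * len(q)              # 1 byte per value
max_error = max(abs(a - b) for a, b in zip(weights, restored))

print(f"float32: {fp32_bytes} bytes, int8: {int8_bytes} bytes")
print(f"largest rounding error: {max_error:.5f} (step size {scale:.5f})")
```

The stored values shrink to a quarter of their original size, and each restored weight differs from the original by at most half a quantization step. Whether that rounding error is tolerable depends on the model, which is why precision is reduced selectively rather than uniformly.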

New system architectures, like retrieval-augmented generation (RAG), have streamlined data access during both training and inference to reduce computational costs and overhead. DeepSeek R1, an open source LLM, is a compelling example of how more output can be extracted using the same hardware. By applying reinforcement learning techniques in novel ways, R1 has achieved advanced reasoning capabilities while using far fewer computational resources in some contexts.