Contributors: Nicole Ren (GovTech), Ng Wei Cheng (GovTech)

Imagine a chatbot that retrieves answers from your knowledge base. It’s reliable, grounded in factual content, and avoids the hallucination pitfalls that plague generative chatbots. Your system excels at handling direct, standalone queries, but falters the moment users attempt natural, conversational follow-ups like “How can I do this?” or “What about in Germany?”.

The culprit? The typical retrieval mechanism treats each query in isolation, searching for semantic matches without any memory of the conversation’s context. While this approach works brilliantly for single-turn interactions, it breaks down when users expect the fluid, contextual dialogue that defines modern conversational AI.

What if you could give your bot memory? Welcome to the second part of our series exploring the GenAI innovations that power Virtual Intelligent Chat Assistant (VICA). VICA is a conversational AI platform designed to help government agencies respond to citizens’ queries quickly while maintaining strict control over content through guardrails and custom-written responses that minimise hallucination. In our first article, we explored how LLM agents can enable conversational transactions in chatbots. Now, we turn our attention to another critical innovation: enabling natural, multi-turn conversations in stateless Q&A systems.

Contents

- The Anatomy of a Retrieval-Only Q&A Bot

- Conversational Breakdown: When Context is Lost

- The Solution: Query Rewriting with LLMs

- Conclusion

The Anatomy of a Retrieval-Only Q&A Bot

At its core, a retrieval-only Q&A chatbot operates on a beautifully simple premise: match user questions to pre-defined answers. This approach sidesteps the unpredictability of generative AI, delivering fast, accurate responses grounded in verified content. Here’s a breakdown of its components:

The Knowledge Base. Everything starts with a meticulously curated collection of question-and-answer (Q&A) pairs that forms the system’s knowledge base. Each question and its corresponding answer is written and vetted by a human expert, who is responsible for its accuracy, tone, and compliance. This “human-in-the-loop” approach means the bot only ever recites from a trusted, pre-approved script, eliminating the risk of it going off-message or providing incorrect information.
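As a rough illustration, a knowledge base of vetted Q&A pairs could be modelled as immutable records that carry the name of the accountable reviewer. The `QAPair` type, its fields, and the sample entry are hypothetical, a sketch rather than VICA’s actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class QAPair:
    """One human-authored, human-vetted knowledge base entry (hypothetical schema)."""
    qa_id: str
    question: str
    answer: str
    reviewed_by: str  # the human expert accountable for accuracy, tone and compliance


def build_knowledge_base(pairs: list[QAPair]) -> dict[str, QAPair]:
    """Index pairs by id, refusing entries that lack a reviewer or reuse an id."""
    kb: dict[str, QAPair] = {}
    for pair in pairs:
        if not pair.reviewed_by.strip():
            raise ValueError(f"{pair.qa_id} has no reviewer and cannot be published")
        if pair.qa_id in kb:
            raise ValueError(f"duplicate id: {pair.qa_id}")
        kb[pair.qa_id] = pair
    return kb


kb = build_knowledge_base([
    QAPair(
        qa_id="subsidy-eligibility",
        question="How do I qualify for subsidies?",
        answer="<pre-approved answer text written by the agency>",
        reviewed_by="policy-team",
    ),
])
```

Freezing the dataclass is one way to reflect the “pre-approved script” idea: an entry cannot be edited in place after sign-off, only replaced by a newly reviewed one.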

The Semantic Understanding Layer. The magic happens when these questions and answers are transformed into vector embeddings: dense numerical representations that capture semantic meaning in high-dimensional space. This transformation is crucial because it lets the system recognise that questions like “How do I qualify for subsidies?” and “What are the criteria to apply for subsidies?” are essentially asking the same thing, despite using different words.
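One way to make “essentially asking the same thing” precise (the notation is ours, not the article’s): an embedding model $f$ maps each text to a vector in $\mathbb{R}^d$, and two questions count as similar when the cosine of the angle between their vectors is close to 1.

$$
f : \text{text} \to \mathbb{R}^d, \qquad
\operatorname{sim}(q_1, q_2) = \frac{f(q_1) \cdot f(q_2)}{\lVert f(q_1) \rVert \, \lVert f(q_2) \rVert} \in [-1, 1]
$$

If the embeddings are normalised to unit length, the denominator equals 1 and cosine similarity reduces to the plain dot product, which is why the two metrics are often offered side by side.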

The Retrieval Engine. These embeddings are then indexed in a vector database (e.g. Pinecone) optimised for similarity search. When a user asks a question, the system converts the query into a vector and performs a nearest-neighbour search, using a metric such as cosine similarity or dot product to rank candidate matches and select the most semantically similar one.
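The retrieval step can be sketched without a managed vector database. Below, a plain Python list stands in for the index (nothing here uses Pinecone’s API), and the three-dimensional vectors are made-up toy values; real embeddings come from a model and usually have hundreds of dimensions.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(u, v) / (|u| * |v|)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_neighbours(
    query_vec: list[float],
    index: list[tuple[str, list[float]]],
    k: int = 3,
) -> list[tuple[str, float]]:
    """Brute-force k-nearest-neighbour search: score every entry, return the top k."""
    scored = [(qa_id, cosine_similarity(query_vec, vec)) for qa_id, vec in index]
    scored.sort(key=lambda item: item[1], reverse=True)
    return scored[:k]


# Toy index of (Q&A id, embedding). Values are illustrative, not from a real model.
index = [
    ("weather-today", [0.9, 0.1, 0.0]),
    ("subsidy-eligibility", [0.0, 0.8, 0.6]),
    ("passport-renewal", [0.1, 0.0, 0.95]),
]

print(nearest_neighbours([0.85, 0.15, 0.05], index, k=1))  # top hit is "weather-today"
```

This linear scan compares the query against every entry; production vector databases typically use approximate nearest-neighbour indexes instead, trading a little recall for much faster lookups on large collections.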

The Response Delivery. Found the match? The system simply retrieves the corresponding pre-written answer. There’s no generation, no creativity, no risk of fabrication, just the delivery of verified, human-authored content.
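Delivery is then a lookup, not generation. The sketch below returns the stored answer for the best match. The `ANSWERS` store is hypothetical, and the `min_score` cut-off that declines weak matches is our assumption, since the article does not say how low-confidence matches are handled.

```python
from typing import Optional

# Hypothetical store of pre-written answers keyed by Q&A id.
ANSWERS = {
    "weather-today": "<pre-written answer about today's weather>",
    "subsidy-eligibility": "<pre-written answer about subsidy criteria>",
}


def deliver(best_match: tuple[str, float], min_score: float = 0.75) -> Optional[str]:
    """Return the human-authored answer verbatim, or None if the match is too weak.

    Nothing is generated: the returned text comes straight from ANSWERS.
    """
    qa_id, score = best_match
    if score < min_score:
        return None  # the caller can show a "sorry, I didn't understand" message instead
    return ANSWERS.get(qa_id)


print(deliver(("weather-today", 0.93)))  # the stored weather answer
print(deliver(("weather-today", 0.40)))  # None: score below the cut-off
```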

The benefits are compelling: guaranteed accuracy, lightning-fast responses, and full content control. But there’s a catch that becomes painfully obvious the moment users try to have an actual conversation.

Conversational Breakdown: When Context is Lost

Here’s where our retrieval system hits a wall. The moment users expect natural conversation, the stateless architecture reveals its Achilles’ heel: it treats every query as if it were the very first thing ever said.

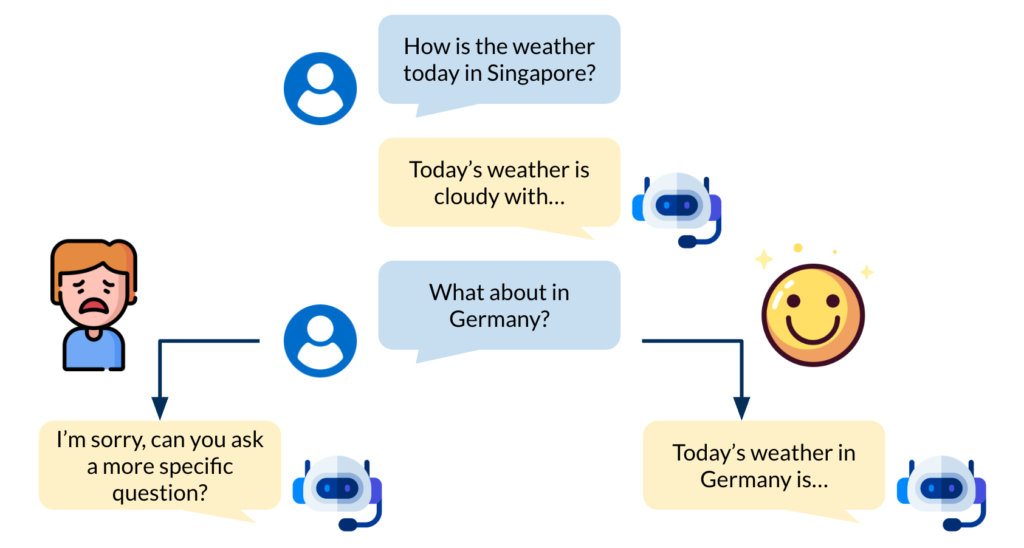

The example above shows a realistic exchange that demonstrates this limitation perfectly. The bot handles the initial question flawlessly, retrieving accurate information about today’s weather. But when the user asks a natural follow-up, “What about in Germany?”, the system breaks down completely.

Without the context of “today’s weather” from the previous exchange, the follow-up “What about in Germany?” lands nowhere near any meaningful match in the knowledge base. This is the inevitable consequence of a stateless design.

The result? Users are forced to abandon natural conversational patterns and repeat the full context in every question: “How is the weather today in Germany?” instead of the intuitive “What about in Germany?”. This friction turns what should feel like a helpful conversation into a frustrating game of twenty questions in which you have to start from scratch every time.

The Solution: Query Rewriting with LLMs

The solution is to use a separate, lightweight LLM as a query rewriter. This LLM intercepts user queries and, when necessary, rewrites ambiguous, conversational follow-ups into specific, standalone questions that the retrieval system can understand.

This introduces a preprocessing layer between user input and vector search. Here’s how it works:

Conversation Memory. The system now maintains a rolling window of recent conversation turns, typically the last 3–5 exchanges, to balance context richness against processing efficiency.
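A rolling window maps naturally onto a bounded queue. This sketch keeps recent exchanges in Python’s `collections.deque(maxlen=...)`, which drops the oldest item automatically once the deque is full. The `Turn` and `ConversationMemory` names and the window size of 3 are illustrative choices.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Turn:
    user: str
    bot: str


class ConversationMemory:
    """Keeps only the most recent `max_turns` user/bot exchanges."""

    def __init__(self, max_turns: int = 3) -> None:
        self._turns: deque[Turn] = deque(maxlen=max_turns)

    def add(self, user: str, bot: str) -> None:
        self._turns.append(Turn(user, bot))  # evicts the oldest turn when full

    def as_text(self) -> str:
        """Render the window as plain text, oldest first, for use in an LLM prompt."""
        return "\n".join(f"User: {t.user}\nBot: {t.bot}" for t in self._turns)

    def __len__(self) -> int:
        return len(self._turns)


memory = ConversationMemory(max_turns=3)
for i in range(5):
    memory.add(f"question {i}", f"answer {i}")
print(len(memory))  # 3: turns 0 and 1 have been evicted
```

Bounding the window by turn count is the simplest policy; a token budget would track prompt cost more closely but needs a tokenizer.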

Analyze and Rewrite Where Appropriate. When a new query arrives, an LLM examines both the current question and the conversation history to decide whether rewriting is necessary. Standalone questions like “How is the weather today in Singapore?” pass through unchanged, while follow-up questions like “What about in Germany?” trigger the rewriting process. The rewritten, context-rich query, “How is the weather today in Germany?”, is then passed to the vector database as usual.
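A minimal sketch of the analyse-and-rewrite step follows. The prompt wording is our own, not VICA’s, and `llm` is any callable that takes a prompt string and returns the model’s text, so it can wrap whichever LLM client you use. A canned stub stands in for a real model so the example runs offline.

```python
from typing import Callable

REWRITE_PROMPT = """You rewrite user questions for a search engine.
Conversation so far:
{history}

Latest user question: {question}

If the latest question is already self-contained, return it unchanged.
Otherwise, rewrite it as one standalone question that includes any context it needs
from the conversation. Return only the question."""


def rewrite_query(question: str, history: str, llm: Callable[[str], str]) -> str:
    """Ask the LLM for a standalone version of `question`."""
    if not history.strip():
        return question  # our optimisation: on the first turn there is nothing to resolve
    rewritten = llm(REWRITE_PROMPT.format(history=history, question=question)).strip()
    return rewritten or question  # guard against an empty completion


def stub_llm(prompt: str) -> str:
    """Offline stand-in for a real model, hard-coded for the weather example."""
    if "What about in Germany?" in prompt:
        return "How is the weather today in Germany?"
    # Otherwise echo the latest question back, as the prompt instructs for standalone input.
    return prompt.split("Latest user question: ")[1].split("\n")[0]


history = "User: How is the weather today in Singapore?\nBot: <answer>"
print(rewrite_query("What about in Germany?", history, stub_llm))
# How is the weather today in Germany?
```

Letting the LLM both decide and rewrite in one call keeps latency to a single round trip; a separate classification call is an alternative if you want to skip rewriting more aggressively.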

Fallback (Optional). If the rewritten query fails to find good matches, the system can fall back to searching with the original user query, ensuring robustness.
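The optional fallback can be read as “search with the rewrite; if the best score is too low, retry with the user’s original words”. In this sketch, `search` is a hypothetical function returning `(qa_id, score)` for the best hit, the 0.75 threshold is an arbitrary placeholder to tune on your own data, and keeping the higher-scoring of the two attempts is our design choice.

```python
from typing import Callable, Tuple

Match = Tuple[str, float]  # (Q&A id, similarity score)


def retrieve_with_fallback(
    original: str,
    rewritten: str,
    search: Callable[[str], Match],
    min_score: float = 0.75,
) -> Match:
    """Prefer the rewritten query; retry with the original if the rewrite matches poorly."""
    best = search(rewritten)
    if best[1] >= min_score or rewritten == original:
        return best
    fallback = search(original)
    # Keep whichever attempt scored higher, so the fallback never makes things worse.
    return fallback if fallback[1] > best[1] else best


def toy_search(query: str) -> Match:
    """Stand-in for the vector search: canned, illustrative scores keyed by query text."""
    canned = {
        "How is the weather today in Germany?": ("weather-today", 0.91),
        "What about in Germany?": ("travel-advisory", 0.42),
    }
    return canned.get(query, ("no-match", 0.0))


print(retrieve_with_fallback("What about in Germany?", "How is the weather today in Germany?", toy_search))
# ('weather-today', 0.91)
```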

Conclusion

The transformation from amnesia to awareness doesn’t require rebuilding your entire chatbot architecture. By inserting an LLM as an intelligent query rewriter, we’ve given retrieval-only chatbots the one thing they were missing: conversational memory. This hybrid approach lets your chatbot handle natural, multi-turn conversations while preserving the content control that made retrieval-only chatbots attractive in the first place. The result is a chatbot that feels genuinely conversational to users but remains grounded in verified, human-authored responses. Sometimes the most powerful solutions aren’t revolutionary overhauls, but thoughtful enhancements that supply a critical missing piece and thereby transform the whole.

Curious about VICA? Head over to read our first article on enabling conversational transactions in chatbots if you haven’t already! If you are a Singapore public service officer, you can visit our website at https://www.vica.gov.sg to create your own chatbot and find out more!