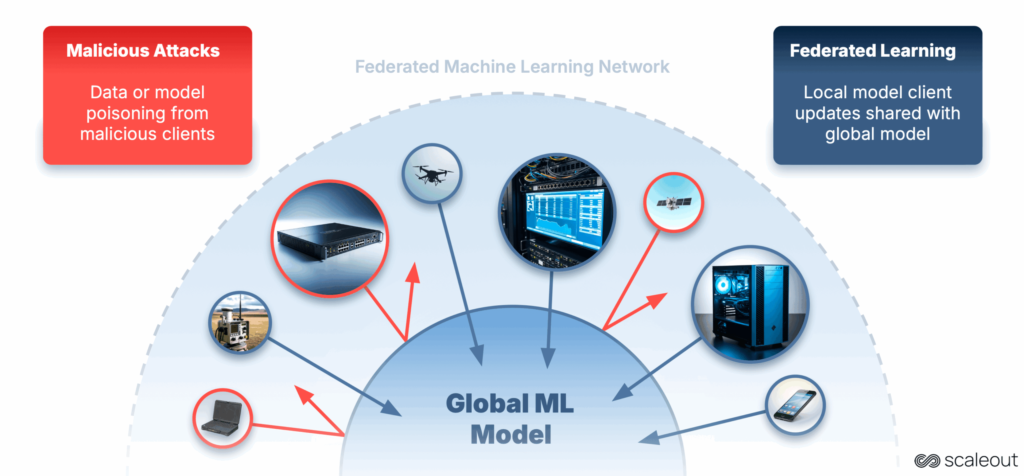

Federated Learning (FL) changes how we train AI models. Instead of sending all your sensitive data to a central location, FL keeps the data where it is and shares only model updates. This preserves privacy and enables AI to run closer to where the data is generated.

However, with computation and data spread across many devices, new security challenges arise. Attackers can join the training process and subtly influence it, leading to degraded accuracy, biased outputs or hidden backdoors in the model.

In this project, we set out to investigate how we can detect and mitigate such attacks in FL. To do this, we built a multi-node simulator that enables researchers and industry professionals to reproduce attacks and test defences more efficiently.

Why This Matters

- A non-technical example: Think of a shared recipe book that chefs from many restaurants contribute to. Each chef updates a few recipes with their own improvements. A dishonest chef could deliberately add the wrong ingredients to sabotage the dish, or quietly insert a special flavour that only they know how to fix. If no one checks the recipes carefully, all future diners across all restaurants could end up with ruined or manipulated meals.

- A technical example: The same concept appears in FL as data poisoning (manipulating training examples) and model poisoning (altering weight updates). These attacks are especially damaging when the federation has non-IID data distributions, imbalanced data partitions or late-joining clients. Contemporary defences such as Multi-KRUM, Trimmed Mean and Divide and Conquer can still fail in certain scenarios.

Building the Multi-Node FL Attack Simulator

To evaluate the resilience of federated learning against real-world threats, we built a multi-node attack simulator on top of the Scaleout Systems FEDn framework. This simulator makes it possible to reproduce attacks, test defences, and scale experiments to hundreds or even thousands of clients in a controlled environment.

Key capabilities:

- Flexible deployment: Runs distributed FL jobs using Kubernetes, Helm and Docker.

- Realistic data settings: Supports IID/non-IID label distributions, imbalanced data partitions and late-joining clients.

- Attack injection: Includes implementations of common poisoning attacks (Label Flipping, Little is Enough) and allows new attacks to be defined with ease.

- Defence benchmarking: Integrates existing aggregation strategies (FedAvg, Trimmed Mean, Multi-KRUM, Divide and Conquer) and allows a wide range of defensive strategies and aggregation rules to be tested.

- Scalable experimentation: Simulation parameters such as the number of clients, the malicious share and participation patterns can be tuned from a single configuration file.

Building on FEDn's architecture means that the simulations benefit from robust training orchestration and client management, along with visual monitoring through the Studio web interface.

It is also important to note that the FEDn framework supports Server Functions. This feature makes it possible to implement new aggregation strategies and evaluate them using the attack simulator.

To get started with a first example project using FEDn, see the quickstart guide.

The FEDn framework is free for all academic and research projects, as well as for industrial testing and trials.

The attack simulator is available and ready to use as open-source software.

The Attacks We Studied

- Label Flipping (Data Poisoning) – Malicious clients flip labels in their local datasets, such as changing “cat” to “dog”, to reduce accuracy.

- Little is Enough (Model Poisoning) – Attackers make small but targeted adjustments to their model updates to shift the global model output toward their own goals. In this thesis we applied the Little is Enough attack every third round.
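The two attacks above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the simulator's code: the function names, the `flip_map` and `rate` parameters, and the fixed shift factor `z` are hypothetical. The published Little is Enough attack derives `z` from the number of clients, and it assumes the attacker can estimate the benign updates' per-coordinate statistics; here `z` is simply passed in.

```python
import random
import statistics


def flip_labels(labels, flip_map, rate=1.0, seed=0):
    """Data poisoning: replace labels found in flip_map with probability `rate`."""
    rng = random.Random(seed)
    return [
        flip_map[y] if y in flip_map and rng.random() < rate else y
        for y in labels
    ]


def little_is_enough(benign_updates, z=1.0):
    """Model poisoning: per-coordinate benign mean shifted by z standard deviations.

    `z` is a fixed, hypothetical parameter in this sketch.
    """
    crafted = []
    for coords in zip(*benign_updates):
        mu = statistics.fmean(coords)
        sigma = statistics.pstdev(coords)
        crafted.append(mu - z * sigma)
    return crafted


def attacks_this_round(round_idx, period=3):
    """Assumed schedule: attack on rounds 3, 6, 9, ... (1-indexed)."""
    return round_idx % period == 0
```

Because the crafted update stays within about `z` standard deviations of the benign mean in every coordinate, it does not look like an extreme outlier, which is what makes this kind of attack hard for distance-based or trimming-based defences to spot.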

Beyond Attacks: Understanding Unintentional Impact

While this study focuses on deliberate attacks, it is equally valuable for understanding the effects of marginal contributions caused by misconfigurations or device malfunctions in large-scale federations.

In our recipe example, even an honest chef could accidentally use the wrong ingredient because their oven is broken or their scale is inaccurate. The error is unintentional, but it still changes the shared recipe in ways that could be harmful if repeated by many contributors.

In cross-device or fleet learning setups, where thousands or millions of heterogeneous devices contribute to a shared model, faulty sensors, outdated configurations or unstable connections can degrade model performance in much the same way as malicious attacks. Studying attack resilience also reveals how to make aggregation rules robust to such unintentional noise.

Mitigation Strategies Explained

In FL, aggregation rules decide how to combine model updates from clients. Robust aggregation rules aim to reduce the influence of outliers, whether caused by malicious attacks or faulty devices. Here are the strategies we tested:

- FedAvg (baseline) – Simply averages all updates without filtering. Very vulnerable to attacks.

- Trimmed Mean (TrMean) – Sorts each parameter across clients, then discards the highest and lowest values before averaging. Reduces extreme outliers but can miss subtle attacks.

- Multi-KRUM – Scores each update by how close it is to its nearest neighbours in parameter space, keeping only those with the smallest total distance. Very sensitive to the number of updates selected (k).

- EE Trimmed Mean (newly developed) – An adaptive version of TrMean that uses epsilon-greedy scheduling to decide when to test different client subsets. More resilient to changing client behaviour, late arrivals and non-IID distributions.
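To make the difference between the baseline and coordinate-wise trimming concrete, here is a small sketch in plain Python. It is illustrative only: each update is a flat list of floats, `fedavg` is unweighted (a simplification, since FedAvg normally weights clients by their number of samples), and `trim_k`, the number of values dropped at each end, is a hypothetical parameter name.

```python
def fedavg(updates):
    """Unweighted coordinate-wise average of all client updates."""
    return [sum(coords) / len(coords) for coords in zip(*updates)]


def trimmed_mean(updates, trim_k):
    """Per coordinate: sort values, drop the trim_k lowest and highest, average the rest."""
    n = len(updates)
    if 2 * trim_k >= n:
        raise ValueError("trim_k must be smaller than half the number of updates")
    aggregated = []
    for coords in zip(*updates):
        kept = sorted(coords)[trim_k:n - trim_k]
        aggregated.append(sum(kept) / len(kept))
    return aggregated


# Three similar updates and one extreme outlier.
updates = [[1.0, 1.0], [1.2, 0.8], [0.9, 1.1], [100.0, -100.0]]
avg = fedavg(updates)            # pulled far away by the outlier
robust = trimmed_mean(updates, 1)  # close to the three similar updates
```

With these toy values the plain average lands near 25.8 and -24.3, while the trimmed mean stays at roughly 1.1 and 0.9. Note that trimming works per coordinate, so a client is never removed as a whole; only its most extreme values are.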

The tables and plots presented in this post were originally designed by the Scaleout team.

Experiments

Across 180 experiments we evaluated different aggregation strategies under varying attack types, malicious client ratios and data distributions. For further details, please read the full thesis here.

The table above shows one of the series of experiments using a label-flipping attack with a non-IID label distribution and partially imbalanced data partitions. The table shows Test Accuracy and Test Loss AUC, computed over all participating clients. Each aggregation strategy's results are shown in two rows, corresponding to the two late-policies (benign clients participating from the 5th round or malicious clients participating from the 5th round). Columns separate the results at the three malicious proportions, yielding six experiment configurations per aggregation strategy. The best result in each configuration is shown in bold.

While the table shows a relatively homogeneous response across all defence strategies, the individual plots present a completely different view. In FL, even when a federation reaches a certain level of accuracy, it is equally important to examine client participation: specifically, which clients successfully contributed to the training and which were rejected as malicious. The following plots illustrate client participation under different defence strategies.

With 20% malicious clients under a label-flipping attack on non-IID, partially imbalanced data, Trimmed Mean (Fig-1) maintained overall accuracy but never fully blocked any client from contributing. While coordinate trimming reduced the impact of malicious updates, it filtered parameters individually rather than excluding entire clients, allowing both benign and malicious participants to remain in the aggregation throughout training.

In a scenario with 30% late-joining malicious clients and non-IID, imbalanced data, Multi-KRUM (Fig-2) mistakenly selected a malicious update from round 5 onward. High data heterogeneity made benign updates appear less similar, allowing the malicious update to rank as one of the most central and persist in one-third of the aggregated model for the rest of training.
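The failure mode described here can be reproduced with a toy Multi-KRUM sketch. This is not the simulator's implementation: it follows the commonly described scoring rule (sum of squared distances to the `n - f - 2` nearest neighbours, where `f` is the assumed number of malicious clients), and the two-dimensional updates below are invented purely to illustrate the geometry.

```python
def squared_distance(u, v):
    """Squared Euclidean distance between two flat updates."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def multi_krum(updates, num_malicious, num_selected):
    """Score each update by its closest neighbours, keep the lowest-scoring ones, average them."""
    n = len(updates)
    num_neighbours = n - num_malicious - 2
    if num_neighbours < 1:
        raise ValueError("need at least num_malicious + 3 updates")
    scores = []
    for i, u in enumerate(updates):
        dists = sorted(
            squared_distance(u, v) for j, v in enumerate(updates) if j != i
        )
        scores.append(sum(dists[:num_neighbours]))
    selected = sorted(range(n), key=lambda i: scores[i])[:num_selected]
    dim = len(updates[0])
    aggregate = [
        sum(updates[i][d] for i in selected) / len(selected) for d in range(dim)
    ]
    return aggregate, selected, scores


# Toy data (assumption): index 4 plays the malicious client in both cases.
clustered = [[1.0, 1.0], [1.1, 0.9], [0.9, 1.1], [1.0, 1.2], [10.0, 10.0]]
spread = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0], [10.0, 10.0], [5.0, 5.0]]

_, selected_clustered, _ = multi_krum(clustered, num_malicious=1, num_selected=3)
_, selected_spread, scores_spread = multi_krum(spread, num_malicious=1, num_selected=3)
```

When benign updates are tightly clustered, the distant update is left out. When benign updates are spread far apart, as heterogeneous data tends to make them, an update placed near the centre gets the lowest score and is selected first. With three updates selected, it then accounts for one-third of the average.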

Why we need adaptive aggregation strategies

Existing robust aggregation rules generally rely on static thresholds to decide which client updates to include when aggregating the new global model. This is a shortcoming of current aggregation strategies: it can make them vulnerable to late-participating clients, non-IID data distributions or data volume imbalances between clients. These insights led us to develop EE-Trimmed Mean (EE-TrMean).

EE-TrMean: An epsilon-greedy aggregation strategy

EE-TrMean builds on the classical Trimmed Mean, but adds an exploration vs. exploitation, epsilon-greedy layer for client selection.

- Exploration phase: All clients are allowed to contribute and a normal Trimmed Mean aggregation round is executed.

- Exploitation phase: Only the clients that have been trimmed the least are included, ranked by an average score over the earlier rounds in which they participated.

- The switch between the two phases is controlled by the epsilon-greedy policy with a decaying epsilon and an alpha ramp.

Each client earns a score based on whether its parameters survive trimming in each round. Over time the algorithm increasingly favours the highest-scoring clients, while occasionally exploring others to detect changes in behaviour. This adaptive approach allows EE-TrMean to increase resilience in cases where data heterogeneity and malicious activity are high.
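The phases and scoring described above can be sketched roughly as follows. This is an illustration under several assumptions, not the thesis implementation: the class and parameter names are hypothetical, the score is taken to be the fraction of a client's coordinates that survive trimming, clients with no history rank last during exploitation, epsilon decays multiplicatively, and the alpha ramp is omitted because the text does not define it.

```python
import random


def trimmed_mean(updates, trim_k):
    """Coordinate-wise mean after dropping the trim_k lowest and highest values."""
    n = len(updates)
    if 2 * trim_k >= n:
        raise ValueError("trim_k must be smaller than half the number of updates")
    return [
        sum(sorted(coords)[trim_k:n - trim_k]) / (n - 2 * trim_k)
        for coords in zip(*updates)
    ]


def survival_rates(updates, trim_k):
    """Per client: fraction of coordinates that were not trimmed this round."""
    n, dim = len(updates), len(updates[0])
    survived = [0] * n
    for d in range(dim):
        order = sorted(range(n), key=lambda i: updates[i][d])
        for i in order[trim_k:n - trim_k]:
            survived[i] += 1
    return [count / dim for count in survived]


class EpsilonGreedySelector:
    """Tracks average survival scores and picks explore or exploit each round."""

    def __init__(self, num_exploit, epsilon=1.0, decay=0.9, min_epsilon=0.05, seed=0):
        self.num_exploit = num_exploit  # clients kept in an exploitation round
        self.epsilon = epsilon
        self.decay = decay
        self.min_epsilon = min_epsilon
        self.rng = random.Random(seed)
        self.score_sum = {}
        self.rounds_seen = {}

    def next_phase(self):
        """Explore with probability epsilon, then decay epsilon."""
        explore = self.rng.random() < self.epsilon
        self.epsilon = max(self.min_epsilon, self.epsilon * self.decay)
        return "explore" if explore else "exploit"

    def average_score(self, client_id):
        rounds = self.rounds_seen.get(client_id, 0)
        return self.score_sum.get(client_id, 0.0) / rounds if rounds else 0.0

    def select(self, client_ids, phase):
        """All clients when exploring; the highest average scores when exploiting."""
        if phase == "explore":
            return list(client_ids)
        ranked = sorted(client_ids, key=self.average_score, reverse=True)
        return ranked[:self.num_exploit]

    def record(self, client_ids, rates):
        for cid, rate in zip(client_ids, rates):
            self.score_sum[cid] = self.score_sum.get(cid, 0.0) + rate
            self.rounds_seen[cid] = self.rounds_seen.get(cid, 0) + 1


def run_round(selector, updates_by_client, trim_k=1):
    """One aggregation round: pick a phase, select clients, aggregate, update scores."""
    phase = selector.next_phase()
    chosen = selector.select(list(updates_by_client), phase)
    updates = [updates_by_client[c] for c in chosen]
    selector.record(chosen, survival_rates(updates, trim_k))
    return phase, chosen, trimmed_mean(updates, trim_k)
```

A caller would invoke `run_round` once per training round with a dictionary mapping client IDs to flat updates. One consequence worth noting in this simplified version: a benign client whose values happen to be the most extreme in every coordinate scores as low as an attacker, which mirrors the occasional exclusion of benign clients under heterogeneous data.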

In a label-flipping scenario with 20% malicious clients and late benign joiners on non-IID, partially imbalanced data, EE-TrMean (Fig-3) alternated between exploration and exploitation phases, initially allowing all clients and then selectively blocking low-scoring ones. While it occasionally excluded a benign client due to data heterogeneity (still far less often than the known strategies), it successfully identified and minimized the contributions of malicious clients during training. This simple yet powerful modification improves the quality of the client contributions. The literature reports that as long as the majority of clients are honest, the model's accuracy remains reliable.