I first came across TabPFN through the ICLR 2023 paper "TabPFN: A Transformer That Solves Small Tabular Classification Problems in a Second". The paper introduced TabPFN, an open-source transformer model built specifically for tabular datasets, a domain that has not really benefited from deep learning and where gradient boosted decision tree models still dominate.

At that time, TabPFN supported only up to 1,000 training samples and 100 purely numerical features, so its use in real-world settings was fairly limited. Over time, however, there have been several incremental improvements, including TabPFN-2, which was introduced in 2025 through the paper "Accurate Predictions on Small Data with a Tabular Foundation Model".

More recently, TabPFN-2.5 was released. This version can handle close to 100,000 data points and around 2,000 features, which makes it fairly practical for real-world prediction tasks. I have spent many of my professional years working with tabular datasets, so this naturally caught my interest and pushed me to look deeper. In this article, I give a high-level overview of TabPFN and also walk through a quick implementation using a Kaggle competition to help you get started.

What is TabPFN

TabPFN stands for Tabular Prior-data Fitted Network, a foundation model based on the idea of fitting a model to a prior over tabular datasets, rather than to a single dataset, hence the name.

As I read through the technical reports, there were a lot of interesting bits and pieces to these models. For instance, TabPFN can deliver strong tabular predictions with very low latency, often comparable to tuned ensemble methods, but without repeated training loops.

From a workflow perspective, there is also no learning curve, as it fits naturally into existing setups through a scikit-learn style interface. It can handle missing values, outliers and mixed feature types with minimal preprocessing, which we will cover during the implementation later in this article.

The need for a foundation model for tabular data

Before getting into how TabPFN works, let's first try to understand the broader problem it tries to address.

With traditional machine learning on tabular datasets, you usually train a new model for every new dataset. This often involves long training cycles, and it also means that a previously trained model cannot really be reused.

However, if we look at foundation models for text and images, the idea is radically different. Instead of retraining from scratch, a large amount of pre-training is done upfront across many datasets, and the resulting model can then be applied to new datasets, in most cases without retraining.

In my opinion, this is the gap the model is trying to close for tabular data, i.e., reducing the need to train a new model from scratch for every dataset, and it looks like a promising area of research.

TabPFN training & inference pipeline at a high level

TabPFN utilises in-context learning to fit a neural network to a prior over tabular datasets. What this means is that instead of learning one task at a time, the model learns how tabular problems tend to look in general and then uses that knowledge to make predictions on new datasets through a single forward pass. Here is an excerpt from TabPFN's Nature paper:

"TabPFN leverages in-context learning (ICL), the same mechanism that led to the astounding performance of large language models, to generate a powerful tabular prediction algorithm that is fully learned. Although ICL was first observed in large language models, recent work has shown that transformers can learn simple algorithms such as logistic regression through ICL."

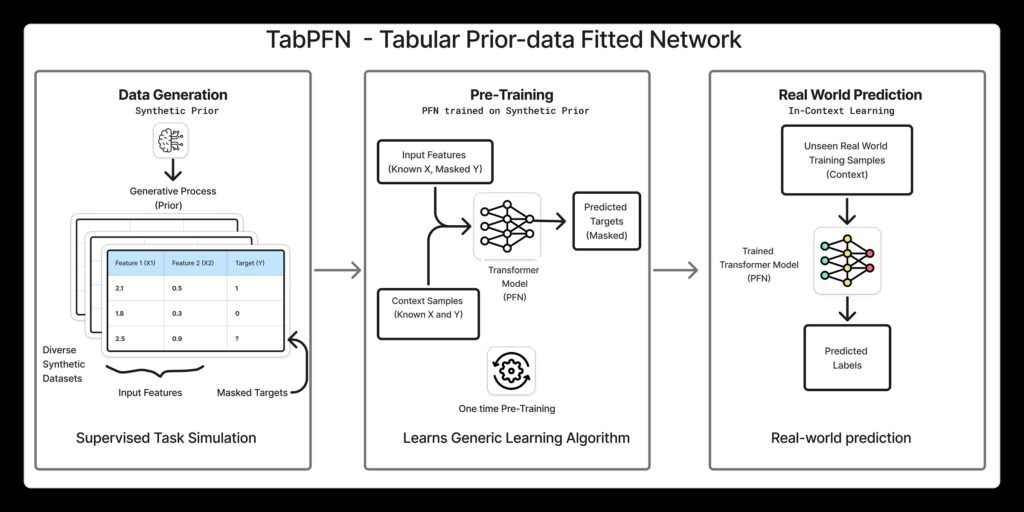

The pipeline can be divided into three major steps:

1. Generating Synthetic Datasets

TabPFN treats an entire dataset as a single data point (or a token) fed into the network. This means it requires exposure to a very large number of datasets during training. For this reason, training TabPFN starts with synthetic tabular datasets. Why synthetic? Unlike text or images, there are not many large and diverse real-world tabular datasets available, which makes synthetic data a key part of the setup. To put it into perspective, TabPFN 2 was trained on 130 million datasets.

The process of generating synthetic datasets is interesting in itself. TabPFN uses a highly parametric structural causal model to create tabular datasets with varied structures, feature relationships, noise levels and target functions. By sampling from this model, a large and diverse set of datasets can be generated, each acting as a training signal for the network. This encourages the model to learn general patterns across many types of tabular problems, rather than overfitting to any single dataset.
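To make the idea of sampling datasets from a structural causal model more concrete, here is a deliberately tiny sketch. It is not the prior used to train TabPFN: the function name `sample_synthetic_dataset`, the random upper-triangular graph, the `tanh` nonlinearity and the median-threshold target are all assumptions chosen only for illustration.

```python
import numpy as np

def sample_synthetic_dataset(rng, n_rows=200, n_nodes=6):
    """Sample one toy tabular dataset from a random causal graph (illustrative only)."""
    # Random DAG: node j may only depend on nodes with a smaller index.
    keep_edge = rng.random((n_nodes, n_nodes)) < 0.5
    weights = np.triu(rng.normal(size=(n_nodes, n_nodes)) * keep_edge, k=1)
    noise_scale = rng.uniform(0.1, 1.0)  # each dataset gets its own noise level

    values = np.zeros((n_rows, n_nodes))
    for j in range(n_nodes):
        parents = values @ weights[:, j]  # weighted sum of already-computed parents
        noise = rng.normal(scale=noise_scale, size=n_rows)
        values[:, j] = np.tanh(parents) + noise

    # Pick one node as the target and binarise it; the rest become features.
    target_node = int(rng.integers(n_nodes))
    target = values[:, target_node]
    y = (target > np.median(target)).astype(int)
    X = np.delete(values, target_node, axis=1)
    return X, y

rng = np.random.default_rng(0)
X, y = sample_synthetic_dataset(rng)
print(X.shape, y.mean())
```

Calling the function repeatedly with the same generator yields datasets with different graphs, noise levels and targets, which is the property the paragraph above relies on.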

2. Training

The figure below, taken from the Nature paper mentioned above, clearly demonstrates the training and inference process.

During training, a synthetic tabular dataset is sampled and split into X train, Y train, X test, and Y test. The Y test values are held out, and the remaining parts are passed to the neural network, which outputs a probability distribution for each Y test data point, as shown on the left of the figure.

The held-out Y test values are then evaluated under these predicted distributions. A cross-entropy loss is computed and the network is updated to minimize this loss. This completes one backpropagation step for a single dataset, and the process is then repeated for millions of synthetic datasets.
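As a small worked example of "evaluating the held-out labels under the predicted distributions", the snippet below computes a cross-entropy loss by hand. The probabilities and labels are made-up numbers for illustration, not outputs of TabPFN.

```python
import numpy as np

# Hypothetical network output: one class distribution per held-out test row
predicted = np.array([
    [0.9, 0.1],
    [0.2, 0.8],
    [0.6, 0.4],
])
y_test = np.array([0, 1, 1])  # the held-out true labels

# Probability the network assigned to the true class of each row: 0.9, 0.8, 0.4
true_class_probs = predicted[np.arange(len(y_test)), y_test]

# Cross-entropy: average negative log-probability of the true labels
loss = -np.mean(np.log(true_class_probs))
print(f"{loss:.4f}")  # 0.4149
```

The third row dominates the loss because the network put only 0.4 on the correct class, which is exactly the kind of mistake the gradient step pushes against.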

3. Inference

At test time, the trained TabPFN model is applied to a real dataset. This corresponds to the right side of the figure, where the model is used for inference. As you can see, the interface stays the same as during training. You provide X train, Y train, and X test, and the model outputs predictions for Y test through a single forward pass.

Most importantly, there is no retraining at test time. TabPFN performs what is effectively zero-shot inference, producing predictions directly without updating its weights.

Architecture

Let's also touch upon the core architecture of the model as described in the paper. At a high level, TabPFN adapts the transformer architecture to better suit tabular data. Instead of flattening a table into a long sequence, the model treats each value in the table as its own unit. It uses a two-stage attention mechanism whereby it first learns how features relate to each other within a single row and then learns how the same feature behaves across different rows.

This way of structuring attention is important because it matches how tabular data is actually organized. It also means the model does not care about the order of rows or columns, and it can handle tables that are larger than those it was trained on.
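The two-stage attention described above can be sketched in a few lines of NumPy. This is a toy under strong assumptions: there are no learned projections, no multiple heads, and no separation between training and test rows, and the names `self_attention` and `two_way_attention` are my own. It only shows the data flow and why row order does not matter.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(tokens):
    """Dot-product self-attention over the second-to-last axis.
    tokens has shape (..., n_tokens, d); no learned weights (toy simplification)."""
    d = tokens.shape[-1]
    scores = tokens @ np.swapaxes(tokens, -1, -2) / np.sqrt(d)
    return softmax(scores, axis=-1) @ tokens

def two_way_attention(cells):
    """cells has shape (n_rows, n_features, d): one embedding per table cell."""
    # Stage 1: within each row, the features attend to each other.
    cells = cells + self_attention(cells)
    # Stage 2: within each feature column, the rows attend to each other.
    by_feature = np.swapaxes(cells, 0, 1)  # (n_features, n_rows, d)
    by_feature = by_feature + self_attention(by_feature)
    return np.swapaxes(by_feature, 0, 1)   # back to (n_rows, n_features, d)

rng = np.random.default_rng(0)
cells = rng.normal(size=(5, 3, 4))          # 5 rows, 3 features, embedding size 4
out = two_way_attention(cells)

# Shuffling the rows of the input only shuffles the rows of the output.
perm = rng.permutation(5)
out_perm = two_way_attention(cells[perm])
print(np.allclose(out[perm], out_perm))
```

Because neither stage uses positional information, the same code runs unchanged on a table with more rows or more features than the one shown here.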

Implementation

Let's now walk through an implementation of TabPFN-2.5 and compare it against a vanilla XGBoost classifier to provide a familiar point of reference. While the model weights can be downloaded from Hugging Face, using Kaggle Notebooks is more straightforward since the model is readily available there and GPU support comes out of the box for faster inference. In either case, you need to accept the model terms before using it. After adding the TabPFN model to the Kaggle notebook environment, run the following cell to import it.

# Importing the model
import os
os.environ["TABPFN_MODEL_CACHE_DIR"] = "/kaggle/input/tabpfn-2-5/pytorch/default/2"

You can find the complete code in the accompanying Kaggle notebook.

Installation

You can access TabPFN in two ways: either as a Python package that runs locally, or as an API client that runs the model in the cloud:

# Python package
pip install tabpfn

# As an API client
pip install tabpfn-client

Dataset: Kaggle Playground competition dataset

To get a better sense of how TabPFN performs in a real-world setting, I tested it on a Kaggle Playground competition that concluded a few months ago. The task, Binary Prediction with a Rainfall Dataset (MIT license), requires predicting the probability of rainfall for each id in the test set. Evaluation is done using ROC-AUC, which makes this a good fit for probability-based models like TabPFN. The training data looks like this:
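For readers less familiar with the metric, ROC-AUC can be read as the fraction of (rainy, non-rainy) pairs in which the rainy example receives the higher predicted probability. The helper below, `pairwise_auc`, is a hypothetical name for a plain-Python sketch of that definition on made-up numbers. In practice you would use `roc_auc_score` from scikit-learn, as the code later in this article does.

```python
def pairwise_auc(y_true, scores):
    """ROC-AUC as the share of positive/negative pairs ranked correctly (ties count half)."""
    pos = [s for s, y in zip(scores, y_true) if y == 1]
    neg = [s for s, y in zip(scores, y_true) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Made-up labels and predicted probabilities
auc = pairwise_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
print(auc)  # 0.75
```

Only the ordering of the scores matters here, so a model is rewarded for ranking rainy days above dry ones rather than for hitting any particular threshold.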

Training a TabPFN Classifier

Training a TabPFN classifier is straightforward and follows a familiar scikit-learn style interface. While there is no task-specific training in the traditional sense, it is still important to enable GPU support, otherwise inference can be noticeably slower. The following code snippet walks through preparing the data, fitting a TabPFN classifier and evaluating its performance using the ROC-AUC score.

# Importing necessary libraries
from tabpfn import TabPFNClassifier
import numpy as np
import pandas as pd
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split

# `train` is the competition's training DataFrame, loaded earlier in the notebook

# Select feature columns
FEATURES = [c for c in train.columns if c not in ["rainfall", "id"]]
X = train[FEATURES].copy()
y = train["rainfall"].copy()

# Split data into train and validation sets
train_index, valid_index = train_test_split(
    train.index,
    test_size=0.2,
    random_state=42
)
x_train = X.loc[train_index].copy()
y_train = y.loc[train_index].copy()
x_valid = X.loc[valid_index].copy()
y_valid = y.loc[valid_index].copy()

# Initialize and fit TabPFN
model_pfn = TabPFNClassifier(device=["cuda:0", "cuda:1"])
model_pfn.fit(x_train, y_train)

# Predict class probabilities
probs_pfn = model_pfn.predict_proba(x_valid)

# Use probability of the positive class
pos_probs = probs_pfn[:, 1]

# Evaluate using ROC AUC
print(f"ROC AUC: {roc_auc_score(y_valid, pos_probs):.4f}")

-------------------------------------------------
ROC AUC: 0.8722

Next, let's train a basic XGBoost classifier.

Training an XGBoost Classifier

from xgboost import XGBClassifier

# Initialize XGBoost classifier
model_xgb = XGBClassifier(
    objective="binary:logistic",
    tree_method="hist",
    device="cuda",
    enable_categorical=True,
    random_state=42,
    n_jobs=1
)

# Train the model
model_xgb.fit(x_train, y_train)

# Predict class probabilities
probs_xgb = model_xgb.predict_proba(x_valid)

# Use probability of the positive class
pos_probs_xgb = probs_xgb[:, 1]

# Evaluate using ROC AUC
print(f"ROC AUC: {roc_auc_score(y_valid, pos_probs_xgb):.4f}")

------------------------------------------------------------
ROC AUC: 0.8515

As you can see, TabPFN performs quite well out of the box. While XGBoost can certainly be tuned further, my intent here is to compare basic, vanilla implementations rather than optimised models. The TabPFN submission placed me 22nd on the public leaderboard. Below are the top 3 scores for reference.

What about model explainability?

Transformer models are not inherently interpretable, so post-hoc interpretability methods like SHAP (SHapley Additive Explanations) are commonly used to analyze individual predictions and feature contributions. TabPFN provides a dedicated Interpretability Extension that integrates with SHAP, making it easier to inspect and reason about the model's predictions. To access it, you'll need to install the extension first:

# Install the interpretability extension:
pip install "tabpfn-extensions[interpretability]"

from tabpfn_extensions import interpretability

# Calculate SHAP values for the first 50 rows
# (x_test is not defined above; it is assumed to be a DataFrame with the FEATURES columns)
shap_values = interpretability.shap.get_shap_values(
    estimator=model_pfn,
    test_x=x_test[:50],
    attribute_names=FEATURES,
    algorithm="permutation",
)

# Create visualization
fig = interpretability.shap.plot_shap(shap_values)

The plot on the left shows the average SHAP feature importance across the entire dataset, giving a global view of which features matter most to the model. The plot on the right is a SHAP summary (beeswarm) plot, which provides a more granular view by showing SHAP values for each feature across individual predictions.

From the above plots, it is evident that cloud cover, sunshine, humidity, and dew point have the largest overall impact on the model's predictions, while features such as wind direction, pressure, and temperature-related variables play a comparatively smaller role.

It is important to note that SHAP explains the model's learned relationships, not physical causality.
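To build some intuition for what a SHAP value is, the sketch below computes exact Shapley values for a tiny hand-written function by averaging each feature's marginal contribution over every possible feature ordering. The toy `model`, the helper `shapley_values` and the zero baseline are assumptions for illustration. This is not how the SHAP library or the TabPFN extension computes its estimates, since exact enumeration is only feasible for a handful of features.

```python
from itertools import permutations

def model(a, b, c):
    # Toy model: feature c is deliberately ignored
    return 3 * a + 2 * b + a * b

def shapley_values(f, instance, baseline):
    """Exact Shapley values by enumerating all feature orderings."""
    n = len(instance)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous = f(*current)
        for i in order:
            current[i] = instance[i]        # switch feature i from baseline to its real value
            value = f(*current)
            totals[i] += value - previous   # marginal contribution of feature i
            previous = value
    return [t / len(orderings) for t in totals]

phi = shapley_values(model, instance=(1, 1, 1), baseline=(0, 0, 0))
print(phi)  # [3.5, 2.5, 0.0]
```

The three values add up to 6, the difference between the prediction for the instance and the prediction for the baseline, and the unused feature gets exactly zero. The attribution describes how this function behaves, which is the same caveat as above: it says nothing about what causes rain.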

Conclusion

There is a lot more to TabPFN than what I have covered in this article. What I personally liked is both the underlying idea and how easy it is to get started. There are a lot of aspects that I have not touched on here, such as TabPFN's use in time series forecasting, anomaly detection, generating synthetic tabular data, and extracting embeddings from TabPFN models.

Another area I'm particularly interested in exploring is fine-tuning, where these models can be adapted to data from a specific domain. That said, this article was meant to be a light introduction based on my first hands-on experience. I plan to explore these additional capabilities in more depth in future posts. For now, the official documentation is a good place to dive deeper.

Note: All images, unless otherwise stated, are created by the author.