Introduction

In September 2025, I took part in a hackathon organized by Mistral in Paris. All of the teams had to create an MCP server and integrate it into Mistral.

Although my team didn’t win anything, it was a fantastic personal experience! Moreover, I had never created an MCP server before, so it allowed me to gain direct experience with new technologies.

As a result, we created Prédictif, an MCP server that lets you train and test machine learning models directly in the chat and persist saved datasets, results, and models across different conversations.

Given that I really enjoyed the event, I decided to take it a step further and write this article to provide other engineers with a simple introduction to MCP and a guide to creating an MCP server from scratch.

If you are curious, the hackathon solutions from all teams are here.

MCP

AI agents and MCP servers are relatively new technologies that are currently in high demand in the machine learning world.

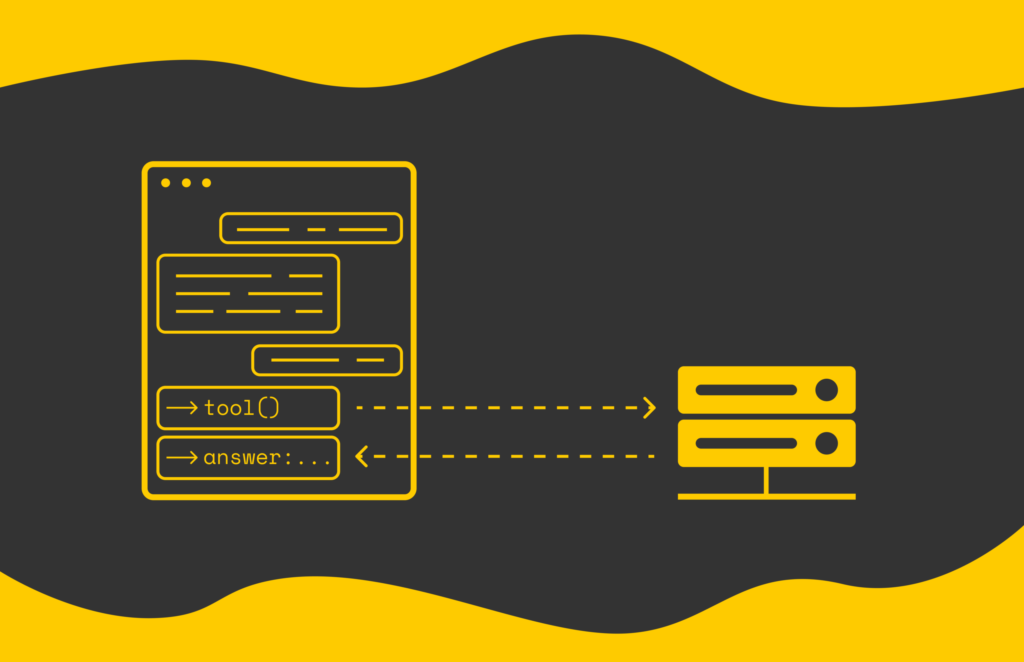

MCP stands for “Model Context Protocol” and was initially developed in 2024 by Anthropic and then open-sourced. The motivation for creating MCP was the fact that different LLM vendors (OpenAI, Google, Mistral, etc.) provided different APIs for creating external tools (connectors) for their LLMs.

As a result, if a developer created a connector for OpenAI, they would have to perform another integration to plug it into Mistral, and so on. This approach did not allow connectors to be reused easily. That’s where MCP stepped in.

With MCP, developers can create a tool and reuse it across multiple MCP-compatible LLMs. This results in a much simpler workflow, as developers no longer need to perform additional integrations: the same tool is compatible with many LLMs.

For information, MCP uses the JSON-RPC protocol.
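To make the JSON-RPC remark more concrete, here is a minimal sketch of what a tool invocation could look like on the wire. It assumes the JSON-RPC 2.0 envelope and the `tools/call` method from the MCP specification; the helper `make_request` and the tool name `welcome` are illustrative names chosen for this sketch, not part of any library.

```python
import json
from itertools import count

# JSON-RPC ids let the client match each response to its request.
_ids = count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request envelope (hypothetical helper)."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Ask a server to run a tool named "welcome" with a single argument.
call = make_request(
    "tools/call",
    {"name": "welcome", "arguments": {"name": "Francisco"}},
)
print(json.dumps(call, indent=2))
```

In practice an MCP client library builds these messages for you; the sketch only shows the shape of the payload.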

Example

Step 1

We are going to build a very simple MCP server with only one tool, whose purpose is to greet the user. For that, we are going to use FastMCP, a library that allows us to build MCP servers in a Pythonic way.

First of all, we need to set up the environment:

    uv init hello-mcp
    cd hello-mcp

Add a fastmcp dependency (this will update the pyproject.toml file):

    uv add fastmcp

Create a main.py file and put the following code there:

    from mcp.server.fastmcp import FastMCP
    from pydantic import Field

    # Server configuration: listen on all interfaces, port 3000, stateless HTTP mode.
    mcp = FastMCP(
        name="Hello MCP Server",
        host="0.0.0.0",
        port=3000,
        stateless_http=True,
        debug=False,
    )

    # Register the function below as an MCP tool.
    @mcp.tool(
        title="Welcome a user",
        description="Return a friendly welcome message for the user.",
    )
    def welcome(
        name: str = Field(description="Name of the user")
    ) -> str:
        return f"Welcome {name} from this amazing application!"

    if __name__ == "__main__":
        # Serve over the streamable HTTP transport.
        mcp.run(transport="streamable-http")

Great! Now the MCP server is complete and can even be run locally:

    uv run python main.py

Next, create a GitHub repository and push the local project directory there.
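Before moving on to deployment, it may help to see, in miniature, what a server does when a tool is registered and then called. The following is a toy sketch that uses only the standard library. It is not how FastMCP is implemented; the `TOOLS` registry, the `tool` decorator, and the `handle` function are hypothetical names invented for this illustration. The result shape (a `content` list with a text item) and the error codes follow the MCP and JSON-RPC 2.0 specifications.

```python
from typing import Callable

# Toy registry mapping tool names to functions (not FastMCP internals).
TOOLS: dict[str, Callable[..., str]] = {}


def tool(func: Callable[..., str]) -> Callable[..., str]:
    """Register a function under its own name and return it unchanged."""
    TOOLS[func.__name__] = func
    return func


@tool
def welcome(name: str) -> str:
    return f"Welcome {name} from this amazing application!"


def handle(request: dict) -> dict:
    """Answer a JSON-RPC 'tools/call' request using the toy registry."""
    base = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        return {**base, "error": {"code": -32601, "message": "Method not found"}}
    params = request.get("params", {})
    func = TOOLS.get(params.get("name"))
    if func is None:
        return {**base, "error": {"code": -32602, "message": "Unknown tool"}}
    text = func(**params.get("arguments", {}))
    return {**base, "result": {"content": [{"type": "text", "text": text}], "isError": False}}


response = handle({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "welcome", "arguments": {"name": "Francisco"}},
})
print(response["result"]["content"][0]["text"])
```

A real server also handles initialization, tool listing, argument validation, and transport details, which is precisely the work a library such as FastMCP takes off your hands.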

Step 2

Our MCP server is ready, but it isn’t deployed yet. For deployment, we are going to use Alpic, a platform that allows us to deploy MCP servers in literally a few clicks. To get started, create an account and sign in to Alpic.

In the menu, choose the option to create a new project. Alpic proposes importing an existing Git repository. If you connect your GitHub account to Alpic, you should be able to see the list of available repositories that can be used for deployment. Select the one corresponding to the MCP server and click “Import”.

In the following window, Alpic proposes several options to configure the environment. For our example, you can leave these options at their defaults and click “Deploy”.

After that, Alpic will build a Docker container from the imported repository. Depending on the complexity, deployment may take some time. If everything goes well, you will see the “Deployed” status with a green circle next to it.

Under the label “Domain”, there is the JSON-RPC address of the deployed server. Copy it for now, as we will need it to connect the server in the next step.
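If you want to check the address from a script before connecting it to an LLM provider, a rough sketch follows. It only constructs the HTTP request for the MCP `initialize` handshake and does not send it. The URL is a placeholder to replace with the address copied from Alpic, and the helper name, the client name, and the protocol version string are assumptions made for this sketch. The `Accept` header lists both JSON and event streams, as the streamable HTTP transport in the MCP specification expects.

```python
import json
import urllib.request


def build_initialize_request(url: str) -> urllib.request.Request:
    """Prepare (but do not send) an MCP 'initialize' request (hypothetical helper)."""
    body = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-06-18",  # assumed spec revision
            "capabilities": {},
            "clientInfo": {"name": "smoke-test", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


# Placeholder address: replace it with the one shown under "Domain".
SERVER_URL = "https://your-server.example/mcp"
request = build_initialize_request(SERVER_URL)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request, timeout=10)
```

A dedicated MCP client library is the more robust choice for anything beyond a quick check, since it also handles the rest of the handshake and the streamed responses.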

Step 3

The MCP server is built. Now we need to connect it to the LLM provider so that we can use it in conversations. In our example, we will use Mistral, but the connection process should be similar for other LLM providers.

In the left menu, select the “Connectors” option, which will open a new window with the available connectors. Connectors enable LLMs to connect to MCP servers. For example, if you add a GitHub connector to Mistral, then in the chat, if needed, the LLM will be able to search code in your repositories to answer a given prompt.

In our case, we want to import the custom MCP server we have just built, so we click on the “Add connector” button.

In the modal window, navigate to “Custom MCP Connector” and fill in the necessary information as shown in the screenshot below. For the connector server, use the HTTPS address of the MCP server deployed in step 2.

After the connector is added, you can see it in the connectors’ menu:

If you click on the MCP connector, in the “Functions” subwindow, you will see a list of the tools implemented in the MCP server. In our example, we have only implemented a single tool, “Welcome”, so it is the only function we see here.
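The list of functions comes from the tool descriptions the server advertises. As a rough sketch, a descriptor for our tool could look like the dictionary below, using the `name`, `title`, `description`, and `inputSchema` fields defined by the MCP specification. The exact schema FastMCP generates may contain extra keys, and the `describe` helper is a hypothetical function written for this illustration.

```python
# Approximate descriptor a server might advertise for the greeting tool.
welcome_tool = {
    "name": "welcome",
    "title": "Welcome a user",
    "description": "Return a friendly welcome message for the user.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "name": {"type": "string", "description": "Name of the user"},
        },
        "required": ["name"],
    },
}


def describe(tool: dict) -> str:
    """Render a one-line summary of a tool descriptor (hypothetical helper)."""
    schema = tool.get("inputSchema", {})
    required = set(schema.get("required", []))
    params = [
        f"{key}: {spec.get('type', 'any')}" + ("" if key in required else " (optional)")
        for key, spec in schema.get("properties", {}).items()
    ]
    label = tool.get("title") or tool["name"]
    return f"{label} [{tool['name']}]({', '.join(params)})"


print(describe(welcome_tool))
```

The LLM relies on the description and the input schema to decide when to call the tool and which arguments to pass, so clear wording there matters.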

Step 4

Now, return to the chat and click the “Enable tools” button, which allows you to specify the tools or MCP servers the LLM is permitted to use.

Click on the checkbox corresponding to our connector.

Now it’s time to test the connector. We can ask the LLM to use the “Welcome” tool to greet the user. In Mistral chat, if the LLM recognizes that it needs to use an external tool, a modal window appears, displaying the tool name (“Welcome”) and the arguments it will take (name = “Francisco”).

To confirm the choice, click on “Continue”. In that case, we will get a response:

Excellent! Our MCP server is working correctly. Similarly, we can create more complex tools.

Conclusion

In this article, we introduced MCP as an efficient mechanism for creating connectors for LLM vendors. Its simplicity and reusability have made MCP very popular, allowing developers to reduce the time required to implement LLM plugins.

Additionally, we examined a simple example that demonstrates how to create an MCP server. In reality, nothing prevents developers from building more advanced MCP applications and leveraging additional functionality from LLM providers.

For example, in the case of Mistral, MCP servers can use the functionality of Libraries and Documents, allowing tools to take as input not only text prompts but also uploaded files. The results can be saved in the chat and made persistent across different conversations.

Resources

All images unless otherwise noted are by the author.