As deep studying fashions develop bigger and datasets develop, practitioners face an more and more frequent bottleneck: GPU reminiscence bandwidth. Whereas cutting-edge {hardware} presents FP8 precision to speed up coaching and inference, most knowledge scientists and ML engineers work with older GPUs that lack this functionality.

This hole within the ecosystem is what motivated me to construct Feather, an open-source library that utilises a software-based method to ship FP8-like efficiency enhancements on extensively out there {hardware}. I created this instrument to make environment friendly deep studying extra accessible to the broader ML neighborhood, and I welcome contributions

Notation & Abbreviations

- FPX: X-bit floating level quantity

- UX: X-bit unsigned integer

- GPU: Graphics processing unit

- SRAM: Static RAM (on-chip GPU Cache)

- HBM: Excessive bandwidth reminiscence (GPU VRAM)

- GEMV: Basic Matrix-Vector multiplication

Motivation

FP8 processing has confirmed efficient within the Deep Studying neighborhood [1]; nonetheless, solely particular current {hardware} architectures (Ada and Blackwell) help it, limiting its advantages for practitioners and researchers to utilise it. I actually have an `Nvidia RTX 3050 6GB Laptop computer GPU`, which sadly doesn’t help FP8 operations on the {hardware} degree.

Impressed by software-based options like (software-accelerated rendering on computer systems that don’t help native {hardware} acceleration for gaming), the article proposes an fascinating answer that may utilise the ability of FP8 datatypes

Packing FP8 & FP16 in FP32 containers

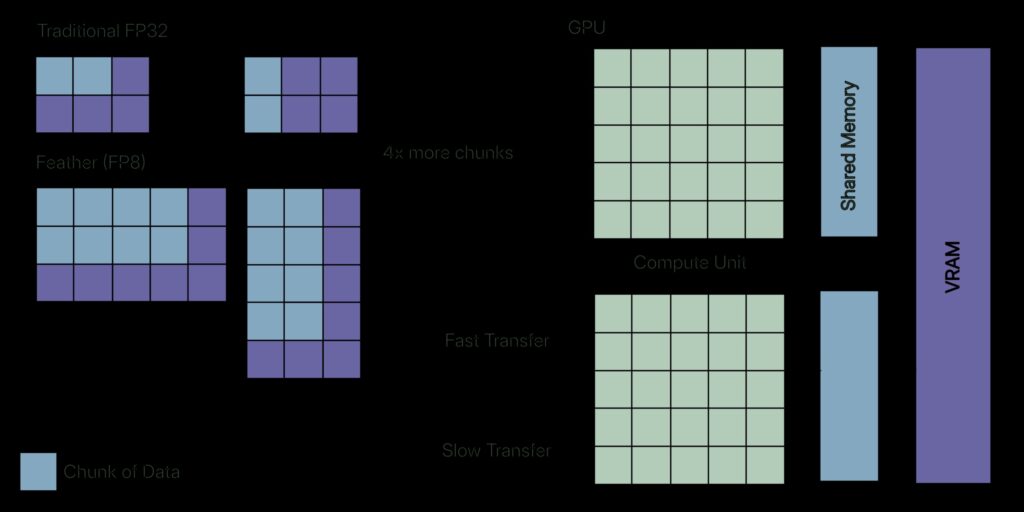

Impressed by bitwise operations and packing strategies, the article presents an algorithm that packs two FP16s or 4 FP8s right into a single FP32. This permits for packing twice or 4 occasions the reminiscence, benefiting from a decrease reminiscence footprint, whereas sacrificing solely a small quantity of precision.

One may argue that we’re performing redundant computation, “Pack -> Load -> Unpack -> Compute.” Nevertheless, take into account Deep Studying operations; More often than not, these operations are memory-bound slightly than compute-bound. This is similar bottleneck that algorithms like FlashAttention deal with; nonetheless, FlashAttention utilises tiling to maintain knowledge in quick SRAM, whereas Feather compresses knowledge to cut back reminiscence site visitors.

GPU Reminiscence Hierarchy

Check out this diagram. SRAM is the quickest accessible GPU reminiscence area and has the best bandwidth (excluding the register itself), however is proscribed to solely 20MB. HBM could be seen because the VRAM of the GPU itself, which has roughly 1/seventh the bandwidth of SRAM.

The GPU cores are quick sufficient to finish the computation immediately, however they spend most of their time sitting idle, ready for the info to complete loading and writing again. That is what I imply by memory-bound: the bottleneck right here isn’t the maths, however the knowledge switch between the hierarchy of reminiscence within the GPU.

Decrease Precision Sorts & Bandwidth

More often than not, values throughout computation are restricted to ranges round zero as a result of normalisation. Engineers developed lower-precision sorts similar to FP8 and FP16, which permit for greater bandwidth. One may be confused about how decreasing the precision permits for greater bandwidth. If we take a more in-depth look, we’re successfully loading two values within the place of 1 for the FP16 sort and 4 values within the place of 1 for the FP8 sort. We’re buying and selling off precision for greater bandwidth to sort out memory-bound operations.

{Hardware} Stage Assist

Similar to AVX-512 directions, that are supported solely on a restricted variety of {hardware} platforms, FP8 and FP16 directions and registers are additionally restricted by {hardware} and can be found solely on the current ones. If you’re on an RTX-30 or RTX-20 sequence GPU from Nvidia, you then will be unable to make the most of this decrease precision FP8 sort. That is precisely the issue that Feather makes an attempt to unravel.

Packing Methodology

Utilizing bitwise operators, one can simply pack the FP16 sort right into a FP32. The algorithm is described beneath.

Packing FP16

- Forged the enter FP32 right into a FP16; this step could be carried out with ease utilizing numpy’s astype perform.

- Forged them to U16 after which to U32; this units the higher 16 bits to 0s and decrease 16 bits to the precise FP16.

- Shift one in all them by 16 utilizing the bitwise LSHIFT operator, and mix each of them utilizing the bitwise OR operator.

Unpacking FP16

- Extract the decrease 16 bits utilizing the bitwise AND operator and masks 0xFFFF.

- Extract the higher 16 bits utilizing the RSHIFT operation by 16 after which carry out a bitwise AND operation with the masks 0xFFFF.

- Forged each U16 values again to FP16 and to FP32 if wanted.

Packing FP8

FP8 has two extensively used codecs – E5M2 & E4M3. One can not use the identical algorithm used for packing two FP16 into FP32 as a result of the CPU doesn’t help FP8 sorts natively, however does for FP16 (half precision); that is the rationale that np.float8 doesn’t exist.

Casting an FP16 to FP8-E5M2 is easy, as seen within the determine, as a result of each have the identical variety of exponent bits and differ solely of their fraction.

FP8-E5M2 Packing

- Forged the enter FP32 right into a FP16; this step could be carried out with ease utilizing numpy’s astype perform, or get the enter itself as FP16.

- Forged to U16, LSHIFT by 8, then RSHIFT by 8 to isolate the higher 8 bits

- Do that for all 4 FP32s or FP16s.

- Now utilizing the LSHIFT operator, shift them by 0, 8, 16 and 24 models and mix them utilizing the bitwise OR operator.

As soon as once more, unpacking ought to be simple; it’s the precise reverse of packing.

Packing an FP8-E4M3 shouldn’t be as straightforward and easy as packing an FP16 or FP8-E5M2, because of the exponent bits mismatch.

As an alternative of implementing it from scratch, the library makes use of the ml_dtypes library, which already does the casting math.

The ml_dtypes library gives help for generally used FP8 requirements, similar to E5M2 and E4M3 casting, for NumPy arrays. Utilizing the identical astype perform, we will carry out casting simply as we did for FP16 sorts. The Algorithm is precisely equivalent to how we pack FP16, so I’m skipping it right here.

Triton GPU Kernels

After we pack, we want an algorithm (kernel) to utilise this packed datatype and carry out the computation. Passing the packed datatype to a kernel carried out for FP32 or FP64 will lead to undefined computation as a result of we’ve already corrupted the FP32 or FP64 being handed. Writing a kernel that takes the packed datatype as enter in CUDA shouldn’t be a simple activity and is error-prone. That is precisely the place Triton shines; it’s a Area-Particular Language library that leverages a customized intermediate illustration for GPU kernels. In layman’s phrases, it permits one to write down GPU kernels in Python itself with out the necessity to write CUDA kernels in C.

Triton kernels do precisely what was talked about beforehand; the algorithm is as follows:

- Load the packed array into reminiscence

- Unpack the reminiscence and upcast it to FP32 for accumulation duties

- Carry out the computation

The reader ought to observe that when performing the computation, upcasting is used to forestall overflows. Subsequently, from a computational perspective, there isn’t a benefit. Nevertheless, from the angle of bandwidth, we’re loading reminiscence twice or 4 occasions with out compromising the bandwidth.

Triton Kernel Implementation (pseudocode)

@triton.jit

def gemv_fp8_kernel(packed_matrix_ptr, packed_vector_ptr, out_ptr):

# Get present row to course of

row_id = get_program_id()

# Initialize accumulator for dot product

accumulator = 0

# Iterate over row in blocks

for every block in row:

# Load packed FP32 values (every accommodates 4 FP8s)

packed_matrix = load(packed_matrix_ptr)

packed_vector = load(packed_vector_ptr)

# Unpack the FP32 into 4 FP8 values

m_a, m_b, m_c, m_d = unpack_fp8(packed_matrix)

v_a, v_b, v_c, v_d = unpack_fp8(packed_vector)

# Upcast to FP32 and compute partial dot merchandise

accumulator += (m_a * v_a) + (m_b * v_b) + (m_c * v_c) + (m_d * v_d)

# Retailer last outcome

retailer(out_ptr, accumulator)Outcomes

{Hardware}: NVIDIA GeForce RTX 3050 6GB VRAM

CUDA Model: 13.0

Python Model: 3.13.9

GEMV Benchmark (M = 16384, N = 16384) (MxN matrix)

| Implementation | Time (microseconds) | Speedup |

| Pytorch (FP32) | 5,635 | (Baseline) |

| Feather (FP8-E4M3) | 2,703 | 2.13x |

| Feather (FP8-E5M2) | 1,679 | 3.3x |

The theoretical efficiency increase that may be achieved is 4x; 3.3x is superb compared, with the remaining overhead primarily stemming from pack/unpack operations and kernel launch prices.

E5M2 is quicker than E4M3 because of the simpler unpacking, however E4M3 presents higher precision. Nevertheless, it’s considerably extra advanced to unpack (Feather makes use of a separate GPU kernel to unpack the E4M3 format).

Flash Consideration Benchmark (Sequence Size = 8192, Embedding Dimension = 512)

| Implementation | Time (microseconds) | Speedup |

| Pytorch (FP32) | 33,290 | (Baseline) |

| Feather (FP8-E5M2) | 9,887 | ~3.3x |

Accuracy & Precision

Testing with random matrices (integer distributions within the vary [-3, 3] and customary regular distributions) reveals that each E4M3 and E5M2 preserve numerical outcomes inside sensible tolerances for deep studying operations. The buildup errors stay manageable for typical workload sizes; nonetheless, customers requiring strict numerical precision ought to validate their particular use case.

When do you have to use Feather?

Use instances for Feather are usually not restricted; one can use Feather wherever FP8 packing and unpacking have a bonus, similar to

- Giant matrix-vector merchandise, the place loading and unloading are the bottlenecks.

- Consideration-like memory-bound kernels.

- Inference or fine-tuning on native RTX 30 or 20 sequence.

- Batch processing, the place packing overhead is amortised

When do you have to not use Feather?

- You might have RTX 40-series or H100 GPUs (native FP8 is quicker).

- Workloads are compute-bound slightly than bandwidth- or memory-bound.

- You want assured precision.

Limitations of Feather

Feather is at present within the early phases of prototyping with a number of areas for enchancment.

- Restricted help for operations; at present, Feather helps solely the dot product, GEMV subroutine and FlashAttention.

- Accuracy validation for full ML workloads; at present, Feather’s accuracy is validated just for operations, not for end-to-end ML workloads.

- Integration is at present restricted; Feather is a standalone implementation. Integration with PyTorch and help for autograd would make it extra production-ready.

The undertaking is open supply; neighborhood contributions are welcome! You’ll be able to check out the code by merely following the directions on GitHub.

Picture License: All the pictures are made by the writer. Adaptation sources are clearly talked about in respective captions.