Each week, new fashions are launched, together with dozens of benchmarks. However what does that imply for a practitioner deciding which mannequin to make use of? How ought to they strategy assessing the standard of a newly launched mannequin? And the way do benchmarked capabilities like reasoning translate into real-world worth?

On this publish, we’ll consider the newly launched NVIDIA Llama Nemotron Super 49B 1.5 mannequin. We use syftr, our generative AI workflow exploration and analysis framework, to floor the evaluation in an actual enterprise drawback and discover the tradeoffs of a multi-objective evaluation.

After analyzing greater than a thousand workflows, we provide actionable steerage on the use circumstances the place the mannequin shines.

The variety of parameters depend, however they’re not all the pieces

It must be no shock that parameter depend drives a lot of the price of serving LLMs. Weights should be loaded into reminiscence, and key-value (KV) matrices cached. Greater fashions sometimes carry out higher — frontier fashions are nearly at all times large. GPU developments have been foundational to AI’s rise by enabling these more and more giant fashions.

However scale alone doesn’t assure efficiency.

Newer generations of fashions usually outperform their bigger predecessors, even on the identical parameter depend. The Nemotron fashions from NVIDIA are a very good instance. The fashions construct on present open fashions, , pruning pointless parameters, and distilling new capabilities.

Meaning a smaller Nemotron mannequin can usually outperform its bigger predecessor throughout a number of dimensions: sooner inference, decrease reminiscence use, and stronger reasoning.

We wished to quantify these tradeoffs — particularly in opposition to among the largest fashions within the present era.

How rather more correct? How rather more environment friendly? So, we loaded them onto our cluster and started working.

How we assessed accuracy and price

Step 1: Determine the issue

With fashions in hand, we wanted a real-world problem. One which exams reasoning, comprehension, and efficiency inside an agentic AI move.

Image a junior monetary analyst attempting to ramp up on an organization. They need to have the ability to reply questions like: “Does Boeing have an enhancing gross margin profile as of FY2022?”

However additionally they want to clarify the relevance of that metric: “If gross margin isn’t a helpful metric, clarify why.”

To check our fashions, we’ll assign it the duty of synthesizing information delivered via an agentic AI move after which measure their means to effectively ship an correct reply.

To reply each varieties of questions appropriately, the fashions must:

- Pull information from a number of monetary paperwork (equivalent to annual and quarterly studies)

- Evaluate and interpret figures throughout time intervals

- Synthesize an evidence grounded in context

FinanceBench benchmark is designed for precisely such a activity. It pairs filings with expert-validated Q&A, making it a robust proxy for actual enterprise workflows. That’s the testbed we used.

Step 2: Fashions to workflows

To check in a context like this, it’s good to construct and perceive the total workflow — not simply the immediate — so you’ll be able to feed the best context into the mannequin.

And it’s a must to do that each time you consider a brand new mannequin–workflow pair.

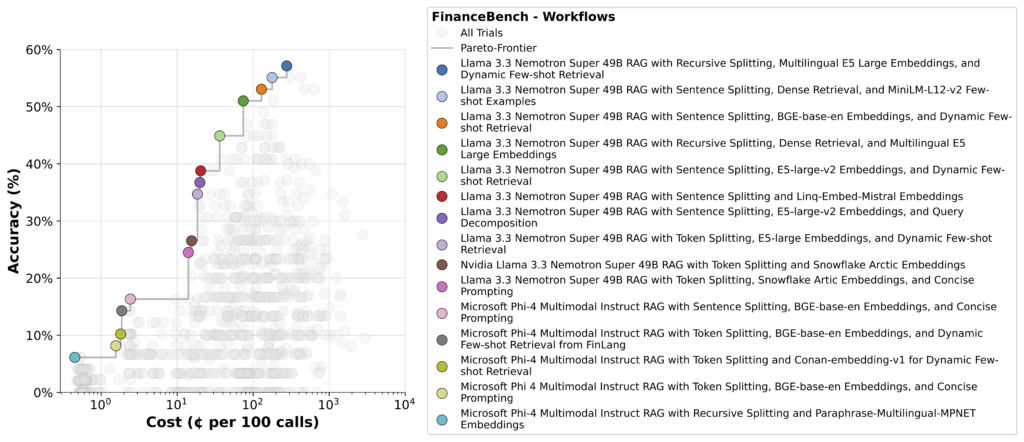

With syftr, we’re capable of run tons of of workflows throughout totally different fashions, shortly surfacing tradeoffs. The result’s a set of Pareto-optimal flows just like the one proven under.

Within the decrease left, you’ll see easy pipelines utilizing one other mannequin because the synthesizing LLM. These are cheap to run, however their accuracy is poor.

Within the higher proper are probably the most correct — however extra costly since these sometimes depend on agentic methods that break down the query, make a number of LLM calls, and analyze every chunk independently. Because of this reasoning requires environment friendly computing and optimizations to maintain inference prices in verify.

Nemotron reveals up strongly right here, holding its personal throughout the remaining Pareto frontier.

Step 3: Deep dive

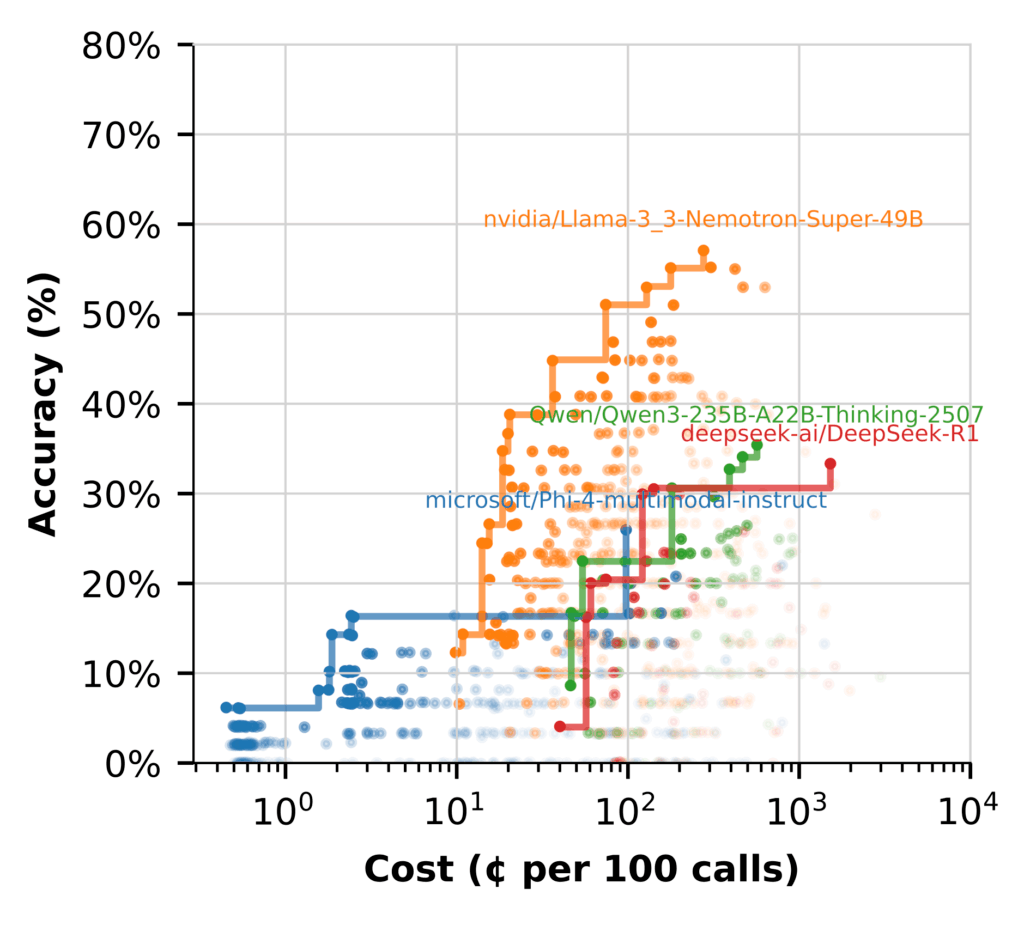

To higher perceive mannequin efficiency, we grouped workflows by the LLM used at every step and plotted the Pareto frontier for every.

The efficiency hole is evident. Most fashions battle to get anyplace close to Nemotron’s efficiency. Some have hassle producing cheap solutions with out heavy context engineering. Even then, it stays much less correct and costlier than bigger fashions.

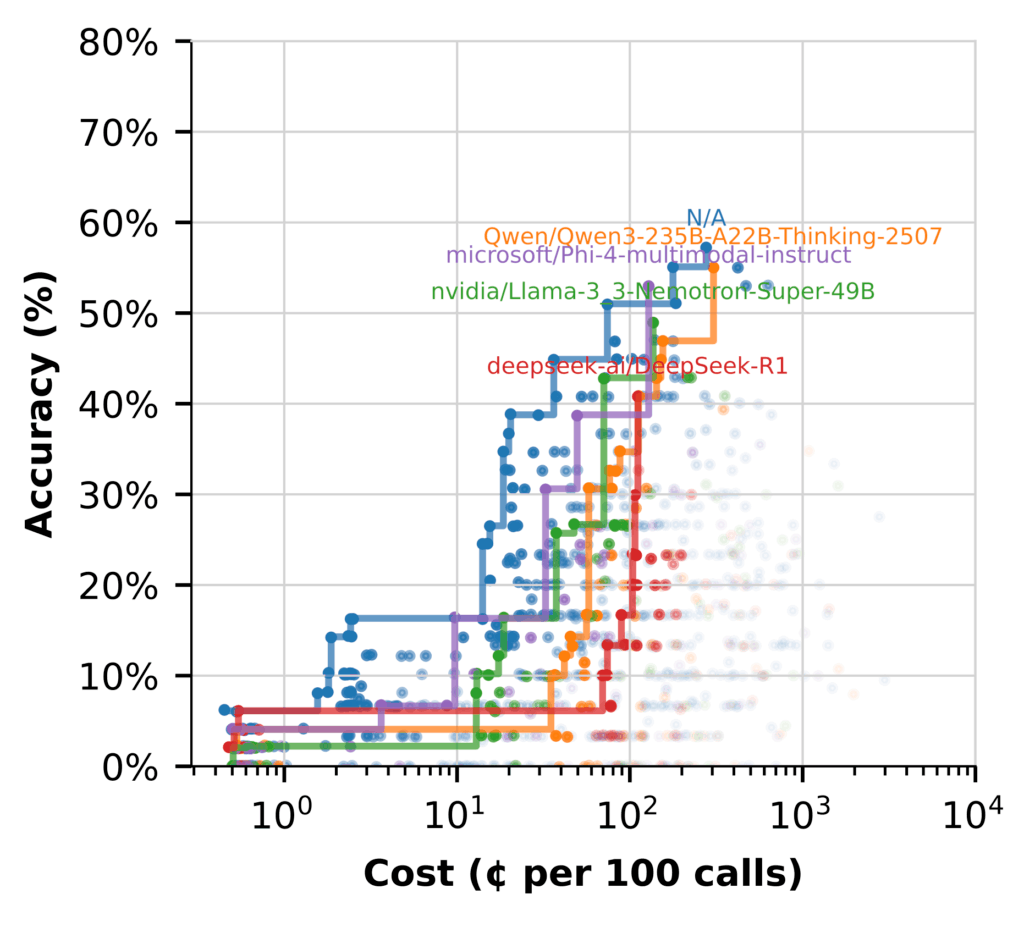

However after we swap to utilizing the LLM for (Hypothetical Doc Embeddings) HyDE, the story adjustments. (Flows marked N/A don’t embody HyDE.)

Right here, a number of fashions carry out nicely, with affordability whereas delivering excessive‑accuracy flows.

Key takeaways:

- Nemotron shines in synthesis, producing excessive‑constancy solutions with out added value

- Utilizing different fashions that excel at HyDE frees Nemotron to give attention to high-value reasoning

- Hybrid flows are probably the most environment friendly setup, utilizing every mannequin the place it performs finest

Optimizing for worth, not simply measurement

When evaluating new fashions, success isn’t nearly accuracy. It’s about discovering the best stability of high quality, value, and match on your workflow. Measuring latency, effectivity, and general affect helps make sure you’re getting actual worth

NVIDIA Nemotron fashions are constructed with this in thoughts. They’re designed not just for energy, however for sensible efficiency that helps groups drive affect with out runaway prices.

Pair that with a structured, Syftr-guided analysis course of, and also you’ve bought a repeatable option to keep forward of mannequin churn whereas preserving compute and finances in verify.

To discover syftr additional, take a look at the GitHub repository.