The move from a traditional data warehouse to Data Mesh feels less like an evolution and more like an identity crisis.

One day, everything works (maybe “works” is a stretch, but everybody knows the lay of the land). The next day, a new CDO arrives with exciting news: “We’re moving to Data Mesh.” And suddenly, years of carefully designed pipelines, models, and conventions are questioned.

In this article, I want to step away from theory and buzzwords and walk through a practical transition, from a centralised data “monolith” to a contract-driven Data Mesh, using a concrete example: website analytics.

The standardized data contract becomes the critical enabler for this transition. By adhering to an open, structured contract specification, schema definitions, business semantics, and quality rules are expressed in a consistent format that ETL and data quality tools can interpret directly. Because the contract follows a standard, these external platforms can programmatically generate tests, enforce validations, orchestrate transformations, and monitor data health without custom integrations.

The contract shifts from static documentation to an executable control layer that seamlessly integrates governance, transformation, and observability. The Data Contract is really the glue that holds the Data Mesh together.

Why traditional data warehousing becomes a monolith

When people hear “monolith”, they often think of bad architecture. But most monolithic data platforms didn’t start that way; they evolved into one.

A traditional enterprise data warehouse typically has:

- One central team responsible for ingestion, modelling, quality, and publishing

- One central architecture with shared pipelines and shared patterns

- Tightly coupled components, where a change in one model can ripple everywhere

- Slow change cycles, because demand always exceeds capacity

- Limited domain context, as modellers are often far removed from the business

- Scaling pain, as more data sources and use cases arrive

This isn’t incompetence; it’s a natural outcome of centralisation and years of unintended consequences. Eventually, the warehouse becomes the bottleneck.

What Data Mesh actually changes (and what it doesn’t)

Data Mesh is often misunderstood as “no more warehouse” or “everyone does their own thing.”

In reality, it’s an organisational shift, not necessarily a technology shift.

At its core, Data Mesh is built on four pillars:

- Domain ownership

- Data as a Product

- Self-serve data platform

- Federated governance

The key difference is that instead of one big system owned by one team, you get many small, connected data products, owned by domains, and linked together through clear contracts.

And this is where data contracts become the quiet hero of the story.

Data contracts: the missing stabiliser

Data contracts borrow a familiar idea from software engineering: API contracts, applied to data.

They were popularised in the Data Mesh community between 2021 and 2023, with contributions from people and projects such as:

- Andrew Jones, who introduced the term data contract widely through blogs, talks and his book, which was published in 2023 [1]

- Chad Sanderson (gable.ai)

- The Open Data Contract Standard, which was launched by the Bitol project

A data contract explicitly defines the agreement between a data producer and a data consumer.

The example: website analytics

Let’s ground this with a concrete scenario.

Imagine an online toy store, PlayNest. The business wants to analyse user behaviour on our website.

There are two main departments that are relevant to this exercise. The first is Customer Experience, which is responsible for the user journey on our website: how the customer feels when they are browsing our products.

Then there is the Marketing domain, which creates campaigns that bring users to our website and, ideally, make them interested in buying our products.

There is a natural overlap between these two departments. The boundaries between domains are often fuzzy.

At the operational level, when we talk about websites, you capture things like:

- Visitors

- Sessions

- Events

- Devices

- Browsers

- Products

A conceptual model for this example could look like this:

From a marketing perspective, however, nobody wants raw events. They want:

- Marketing leads

- Funnel performance

- Campaign effectiveness

- Abandoned carts

- Which types of products people clicked on, for retargeting, etc.

And from a customer experience perspective, they want to know:

- Frustration scores

- Conversion metrics (for example, how many users created wishlists, which signals that they are interested in certain products: a type of conversion from a random visitor to a user)

The centralised (pre-Mesh) approach

I’ll use a Medallion framework to illustrate how this would be built in a centralised lakehouse architecture.

- Bronze: raw, immutable data from tools like Google Analytics

- Silver: cleaned, standardized, source-agnostic models

- Gold: curated, business-aligned datasets (facts, dimensions, marts)

In the Bronze layer, the raw CSV or JSON objects are stored in, for example, an object store like S3 or Azure Blob. The central team is responsible for ingesting the data, making sure the API specifications are followed and the ingestion pipelines are monitored.

In the Silver layer, the central team begins to clean and transform the data. Perhaps the data modelling approach chosen was Data Vault, and thus the data is standardised into specific data types, business objects are identified and certain similar datasets are conformed or loosely coupled.

In the Gold layer, the actual end-user requirements are documented in story boards and the centralised IT teams implement the dimensions and facts required for the different domains’ analytical purposes.

Let’s now reframe this example, moving from a centralised operating model to a decentralised, domain-owned approach.

Website analytics in a Data Mesh

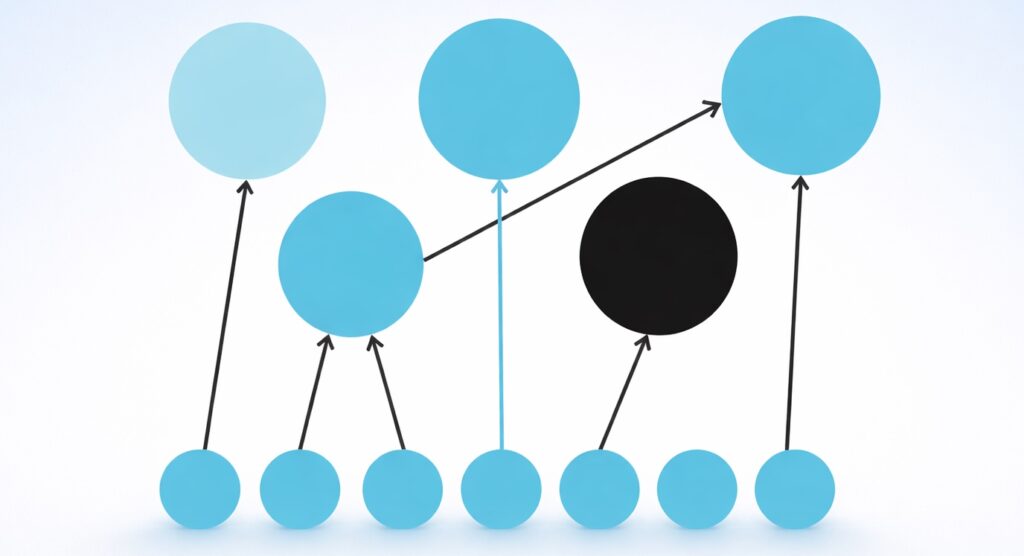

A typical Data Mesh data model could be depicted like this:

A Data Product is owned by a Domain and has a specific type. Data comes in via input ports and goes out via output ports. Each port is governed by a data contract.
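To make that model concrete, here is a minimal Python sketch of the same relationships. The class names, field names and the example port and contract identifiers are all hypothetical, chosen only for illustration; they are not taken from any standard or tool.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class DataContract:
    """Hypothetical, minimal stand-in for a contract document."""
    contract_id: str
    version: str


@dataclass
class Port:
    """An input or output port; every port carries exactly one contract."""
    name: str
    contract: DataContract


@dataclass
class DataProduct:
    name: str
    domain: str          # owning domain, e.g. "Customer Experience"
    product_type: str    # e.g. "foundational" or "consumer"
    input_ports: List[Port] = field(default_factory=list)
    output_ports: List[Port] = field(default_factory=list)

    def contracts(self) -> List[DataContract]:
        """All contracts governing this product's ports, inputs first."""
        return [p.contract for p in self.input_ports + self.output_ports]


# Illustrative instance; port and contract names are made up.
behaviour = DataProduct(
    name="Website User Behaviour",
    domain="Customer Experience",
    product_type="foundational",
    input_ports=[Port("google_analytics_raw", DataContract("ga-raw", "1.0.0"))],
    output_ports=[Port("sessions_normalised", DataContract("wub-sessions", "1.0.0"))],
)

print([c.contract_id for c in behaviour.contracts()])
```

Modelling the contract as an attribute of the port, rather than of the product, mirrors the statement that each port is governed by its own contract.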

As an organisation, if you have chosen to go with Data Mesh, you will constantly have to decide between the following two approaches:

Do you organise your landscape with these re-usable building blocks where logic is consolidated, OR:

Do you let all consumers of the data products decide for themselves how to implement it, with the risk of duplication of logic?

People look at this and tell me it’s obvious. Of course you should choose the first option as it is the better practice, and I agree. Except that in reality the first two questions that will be asked are:

- Who will own the foundational Data Product?

- Who will pay for it?

These are fundamental questions that often hamper the momentum of Data Mesh. You can either overengineer it (having lots of reusable parts, but in so doing hampering autonomy and escalating costs), or create a network of many little data products that don’t speak to each other. We want to avoid both of these extremes.

For the sake of our example, let’s assume that instead of every team ingesting Google Analytics independently, we create a few shared foundational products, for example Website User Behaviour and Products.

These products are owned by a specific domain (in our example, Customer Experience), which is responsible for exposing the data in standard output ports governed by data contracts. The whole idea is that these products should be reusable in the organisation, just like external data sets are reusable through a standardised API pattern. Downstream domains, like Marketing, then build Consumer Data Products on top.

Website User Behaviour Foundational Data Product

- Designed for reuse

- Stable, well-governed

- Often built using Data Vault, 3NF, or similar resilient models

- Optimised for change, not for dashboards

The two sources are treated as input ports to the foundational data product.

The modelling technique used to build the data product is again open to the domain to decide, but the motivation is re-usability. I have therefore often seen a more flexible modelling technique like Data Vault being used in this context.

The output ports are then also designed for re-usability. For example, here you can combine the Data Vault objects into an easier-to-consume format, OR for more technical consumers you can simply expose the raw Data Vault tables. These will simply be logically split into different output ports. You could also decide to publish a separate output to be exposed to LLMs or autonomous agents.

Marketing Lead Conversion Metrics Consumer Data Product

- Designed for specific use cases

- Shaped by the needs of the consuming domain

- Often dimensional or highly aggregated

- Allowed (and expected) to duplicate logic if needed

Here I illustrate how we opt for using other foundational data products as input ports. In the case of the Website User Behaviour product, we opt for using the normalised Snowflake tables (since we want to keep building in Snowflake) and create a Data Product that is ready for our specific consumption needs.

Our main consumers will use it for analytics and dashboard building, so choosing a Dimensional model makes sense. It is optimised for this type of analytical querying within a dashboard.

Zooming into Data Contracts

The Data Contract is really the glue that holds the Data Mesh together. The Contract should not just specify some of the technical expectations but also the legal and quality requirements and anything else that the consumer would be interested in.

The Bitol Open Data Contract Standard [2] set out to address some of the gaps that existed with the vendor-specific contracts that were available in the market. Its aim is a shared, open standard for describing data contracts in a way that is human-readable, machine-readable, and tool-agnostic.

Why so much focus on a shared standard?

- Shared language across domains

When every team defines contracts differently, federation becomes impossible.

A standard creates a common vocabulary for producers, consumers, and platform teams.

- Tool interoperability

An open standard allows data quality tools, orchestration frameworks, metadata platforms and CI/CD pipelines to all consume the same contract definition, instead of each requiring its own configuration format.

- Contracts as living artifacts

Contracts should not be static documents. With a standard, they can be versioned, validated automatically, tested in pipelines and compared over time. This moves contracts from “documentation” to enforceable agreements.

- Avoiding vendor lock-in

Many vendors now support data contracts, which is great, but without an open standard, switching tools becomes expensive.
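As a rough illustration of what “compared over time” can mean in practice, the sketch below diffs two versions of a contract’s schema and reports changes that could break a consumer. The snapshot format (field name mapped to declared type) and the rule that only removals and type changes count as breaking are simplifying assumptions, not something the standard prescribes.

```python
from typing import Dict, List

# Hypothetical schema snapshot: field name -> declared type.
SchemaSnapshot = Dict[str, str]


def breaking_changes(old: SchemaSnapshot, new: SchemaSnapshot) -> List[str]:
    """List changes that could break an existing consumer.

    Removed fields and changed types count as breaking; added fields do not.
    """
    problems = []
    for name, old_type in sorted(old.items()):
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name] != old_type:
            problems.append(f"type changed: {name} ({old_type} -> {new[name]})")
    return problems


v1 = {"session_id": "string", "visitor_id": "string", "started_at": "timestamp"}
v2 = {"session_id": "string", "started_at": "date", "device": "string"}

for problem in breaking_changes(v1, v2):
    print(problem)
```

A check like this could run in a CI pipeline whenever a contract file changes, failing the build unless the contract’s major version is bumped as well.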

The ODCS is a YAML template that includes the following key components:

- Fundamentals – Purpose, ownership, domain, and intended consumers

- Schema – Fields, types, constraints, and evolution rules

- Data quality expectations – Freshness, completeness, validity, thresholds

- Service-level agreements (SLAs) – Update frequency, availability, latency

- Support and communication channels – Who to contact when things break

- Teams and roles – Producer, owner, steward responsibilities

- Access and infrastructure – How and where the data is exposed (tables, APIs, files)

- Custom domain rules – Business logic or semantics that consumers must understand

Not every contract needs every section, but the structure matters, because it makes expectations explicit and repeatable.
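The sketch below shows how such a sectioned contract might look once loaded into memory, using a plain Python dictionary rather than YAML so that it runs without extra libraries. The section keys and field names are illustrative labels for the components listed above; they are assumptions and not the actual key names defined by ODCS.

```python
from typing import Any, Dict, List

# Section names are illustrative labels for the list above, not ODCS keys.
SECTIONS = [
    "fundamentals", "schema", "quality", "sla",
    "support", "team", "infrastructure", "custom_rules",
]

# Hypothetical contract for one output port of a foundational data product.
contract: Dict[str, Any] = {
    "fundamentals": {
        "name": "website_user_behaviour.sessions",
        "domain": "Customer Experience",
        "purpose": "Reusable session-level view of website behaviour",
    },
    "schema": [
        {"name": "session_id", "type": "string", "required": True, "unique": True},
        {"name": "visitor_id", "type": "string", "required": True},
        {"name": "started_at", "type": "timestamp", "required": True},
    ],
    "quality": {"max_null_fraction": {"visitor_id": 0.0}},
    "sla": {"update_frequency": "daily"},
    "support": {"channel": "#cx-data-products"},
}


def missing_sections(doc: Dict[str, Any]) -> List[str]:
    """Return the sections a contract leaves out, in the order listed above."""
    return [s for s in SECTIONS if s not in doc]


print(missing_sections(contract))
```

Reporting omitted sections instead of rejecting the contract reflects the point that not every contract needs every section, while still making the gaps visible.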

Data Contracts enabling interoperability

In our example we have a data contract on the input port (Foundational data product) as well as the output port (Consumer data product). You want to enforce these expectations as seamlessly as possible, just as you would with any contract between two parties. Since the contract follows a standardised, machine-readable format, you can now integrate with third-party ETL and data quality tools to enforce these expectations.

Platforms such as dbt, SQLMesh, Coalesce, Great Expectations, Soda, and Monte Carlo can programmatically generate tests, enforce validations, orchestrate transformations, and monitor data health without custom integrations. Some of these tools have already announced support for the Open Data Contract Standard.
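To give a feel for what “programmatically generate tests” means, here is a deliberately small, tool-independent sketch in plain Python. It derives not-null and uniqueness checks from a schema definition and runs them against in-memory rows. The `required` and `unique` keys are hypothetical, and none of the platforms named above are claimed to work exactly this way.

```python
from typing import Any, Callable, Dict, List, Tuple

Row = Dict[str, Any]
Check = Tuple[str, Callable[[List[Row]], bool]]


def build_checks(schema: List[Dict[str, Any]]) -> List[Check]:
    """Derive named checks from field definitions (hypothetical keys)."""
    checks: List[Check] = []
    for col in schema:
        name = col["name"]
        if col.get("required"):
            checks.append((
                f"{name} is not null",
                # n=name binds the current column name to this lambda.
                lambda rows, n=name: all(r.get(n) is not None for r in rows),
            ))
        if col.get("unique"):
            checks.append((
                f"{name} is unique",
                lambda rows, n=name: len({r.get(n) for r in rows}) == len(rows),
            ))
    return checks


def run_checks(checks: List[Check], rows: List[Row]) -> Dict[str, bool]:
    """Run every check and map its label to a pass/fail result."""
    return {label: fn(rows) for label, fn in checks}


schema = [
    {"name": "session_id", "required": True, "unique": True},
    {"name": "visitor_id", "required": True},
]
rows = [
    {"session_id": "s1", "visitor_id": "v1"},
    {"session_id": "s1", "visitor_id": None},  # duplicate id, missing visitor
]

results = run_checks(build_checks(schema), rows)
for label, passed in results.items():
    print(f"{'PASS' if passed else 'FAIL'}: {label}")
```

The point of the design is that the checks are never written by hand: changing the contract changes the tests, which is what keeps producer and consumer expectations aligned.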

LLMs, MCP servers and Data Contracts

By using standardised metadata, including the data contracts, organisations can safely employ LLMs and other agentic AI applications to interact with their crown jewels, the data.

So in our example, let’s assume Peter from PlayNest wants to check what the most visited products are:

This is enough context for the LLM to use the metadata to determine which data products are relevant, but also to see that the user does not have access to the data. It can now determine who to ask and how to request access.

As soon as entry is granted:

The LLM can interpret the metadata and create the query that matches the user request.
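The two steps of that interaction can be sketched as a simple guardrail function: given contract metadata and the user’s roles, the agent either learns whom to ask for access or is allowed to build a query. Everything in the catalogue entry (table name, owner address, role names, the `product_id` column) is invented for illustration, and a real agent would use an LLM and an MCP server rather than a hard-coded SQL template.

```python
from typing import Any, Dict, Set

# Hypothetical catalogue entry assembled from a data contract's metadata.
CATALOGUE: Dict[str, Dict[str, Any]] = {
    "product_page_views": {
        "description": "page views per product",
        "table": "cx.product_page_views",
        "owner": "customer-experience-data@playnest.example",
        "allowed_roles": {"cx_analyst"},
    }
}


def plan_request(product: str, user_roles: Set[str], limit: int = 10) -> Dict[str, str]:
    """Decide what an agent may do: request access first, or build a query."""
    entry = CATALOGUE[product]
    if not (user_roles & entry["allowed_roles"]):
        # No overlapping role: the agent may only point to the owner.
        return {"action": "request_access", "contact": entry["owner"]}
    sql = (
        f"SELECT product_id, COUNT(*) AS views FROM {entry['table']} "
        f"GROUP BY product_id ORDER BY views DESC LIMIT {int(limit)}"
    )
    return {"action": "run_query", "sql": sql}


# Before access is granted, then after the role has been added.
before = plan_request("product_page_views", {"marketing_analyst"})
after = plan_request("product_page_views", {"marketing_analyst", "cx_analyst"})
print(before["action"], "->", after["action"])
```

Keeping the access decision in ordinary code driven by metadata, rather than in the model’s prompt, is one way to make the guardrail enforceable.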

Making sure autonomous agents and LLMs have strict guardrails under which to operate will allow the business to scale its AI use cases.

Several vendors are rolling out MCP servers to provide a well-structured approach to exposing your data to autonomous agents. Forcing the interfacing to work through metadata standards and protocols (such as these data contracts) will allow safer and more scalable roll-outs of these use cases.

The MCP server provides the toolset and the guardrails within which to operate. The metadata, including the data contracts, provides the policies and enforceable rules under which any agent may operate.

At the moment there is a tsunami of AI use cases being requested by the business. Most of them are currently still not adding value. Now we have a prime opportunity to invest in setting up the right guardrails for these projects to operate in. There will come a critical-mass moment when the value arrives, but first we need the building blocks.

I’ll go as far as to say this: a Data Mesh without contracts is simply decentralised chaos. Without clear, enforceable agreements, autonomy becomes silos, shadow IT multiplies, and inconsistency scales faster than value. At that point, you haven’t built a mesh, you’ve distributed dysfunction. You might as well revert to centralisation.

Contracts replace assumption with accountability. Build small, connect smartly, govern clearly, and don’t mesh around.

[1] Jones, A. (2023). Driving data quality with data contracts: A comprehensive guide to building reliable, trusted, and effective data platforms. Packt Publishing.

[2] Bitol. (n.d.). Open Data Contract Standard (v3.1.0). Retrieved February 18, 2026, from https://bitol-io.github.io/open-data-contract-standard/v3.1.0/

All images in this article were created by the author.