Artificial Intelligence (AI) continues to transform industries with its speed, relevance, and accuracy. However, despite impressive capabilities, AI systems often face a critical challenge known as the AI reliability gap: the discrepancy between AI’s theoretical potential and its real-world performance. This gap manifests in unpredictable behavior, biased decisions, and errors that can have significant consequences, from misinformation in customer service to flawed medical diagnoses.

To address these challenges, Human-in-the-Loop (HITL) systems have emerged as a vital approach. HITL integrates human intuition, oversight, and expertise into AI evaluation and training, ensuring that AI models are reliable, fair, and aligned with real-world complexities. This article explores the design of effective HITL systems, their importance in closing the AI reliability gap, and best practices informed by current trends and success stories.

Understanding the AI Reliability Gap and the Role of Humans

AI systems, despite their advanced algorithms, are not infallible. Real-world examples:

| Incident | Error Type | Potential HITL Intervention |
|---|---|---|
| Canadian airline’s AI chatbot gave costly misinformation | Misinformation / Incorrect Response | Human review of chatbot responses during critical queries could catch and correct errors before they affect customers. |
| AI recruiting tool discriminated based on age | Bias / Discrimination | Regular audits and human oversight in screening decisions can identify and address biased patterns in AI recommendations. |
| ChatGPT hallucinated fictitious court cases | Fabrication / Hallucination | Human experts verifying AI-generated legal content can prevent the use of false information in critical documents. |
| COVID-19 prediction models failed to detect the virus accurately | Prediction Error / Inaccuracy | Continuous human monitoring and validation of model outputs can help recalibrate predictions and flag anomalies early. |

These incidents underscore that AI alone cannot guarantee flawless outcomes. The reliability gap arises because AI models often lack transparency, contextual understanding, and the ability to handle edge cases or ethical dilemmas without human intervention.

Humans bring critical judgment, domain knowledge, and ethical reasoning that machines currently cannot fully replicate. Incorporating human feedback throughout the AI lifecycle, from training data annotation to real-time evaluation, helps mitigate errors, reduce bias, and improve AI trustworthiness.

What Is Human-in-the-Loop (HITL) in AI?

Human-in-the-Loop refers to systems where human input is actively integrated into AI processes to guide, correct, and enhance model behavior. HITL can involve:

- Validating and refining AI-generated predictions.

- Reviewing model decisions for fairness and bias.

- Handling ambiguous or complex scenarios.

- Providing qualitative user feedback to improve usability.

This creates a continuous feedback loop where AI learns from human expertise, resulting in models that better reflect real-world needs and ethical standards.

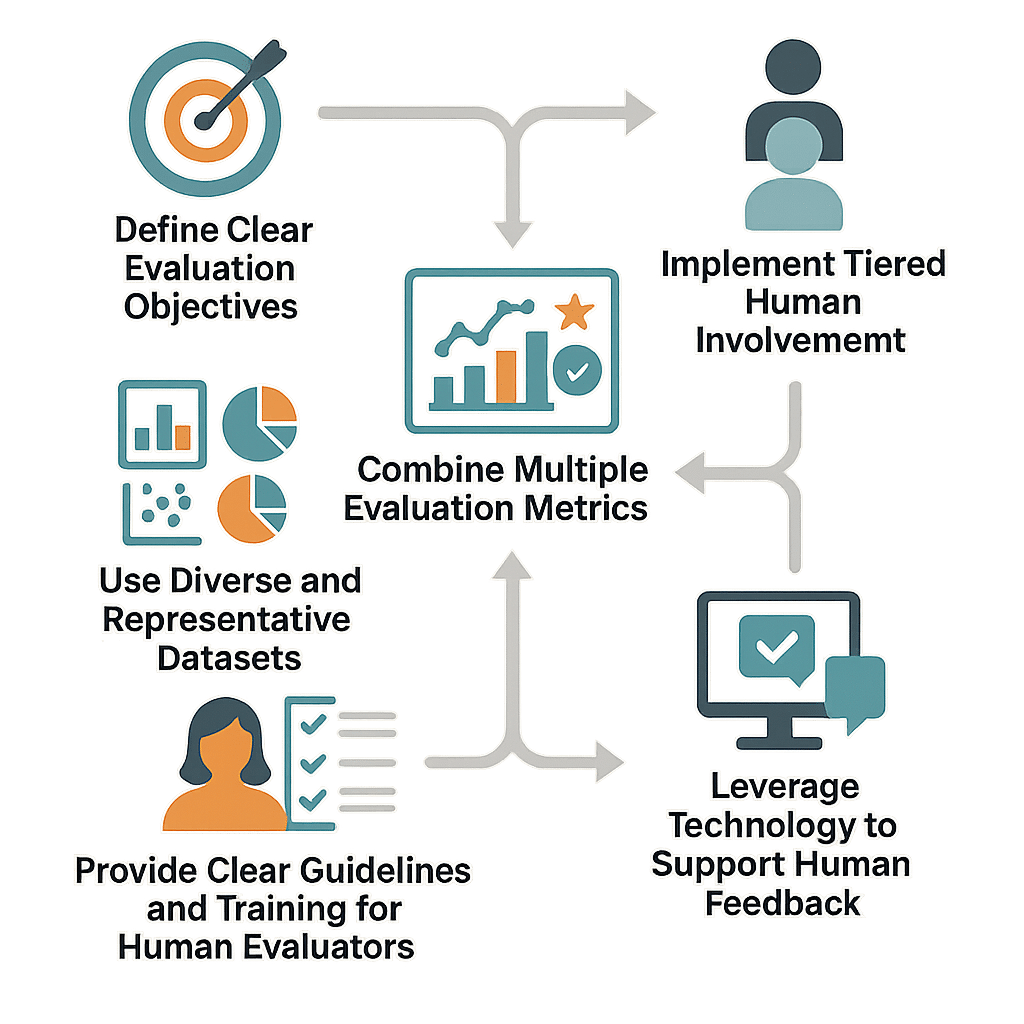

Key Strategies for Designing Effective HITL Systems

Designing a robust HITL system requires balancing automation with human oversight to maximize efficiency without sacrificing quality.

Define Clear Evaluation Objectives

Set specific goals aligned with business needs, ethical considerations, and AI use cases. Objectives may focus on accuracy, fairness, robustness, or compliance.

Use Diverse and Representative Datasets

Ensure training and evaluation datasets reflect real-world diversity, including demographic variety and edge cases, to prevent bias and improve generalization.
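
One way to make this check concrete is to compare each group's share of the dataset against a reference share. The sketch below is illustrative only: the function name `underrepresented_groups`, the group labels, the reference proportions, and the 0.8 ratio cutoff are all assumptions, not values from any standard.

```python
from collections import Counter

def underrepresented_groups(labels, reference_shares, min_ratio=0.8):
    """Return groups whose observed share falls below min_ratio * reference share.

    labels: one group label per dataset record (hypothetical attribute).
    reference_shares: assumed target proportion for each group.
    """
    counts = Counter(labels)
    total = len(labels)
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        if observed < min_ratio * expected:
            flagged[group] = round(observed, 3)
    return flagged

# Hypothetical dataset of 100 records and assumed reference proportions.
sample = ["light"] * 70 + ["medium"] * 25 + ["dark"] * 5
reference = {"light": 0.5, "medium": 0.3, "dark": 0.2}
flagged = underrepresented_groups(sample, reference)
```

Groups returned in `flagged` are candidates for targeted data collection or extra human annotation before the next training run.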

Combine Multiple Evaluation Metrics

Go beyond accuracy by incorporating fairness indicators, robustness tests, and interpretability assessments to capture a holistic view of model performance.
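
As a minimal sketch of reporting more than one metric, the example below computes accuracy alongside a simple fairness indicator, the demographic parity gap (the largest difference in positive-prediction rate between groups). The data and the group names `"a"` and `"b"` are made up for illustration, and this is only one of many possible fairness measures.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

def demographic_parity_gap(y_pred, groups):
    """Largest difference in positive-prediction rate between any two groups."""
    rates = {}
    for g in set(groups):
        preds = [p for p, grp in zip(y_pred, groups) if grp == g]
        rates[g] = sum(preds) / len(preds)
    return max(rates.values()) - min(rates.values())

# Illustrative binary labels (1 = positive outcome) for two hypothetical groups.
y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 1, 1, 0, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

report = {
    "accuracy": accuracy(y_true, y_pred),
    "parity_gap": demographic_parity_gap(y_pred, groups),
}
```

Here the model scores well on accuracy while never predicting a positive outcome for group `"b"`, which is the kind of pattern a single metric would hide and a human reviewer should examine.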

Implement Tiered Human Involvement

Automate routine tasks while escalating complex or critical decisions to human evaluators. This reduces fatigue and optimizes resource allocation.
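
A tiered policy like this can be sketched as a small routing function. The tier names, the `Prediction` fields, and both thresholds below are hypothetical; real thresholds would need tuning against the cost of errors in a given domain.

```python
from dataclasses import dataclass

@dataclass
class Prediction:
    item_id: str
    label: str
    confidence: float          # model's confidence in its label, 0.0 to 1.0
    high_stakes: bool = False  # e.g. a medical or legal decision

def route(pred, auto_threshold=0.9, review_threshold=0.6):
    """Pick a handling tier for one prediction (thresholds are assumptions)."""
    # High-stakes or low-confidence items always go to a human expert.
    if pred.high_stakes or pred.confidence < review_threshold:
        return "expert_review"
    # Mid-confidence items get a lighter-weight human spot check.
    if pred.confidence < auto_threshold:
        return "spot_check"
    # Routine, high-confidence items are handled automatically.
    return "auto_approve"

tiers = [
    route(Prediction("t1", "refund_eligible", 0.95)),
    route(Prediction("t2", "refund_eligible", 0.75)),
    route(Prediction("t3", "refund_eligible", 0.40)),
    route(Prediction("t4", "diagnosis_benign", 0.99, high_stakes=True)),
]
```

Checking `high_stakes` before confidence means a confident model can never bypass human review on critical decisions.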

Provide Clear Guidelines and Training for Human Evaluators

Equip human reviewers with standardized protocols to make sure constant, high-quality suggestions.

Leverage Technology to Support Human Feedback

Use tools like annotation platforms, active learning, and predictive models to identify when human input is most valuable.
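
Uncertainty sampling is one common active learning strategy: the items the model is least sure about are sent to annotators first. The sketch below ranks items by the entropy of their predicted class probabilities. The item IDs, probabilities, and annotation budget are invented for illustration.

```python
import math

def entropy(probs):
    """Shannon entropy of a probability distribution (higher = more uncertain)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_annotation(predictions, budget):
    """Return the `budget` item IDs with the most uncertain predictions.

    predictions: dict mapping item ID -> list of class probabilities.
    """
    ranked = sorted(predictions, key=lambda k: entropy(predictions[k]), reverse=True)
    return ranked[:budget]

# Hypothetical unlabeled pool with model-predicted class probabilities.
pool = {
    "doc1": [0.98, 0.02],
    "doc2": [0.55, 0.45],
    "doc3": [0.70, 0.30],
    "doc4": [0.50, 0.50],
}
to_label = select_for_annotation(pool, budget=2)
```

With a fixed annotation budget, this spends human attention on near-coin-flip cases such as `doc4` instead of items the model already handles confidently.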

Challenges and Solutions in HITL System Design

- Scalability: Human review can be resource-intensive. Solution: Prioritize tasks for human review using confidence thresholds and automate simpler cases.

- Evaluator Fatigue: Continuous manual review may degrade quality. Solution: Rotate tasks and use AI to flag only uncertain cases.

- Maintaining Feedback Quality: Inconsistent human input can harm model training. Solution: Standardize evaluation criteria and provide ongoing training.

- Bias in Human Feedback: Humans can introduce their own biases. Solution: Use diverse evaluator pools and cross-validation.
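
Feedback consistency can be measured instead of assumed. One widely used statistic is Cohen's kappa, which scores agreement between two evaluators after correcting for agreement expected by chance. The sketch below is a plain-Python version with made-up labels from two hypothetical reviewers. Low values suggest the guidelines or training need attention.

```python
from collections import Counter

def cohens_kappa(rater_a, rater_b):
    """Chance-corrected agreement between two raters labeling the same items."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(rater_a) | set(rater_b)
    # Agreement expected if both raters labeled at random with their own label rates.
    expected = sum(freq_a[c] * freq_b[c] for c in categories) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used a single identical label throughout
    return (observed - expected) / (1 - expected)

# Illustrative judgments from two hypothetical reviewers on the same eight outputs.
reviewer_1 = ["ok", "ok", "bad", "ok", "bad", "bad", "ok", "ok"]
reviewer_2 = ["ok", "bad", "bad", "ok", "bad", "ok", "ok", "ok"]
kappa = cohens_kappa(reviewer_1, reviewer_2)
```

In this example the reviewers agree on six of eight items, yet kappa is well below that raw 75% figure because much of the agreement could have occurred by chance.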

Success Stories Demonstrating HITL Impact

Enhancing Language Translation with Linguist Feedback

A tech company improved AI translation accuracy for less common languages by integrating native speaker feedback, capturing nuances and cultural context missed by AI alone.

Enhancing E-commerce Recommendations through User Input

An e-commerce platform incorporated direct customer feedback on product recommendations, enabling data analysts to refine algorithms and boost sales and engagement.

Advancing Medical Diagnostics with Dermatologist-Patient Loops

A healthcare startup used feedback from diverse dermatologists and patients to improve AI skin condition diagnosis across all skin tones, enhancing inclusivity and accuracy.

Streamlining Legal Document Analysis with Expert Review

Legal experts flagged AI misinterpretations in document analysis, helping refine the model’s understanding of complex legal language and improving analysis accuracy.

Latest Trends in HITL and AI Evaluation

- Multimodal AI Models: Modern AI systems now process text, images, and audio, requiring HITL systems to adapt to diverse data types.

- Transparency and Explainability: Increasing demand for AI systems to explain decisions fosters trust and accountability, a key focus in HITL design.

- Real-time Human Feedback Integration: Emerging platforms support seamless human input during AI operation, enabling dynamic correction and learning.

- AI Superagency: The future workplace envisions AI augmenting human decision-making rather than replacing it, emphasizing collaborative HITL frameworks.

- Continuous Monitoring and Model Drift Detection: HITL systems are essential for ongoing evaluation to detect and correct model degradation over time.
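
For the drift-detection point, one simple approach is to have humans audit a sample of production outputs and track rolling agreement against a baseline. The `DriftMonitor` class, window size, and tolerance below are hypothetical choices for illustration. Production systems often add statistical tests on input distributions as well.

```python
from collections import deque

class DriftMonitor:
    """Flags drift when rolling agreement with human audits drops below baseline."""

    def __init__(self, baseline_accuracy, window=50, tolerance=0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.results = deque(maxlen=window)  # keeps only the most recent audits

    def record(self, model_label, human_label):
        """Store whether the model agreed with the human auditor on one item."""
        self.results.append(model_label == human_label)

    def drifted(self):
        # Wait for a full window so a few early disagreements do not raise alarms.
        if len(self.results) < self.results.maxlen:
            return False
        rolling = sum(self.results) / len(self.results)
        return rolling < self.baseline - self.tolerance

# Illustrative run: ten agreeing audits, then three disagreements.
monitor = DriftMonitor(baseline_accuracy=0.9, window=10)
for _ in range(10):
    monitor.record("approve", "approve")
before = monitor.drifted()
for _ in range(3):
    monitor.record("approve", "reject")
after = monitor.drifted()
```

When `drifted()` returns `True`, the natural next step is to escalate to human review of recent outputs and consider retraining on the newly audited examples.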

Conclusion

The AI reliability gap highlights the indispensable role of humans in AI development and deployment. Effective Human-in-the-Loop systems create a symbiotic partnership where human intelligence complements artificial intelligence, resulting in more reliable, fair, and ethical AI solutions.