AI detection was supposed to simplify academic integrity.

Instead, it introduced a new problem: false positives.

Teachers are increasingly pressured to rely on AI detectors when evaluating student work. But as I’ve written before, these tools are far from reliable enough to act as judges, especially when false positives can lead to serious academic consequences.

That doesn’t mean detectors have no place in education. It means their role needs to be reframed.

For teachers, the practical goal isn’t perfect detection. It’s screening: identifying writing that clearly resembles AI output, flagging it for closer review, and then relying on human judgment to make the final call.

This list is accuracy-first and deliberately narrow. Every detector here has been tested in prior articles, and only true-positive performance is considered. No hype, no theoretical claims, just what actually worked.
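As a rough illustration of what a true-positive figure measures, here is a minimal sketch. It assumes every sample in the test set is known to be AI-written and that each detector result is recorded as a boolean; the function name and the sample data are hypothetical and are not the harness or the figures behind the scores in this list.

```python
def true_positive_rate(flagged):
    """Share of known AI-written samples that the detector flagged as AI."""
    if not flagged:
        raise ValueError("need at least one sample")
    return sum(flagged) / len(flagged)


# Hypothetical run: 8 AI-written samples, 6 of them flagged by the detector.
results = [True, True, True, False, True, True, False, True]
rate = true_positive_rate(results)
print(f"{rate:.2%}")  # 75.00%
```

A number like this says nothing about how often human writing gets flagged, which is why it can support screening but not verdicts.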

How this list should be used

Before diving into tools, it’s worth stating this clearly:

No AI detector should ever be used as sole proof of misconduct.

Detectors are best used to answer one question: “Is this writing sufficiently AI-like that it deserves a closer look?”

That closer look should involve:

- comparing against the student’s prior work

- checking drafting history

- asking follow-up questions

- or using in-class writing samples as reference points
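The screening idea above can be sketched in a few lines. This is only an illustration: the `screen` function, the 0.0 to 1.0 score scale, and the 0.9 threshold are assumptions for the example, not settings from any detector in this list.

```python
from dataclasses import dataclass

# Follow-up steps from the list above; a human works through these.
REVIEW_STEPS = (
    "compare against the student's prior work",
    "check drafting history",
    "ask follow-up questions",
    "use in-class writing samples as reference points",
)


@dataclass
class ScreeningResult:
    needs_review: bool
    next_steps: tuple


def screen(ai_score: float, threshold: float = 0.9) -> ScreeningResult:
    """Turn a detector score (assumed 0.0-1.0) into a review flag, never a verdict."""
    if not 0.0 <= ai_score <= 1.0:
        raise ValueError("ai_score must be between 0.0 and 1.0")
    if ai_score >= threshold:
        return ScreeningResult(True, REVIEW_STEPS)
    return ScreeningResult(False, ())


result = screen(0.97)
print(result.needs_review)  # True
```

The result deliberately has no field for guilt or misconduct. The only output is whether a person should look closer, and what that look should include.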

With that framing in place, accuracy still matters, especially when time is limited.

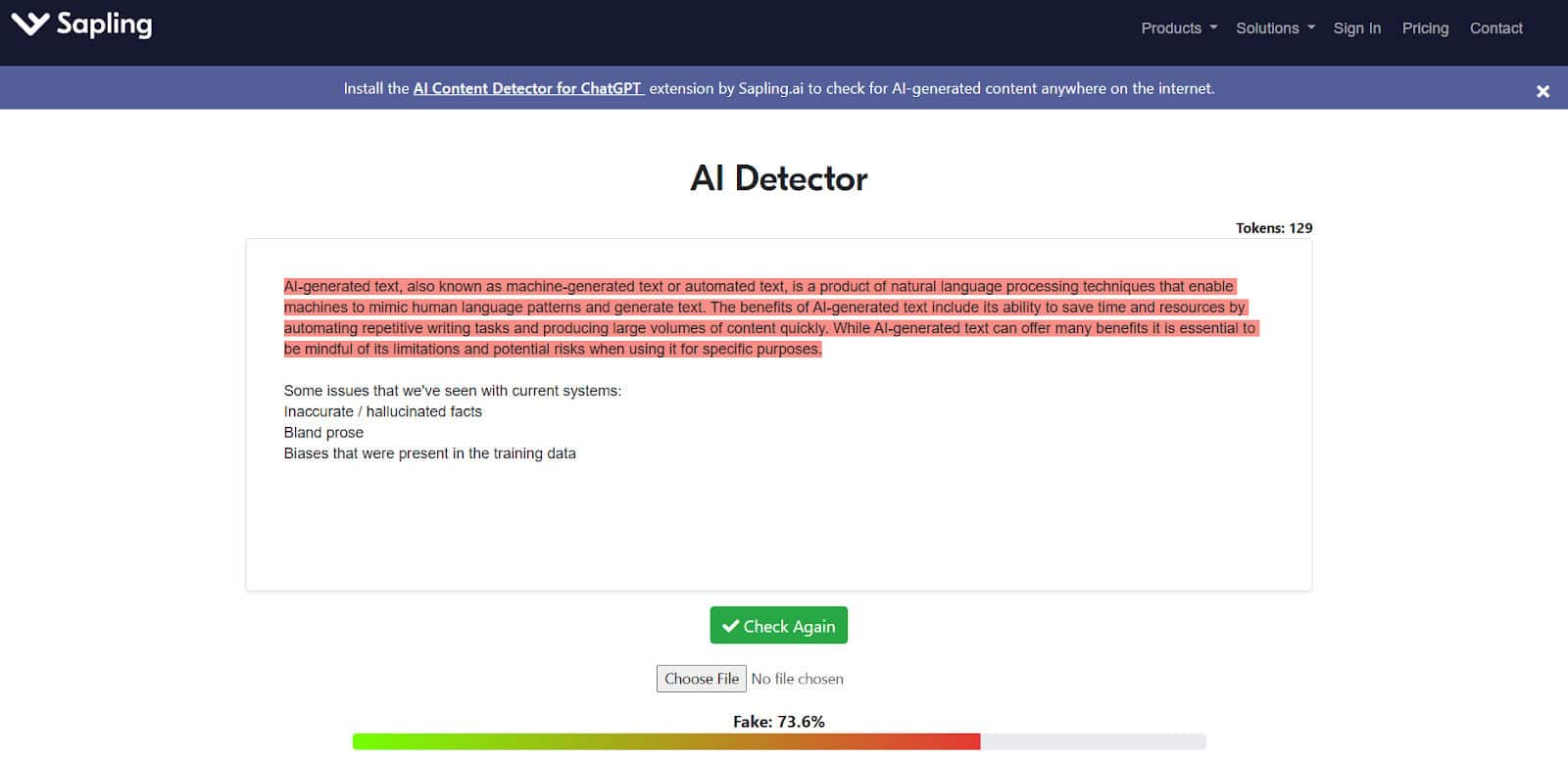

Sapling (Top Recommendation)

Sapling is the most consistent detector I’ve tested for identifying plain, unedited AI writing.

In controlled testing, Sapling correctly identified 100% of baseline ChatGPT outputs, with an overall true-positive accuracy score of 67.92% across broader samples that include Undetectable AI output (an AI humanizer).

What makes Sapling especially suitable for classrooms is restraint. It doesn’t try to over-explain results or inflate confidence. You get a clear signal, not a theatrical verdict.

That matters. Teachers don’t need dramatic percentages; they need predictability. Sapling’s behavior is consistent enough that, when it flags something strongly, it’s usually worth a second look.

Sapling is also largely free, which removes a major barrier for institutional or personal use.

If you only use one detector, this is the safest default.

Winston AI

Winston AI is a more feature-heavy detector, and its accuracy reflects that ambition.

In testing, Winston successfully detected 100% of simple AI-generated text, performing very well on unmodified LLM outputs, but only 50% of Undetectable AI outputs.

Where Winston becomes less predictable is with mixed or lightly edited content: not because it fails entirely, but because its confidence can fluctuate significantly depending on structure and length.

For teachers, Winston works best as a secondary validator, especially when documentation or reporting is required. It’s not free (which is why it isn’t as strong a recommendation as Sapling), but it’s robust, and it performs strongly on obvious AI content.

Copyleaks

Copyleaks is often positioned as an institutional tool, and its testing results justify that reputation, with caveats.

In prior testing, Copyleaks achieved a 78.27% true-positive accuracy score.

Its strength lies in consistency across environments, especially when paired with plagiarism detection. However, its interface and licensing model make it better suited to school-wide adoption than to individual teacher use.

Copyleaks is not fully free, but many institutions already have access. It is a good option only if you have extra money to spend or your school can provide access.

TruthScan

In targeted testing focused on Gemini outputs, TruthScan achieved a 93% true-positive accuracy score, outperforming many general-purpose detectors in that scenario.

For classrooms encountering newer LLM writing styles that don’t necessarily resemble classic ChatGPT output, TruthScan can be a valuable addition. This is especially true since TruthScan is entirely free and also works with AI image detection, making it a very nice platform overall.

Other Detectors to Consider

In addition to the tools covered above, I also tested a broader set of detectors in the past. That article examined over a dozen detectors across a range of models and writing types, and while not all of them make the main recommendations here, a few are still worth knowing about.

Here are other detectors that earned honorable mentions or that you might consider for supplementary checks:

- GPTZero: 65.25% true-positive accuracy in the final tally. It’s not a top performer in that dataset, but it’s still a widely used classroom cross-check, best treated as a secondary signal, not a deciding factor.

- Originality.ai: 68.83% true-positive accuracy in the final tally. Useful if you want a stricter detector with a publishing-style workflow, but its core detection performance lands mid-pack here.

- Content at Scale (now BrandWell): 70.83% true-positive accuracy in the final tally. It performed better than the weakest tools, but still doesn’t beat the top classroom-safe defaults.

My Final Thoughts

If accuracy and accessibility are your main concerns, Sapling remains the best all-around choice. It’s largely free, consistent, and strict enough to catch obvious AI writing without encouraging overconfidence.

For higher-stakes situations, pairing Sapling with GPTZero or Winston AI provides a more defensible, multi-signal approach.
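One way to picture a multi-signal approach is to escalate only when two detectors agree. A minimal sketch, assuming you have already collected a 0.0 to 1.0 score from each tool yourself; the function, the dictionary keys, and the 0.9 threshold are hypothetical, and no real detector API is called here.

```python
def detectors_agree(scores: dict, threshold: float = 0.9, required: int = 2) -> bool:
    """Return True when at least `required` detectors score at or above `threshold`."""
    strong_flags = [name for name, score in scores.items() if score >= threshold]
    return len(strong_flags) >= required


# Hypothetical scores entered by hand from two tools.
print(detectors_agree({"sapling": 0.98, "winston": 0.95}))  # True
print(detectors_agree({"sapling": 0.98, "winston": 0.40}))  # False
```

Even when both tools agree, the result only justifies a closer look; it is not evidence on its own.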

And whatever the tool, remember this:

AI detectors should guide attention, not determine guilt. Used carefully, they can help teachers navigate a difficult transition. Used carelessly, they risk undermining trust, exactly the outcome they were meant to prevent.