Introduction

Since their introduction in the period 2017–2019, physics-informed neural networks (PINNs) have been an extremely popular area of research in the scientific machine learning (SciML) community [1,2]. PINNs are used to solve ordinary and partial differential equations (PDEs) by representing the unknown solution field with a neural network and finding the weights and biases (parameters) of the network by minimizing a loss function based on the governing differential equation. For example, the original PINNs approach penalizes the sum of squared pointwise residuals of the governing PDE, whereas the Deep Ritz method minimizes an “energy” functional whose minimum enforces the governing equation [3]. Another alternative is to discretize the solution with a neural network, then construct the weak form of the governing equation using adversarial networks [4] or polynomial test functions [5,6]. Regardless of the choice of physics loss, neural network discretizations have been successfully used to analyze a large number of systems governed by PDEs. From the Navier-Stokes equations [7] to conjugate heat transfer [8] and elasticity [9], PINNs and their variants have proven themselves a worthy addition to the computational scientist’s toolkit.

As is the case with all machine learning problems, an important ingredient in obtaining robust and accurate solutions with PINNs is hyperparameter tuning. The analyst has much freedom in constructing a solution method: as discussed above, the choice of physics loss function is not unique, nor is the optimizer, the method of boundary condition enforcement, or the neural network architecture. For example, while ADAM has historically been the go-to optimizer for machine learning problems, there has been a surge of interest in second-order Newton-type methods for physics-informed problems [10,11]. Other studies have compared methods for enforcing boundary conditions on the neural network discretization [12]. Best practices for the PINNs architecture have primarily been investigated through the choice of activation functions. To combat the spectral bias of neural networks [13], sinusoidal activation functions have been used to better represent high-frequency solution fields [14,15]. In [16], a number of standard activation functions were compared on incompressible fluid flow problems. Activation functions with partially learnable features were shown to improve solution accuracy in [17]. While most PINNs rely on multilayer perceptron (MLP) networks, convolutional networks have been investigated in [18] and recurrent networks in [19].

The studies referenced above are far from an exhaustive list of works investigating the choice of hyperparameters for physics-informed training. Nonetheless, these studies demonstrate that the loss function, optimizer, activation function, and basic class of network architecture (MLP, convolutional, recurrent, etc.) have all received attention in the literature as interesting and important components of the PINN solution framework. One hyperparameter that has seen comparatively little scrutiny is the size of the neural network discretizing the solution field. In other words, to the best of our knowledge, there are no published works that ask the following question: how many parameters should the physics-informed network contain? While this question is in some sense obvious, the community’s lack of interest in it is not surprising, since there is no price to pay in solution accuracy for an overparameterized network. In fact, overparameterized networks can provide beneficial regularization of the solution field, as seen in the phenomenon of double descent [20]. Moreover, in the context of data-driven classification problems, overparameterized networks have been shown to lead to smoother loss landscapes [21]. Because solution accuracy provides no incentive to drive the size of the network down, and because the optimization problem may actually favor overparameterization, many authors use very large networks to represent PDE solutions.

While the accuracy of the solution only stands to gain from increasing the parameter count, the computational cost of the solution does scale with the network size. In this study, we take three examples from the PINNs literature and show that networks with orders of magnitude fewer parameters are capable of satisfactorily reproducing the results of the larger networks. The conclusion from these three examples is that, in the case of low-frequency solution fields, small networks can obtain accurate solutions at reduced computational cost. We then present a counterexample, in which regression to a complex oscillatory function consistently benefits from increasing the network size. Thus, our recommendation is as follows: the number of parameters in a PINN should be as few as possible, but no fewer.

Examples

The first three examples are inspired by problems taken from the PINNs literature. In these works, large networks are used to obtain the PDE solution, where the size of the network is measured by its number of parameters. While different network architectures may perform differently at the same parameter count, we use this metric as a proxy for network complexity, independent of the architecture. In our examples, we incrementally decrease the parameter count of a multilayer perceptron network until the error with respect to a reference solution begins to increase. This point represents a lower limit on the network size for the particular problem, and we compare the number of parameters at this point to the number of parameters used in the original paper. In each case, we find that the networks from the literature are overparameterized by at least an order of magnitude. In the fourth example, we solve a regression problem to show how small networks can fail to represent oscillatory fields, which serves as a caveat to our findings.
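The size sweep described above amounts to a simple search over decreasing widths. The sketch below is a hypothetical helper, not the authors’ code: `error_vs_reference` stands in for training a network of width \(M\) and measuring its discrepancy from the large-network reference, and `tol` is an assumed, user-chosen threshold for “the error begins to increase”.

```python
from typing import Callable, Iterable, Optional


def smallest_adequate_width(
    widths: Iterable[int],
    error_vs_reference: Callable[[int], float],
    tol: float,
) -> Optional[int]:
    """Shrink the network until its error against the reference exceeds `tol`.

    Hypothetical helper: `error_vs_reference(m)` is assumed to train a network
    of width m and return its discrepancy from the large-network reference.
    Returns the smallest width that still meets the tolerance, or None if even
    the largest width fails.
    """
    adequate = None
    for m in sorted(widths, reverse=True):  # largest network first
        if error_vs_reference(m) > tol:
            break  # the error has started to grow: stop shrinking
        adequate = m
    return adequate


# Usage with a synthetic error model (illustrative numbers, not results):
# widths of 10 and above match the reference, smaller ones do not.
toy_error = lambda m: 0.0 if m >= 10 else 1.0 / m
print(smallest_adequate_width([90, 40, 20, 10, 5, 2], toy_error, tol=0.05))  # prints 10
```

Stopping at the first failure mirrors the procedure in the text, which looks for the point where shrinking the network first degrades the result rather than for the global minimum of the error.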

Phase field fracture

The phase field model of fracture is a variational approach to fracture mechanics, which simultaneously finds the displacement and damage fields by minimizing a suitably defined energy functional [22]. Our study is based on the one-dimensional example problem given in [23], which uses the Deep Ritz method to determine the displacement and damage fields that minimize the fracture energy functional. This energy functional is given by

\[ \Pi\big(u(x),\alpha(x)\big) = \Pi^u + \Pi^{\alpha} = \int_0^1 \left[ \frac{1}{2}(1-\alpha)^2 \Big(\frac{\partial u}{\partial x}\Big)^2 + \frac{3}{8}\Big( \alpha + \ell^2 \Big(\frac{\partial \alpha}{\partial x}\Big)^2 \Big) \right] dx, \]

where \(x\) is the spatial coordinate, \(u(x)\) is the displacement, \(\alpha(x)\in[0,1]\) is the crack density, and \(\ell\) is a length scale determining the width of smoothed cracks. The energy functional comprises two components, \(\Pi^u\) and \(\Pi^{\alpha}\), which are the elastic and fracture energies, respectively. As in the cited work, we take \(\ell=0.05\). The displacement and phase field are discretized with a single neural network \(N: \mathbb R \rightarrow \mathbb R^2\) with parameters \(\boldsymbol\theta\). The problem is driven by an applied tensile displacement at the right end, which we denote \(U\). Boundary conditions are built into the two fields with

\[ \begin{bmatrix} u(x;\boldsymbol\theta) \\ \alpha(x;\boldsymbol\theta) \end{bmatrix} = \begin{bmatrix} x(1-x)\,N_1(x;\boldsymbol\theta) + Ux \\ x(1-x)\,N_2(x;\boldsymbol\theta) \end{bmatrix}, \]

where \(N_i\) refers to the \(i\)-th output of the network, and the Dirichlet boundary conditions on the crack density are used to suppress cracking at the edges of the domain. In [23], a four-hidden-layer MLP with a width of 50 is used to represent the two solution fields. If we neglect the bias on the final layer, this corresponds to 7850 trainable parameters. For all of our studies, we use a two-hidden-layer network with hyperbolic tangent activation functions and no bias on the output layer since, in our experience, such networks suffice to represent the solution fields of interest. If both hidden layers have width \(M\), the total number of parameters in this network is \(M^2+5M\). When \(M=86\), we obtain 7826 trainable parameters. In the absence of an analytical solution, we use this network as the large-network reference solution to which the smaller networks are compared.
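A minimal sketch of the hard-constrained ansatz and the energy functional above, assuming a generic callable `net` (hypothetical) in place of the trained MLP and using midpoint quadrature with central differences for \(\partial u/\partial x\) and \(\partial\alpha/\partial x\). A Deep Ritz implementation would instead differentiate the network with automatic differentiation and minimize the energy with ADAM; this code only evaluates the functional.

```python
from typing import Callable, Tuple

# Hypothetical stand-in for the network N: R -> R^2 (returns N_1(x), N_2(x)).
Net = Callable[[float], Tuple[float, float]]


def fields(x: float, net: Net, U: float) -> Tuple[float, float]:
    """Hard-constrained ansatz: u = x(1-x) N_1 + U x, alpha = x(1-x) N_2."""
    n1, n2 = net(x)
    bubble = x * (1.0 - x)  # vanishes at x = 0 and x = 1
    return bubble * n1 + U * x, bubble * n2


def phase_field_energy(net: Net, U: float, ell: float = 0.05,
                       n: int = 2000, h: float = 1e-5) -> Tuple[float, float]:
    """Midpoint-rule estimates of (Pi^u, Pi^alpha) on [0, 1].

    Derivatives use central differences; a Deep Ritz code would instead use
    automatic differentiation of the network.
    """
    dx = 1.0 / n
    pi_u = pi_alpha = 0.0
    for i in range(n):
        x = (i + 0.5) * dx
        u, a = fields(x, net, U)
        u_p, a_p = fields(x + h, net, U)
        u_m, a_m = fields(x - h, net, U)
        du = (u_p - u_m) / (2.0 * h)
        da = (a_p - a_m) / (2.0 * h)
        pi_u += 0.5 * (1.0 - a) ** 2 * du ** 2 * dx
        pi_alpha += 0.375 * (a + ell ** 2 * da ** 2) * dx
    return pi_u, pi_alpha


# Undamaged bar (N = 0): u = U x, alpha = 0, so Pi^u = U^2 / 2 and Pi^alpha = 0.
print(phase_field_energy(lambda x: (0.0, 0.0), U=0.2))
```

Because the factor \(x(1-x)\) vanishes at both ends, `fields` returns \(u(0)=0\), \(u(1)=U\), and \(\alpha(0)=\alpha(1)=0\) for any network output, so the optimizer never has to learn the boundary conditions.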

To generate the solution fields, we minimize the total potential energy using ADAM optimization with a learning rate of \(1 \times 10^{-3}\). Total fracture of the bar is observed around \(U=0.6\). As in the paper, we compute the elastic and fracture energies over a range of applied displacements, where ADAM is run for 3500 epochs at each displacement increment to obtain the solution fields. These “loading curves” are used to compare the performance of networks of different sizes. Our experiment is carried out with 8 different network sizes, each comprising 20 increments of the applied displacement to build the loading curves. See Figure 1 for the loading curves computed with the different network sizes. Only when there are \(|\boldsymbol\theta|=14\) parameters, which corresponds to a network of width 2, do we see a divergence from the loading curves of the large-network reference solution. The smallest network that performs well has 50 parameters (width 5), which is \(157\times\) smaller than the network used in the paper. Figure 2 confirms that this small network is capable of approximating the discontinuous displacement field, as well as the localized damage field.
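The parameter counts quoted in this section, and the \(157\times\) ratio, follow from counting the weights and biases of a fully connected network. The helper below is an illustrative sketch that assumes equal-width hidden layers, a bias on every hidden layer, and no output bias, which is the convention stated in the text.

```python
def mlp_param_count(n_in: int, width: int, depth: int, n_out: int,
                    output_bias: bool = False) -> int:
    """Trainable parameters of an MLP with `depth` hidden layers of equal `width`.

    Assumes weights and biases on every hidden layer and, by default, no bias on
    the output layer (the convention used in the text).
    """
    count = n_in * width + width                            # input -> hidden layer 1
    count += (depth - 1) * (width * width + width)          # hidden -> hidden
    count += width * n_out + (n_out if output_bias else 0)  # hidden -> output
    return count


# Phase field fracture: N maps R -> R^2.
print(mlp_param_count(1, 50, 4, 2))  # 7850, the network from [23]
print(mlp_param_count(1, 86, 2, 2))  # 7826 = 86**2 + 5*86, the reference
print(mlp_param_count(1, 5, 2, 2))   # 50, the smallest adequate network
print(mlp_param_count(1, 50, 4, 2) / mlp_param_count(1, 5, 2, 2))  # 157.0
```

The same helper with \((n_{\text{in}}, n_{\text{out}}) = (2, 1)\) or \((3, 3)\) reproduces the counts quoted later for Burgers’ equation (8576, 8550, 150) and for the hyperelastic cube (8480, 8448, 20).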

Burgers’ equation

We now study the effect of network size on a common model problem from fluid mechanics. Burgers’ equation is frequently used to test numerical solution methods because of its nonlinearity and its tendency to form sharp features. The viscous Burgers’ equation with homogeneous Dirichlet boundaries is given by

\[ \frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} = \nu \frac{\partial^2 u}{\partial x^2}, \quad u(x,0) = u_0(x), \quad u(-1,t)=u(1,t) = 0, \]

where \(x\in[-1,1]\) is the spatial coordinate, \(t\in[0,T]\) is the time coordinate, \(u(x,t)\) is the velocity field, \(\nu\) is the viscosity, and \(u_0(x)\) is the initial velocity profile. In [24], a neural network discretization of the velocity field is used to obtain a solution to the governing differential equation. Their network contains 3 hidden layers with 64 neurons in each layer, corresponding to 8576 trainable parameters. Again, we use a two-hidden-layer network, which has \(M^2+5M\) trainable parameters, where \(M\) is the width of each layer. If we take \(M=90\), we obtain 8550 trainable parameters in our network. We take the solution from this network to be the reference solution \(u_{\text{ref}}(x,t)\) and measure the discrepancy between it and the velocity fields from smaller networks. We do this with an error function given by

\[ E\big(u(x,t)\big) = \frac{\int_{\Omega} |u(x,t) - u_{\text{ref}}(x,t)|\, d\Omega}{\int_{\Omega} |u_{\text{ref}}(x,t)|\, d\Omega}, \]

where \(\Omega = [-1,1] \times [0,T]\) is the computational domain. To solve Burgers’ equation, we adopt the standard PINNs approach and minimize the squared error of the governing equation:

\[ \underset{\boldsymbol\theta}{\text{argmin}}\; L(\boldsymbol\theta), \quad L(\boldsymbol\theta) = \frac{1}{2} \int_{\Omega} \Big(\frac{\partial u}{\partial t} + u\frac{\partial u}{\partial x} - \nu \frac{\partial^2 u}{\partial x^2}\Big)^2 d\Omega. \]
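As a rough illustration of this loss, the sketch below evaluates the residual pointwise and integrates its square with a midpoint rule. It is an assumption-laden stand-in: `u` is a hypothetical callable in place of the network-based velocity field, and central differences replace the automatic differentiation and collocation sampling a PINN code would normally use.

```python
from typing import Callable

# Hypothetical stand-in for the network-based velocity field u(x, t; theta).
Field = Callable[[float, float], float]


def burgers_residual(u: Field, x: float, t: float, nu: float, h: float = 1e-4) -> float:
    """Pointwise residual u_t + u*u_x - nu*u_xx, using central differences."""
    u_c = u(x, t)
    u_t = (u(x, t + h) - u(x, t - h)) / (2.0 * h)
    u_x = (u(x + h, t) - u(x - h, t)) / (2.0 * h)
    u_xx = (u(x + h, t) - 2.0 * u_c + u(x - h, t)) / h ** 2
    return u_t + u_c * u_x - nu * u_xx


def burgers_loss(u: Field, nu: float, T: float, nx: int = 100, nt: int = 100) -> float:
    """Midpoint-rule estimate of L = 1/2 * integral of residual^2 over [-1,1] x [0,T]."""
    dx, dt = 2.0 / nx, T / nt
    total = 0.0
    for i in range(nx):
        x = -1.0 + (i + 0.5) * dx
        for j in range(nt):
            t = (j + 0.5) * dt
            total += burgers_residual(u, x, t, nu) ** 2 * dx * dt
    return 0.5 * total
```

As a sanity check, \(u = x/(1+t)\) satisfies the PDE exactly (its second \(x\)-derivative vanishes), so its loss is near zero, whereas an arbitrary field such as \(u = x^2\) gives a clearly positive loss. Neither field satisfies the boundary conditions; they only exercise the residual.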

The velocity field is discretized with the help of an MLP network \(N(x,t;\boldsymbol\theta)\), and the boundary and initial conditions are built in with a distance function-type approach [25]:

\[ u(x,t;\boldsymbol\theta) = (1+x)(1-x)\,t\,N(x,t;\boldsymbol\theta) + u_0(x)\Big(1-\frac{t}{T}\Big). \]

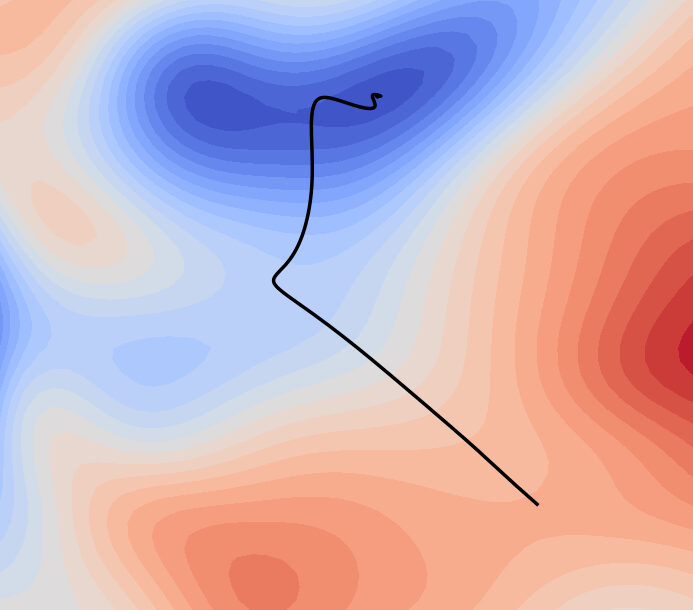

In this problem, we take the viscosity to be \(\nu=0.01\) and the final time to be \(T=2\). The initial condition is given by \(u_0(x) = -\sin(\pi x)\), which leads to the well-known shock pattern at \(x=0\). We run ADAM optimization for \(1.5 \times 10^{4}\) epochs with a learning rate of \(1.5 \times 10^{-3}\) to solve the optimization problem at each network size. By sweeping over 8 network sizes, we again look for the parameter count at which the solution departs from the reference solution. Note that we verify our reference solution against a spectral solver to ensure the accuracy of our implementation. See Figure 3 for the results. All networks with \(|\boldsymbol\theta|\geq 150\) parameters (width \(M \geq 10\)) show approximately equal performance. As such, the original network is overparameterized by a factor of 57.
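The error measure \(E\) is straightforward to approximate once both velocity fields can be evaluated at arbitrary \((x,t)\). In the sketch below, `u` and `u_ref` are hypothetical callables (for instance, a small network and the large-network reference), and the integrals are replaced by a midpoint rule on an assumed uniform grid whose size is an arbitrary choice.

```python
from typing import Callable

Field = Callable[[float, float], float]  # hypothetical stand-in for a velocity field u(x, t)


def relative_l1_error(u: Field, u_ref: Field, T: float, nx: int = 200, nt: int = 200) -> float:
    """Midpoint-rule estimate of E(u) = int|u - u_ref| dOmega / int|u_ref| dOmega.

    Omega = [-1, 1] x [0, T]; the constant cell area dx*dt cancels in the ratio.
    """
    dx, dt = 2.0 / nx, T / nt
    num = den = 0.0
    for i in range(nx):
        x = -1.0 + (i + 0.5) * dx
        for j in range(nt):
            t = (j + 0.5) * dt
            ref = u_ref(x, t)
            num += abs(u(x, t) - ref)
            den += abs(ref)
    return num / den
```

Because the measure is normalized by \(\int_\Omega |u_{\text{ref}}|\,d\Omega\), a field that is uniformly 10% too large gives \(E = 0.1\) regardless of the magnitude of the reference.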

Neohookean hyperelasticity

In this example, we consider the nonlinearly elastic deformation of a cube under a prescribed displacement. The strain energy density of a 3D hyperelastic solid is given by the compressible Neohookean model [26] as

\[ \Psi\big(\mathbf{u}(\mathbf{X})\big) = \frac{\ell_1}{2}\big(I_1 - 3\big) - \ell_1 \ln J + \frac{\ell_2}{2}\big(\ln J\big)^2, \]

where \(\ell_1\) and \(\ell_2\) are material properties, which we take as constants. The strain energy uses the following definitions:

\[ \mathbf{F} = \mathbf{I} + \frac{\partial \mathbf{u}}{\partial \mathbf{X}}, \quad I_1 = \mathbf{F} : \mathbf{F}, \quad J = \det(\mathbf{F}), \]

where \(\mathbf{u}\) is the displacement field, \(\mathbf{X}\) is the position in the reference configuration, and \(\mathbf F\) is the deformation gradient tensor. The displacement field is obtained by minimizing the total potential energy, given by

\[ \Pi\big(\mathbf{u}(\mathbf{X})\big) = \int_{\Omega} \Big(\Psi\big(\mathbf{u}(\mathbf{X})\big) - \mathbf{b} \cdot \mathbf{u}\Big)\, d\Omega - \int_{\partial \Omega} \mathbf{t} \cdot \mathbf{u}\, dS, \]

where \(\Omega\) is the undeformed configuration of the body, \(\mathbf{b}\) is a body force per unit volume, and \(\mathbf{t}\) is an applied surface traction. Our investigation into the network size is inspired by [27], in which the Deep Ritz method is used to obtain a minimizer of the hyperelastic total potential energy functional. However, we choose to use the Neohookean model of the strain energy, as opposed to the Lopez-Pamies model employed there. As in the cited work, we take the undeformed configuration to be the unit cube \(\Omega=[0,1]^3\) and subject the cube to a uniaxial strain state. To enforce this strain state, we apply a displacement \(U\) in the \(X_3\) direction on the top surface of the cube. Roller supports, which zero only the \(X_3\) component of the displacement, are applied on the bottom surface. All other surfaces are traction-free, which is enforced weakly by the chosen energy functional. The boundary conditions are satisfied automatically by discretizing the displacement as

\[ \begin{bmatrix} u_1(\mathbf{X};\boldsymbol\theta) \\ u_2(\mathbf{X};\boldsymbol\theta) \\ u_3(\mathbf{X};\boldsymbol\theta) \end{bmatrix} = \begin{bmatrix} X_3\, N_1(\mathbf{X};\boldsymbol\theta) \\ X_3\, N_2(\mathbf{X};\boldsymbol\theta) \\ \sin(\pi X_3)\, N_3(\mathbf{X};\boldsymbol\theta) + U X_3 \end{bmatrix}, \]

where \(N_i\) is the \(i\)-th component of the network output. In the cited work, a six-hidden-layer network of width 40 is used to discretize the three components of the displacement field. This corresponds to 8480 trainable parameters. Given that the network is a map \(N: \mathbb R^3 \rightarrow \mathbb R^3\), a two-hidden-layer network of width \(M\) has \(M^2+8M\) trainable parameters when no bias is applied on the output layer. Thus, if we take \(M=88\), our network has 8448 trainable parameters. We take this network architecture to be the large-network reference.

In [27], the relationship between the normal component of the first Piola-Kirchhoff stress tensor \(\mathbf{P}\) in the direction of the applied displacement and the corresponding component of the deformation gradient \(\mathbf{F}\) was computed to verify their Deep Ritz implementation. Here, we study the relationship between this tensile stress and the applied displacement \(U\). The first Piola-Kirchhoff stress tensor is obtained from the strain energy density as

\[ \mathbf{P} = \frac{\partial \Psi}{\partial \mathbf{F}} = \ell_1\big(\mathbf{F} - \mathbf{F}^{-T}\big) + \ell_2\, \mathbf{F}^{-T} \ln J. \]
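The expression for \(\mathbf P\) can be checked numerically by differentiating \(\Psi\) with respect to each entry of \(\mathbf F\). The following plain-Python sketch (no tensor library assumed) compares the closed form against central differences for an arbitrary deformation gradient with \(J>0\); the test matrix and step size are illustrative choices, not values from the study.

```python
import math
from typing import List

Mat = List[List[float]]  # 3x3 matrix as nested lists


def det3(F: Mat) -> float:
    """Determinant J = det(F) by cofactor expansion along the first row."""
    return (F[0][0] * (F[1][1] * F[2][2] - F[1][2] * F[2][1])
            - F[0][1] * (F[1][0] * F[2][2] - F[1][2] * F[2][0])
            + F[0][2] * (F[1][0] * F[2][1] - F[1][1] * F[2][0]))


def inv_transpose3(F: Mat) -> Mat:
    """F^{-T} = cof(F) / det(F); cyclic indices give the cofactor signs automatically."""
    J = det3(F)
    out = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            i1, i2, j1, j2 = (i + 1) % 3, (i + 2) % 3, (j + 1) % 3, (j + 2) % 3
            out[i][j] = (F[i1][j1] * F[i2][j2] - F[i1][j2] * F[i2][j1]) / J
    return out


def psi(F: Mat, l1: float, l2: float) -> float:
    """Compressible Neohookean strain energy density."""
    J = det3(F)
    I1 = sum(F[i][j] ** 2 for i in range(3) for j in range(3))  # I1 = F : F
    return 0.5 * l1 * (I1 - 3.0) - l1 * math.log(J) + 0.5 * l2 * math.log(J) ** 2


def piola(F: Mat, l1: float, l2: float) -> Mat:
    """First Piola-Kirchhoff stress P = l1 (F - F^{-T}) + l2 F^{-T} ln J."""
    FiT, lnJ = inv_transpose3(F), math.log(det3(F))
    return [[l1 * (F[i][j] - FiT[i][j]) + l2 * FiT[i][j] * lnJ for j in range(3)]
            for i in range(3)]


def piola_fd(F: Mat, l1: float, l2: float, h: float = 1e-6) -> Mat:
    """dPsi/dF_ij by central differences, one entry of F at a time."""
    P = [[0.0] * 3 for _ in range(3)]
    for i in range(3):
        for j in range(3):
            Fp, Fm = [r[:] for r in F], [r[:] for r in F]
            Fp[i][j] += h
            Fm[i][j] -= h
            P[i][j] = (psi(Fp, l1, l2) - psi(Fm, l1, l2)) / (2.0 * h)
    return P


F = [[1.10, 0.05, 0.00], [0.02, 0.95, 0.03], [0.00, 0.01, 1.20]]  # arbitrary, det > 0
gap = max(abs(a - b) for ra, rb in zip(piola(F, 1.0, 0.25), piola_fd(F, 1.0, 0.25))
          for a, b in zip(ra, rb))
print(gap)  # small, limited by finite-difference error
```

At \(\mathbf F = \mathbf I\) the stress vanishes identically, as expected for the undeformed state.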

Given the unit cube geometry and the uniaxial strain state, the deformation gradient is given by

\[ \mathbf{F} = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1+U \end{bmatrix}. \]

With these two equations, we compute the tensile stress \(P_{33}\) and the strain energy as functions of the applied displacement:

\[ \begin{aligned} P_{33} &= \ell_1\Big(1 + U - \frac{1}{1+U}\Big) + \ell_2\frac{\ln(1+U)}{1+U}, \\ \Pi &= \frac{\ell_1}{2}\big(2+(1+U)^2-3\big) - \ell_1 \ln(1+U) + \frac{\ell_2}{2}\big(\ln(1+U)\big)^2. \end{aligned} \]
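Because the cube has unit volume and the deformation is homogeneous, \(\Pi(U)\) equals \(\Psi\) evaluated at the uniaxial \(\mathbf F\), and by the chain rule \(d\Pi/dU = P_{33}\). The short sketch below evaluates both analytical curves, using the material parameters \(\ell_1=1\), \(\ell_2=0.25\) of this example as defaults, and confirms that relation with a finite difference at an illustrative displacement.

```python
import math


def p33_exact(U: float, l1: float = 1.0, l2: float = 0.25) -> float:
    """Analytical tensile stress P_33 for F = diag(1, 1, 1 + U)."""
    s = 1.0 + U  # axial stretch F_33
    return l1 * (s - 1.0 / s) + l2 * math.log(s) / s


def energy_exact(U: float, l1: float = 1.0, l2: float = 0.25) -> float:
    """Analytical strain energy of the unit cube for F = diag(1, 1, 1 + U)."""
    s = 1.0 + U
    return 0.5 * l1 * (2.0 + s * s - 3.0) - l1 * math.log(s) + 0.5 * l2 * math.log(s) ** 2


# Consistency check: on the unit cube, dPi/dU should equal P_33.
U, h = 0.3, 1e-6
slope = (energy_exact(U + h) - energy_exact(U - h)) / (2.0 * h)
print(abs(slope - p33_exact(U)))  # small, limited by finite-difference error
```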

These analytical solutions can be used to verify our implementation of the hyperelastic model, as well as to gauge the performance of networks of different sizes. Using the neural network model, the tensile stress and the strain energy are computed at each applied displacement with

\[ P_{33} = \int_{\Omega} \Big[\ell_1\big(\mathbf{F} - \mathbf{F}^{-T}\big) + \ell_2\, \mathbf{F}^{-T}\ln J\Big]_{33} d\Omega, \quad \Pi = \int_{\Omega} \Psi\big(\mathbf{u}(\mathbf{X})\big)\, d\Omega, \]

where the displacement field is constructed from parameters obtained with the Deep Ritz method. To compute the stress, we average over the entire domain (which has unit volume), given that we expect a constant stress state. In this example, the material parameters are set to \(\ell_1=1\) and \(\ell_2=0.25\). We iterate over 8 network sizes and take 10 load steps at each size to obtain the stress and strain energy as functions of the applied displacement. See Figure 4 for the results. All networks accurately reproduce the strain energy and stress loading curves. This includes even the network of width 2, with only 20 trainable parameters. Thus, the original network has \(424\times\) more parameters than necessary to represent the results of the tensile test.
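The domain-averaged quantities above can be sketched as a midpoint-rule sum over the unit cube, with \(\mathbf F\) obtained from the displacement by central differences. The callable `u` is a hypothetical stand-in for the network-based displacement ansatz, and the grid size `n` and step `h` are arbitrary choices; a Deep Ritz code would use automatic differentiation and its own quadrature points. For the exact uniaxial field \(\mathbf u = (0, 0, U X_3)\), the averages reproduce the closed-form \(P_{33}\) and \(\Pi\).

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical stand-in for the displacement ansatz X -> (u1, u2, u3).
Displacement = Callable[[Sequence[float]], Sequence[float]]


def averaged_response(u: Displacement, l1: float = 1.0, l2: float = 0.25,
                      n: int = 8, h: float = 1e-5) -> Tuple[float, float]:
    """Midpoint-rule averages of P_33 and Psi over the unit cube [0, 1]^3.

    The cube has unit volume, so these averages equal the integrals in the text.
    """
    p33_sum = psi_sum = 0.0
    for a in range(n):
        for b in range(n):
            for c in range(n):
                X = [(a + 0.5) / n, (b + 0.5) / n, (c + 0.5) / n]
                # F = I + du/dX, column j from a central difference in X_j.
                F: List[List[float]] = [[float(i == j) for j in range(3)] for i in range(3)]
                for j in range(3):
                    Xp, Xm = list(X), list(X)
                    Xp[j] += h
                    Xm[j] -= h
                    up, um = u(Xp), u(Xm)
                    for i in range(3):
                        F[i][j] += (up[i] - um[i]) / (2.0 * h)
                J = (F[0][0] * (F[1][1] * F[2][2] - F[1][2] * F[2][1])
                     - F[0][1] * (F[1][0] * F[2][2] - F[1][2] * F[2][0])
                     + F[0][2] * (F[1][0] * F[2][1] - F[1][1] * F[2][0]))
                fit33 = (F[0][0] * F[1][1] - F[0][1] * F[1][0]) / J  # (F^{-T})_33 = cofactor_33 / J
                I1 = sum(F[i][j] ** 2 for i in range(3) for j in range(3))
                p33_sum += l1 * (F[2][2] - fit33) + l2 * fit33 * math.log(J)
                psi_sum += 0.5 * l1 * (I1 - 3.0) - l1 * math.log(J) + 0.5 * l2 * math.log(J) ** 2
    cells = n ** 3
    return p33_sum / cells, psi_sum / cells


U = 0.3
p33, energy = averaged_response(lambda X: (0.0, 0.0, U * X[2]))
print(p33, energy)
```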

A counterexample

In the fourth and final example, we solve a regression problem to show the failure of small networks to fit high-frequency functions. The one-dimensional regression problem is given by

\[ \underset{\boldsymbol\theta}{\text{argmin}}\; L(\boldsymbol\theta), \quad L(\boldsymbol\theta) = \frac{1}{2}\int_0^1 \big(v(x) - N(x;\boldsymbol\theta)\big)^2 dx, \]

where \(N\) is a two-hidden-layer MLP network and \(v(x)\) is the target function. In this example, we take \(v(x)=\sin^5(20\pi x)\). We iterate over 5 different network sizes and report the converged loss value \(L\) as an error measure. We train using ADAM optimization for \(5 \times 10^4\) epochs with a learning rate of \(5 \times 10^{-3}\). See Figure 5 for the results. Unlike in the previous three examples, the target function is sufficiently complex that large networks are required to represent it. The converged error decreases monotonically with the parameter count. We also time the training procedure at each network size and report how the run time (in seconds) grows with the parameter count. This example illustrates that representing oscillatory functions requires larger networks, and that the parameter count drives up the cost of training.
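To make the loss scale in this example concrete, the sketch below evaluates \(L\) by midpoint quadrature for an arbitrary callable `model`, a hypothetical stand-in for the trained network. The trivial predictor \(N \equiv 0\) provides a reference value: a network whose converged loss sits near it has essentially failed to capture the oscillations.

```python
import math
from typing import Callable


def target(x: float) -> float:
    """Oscillatory target v(x) = sin^5(20*pi*x) used in the counterexample."""
    return math.sin(20.0 * math.pi * x) ** 5


def regression_loss(model: Callable[[float], float], n: int = 1000) -> float:
    """Midpoint-rule estimate of L = 1/2 * int_0^1 (v(x) - N(x))^2 dx.

    `model` is a hypothetical stand-in for the trained network N(x; theta).
    """
    dx = 1.0 / n
    xs = [(i + 0.5) * dx for i in range(n)]
    return 0.5 * sum((target(x) - model(x)) ** 2 for x in xs) * dx


# Baseline: the trivial predictor N = 0. Since the mean of sin^10 over a period
# is C(10,5)/2^10 = 252/1024, the exact loss is 0.5 * 252/1024 = 0.123046875.
print(regression_loss(lambda x: 0.0))
```

With 1000 midpoints, the quadrature is exact (up to round-off) for this trigonometric polynomial, whose highest frequency is well below the grid resolution.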

Conclusion

While tuning the hyperparameters governing the loss function, optimization process, and activation function is common in the PINNs community, it is less common to tune the network size. With three example problems taken from the literature, we have shown that very small networks often suffice to represent PDE solutions, even when there are discontinuities and/or other localized features. See Table 1 for a summary of our results on the potential for using small networks. To qualify our findings, we then presented the case of regression to a high-frequency target function, which required a large number of parameters to fit accurately. Thus, our conclusions are as follows: solution fields that do not oscillate can often be represented by small networks, even when they contain localized features such as cracks and shocks. Because the cost of training scales with the number of parameters, smaller networks can expedite training for physics-informed problems with non-oscillatory solution fields. In our experience, such solution fields appear regularly in practical problems from heat conduction and static solid mechanics. These problems and others therefore represent opportunities to make PINN solutions more computationally efficient by shrinking the network, and thus more competitive with traditional approaches such as the finite element method.

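Table 1: Overparameterization factor of the network used in each cited work, relative to the smallest network found to reproduce its results.

| Problem | Overparameterization |
|---|---|
| Phase field fracture [23] | 157× |
| Burgers’ equation [24] | 57× |
| Neohookean hyperelasticity [27] | 424× |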

References

[1] Justin Sirignano and Konstantinos Spiliopoulos. DGM: A deep learning algorithm for solving partial differential equations. Journal of Computational Physics, 375:1339–1364, December 2018. arXiv:1708.07469 [q-fin].

[2] M. Raissi, P. Perdikaris, and G.E. Karniadakis. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. Journal of Computational Physics, 378:686–707, February 2019.

[3] Weinan E and Bing Yu. The Deep Ritz method: A deep learning-based numerical algorithm for solving variational problems, September 2017. arXiv:1710.00211 [cs].

[4] Yaohua Zang, Gang Bao, Xiaojing Ye, and Haomin Zhou. Weak Adversarial Networks for High-dimensional Partial Differential Equations. Journal of Computational Physics, 411:109409, June 2020. arXiv:1907.08272 [math].

[5] Reza Khodayi-Mehr and Michael M. Zavlanos. VarNet: Variational Neural Networks for the Solution of Partial Differential Equations, December 2019. arXiv:1912.07443 [cs].

[6] E. Kharazmi, Z. Zhang, and G. E. Karniadakis. Variational Physics-Informed Neural Networks For Solving Partial Differential Equations, November 2019. arXiv:1912.00873 [cs].

[7] Xiaowei Jin, Shengze Cai, Hui Li, and George Em Karniadakis. NSFnets (Navier-Stokes Flow nets): Physics-informed neural networks for the incompressible Navier-Stokes equations. Journal of Computational Physics, 426:109951, February 2021.

[8] Shengze Cai, Zhicheng Wang, Sifan Wang, Paris Perdikaris, and George Em Karniadakis. Physics-Informed Neural Networks for Heat Transfer Problems. Journal of Heat Transfer, 143(060801), April 2021.

[9] Min Liu, Zhiqiang Cai, and Karthik Ramani. Deep Ritz method with adaptive quadrature for linear elasticity. Computer Methods in Applied Mechanics and Engineering, 415:116229, October 2023.

[10] Sifan Wang, Ananyae Kumar Bhartari, Bowen Li, and Paris Perdikaris. Gradient Alignment in Physics-informed Neural Networks: A Second-Order Optimization Perspective, September 2025. arXiv:2502.00604 [cs].

[11] Jorge F. Urbán, Petros Stefanou, and Jose A. Pons. Unveiling the optimization process of physics informed neural networks: How accurate and competitive can PINNs be? Journal of Computational Physics, 523:113656, February 2025.

[12] Conor Rowan, Kai Hampleman, Kurt Maute, and Alireza Doostan. Boundary condition enforcement with PINNs: a comparative study and verification on 3D geometries, December 2025. arXiv:2512.14941 [math].

[13] Nasim Rahaman, Aristide Baratin, Devansh Arpit, Felix Draxler, Min Lin, Fred A. Hamprecht, Yoshua Bengio, and Aaron Courville. On the Spectral Bias of Neural Networks, May 2019. arXiv:1806.08734 [stat].

[14] Mirco Pezzoli, Fabio Antonacci, and Augusto Sarti. Implicit neural representation with physics-informed neural networks for the reconstruction of the early part of room impulse responses. In Proceedings of the 10th Convention of the European Acoustics Association Forum Acusticum 2023, pages 2177–2184, January 2024.

[15] Shaghayegh Fazliani, Zachary Frangella, and Madeleine Udell. Enhancing Physics-Informed Neural Networks Through Feature Engineering, June 2025. arXiv:2502.07209 [cs].

[16] Duong V. Dung, Nguyen D. Song, Pramudita S. Palar, and Lavi R. Zuhal. On The Choice of Activation Functions in Physics-Informed Neural Network for Solving Incompressible Fluid Flows. In AIAA SCITECH 2023 Forum. American Institute of Aeronautics and Astronautics. Eprint: https://arc.aiaa.org/doi/pdf/10.2514/6.2023-1803.

[17] Honghui Wang, Lu Lu, Shiji Song, and Gao Huang. Learning Specialized Activation Functions for Physics-informed Neural Networks. Communications in Computational Physics, 34(4):869–906, June 2023. arXiv:2308.04073 [cs].

[18] Zhao Zhang, Xia Yan, Piyang Liu, Kai Zhang, Renmin Han, and Sheng Wang. A physics-informed convolutional neural network for the simulation and prediction of two-phase Darcy flows in heterogeneous porous media. Journal of Computational Physics, 477:111919, March 2023.

[19] Pu Ren, Chengping Rao, Yang Liu, Jianxun Wang, and Hao Sun. PhyCRNet: Physics-informed Convolutional-Recurrent Network for Solving Spatiotemporal PDEs. Computer Methods in Applied Mechanics and Engineering, 389:114399, February 2022. arXiv:2106.14103 [cs].

[20] Mikhail Belkin, Daniel Hsu, Siyuan Ma, and Soumik Mandal. Reconciling modern machine-learning practice and the classical bias–variance trade-off. Proceedings of the National Academy of Sciences, 116(32):15849–15854, August 2019.

[21] Arthur Jacot, Franck Gabriel, and Clément Hongler. Neural Tangent Kernel: Convergence and Generalization in Neural Networks, February 2020. arXiv:1806.07572 [cs].

[22] B. Bourdin, G. A. Francfort, and J-J. Marigo. Numerical experiments in revisited brittle fracture. Journal of the Mechanics and Physics of Solids, 48(4):797–826, April 2000.

[23] M. Manav, R. Molinaro, S. Mishra, and L. De Lorenzis. Phase-field modeling of fracture with physics-informed deep learning. Computer Methods in Applied Mechanics and Engineering, 429:117104, September 2024.

[24] Xianke Wang, Shichao Yi, Huangliang Gu, Jing Xu, and Wenjie Xu. WF-PINNs: solving forward and inverse problems of burgers equation with steep gradients using weak-form physics-informed neural networks. Scientific Reports, 15(1):40555, November 2025.

[25] N. Sukumar and Ankit Srivastava. Exact imposition of boundary conditions with distance functions in physics-informed deep neural networks. Computer Methods in Applied Mechanics and Engineering, 389:114333, February 2022.

[26] Javier Bonet and Richard D. Wood. Nonlinear Continuum Mechanics for Finite Element Analysis. Cambridge University Press, Cambridge, 2nd edition, 2008.

[27] Diab W. Abueidda, Seid Koric, Rashid Abu Al-Rub, Corey M. Parrott, Kai A. James, and Nahil A. Sobh. A deep learning energy method for hyperelasticity and viscoelasticity. European Journal of Mechanics – A/Solids, 95:104639, September 2022. arXiv:2201.08690 [cs].