After dinner in downtown San Francisco, I said goodbye to friends and pulled out my phone to figure out how to get home. It was close to 11:30 pm, and Uber estimates were unusually long. I opened Google Maps and looked at walking directions instead. The routes were similar in distance, but I hesitated, not because of how long the walk would take, but because I wasn’t sure how different parts of the route would feel at that time of night. Google Maps could tell me the fastest way home, but it couldn’t help answer the question I was actually asking: how can I filter for a route that takes me through safer blocks rather than the fastest route?

Defining the Problem Statement

At a high level, the problem I wanted to solve was this: given a starting location, ending location, time of day, and day of week, how can we estimate the expected risk along a walking route?

For example, if I want to walk from Chinatown to Market & Van Ness, Google Maps presents a couple of route options, all taking roughly 40 minutes. While it’s useful to compare distance and duration, that doesn’t answer a more contextual question: which parts of these routes tend to look different depending on when I make the walk? How does the same route compare at 9 am on a Tuesday versus 11 pm on a Saturday?

As walks get longer, or pass through areas with very different historical activity patterns, these questions become harder to answer intuitively. While San Francisco isn’t uniquely unsafe compared to other major cities, public safety is still a meaningful consideration, especially when walking through unfamiliar areas or at unfamiliar times. My goal was to build a tool for locals and visitors alike that adds context to these decisions, using historical data and machine learning to surface how risk varies across space and time without reducing the city to simplistic labels.

Getting the Data + Pre-Processing

Fetching the Raw Dataset

The San Francisco City and County Departments publish police incident reports daily through the San Francisco Open Data portal. The dataset spans from January 1, 2018 to the present and includes structured information such as incident category, subcategory, description, time, and location (latitude and longitude).
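For readers who want to pull the same data, here is a minimal sketch of a paged query against the portal’s Socrata (SODA) API using only the Python standard library. The dataset ID `wg3w-h783` and the column names are my assumptions about the "Police Department Incident Reports: 2018 to Present" dataset rather than details from this project, so verify them on data.sfgov.org first.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumption: SODA endpoint for "Police Department Incident Reports: 2018 to Present".
BASE_URL = "https://data.sfgov.org/resource/wg3w-h783.json"

# Assumed column names; check them against the dataset's schema page.
COLUMNS = [
    "incident_datetime",
    "incident_category",
    "incident_subcategory",
    "incident_description",
    "latitude",
    "longitude",
]


def build_query_url(limit=50_000, offset=0, since="2018-01-01T00:00:00"):
    """Build the URL for one page of incidents reported on or after `since`."""
    params = {
        "$select": ",".join(COLUMNS),
        "$where": f"incident_datetime >= '{since}' AND latitude IS NOT NULL",
        "$order": "incident_datetime",  # explicit ordering keeps offset paging consistent
        "$limit": limit,
        "$offset": offset,
    }
    return f"{BASE_URL}?{urlencode(params)}"


def fetch_page(limit=50_000, offset=0):
    """Download one page as a list of dicts (needs network access)."""
    with urlopen(build_query_url(limit=limit, offset=offset)) as resp:
        return json.load(resp)
```

Calling `fetch_page` with increasing offsets until it returns an empty list would walk through the full history; an explicit `$order` is what makes offset-based paging predictable.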

Categorizing Reported Incidents

One immediate challenge with this data is that not all incidents represent the same level or type of risk. Treating all reports equally would blur meaningful differences: a minor vandalism report, for example, shouldn’t be weighted the same as a violent incident. To address this, I first extracted all unique combinations of incident category, subcategory, and description, which resulted in a little over 800 distinct incident triplets.
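Extracting the distinct triplets is a single counting pass. A minimal sketch in plain Python, assuming each record is a dict keyed by column names like `incident_category` (an assumption about the schema):

```python
from collections import Counter

TRIPLET_FIELDS = ("incident_category", "incident_subcategory", "incident_description")


def incident_type_counts(records):
    """Count occurrences of each (category, subcategory, description) triplet.

    Missing or null fields become empty strings so they still form a valid key.
    """
    return Counter(
        tuple((record.get(field) or "").strip() for field in TRIPLET_FIELDS)
        for record in records
    )
```

`len(incident_type_counts(records))` is then the number of distinct incident types to score, and the counts show which types dominate the data.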

Rather than scoring individual incidents directly, I used an LLM to assign severity scores to each unique incident type. This allowed me to normalize semantic differences in the data while keeping the scoring consistent and interpretable. Each incident type was scored on three separate dimensions, each on a 0–10 scale:

- Harm score: the potential risk to human safety and passersby

- Property score: the potential for damage to or loss of property

- Public disruption score: the extent to which an incident disrupts normal public activity
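The post doesn’t show the prompt or response format used for scoring, so the following is a hypothetical sketch: it builds a prompt for one incident type and validates a JSON reply on the three 0–10 dimensions. The prompt wording, the JSON key names, and the schema are assumptions, and the actual LLM call is left out because it depends on the provider.

```python
import json

# Hypothetical response keys, one per scoring dimension.
SCORE_KEYS = ("harm", "property", "disruption")


def build_scoring_prompt(category, subcategory, description):
    """Hypothetical prompt asking an LLM to score one incident type on three 0-10 axes."""
    return (
        "Rate this police incident type on three scales from 0 to 10.\n"
        "harm: potential risk to human safety and passersby\n"
        "property: potential for damage to or loss of property\n"
        "disruption: extent to which it disrupts normal public activity\n"
        f"Category: {category}\n"
        f"Subcategory: {subcategory}\n"
        f"Description: {description}\n"
        'Answer with JSON only, e.g. {"harm": 3, "property": 1, "disruption": 2}'
    )


def parse_scores(reply):
    """Parse and validate a JSON reply; raise ValueError on anything malformed."""
    try:
        data = json.loads(reply)  # invalid JSON raises JSONDecodeError, a ValueError
        scores = {key: float(data[key]) for key in SCORE_KEYS}
    except (KeyError, TypeError) as exc:
        raise ValueError(f"malformed score reply: {reply!r}") from exc
    for key, value in scores.items():
        if not 0 <= value <= 10:  # also rejects NaN
            raise ValueError(f"{key} score out of range: {value}")
    return scores
```

Validating every reply before storing it matters here because roughly 800 scores feed every downstream aggregate; one malformed answer would otherwise propagate silently.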

These three scores were later combined to form an overall severity signal for each incident, which could then be aggregated spatially and temporally. This approach made it possible to model risk in a way that reflects both the frequency and the nature of reported incidents, rather than relying on raw counts alone.
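The exact formula for combining the three scores isn’t stated, so the weights below are placeholders chosen only for illustration (with harm weighted most heavily). A normalized weighted average keeps the combined severity on the same 0–10 scale as its inputs:

```python
# Hypothetical weights: the post does not specify how the three scores are combined.
WEIGHTS = {"harm": 0.6, "property": 0.25, "disruption": 0.15}


def combined_severity(scores, weights=WEIGHTS):
    """Weighted average of 0-10 sub-scores, so the result also lies in 0-10."""
    total_weight = sum(weights.values())
    return sum(weight * scores[key] for key, weight in weights.items()) / total_weight
```

Under these placeholder weights, an incident type with a harm score of 8 and zero property and disruption scores would combine to 4.8.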

Geospatial Representation

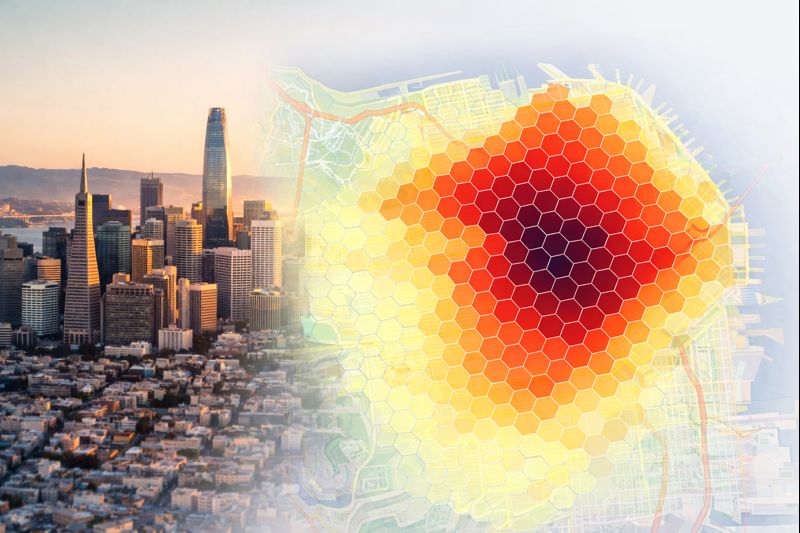

Providing raw latitude and longitude values might not add much value to the ML model, because I needed to aggregate incident context at the block and neighborhood level. I needed a way to map a block or neighborhood to a fixed index, both to simplify feature engineering and to build a consistent spatial mapping. Cut to the seminal engineering blog published by Uber: H3.

Uber’s H3 blog describes how projecting an icosahedron (a 20-faced polyhedron) onto the surface of the earth and hierarchically breaking its faces down into hexagons (plus 12 strategically placed pentagons) can tessellate the entire map. Hexagons are special because they are one of only three regular polygons that tile the plane, and a hexagon’s center point is equidistant from the centers of all its neighbors, which simplifies smoothing over gradients.
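In code, mapping a coordinate to a cell is a single call in the `h3` Python bindings (`h3.latlng_to_cell` in the v4 API; v3 called it `geo_to_h3`). The sketch below assumes resolution 9, whose hexagons average roughly 0.1 km²; that choice is mine, though it matches the resolution of the example cell `89283082873ffff` that appears later in this post. `severity_by_cell` takes the cell function as a parameter, so it can be exercised without `h3` installed.

```python
from collections import defaultdict

# Assumption: resolution 9 (average hexagon area around 0.1 km^2) as a block-scale grid.
H3_RESOLUTION = 9


def latlng_to_cell(lat, lng, res=H3_RESOLUTION):
    """Return the H3 cell ID containing a coordinate (needs the `h3` package, v4 API)."""
    import h3  # imported lazily so severity_by_cell can be used without h3 installed

    return h3.latlng_to_cell(lat, lng, res)


def severity_by_cell(incidents, to_cell=latlng_to_cell):
    """Sum severity per cell; `incidents` is an iterable of (lat, lng, severity) tuples."""
    totals = defaultdict(float)
    for lat, lng, severity in incidents:
        totals[to_cell(lat, lng)] += severity
    return dict(totals)
```

With `h3` installed, `latlng_to_cell(37.7749, -122.4194)` returns a resolution-9 cell ID string of the same form as `89283082873ffff`.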

The website https://clupasq.github.io/h3-viewer/ is a fun way to see what your location’s H3 index is!

Temporal Representation

Time is just as important as location when modeling walking risk. However, naïvely encoding hour and day as integers introduces discontinuities: 23:59 and 00:00 are numerically far apart, even though they’re only a minute apart in reality.

To address this, I encoded time of day and day of week using sine and cosine transformations, which represent cyclical values as points on a unit circle. This allows the model to learn that late-night and early-morning hours are temporally adjacent, and that days of the week wrap naturally from Saturday back to Sunday.
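A minimal sketch of the cyclical encoding in plain Python: each periodic value becomes an angle, and its sine and cosine place it on the unit circle, so 23:59 and 00:00 land next to each other while noon sits on the opposite side.

```python
import math


def cyclical(value, period):
    """Map a periodic value onto the unit circle and return (sin, cos)."""
    angle = 2 * math.pi * (value % period) / period
    return math.sin(angle), math.cos(angle)


def time_features(hour, day_of_week):
    """Encode hour in [0, 24) and day_of_week in 0..6 as four cyclical features."""
    hour_sin, hour_cos = cyclical(hour, 24)
    dow_sin, dow_cos = cyclical(day_of_week, 7)
    return {"hour_sin": hour_sin, "hour_cos": hour_cos, "dow_sin": dow_sin, "dow_cos": dow_cos}
```

Both a sine and a cosine are needed: sine alone would map, say, 3:00 and 9:00 to the same value.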

In addition, I aggregated incidents into 3-hour time windows. Shorter windows were too sparse to provide reliable signals, while larger windows obscured meaningful differences (for example, early evening versus late night). Three-hour buckets struck a balance between granularity and stability, resulting in intuitive periods such as early morning, afternoon, and late evening.
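Bucketing is integer division on the hour. This sketch assumes timestamps are already in local (Pacific) time and uses Python’s `datetime.weekday()` convention (Monday = 0), which may differ from the app’s own:

```python
from datetime import datetime

BUCKET_HOURS = 3  # eight buckets per day


def time_bucket(ts):
    """Return (day_of_week, bucket) using datetime.weekday() (Monday = 0), buckets 0..7."""
    return ts.weekday(), ts.hour // BUCKET_HOURS


def bucket_label(bucket):
    """Human-readable window, e.g. bucket 6 -> '18:00-21:00'."""
    start = bucket * BUCKET_HOURS
    return f"{start:02d}:00-{start + BUCKET_HOURS:02d}:00"


if __name__ == "__main__":
    # 7:30 pm on Wednesday, December 10, 2025
    print(time_bucket(datetime(2025, 12, 10, 19, 30)), bucket_label(6))  # (2, 6) 18:00-21:00
```

That incident lands in the same 6pm-9pm window used in the Tweedie example later on.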

Final Feature Representation

After preprocessing, each data point consisted of:

- An H3 index representing location

- Cyclically encoded hour and day features

- An aggregated severity signal derived from historical incidents

The model was then trained to predict the expected risk for a given H3 cell at a given time of day and day of week. In practice, this means that when a user opens the app and provides a location and time, the system has enough context to estimate how walking risk varies across nearby blocks.
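One detail worth making explicit is that (cell, time) combinations with no incidents still need a row with target 0; otherwise the zero-heavy shape discussed below never reaches the model. A hypothetical sketch, assuming incidents have already been reduced to `(cell, day_of_week, bucket, severity)` tuples and that the target is total severity summed over the history:

```python
import math
from collections import defaultdict
from itertools import product


def build_rows(events, cells, n_days=7, n_buckets=8):
    """Turn (cell, day_of_week, bucket, severity) events into one row per combination.

    Combinations with no incidents are kept with target 0.0, which is what gives the
    target its zero-heavy shape. Assumption: the target is severity summed over all history.
    """
    totals = defaultdict(float)
    for cell, dow, bucket, severity in events:
        totals[(cell, dow, bucket)] += severity

    width = 24 / n_buckets  # hours per bucket
    rows = []
    for cell, dow, bucket in product(cells, range(n_days), range(n_buckets)):
        hour_angle = 2 * math.pi * (bucket + 0.5) * width / 24  # bucket midpoint
        dow_angle = 2 * math.pi * dow / n_days
        rows.append({
            "h3": cell,
            "hour_sin": math.sin(hour_angle),
            "hour_cos": math.cos(hour_angle),
            "dow_sin": math.sin(dow_angle),
            "dow_cos": math.cos(dow_angle),
            "target": totals.get((cell, dow, bucket), 0.0),
        })
    return rows
```

The `h3` string would still need to be encoded (for example, as a categorical feature) before training.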

Training the Model Using XGBoost

Why XGBoost?

With the geospatial and temporal features ready, I knew I needed a model that could capture non-linear patterns in the dataset while keeping latency low enough to run inference on multiple segments of a route. XGBoost was a natural fit for a few reasons:

- Tree-based models are naturally strong at modeling heterogeneous data: categorical spatial indices, cyclical time features, and sparse inputs can coexist without heavy feature scaling or normalization.

- Feature effects are more interpretable than in deep neural networks, which tend to introduce unnecessary opacity for tabular data.

- Flexibility in objectives and regularization made it possible to model risk in a way that aligns with the structure of the problem.

While I did consider alternatives such as linear models, random forests, and neural networks, they were unsatisfactory because of an inability to capture nuance in the data, high latency at inference time, or over-complication for tabular data. XGBoost struck the best balance between performance and practicality.

Modeling Expected Risk

Before we move on, it’s important to clarify that modeling expected risk isn’t a Gaussian problem. When modeling incident rates in the city, I noticed that, per [H3, time] cell:

- many cells have incident count = 0 and/or total risk = 0

- many cells have just 1–2 incidents

- a handful of cells have many incidents (> 1000)

- extreme events occur, but rarely

These are signs that the target is neither symmetric nor clustered around a fixed mean, which immediately rules out common assumptions like normally distributed errors.

This is where Tweedie regression becomes useful.
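A few summary statistics are enough to confirm this shape before choosing a loss. A small standard-library sketch:

```python
from statistics import mean, median


def describe_target(values):
    """Quick checks for a zero-heavy, right-skewed target."""
    n = len(values)
    return {
        "zero_fraction": sum(v == 0 for v in values) / n,
        "mean": mean(values),
        "median": median(values),
        "max": max(values),
    }
```

On a toy target of ninety zeros, eight ones, one 50, and one 2000, this reports a zero fraction of 0.9, a median of 0, and a mean of 20.58: the mean sits far above the median because a handful of values dominate.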

What’s Tweedie Regression?

Put simply, Tweedie regression says: “Your value is the sum of random events, where the number of events is random and each event has a positive random size.” This fits the crime-incident setting perfectly.

With a variance power between 1 and 2, the Tweedie distribution is a compound Poisson-Gamma distribution: a Poisson process models the number of incidents, and a Gamma distribution models the size (risk score) of each incident. For example:

- Poisson process: in the 6pm-9pm window on December 10th, 2025, how many incidents occurred in H3 index 89283082873ffff?

- Gamma distribution: how severe was each incident that occurred in H3 index 89283082873ffff between 6pm and 9pm on December 10th, 2025?
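To make the compound Poisson-Gamma idea concrete, the simulation below draws a Poisson number of incidents per window and a Gamma-distributed severity for each one. The rate, shape, and scale are made-up values, not fitted ones, and since the standard library has no Poisson sampler, the sketch uses Knuth’s multiplication method. Most windows come out exactly zero, and the long-run mean equals rate × shape × scale, which is the E[#incidents] × E[severity] decomposition used in the next section.

```python
import math
import random


def poisson(lam, rng):
    """Knuth's multiplication method; adequate for the small rates used here."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def simulate_window(lam, shape, scale, rng):
    """Total risk in one (cell, time window): N ~ Poisson(lam) incidents,
    each with an independent Gamma(shape, scale) severity."""
    n = poisson(lam, rng)
    return sum(rng.gammavariate(shape, scale) for _ in range(n))
```

With a rate of 0.3, about 74% of simulated windows (e^-0.3) contain no incidents at all, yet the occasional multi-incident window pulls the mean up to 0.3 × 2.0 × 1.5 = 0.9 for shape 2.0 and scale 1.5.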

Why Does This Matter?

A concrete example from the data illustrates why this framing is important.

In the Presidio, there was a single, rare high-severity incident that scored close to 9/10. In contrast, a block near 300 Hyde Street in the Tenderloin has thousands of incidents over time, but with a lower average severity. Tweedie breaks it down as:

Expected risk = E[#incidents] × E[severity]

- Presidio: E[#] ≈ 0, E[severity] = high → expected risk ≈ 0

- Tenderloin: E[#] = high, E[severity] = medium → expected risk = large

Therefore, if high-risk events start happening more often in the Presidio, the model will adjust the expected risk accordingly and raise its output scores. Tweedie handles the target’s zero-heavy, right-skewed distribution, and the input features we discussed earlier simply explain variation in that target.
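In XGBoost, this corresponds to the `reg:tweedie` objective, with `tweedie_variance_power` (strictly between 1 and 2) controlling where the target sits between Poisson-like and Gamma-like behavior. The sketch below uses the scikit-learn style `XGBRegressor`; the variance power of 1.3 and the tree hyperparameters are placeholders, not the values used for StreetSense.

```python
def tweedie_params(variance_power=1.3):
    """Placeholder XGBoost settings; only the objective and variance power reflect the Tweedie setup."""
    if not 1.0 < variance_power < 2.0:
        raise ValueError("compound Poisson-Gamma requires 1 < variance_power < 2")
    return {
        "objective": "reg:tweedie",
        "tweedie_variance_power": variance_power,
        "n_estimators": 500,       # placeholder
        "max_depth": 6,            # placeholder
        "learning_rate": 0.05,     # placeholder
        "subsample": 0.8,          # placeholder
        "colsample_bytree": 0.8,   # placeholder
    }


def train(X, y, variance_power=1.3):
    """Fit an XGBRegressor on features X and non-negative targets y (needs `xgboost`)."""
    from xgboost import XGBRegressor  # lazy import keeps tweedie_params usable without xgboost

    model = XGBRegressor(**tweedie_params(variance_power))
    model.fit(X, y)
    return model
```

With `reg:tweedie`, `model.predict` returns values on the expected-risk scale (the objective uses a log link internally), so predictions are directly comparable with the historical totals.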

Framing the Outcome

The result is a model that predicts expected risk, not conditional severity and not binary safety labels. This distinction matters: it avoids overreacting to rare but extreme events, while still reflecting sustained patterns that emerge over time.

Final Steps + Deployments

To bring the model to life, I used the Google Maps API to build a website that integrates the maps, routes, and path UI, on which I can overlay colors based on the risk scores. I color-coded the segments using percentiles of the score distribution in my data: score ≤ P50 = green (safe), score ≤ P75 = yellow (moderately safe), score ≤ P90 = orange (moderately risky), else red (risky). I also added logic to re-route the user through a safer route if the detour isn’t more than 15% of the original duration. This threshold can be tweaked, but I left it as is for now, since with San Francisco’s hills a 15% detour could give you quite a workout.

I also deployed the backend on Render and the frontend on Vercel.
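The coloring and re-routing rules are simple enough to sketch directly. This version assumes each route has already been reduced to a `(duration_seconds, risk)` pair (for example, by summing the predicted risk of its segments, which is an assumption), and it takes the percentile cut points from the historical score distribution via `statistics.quantiles`:

```python
from statistics import quantiles


def color_thresholds(historical_scores):
    """P50/P75/P90 cut points of the historical risk-score distribution."""
    cuts = quantiles(historical_scores, n=100, method="inclusive")  # cuts[k - 1] is the k-th percentile
    return cuts[49], cuts[74], cuts[89]


def segment_color(score, thresholds):
    """Map a segment's score to the four-color scale."""
    p50, p75, p90 = thresholds
    if score <= p50:
        return "green"   # safe
    if score <= p75:
        return "yellow"  # moderately safe
    if score <= p90:
        return "orange"  # moderately risky
    return "red"         # risky


def pick_route(fastest, alternatives, max_detour=0.15):
    """Routes are (duration_seconds, risk) pairs. Return the lowest-risk alternative that is
    safer than the fastest route and at most `max_detour` slower; otherwise the fastest."""
    limit = fastest[0] * (1 + max_detour)
    candidates = [r for r in alternatives if r[0] <= limit and r[1] < fastest[1]]
    return min(candidates, key=lambda r: r[1], default=fastest)
```

A detour of exactly 15% still qualifies, and if no alternative is both safer and within the limit, the fastest route is kept.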

Putting StreetSense To Use!

And now, back to the first example we looked at: the trip from Chinatown to Market & Van Ness, but this time with the new model + application we’ve built!

Here’s what the walk looks like at 9am on a Tuesday versus 11pm on a Saturday:

In the first image, the green segments in Chinatown have lower incident counts and severity than the red segments, and the data backs this up. The cool part about the second image is that it automatically re-routes the user through a route that is safer at 11pm on a Saturday night. This is the kind of contextual decision-making I originally wanted, and the motivation behind building StreetSense.

Final Thoughts and Potential Improvements

While the current system captures spatial and temporal patterns in historical incidents, there are clear areas for improvement:

- incorporating real-time signals

- using additional ground-truth data to validate and train

(a) if an incident was given a 4/10 risk score for theft and we can find out through the San Francisco database that an arrest was made, we could bump it up to a 5/10

- making the H3 index sensitive to neighboring cells: Outer Richmond ~ Central Richmond, so the model should infer proximity, and contextual information should be partially shared

- expanding spatial features beyond the H3 ID (neighbor aggregation, distance to hotspots, land-use features)

- deeper exploration of different methods for handling incident data + evaluations

(a) experiment with different XGBoost objective functions, such as Pseudo-Huber loss

(b) leverage hyperparameter optimization frameworks and evaluate different combinations of values

(c) experiment with neural networks

- expanding beyond a single city

All of these would make the model more robust.

Like any model built on historical data, StreetSense reflects past patterns rather than predicting individual outcomes, and it should be used as a tool for context rather than certainty. Ultimately, the goal is not to label places as safe or unsafe, but to help people make more informed, situationally aware choices as they move through a city.

Try StreetSense: https://san-francisco-safety-index.vercel.app/

Data Sources & Licensing

This project uses publicly available data from the San Francisco Open Data Portal:

- San Francisco Police Department Incident Reports

– Source: San Francisco Open Data Portal (https://data.sfgov.org)

– License: Open Data Commons Public Domain Dedication and License (PDDL)

All datasets used are publicly available and permitted for reuse, modification, and commercial use under their respective open data licenses.

Acknowledgements & References

I’d like to thank the San Francisco Open Data team for maintaining high-quality public datasets that make projects like this possible.

Additional references that informed my understanding of the methods and concepts used in this work include: