What a gift to society that is. If not for Google Trends, how would we ever have known that more Disney movies released in the 2000s led to fewer divorces in the UK? Or that drinking Coca-Cola is an unknown remedy for cat scratches?

Wait, am I getting confused by correlation vs causation again?

If you prefer watching over reading, you can do so right here:

Google Trends is one of the most widely used tools for analysing human behaviour at scale. Journalists use it. Data scientists use it. Entire papers are built on it. But there’s a fundamental property of Google Trends data that makes it very easy to misuse, especially if you’re working with time series or trying to build models, and most people never realise they’re doing it.

All charts and screenshots are created by the author unless stated otherwise.

The Problem with Google Trends Data

Google doesn’t actually publish figures on its search volume. That information prints dollars for them and there’s no way they’d open it up for other people to monetise. What they do give us is a way to see a time series, to understand changes in people’s searches for a particular term, and the way they do that is by giving us a normalised set of data.

This doesn’t sound like a problem until you try to do some machine learning with it. Because when it comes to getting a machine to learn anything, we need to give it a lot of data.

My initial thought was to grab a window of five years but I immediately had a problem: the larger the time window, the less granular the data. I couldn’t get daily data for five years and while I then thought “just take the maximum time period you can get daily data for and move that window”, that was a problem too. Because it was here that I discovered the true terror of normalisation:

Whatever time period I use or whatever single search term I use, the data point with the highest number of searches is immediately set to 100. That means the meaning of 100 changes with every window I use.

This entire post exists for this reason.

Google Trends Basics

Now, I don’t know if you’ve used Google Trends before but if you haven’t, I’m going to talk you through it so we can get to the meat of the problem.

So I’m going to search the word “motivation”. It defaults to the UK, because that’s where I’m from, and to the past day, and we have a lovely graph which shows how often people were searching the word “motivation” in the last 24 hours.

I like this because you can see really clearly that people are mostly searching for motivation during the working day, no one is searching it when most of the country is asleep and there’s definitely a couple of kids needing some encouragement for their homework. I don’t have an explanation for the late night searches but I would kind of guess these are people not ready to go back to work tomorrow.

Now this is lovely but while eight-minute increments over 24 hours do give us a nice 180 data points to use, most of them are actually zero and I don’t know if the past 24 hours have been incredibly demotivating compared to the rest of the year or if today represents the year’s highest GDP contribution, so I’m going to increase the window a little bit.

The moment we go to a week, the first thing you notice is that the data is a lot less granular. We have a week of data but now it’s only hourly and I still have the same core problem of not knowing how representative this week is.

I can keep zooming out. 30 days, 90 days. At each point we lose granularity and don’t have anywhere near as many data points as we did for 24 hours. If I’m going to build an actual model, this isn’t going to cut it. I need to go big.

And selecting five years is where we encounter the problem that motivated this entire video (excuse the pun, that was unintentional): I can’t get daily data. And also, why is today not at 100 anymore?

Herein lies the real problem with Google Trends data

As I mentioned earlier, Google Trends data is normalised. This means that whatever time period I use or whatever single search term I use, the data point with the highest number of searches is immediately set to 100. All the other points are scaled down accordingly. If the 1st of April had half the searches of the maximum, then the 1st of April is going to have a Google Trends score of 50.
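To make that concrete, here’s a minimal sketch in Python. The raw counts are made up (Google doesn’t publish them) and `normalise` is a hypothetical helper of my own, not anything Google provides. It also simplifies things by scaling raw counts rather than share of searches, but the effect on the meaning of 100 is the same.

```python
def normalise(counts):
    """Scale a window so its peak is 100, rounded to whole numbers."""
    peak = max(counts)
    if peak == 0:
        return [0 for _ in counts]
    return [round(100 * c / peak) for c in counts]


# Hypothetical raw daily counts, invented purely for illustration.
raw = [400, 1000, 500, 2000]

first_three = normalise(raw[:3])  # window whose peak is 1000
all_four = normalise(raw)         # wider window whose peak is 2000

print(first_three)  # [40, 100, 50]
print(all_four)     # [20, 50, 25, 100]
```

The second day scores 100 in the short window and 50 in the longer one, even though the underlying count never changed.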

So let’s look at an example here just to illustrate the point. Let’s take the months of May and June 2025. Each is 30 or 31 days long, so we get daily data here; we actually lose it beyond 90 days. If I look at May you can see we’re scaled so we hit 100 on the 13th and in June we hit it on the 10th. So does that mean motivation was searched just as often on the 10th of June as it was on the 13th of May?

If I zoom out now so that I have May and June on the same graph, you can immediately see that that’s not the case. When both months are included we see that the searches for motivation had a Google Trends score of 81 on the 10th of June, meaning as a proportion of searches in the UK, it was 81% of the proportion of searches on the 13th of May. If we didn’t zoom out, we wouldn’t have known that.

Now all is not lost. We did get a good bit of information from this experiment: we can see the relative difference between two data points if they’re both included in the same graph. So if we did load May and June separately, knowing the 10th of June is 81% of the 13th of May means we can scale June down accordingly and the data will be comparable.

So that’s what I decided I’d do. I’d fetch my Google Trends data with a one-day overlap on each window, so 1st of Jan to 31st of March, then 31st of March to 30th of June. Then I could use March 31st in both data sets to scale the second set to be comparable to the first.
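Here’s a rough sketch of that single-day overlap idea. The scores are invented and `rescale_to_previous` is a hypothetical helper name, so treat this as an illustration of the arithmetic rather than the exact code behind the charts.

```python
def rescale_to_previous(prev_window, next_window, overlap_day):
    """Rescale next_window so overlap_day matches its value in prev_window."""
    if next_window[overlap_day] == 0:
        raise ValueError("overlap value is zero; cannot derive a scaling factor")
    factor = prev_window[overlap_day] / next_window[overlap_day]
    return {day: value * factor for day, value in next_window.items()}


# Hypothetical scores keyed by ISO date; 31 March appears in both windows.
first = {"2025-03-30": 80, "2025-03-31": 50}
second = {"2025-03-31": 100, "2025-04-01": 60}

scaled_second = rescale_to_previous(first, second, "2025-03-31")
print(scaled_second)  # {'2025-03-31': 50.0, '2025-04-01': 30.0}
```

After rescaling, the shared day agrees across both windows, so every other day in the second window is expressed on the first window’s scale.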

But while this is close to something we can use, there’s one more problem I need to make you aware of.

Google Trends: Another Layer of Randomness

So when it comes to Google Trends data, Google isn’t actually tracking every single search. That would be a computational nightmare. Instead, Google makes use of sampling techniques to build a representation of search volumes.

This means that while the sample is likely very well-built, it is Google after all, each day will have some natural random variation. If by chance March 31st was a day where Google’s sample happened to be unusually high or low compared to the real world, our overlap method would introduce an error into our entire data set.

On top of this, we also have to consider rounding. Google Trends rounds everything to the nearest whole number. There’s no 50.5, it’s 50 or it’s 51. Now this seems like a small detail but it can actually become a big problem. Let me show you why.

On the 4th of October 2021, there was a massive spike in searches for Facebook. This massive spike gets scaled to 100 and as a result everything else in that period is much closer to zero. When you’re rounding to the nearest whole number that tiny error of 0.5 suddenly becomes a huge proportional error when your number is only 1 or 2. This means that our solution has to be robust enough to handle noise, not just scaling.
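A quick bit of arithmetic shows how lopsided this gets. The helper below is a hypothetical one of mine; it just assumes the true value can sit up to 0.5 away from the whole number Google Trends reports.

```python
def worst_case_relative_error(score):
    """Largest rounding error (0.5) as a fraction of the reported score."""
    if score <= 0:
        raise ValueError("score must be positive")
    return 0.5 / score


for score in (1, 2, 50, 100):
    print(score, f"{worst_case_relative_error(score):.1%}")
```

A reported score of 1 could be off by as much as half its value, while a score of 100 is off by at most half a percent.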

So how do we solve this? Well we know that on average the samples will be representative, so let’s just take a bigger sample. If we use a larger window to get our overlap, the random variation and rounding errors have less of an impact.

So here’s the final plan. I know I can get daily data for up to 90 days. I’m going to load a rolling window of 90-day periods but I’ll make sure each window overlaps by a full month with the next. That way, our overlap isn’t just one potentially noisy day but a stable month-long anchor that we can use to scale our data more accurately.
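As a sketch of how that plan could look in code: the function names, the 30-day overlap and the choice of comparing the means over the overlap are all my assumptions for illustration, and the data fetching itself is left out.

```python
from datetime import date, timedelta


def overlapping_windows(start, end, window_days=90, overlap_days=30):
    """Yield inclusive (start, end) date pairs sharing overlap_days days."""
    if overlap_days >= window_days:
        raise ValueError("overlap must be shorter than the window")
    step = timedelta(days=window_days - overlap_days)
    window_start = start
    while True:
        window_end = min(window_start + timedelta(days=window_days - 1), end)
        yield window_start, window_end
        if window_end >= end:
            break
        window_start += step


def overlap_factor(prev_window, next_window):
    """Ratio of mean scores over the dates present in both windows."""
    shared = [day for day in next_window if day in prev_window]
    if not shared:
        raise ValueError("windows do not overlap")
    prev_mean = sum(prev_window[day] for day in shared) / len(shared)
    next_mean = sum(next_window[day] for day in shared) / len(shared)
    if next_mean == 0:
        raise ValueError("overlap is all zeros; cannot derive a factor")
    return prev_mean / next_mean


windows = list(overlapping_windows(date(2025, 1, 1), date(2025, 6, 30)))
for window_start, window_end in windows:
    print(window_start, "to", window_end)
```

Multiplying every value in the later window by `overlap_factor(prev, next)` puts it on the earlier window’s scale, and because the factor comes from a month of points rather than one, a single noisy or heavily rounded day has much less pull on it.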

So it sounds like we’ve got a plan. I’ve got some concerns, mainly that by having lots of batches there’s going to be compounding errors and it could result in big numbers absolutely blowing up. But in order to see how this shakes out with real data we have to go and do it. So here’s one I made earlier.
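To put a rough number on that worry, here’s a small illustration. It assumes, purely for the sake of argument, that every stitch between windows carries the same small error in the same direction, which is a worst case rather than what I’d expect from random noise.

```python
def compounded_error(per_stitch_error, stitches):
    """Overall scale drift if every stitch is off by the same fraction."""
    return (1 + per_stitch_error) ** stitches


# Hypothetical numbers: a 2% error per stitch over 30 stitches.
drift = compounded_error(0.02, 30)
print(f"{drift:.2f}x")
```

Under those assumptions the final window ends up roughly 1.8 times too large, which is why it’s worth checking the chained series against something independent.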

Writing Code to Figure Out Google Trends

After writing up everything we’ve discussed in code form and, after having some fun getting temporarily banned from Google Trends for pulling too much data, I’ve put together some graphs. My immediate reaction when I saw this was: “Oh no, it blew up”.

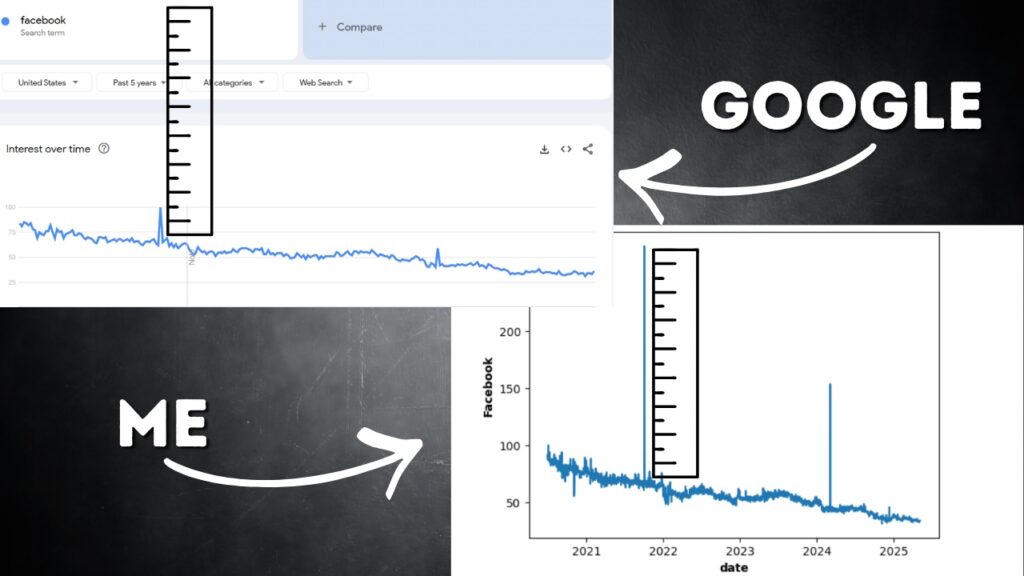

The graph below shows my chained-together five years of search volumes for Facebook. You’ll see a pretty steady downward trend but two spikes stand out. The first of these was the massive spike on 4th October 2021 that we mentioned earlier.

My first thought was to verify the spikes. I, unironically, googled it and found out about widespread Meta outages that day. I pulled data for Instagram and WhatsApp over the same period and saw similar spikes. So I knew the spike was real but I still had a question: was it too big?

When I put my time series side-by-side with Google Trends’ own graph, my heart sank. My spikes were huge in comparison. I started thinking about how to handle this. Should I cap the maximum spike value? That felt arbitrary and would lose information about the relative sizes of spikes. Should I apply an arbitrary scaling factor? Again, it felt like a guess.

That was until I had a bolt of inspiration. Remember, Google Trends is giving us weekly data for this period, that’s the whole reason we’re doing this. What if I averaged my data for that week to see how it compared to Google’s weekly value?

This is where I breathed a huge sigh of relief. That week was the biggest spike on Google Trends so it was set to 100. When I averaged my data for the same week, I got 102.8. Incredibly close to Google Trends. We also finish in about the same place. This means the compounding errors from my scaling method haven’t blown up my data. I have something that looks and behaves just like the Google Trends data!
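The check itself is simple enough to sketch. The daily values below are invented and `weekly_means` is a hypothetical helper; it also assumes the daily series starts on the same day as Google’s weekly buckets, which you’d need to line up yourself with real data.

```python
from statistics import mean


def weekly_means(daily_values):
    """Average consecutive 7-day blocks, dropping an incomplete final block."""
    return [
        mean(daily_values[i:i + 7])
        for i in range(0, len(daily_values) - 6, 7)
    ]


# Hypothetical chained daily scores: a quiet week, then a week with one spike.
daily = [10] * 7 + [50, 50, 50, 50, 50, 50, 400]

print(weekly_means(daily))  # [10, 100]
```

A single day at 400 looks alarming on a daily chart, but averaged over its week it lands at 100, which is the kind of figure a weekly series would report.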

So now we have a robust methodology for creating a clean, comparable daily time series for any search term. Which is great. But what if we actually want to do something useful with it, like comparing search terms around the world for example?

Because while Google Trends allows you to compare multiple search terms it doesn’t allow direct comparison of multiple countries. So I can grab a dataset of motivation for each country using the method we’ve discussed today, but how do I make them comparable? Facebook is part of the solution.

But this solution is one for a later blog post, one in which we’re going to build a “basket of goods” to compare countries and see exactly how Facebook fits into all of this.

So today we started with the question of whether we can model national motivation and in trying to do so immediately hit a wall. Because Google Trends daily data is misleading. Not due to an error, but by its very design. We’ve found a way to tackle that now, but in the life of a data scientist, there are always more problems lurking around the corner.