Introduction

Suppose you’re modeling customer frustration from wait times. Calls arrive randomly, so the wait time X follows an Exponential distribution: most waits are short, a few are painfully long.

Now, I’d argue that frustration isn’t linear: a 10-minute wait feels more than twice as bad as a 5-minute one. So you decide to model “frustration units” as \( Y = X^2 \).

Simple, right? Just take the pdf of X, replace x with \( \sqrt{y} \), and you’re done.

You plot it. It looks reasonable: peaked near zero, with a long tail.

But what if you actually computed the total area under it (the CDF evaluated at infinity)? You’d expect 1, right?

The result? 2.

A quick NumPy/SciPy snippet to confirm this:

```python
import numpy as np
from scipy.integrate import quad

# CDF of Exponential(1): F(x) = 1 - exp(-x) for x >= 0
def cdf_exp(x):
    return 1 - np.exp(-x)

# Wrong (naive) pdf for Y = X²: just substitute x = sqrt(y)
def wrong_pdf(y):
    return np.exp(-np.sqrt(y))  # This integrates to 2!

# Quick numerical check of the integral
integral, err = quad(wrong_pdf, 0, np.inf)

print(f"Numerical integral ≈ {integral:.3f} (should be 1, but it's 2)")
# prints: Numerical integral ≈ 2.000 (should be 1, but it's 2)
```

Your new distribution assigns a total probability of 2, as if outcomes were, on balance, twice as likely as they should be.

That’s impossible… but it happened because you missed one small adjustment.

This “adjustment” is the Jacobian: a scaling factor that compensates for how the transformation stretches or compresses the axis at different points. Skip it, and your probabilities lie. Include it, and everything adds up perfectly again.

In this post, we’ll build the intuition, derive the math step by step, see it appear naturally in histogram equalization, visualize the stretching/shrinking empirically, and confirm it with simulations.

The Intuition

To understand why the Jacobian adjustment is necessary, let’s use a tangible analogy: think of a probability distribution as a fixed amount of sand (exactly 1 pound) spread along a number line, where the height of the sand pile at each point represents the probability density. The total sand always adds up to 1, representing 100% probability.

Now, when you transform the random variable (say, from X to Y = X²), it’s like grabbing that number line, a flexible rubber sheet, and warping it according to the transformation. You’re not adding or removing sand; you’re just stretching or compressing different parts of the sheet.

In regions where the transformation compresses the sheet (a long stretch of the original line gets squished into a shorter segment on the new Y-axis), the same amount of sand now occupies less horizontal space. To keep the total sand conserved, the pile must get taller: the density increases. For example, near Y = 0 in the squaring transformation, the X interval from 0 to 0.1 gets crammed into the Y interval from 0 to 0.01, which is ten times narrower, so the density shoots up dramatically.

Conversely, in regions where the transformation stretches the sheet (a short segment of the original line gets pulled into a longer one on the Y-axis), the sand spreads out over more space, making the pile shorter and flatter: the density decreases. For large X (say, from 10 to 11), Y stretches from 100 to 121, an interval 21 times wider, so the density thins out there.

The key point: the total sand stays exactly 1 lb, no matter how you warp the sheet. Without accounting for this local stretching and shrinking, your new density would be inconsistent, like claiming you have 2 lb of sand after the warp. The Jacobian is the mathematical factor that automatically adjusts the height everywhere to preserve the total amount.
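To put numbers on the sand analogy, here is a small sketch, assuming X ~ Exponential(1) as in the rest of this post (the helper names `exp_cdf` and `interval_report` are illustrative, not from any library). It computes the sand (probability) in an X interval, which is exactly the sand that lands in the image interval under \( y = x^2 \), and compares the average pile height over each.

```python
import numpy as np

def exp_cdf(x):
    """CDF of Exponential(1)."""
    return 1.0 - np.exp(-x)

def interval_report(a, b):
    """Compare the X interval [a, b] with its image [a², b²] under Y = X²."""
    prob = exp_cdf(b) - exp_cdf(a)   # sand in [a, b]; the same sand lands in [a², b²]
    width_x = b - a
    width_y = b**2 - a**2
    avg_density_x = prob / width_x   # average pile height over the X interval
    avg_density_y = prob / width_y   # average pile height over the Y interval
    return prob, width_y / width_x, avg_density_y / avg_density_x

for a, b in [(0.0, 0.1), (10.0, 11.0)]:
    prob, stretch, density_ratio = interval_report(a, b)
    print(f"[{a:g}, {b:g}] -> [{a**2:g}, {b**2:g}]: P = {prob:.3e}, "
          f"width x{stretch:.2f}, avg density x{density_ratio:.3f}")
```

The interval [0, 0.1] shrinks by a factor of 10, so its average density rises tenfold; [10, 11] widens 21 times, so its average density drops to 1/21. The probability itself is untouched in both cases.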

The Math

Let’s formalize the intuition with the example of \( Y = g(X) = X^2 \), where \( X \) has pdf \( f_X(x) = e^{-x} \) for \( x \geq 0 \) (Exponential with rate 1).

Consider a small interval around \( x \) with width \( \Delta x \).

The probability in that interval is approximately \( f_X(x) \Delta x \).

After transformation, this maps to an interval around \( y = x^2 \) with width

\( \Delta y \approx \left| g'(x) \right| \Delta x = |2x| \Delta x \).

To conserve probability:

$$ f_Y(y) \Delta y \approx f_X(x) \Delta x, $$

so

$$ f_Y(y) \approx f_X(x) \frac{\Delta x}{\Delta y} \approx \frac{f_X(x)}{\left| g'(x) \right|}. $$

In the limit as \( \Delta x \to 0 \), this becomes exact:

$$ f_Y(y) = f_X(x) \left| \frac{dx}{dy} \right|, $$

where \( x = \sqrt{y} \) (the inverse) and

\( \frac{dx}{dy} = \frac{1}{2\sqrt{y}} \).

Plugging in:

$$ f_Y(y) = e^{-\sqrt{y}} \cdot \frac{1}{2\sqrt{y}} \quad \text{for } y > 0. $$
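As a sanity check on this result, a short sketch using SciPy’s `quad` integrates the Jacobian-adjusted pdf numerically. The integral is split at y = 1 so the integrable \( 1/\sqrt{y} \) spike at zero sits on a finite interval. It also compares partial integrals with the closed-form CDF \( F_Y(y) = 1 - e^{-\sqrt{y}} \), which follows from \( P(Y \le y) = P(X \le \sqrt{y}) \).

```python
import numpy as np
from scipy.integrate import quad

def correct_pdf(y):
    """Jacobian-adjusted pdf of Y = X**2 for X ~ Exponential(1), valid for y > 0."""
    return np.exp(-np.sqrt(y)) / (2 * np.sqrt(y))

def correct_cdf(y):
    """P(Y <= y) = P(X <= sqrt(y)) = 1 - exp(-sqrt(y))."""
    return 1 - np.exp(-np.sqrt(y))

# Split at y = 1: the finite piece contains the 1/sqrt(y) singularity at 0
head, _ = quad(correct_pdf, 0, 1)
tail, _ = quad(correct_pdf, 1, np.inf)
total = head + tail
print(f"Total probability ≈ {total:.6f}")

# Integrating the pdf up to a point should reproduce the closed-form CDF
for y in [0.25, 1.0, 4.0, 25.0]:
    area, _ = quad(correct_pdf, 0, y)
    print(f"y = {y:5.2f}: integral = {area:.6f}, CDF = {correct_cdf(y):.6f}")
```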

Without the Jacobian term \( \frac{1}{2\sqrt{y}} \), the naive \( f_Y(y) = e^{-\sqrt{y}} \) integrates to 2.

Let \( u = \sqrt{y} \), so \( y = u^2 \) and \( dy = 2u \, du \):

$$ \int_0^\infty e^{-\sqrt{y}} \, dy $$

$$ = \int_0^\infty e^{-u} \cdot 2u \, du $$

$$ = 2 \int_0^\infty u e^{-u} \, du $$

$$ = 2 \Gamma(2) = 2 \cdot 1 = 2. $$

The Jacobian adjustment ensures $$ \int_0^\infty f_Y(y) \, dy = 1. $$
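Running the same substitution on the adjusted pdf shows where the extra factor of 2 goes: the Jacobian’s \( 2\sqrt{y} = 2u \) in the denominator cancels the \( 2u \) that comes from \( dy = 2u \, du \).

$$ \int_0^\infty e^{-\sqrt{y}} \cdot \frac{1}{2\sqrt{y}} \, dy = \int_0^\infty e^{-u} \cdot \frac{1}{2u} \cdot 2u \, du = \int_0^\infty e^{-u} \, du = \Gamma(1) = 1. $$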

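A note on \( \Gamma \)

\( \Gamma \) is the extension of the factorial to real numbers. For \( z > 0 \) it is defined by \( \Gamma(z) = \int_0^\infty t^{z-1} e^{-t} \, dt \), which is why \( \int_0^\infty u e^{-u} \, du = \Gamma(2) \) above. For integers it reduces to the factorial: $$ \Gamma(n) = (n-1)! \quad \text{for positive integers } n. $$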
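A quick way to check both facts used above, with Python’s standard-library `math.gamma` and SciPy’s `quad`: \( \Gamma(n) \) matches \( (n-1)! \) for small integers, and \( \int_0^\infty u e^{-u} \, du \) evaluates to \( \Gamma(2) = 1 \). The last line illustrates a non-integer argument, \( \Gamma(1/2) = \sqrt{\pi} \).

```python
import math
import numpy as np
from scipy.integrate import quad

# Gamma(n) == (n - 1)! for positive integers n
for n in range(1, 7):
    print(n, math.gamma(n), math.factorial(n - 1))

# Gamma(2) as the integral used in the derivation: ∫ u e^{-u} du over [0, ∞)
gamma_2, _ = quad(lambda u: u * np.exp(-u), 0, np.inf)
print(f"Gamma(2) ≈ {gamma_2:.6f}")

# Gamma also accepts non-integer arguments, e.g. Gamma(1/2) = sqrt(pi)
print(math.gamma(0.5), math.sqrt(math.pi))
```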

This scaling factor \( \left| \frac{dx}{dy} \right| \) is precisely what compensates for the local stretching and shrinking of the axis.

The General Form

Let Y = g(X), where g is a strictly monotonic (increasing or decreasing) differentiable function, and X has pdf \( f_X(x) \).

We want the pdf \( f_Y(y) \) of Y.

Consider a small interval around x with width \( \Delta x \).

The probability in that interval is approximately \( f_X(x) \Delta x \).

After the transformation y = g(x), this interval maps to an interval around y with width

\( \Delta y \approx \left| g'(x) \right| \Delta x \).

Going back to the equation we developed previously:

$$ f_Y(y) = f_X(x) \left| \frac{dx}{dy} \right|, $$

where we use the inverse relation \( x = h(y) = g^{-1}(y) \), and

\( \frac{dx}{dy} = h'(y) = \frac{1}{g'(x)} \).

Thus the general formula is

$$ f_Y(y) = f_X(h(y)) \left| h'(y) \right|. $$
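The general formula translates almost directly into code. Below is a minimal sketch; the helper `transformed_pdf` is hypothetical, and the caller supplies the inverse \( h \) and its derivative \( h' \) analytically. The second example uses a strictly decreasing map, \( Y = e^{-X} \) with \( X \sim \text{Exponential}(1) \), where \( h(y) = -\ln y \) and \( h'(y) = -1/y \) is negative; the absolute value is what keeps the density positive, and the result is the Uniform(0, 1) density.

```python
import numpy as np

def transformed_pdf(f_x, h, h_prime):
    """Hypothetical helper: pdf of Y = g(X) for strictly monotonic, differentiable g.

    f_x     -- pdf of X
    h       -- inverse of g, so x = h(y)
    h_prime -- derivative of h with respect to y
    Returns the function f_Y(y) = f_X(h(y)) * |h'(y)|.
    """
    def f_y(y):
        return f_x(h(y)) * np.abs(h_prime(y))
    return f_y

def exp_pdf(x):
    """Exponential(1) pdf, zero for negative x."""
    return np.where(x >= 0, np.exp(-x), 0.0)

# Increasing map: Y = X**2 on x >= 0, inverse h(y) = sqrt(y), h'(y) = 1 / (2 sqrt(y))
f_square = transformed_pdf(exp_pdf, np.sqrt, lambda y: 0.5 / np.sqrt(y))

# Decreasing map: Y = exp(-X), inverse h(y) = -log(y), h'(y) = -1/y
f_decay = transformed_pdf(exp_pdf, lambda y: -np.log(y), lambda y: -1.0 / y)

ys = np.array([0.1, 0.5, 0.9])
print(f_square(ys))  # same values as exp(-sqrt(y)) / (2 sqrt(y))
print(f_decay(ys))   # ≈ 1 everywhere on (0, 1): the Uniform(0, 1) density
```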

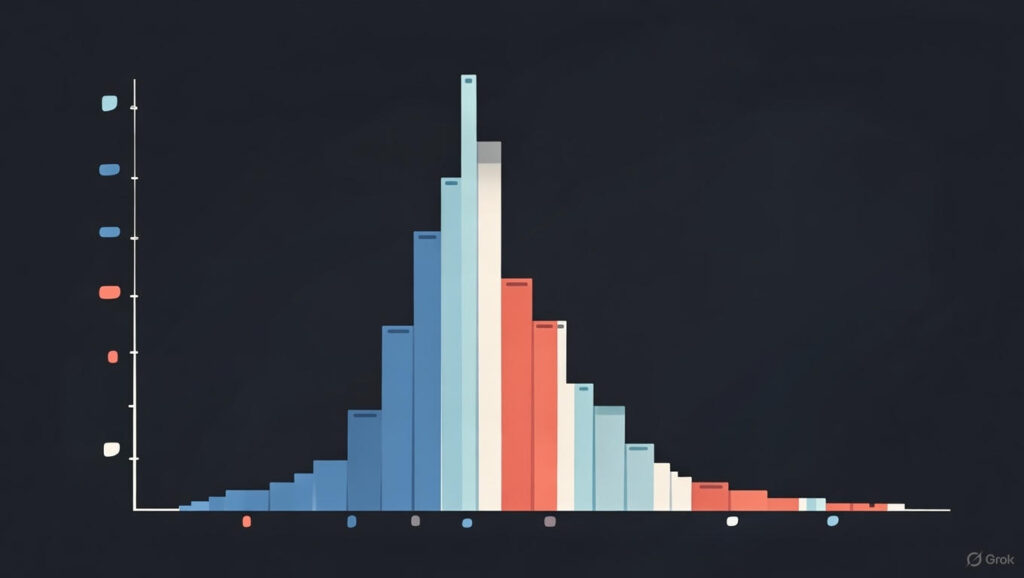

Empirical Proof

Simulating the stretching and shrinking

The best way to “feel” the stretching and shrinking is to zoom in on two regions separately: near zero (where compression happens) and farther out (where stretching dominates).

We’ll generate four plots:

1. Original X histogram, zoomed on small values (X < 1), with equal small intervals of width 0.1, to show the source of compression.

2. Corresponding Y = X² histogram, zoomed near zero, showing how these tiny X intervals get even tinier on Y (shrink).

3. Original X histogram for larger values (X > 1), with equal intervals of width 1, to show the source of stretching.

4. Corresponding Y histogram for large values, showing how these X intervals explode into huge Y intervals (stretch).

Code

```python
import numpy as np
import matplotlib.pyplot as plt

# Generate a large sample for clean visuals
n = 50000
x = np.random.exponential(scale=1, size=n)
y = x**2

fig = plt.figure(figsize=(16, 10))

def plot_histogram(ax, data, bins, density, color, alpha, title, xlabel, ylabel):
    ax.hist(data, bins=bins, density=density, color=color, alpha=alpha)
    ax.set_title(title)
    ax.set_xlabel(xlabel)
    ax.set_ylabel(ylabel)

# Plot 1: X small values (compression source)
ax1 = fig.add_subplot(2, 2, 1)
plot_histogram(ax1, x[x < 1], bins=50, density=True, color='skyblue', alpha=0.7,
               title='Original X (zoomed X < 1)', xlabel='X', ylabel='Density')

# Equal-width intervals of 0.1 on small X
small_x_lines = np.arange(0, 1.01, 0.1)
for line in small_x_lines:
    ax1.axvline(line, color='red', linestyle='--', alpha=0.8)

# Plot 2: Y near zero (showing shrink/compression)
ax2 = fig.add_subplot(2, 2, 2)
plot_histogram(ax2, y[y < 1], bins=100, density=True, color='lightcoral', alpha=0.7,
               title='Y = X² near zero (compression visible)', xlabel='Y', ylabel='Density')

# Mapped small intervals on Y (very narrow!)
small_y_lines = small_x_lines**2
for line in small_y_lines:
    ax2.axvline(line, color='red', linestyle='--', alpha=0.8)

# Plot 3: X larger values (stretching source)
ax3 = fig.add_subplot(2, 2, 3)
plot_histogram(ax3, x[(x > 1) & (x < 12)], bins=50, density=True, color='skyblue', alpha=0.7,
               title='Original X (X > 1)', xlabel='X', ylabel='Density')

# Equal-width intervals of 1 on larger X
large_x_starts = [1, 3, 5, 7, 9, 11]
large_x_lines = large_x_starts + [s + 1 for s in large_x_starts]
for line in large_x_lines:
    if line < 12:
        ax3.axvline(line, color='red', linestyle='--', alpha=0.8)

# Plot 4: Y large values (showing stretch)
ax4 = fig.add_subplot(2, 2, 4)
plot_histogram(ax4, y[(y > 1) & (y < 150)], bins=80, density=True, color='lightgreen', alpha=0.7,
               title='Y = X² large values (stretching visible)', xlabel='Y', ylabel='Density')

# Mapped large intervals on Y (huge gaps!)
large_y_lines = np.array(large_x_lines)**2
for line in large_y_lines:
    if line < 150:
        ax4.axvline(line, color='red', linestyle='--', alpha=0.8)

# Annotations in an italic font style
fig.text(0.5, 0.75, "X-axis shrinking → Density increases\nY-axis higher near zero",
         ha='center', va='center', fontsize=12, color='black',
         fontstyle='italic', bbox=dict(facecolor='#f7f7f7', edgecolor='none', alpha=0.9))
fig.text(0.5, 0.25, "X-axis stretching → Density decreases\nY-axis lower for large values",
         ha='center', va='center', fontsize=12, color='black',
         fontstyle='italic', bbox=dict(facecolor='#f7f7f7', edgecolor='none', alpha=0.9))

fig.set_dpi(250)
plt.tight_layout()
plt.show()
```

Simulating the Jacobian adjustment

To see the Jacobian adjustment in action, let’s simulate data from the Exponential(1) distribution for X, compute Y = X², and plot the empirical histogram of Y against the theoretical pdfs for increasing sample sizes n. As n grows, the histogram should converge to the correct adjusted pdf, not the naive one.

Code

```python
import numpy as np
import matplotlib.pyplot as plt

def correct_pdf(y):
    return np.exp(-np.sqrt(y)) / (2 * np.sqrt(y))

def naive_pdf(y):
    return np.exp(-np.sqrt(y))

# Sample sizes to test
sample_sizes = [100, 1_000, 10_000]

fig, axs = plt.subplots(1, len(sample_sizes), figsize=(15, 5))
y_vals = np.linspace(0.01, 50, 1000)  # Range for plotting theoretical pdfs

for i, n in enumerate(sample_sizes):
    # Sample X ~ Exp(1)
    x = np.random.exponential(scale=1, size=n)
    y = x**2

    # Plot histogram (normalized to density)
    axs[i].hist(y, bins=50, range=(0, 50), density=True, alpha=0.6, color='skyblue',
                label='Empirical Histogram')

    # Plot theoretical pdfs
    axs[i].plot(y_vals, correct_pdf(y_vals), 'g-', label='Correct PDF (with Jacobian)')
    axs[i].plot(y_vals, naive_pdf(y_vals), 'r--', label='Naive PDF (no Jacobian)')

    axs[i].set_title(f'n = {n}')
    axs[i].set_xlabel('Y = X²')
    axs[i].set_ylabel('Density')
    axs[i].legend()
    axs[i].set_ylim(0, 0.5)  # For consistent viewing
    axs[i].grid(True)  # Add grid to each subplot

# Set the figure DPI to 250 for higher resolution
fig.set_dpi(250)
plt.tight_layout()
plt.show()
```

And the result is what we expect.
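To go beyond eyeballing the plots, a short sketch compares the empirical fraction \( P(Y \le t) \) from a large seeded sample with the cumulative area under each candidate pdf. Both closed forms come from the substitution \( u = \sqrt{y} \): \( 1 - e^{-\sqrt{t}} \) for the Jacobian-adjusted pdf and \( 2\,(1 - (1 + \sqrt{t})\,e^{-\sqrt{t}}) \) for the naive one. The seed and sample size are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(42)   # arbitrary seed for reproducibility
n = 200_000
y = rng.exponential(scale=1.0, size=n) ** 2

def correct_area(t):
    """Area under exp(-sqrt(y)) / (2 sqrt(y)) from 0 to t."""
    s = np.sqrt(t)
    return 1 - np.exp(-s)

def naive_area(t):
    """Area under the naive exp(-sqrt(y)) from 0 to t."""
    s = np.sqrt(t)
    return 2 * (1 - (1 + s) * np.exp(-s))

for t in [0.1, 1.0, 4.0, 25.0]:
    empirical = np.mean(y <= t)
    print(f"t = {t:5.1f}: empirical {empirical:.4f} | "
          f"with Jacobian {correct_area(t):.4f} | naive {naive_area(t):.4f}")
```

The naive column eventually exceeds 1, which no cumulative probability can do, while the Jacobian-adjusted column tracks the simulation.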

Histogram Equalization: a real-world application

A classic example where the Jacobian adjustment appears naturally is histogram equalization in image processing.

We treat pixel intensities X (typically in \( [0, 255] \)) as samples from some distribution whose empirical pdf is the normalized image histogram.

The goal is to transform them into new intensities Y so that Y is approximately uniform on \( [0, 255] \); this spreads out the values and improves contrast.

The transformation used is exactly the scaled cumulative distribution function (CDF) of X:

$$ Y = 255 \cdot F_X(X) $$

where \( F_X(x) = \int_{-\infty}^x f_X(t) \, dt \) (the empirical CDF in practice).

Why does this work? It’s a direct application of the Probability Integral Transform (PIT):

If \( U = F_X(X) \) and X is continuous, then \( U \sim \text{Uniform}(0, 1) \).

Scaling by 255 gives \( Y = 255\,U \sim \text{Uniform}(0, 255) \).
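The PIT is easy to check numerically. A minimal sketch, assuming X ~ Exponential(1) so that \( F_X(x) = 1 - e^{-x} \) (the seed is arbitrary): pushing samples through their own CDF should give values whose histogram is flat on [0, 1], with mean near 1/2 and variance near 1/12. Scaling by 255 then spreads them over [0, 255].

```python
import numpy as np

rng = np.random.default_rng(0)        # arbitrary seed
x = rng.exponential(scale=1.0, size=100_000)

u = 1 - np.exp(-x)                     # U = F_X(X), should be Uniform(0, 1)
counts, _ = np.histogram(u, bins=10, range=(0, 1))

print("mean", u.mean(), "(expect 0.5)")
print("var ", u.var(), "(expect 1/12 ≈ 0.0833)")
print("fraction per bin:", counts / len(u))   # each close to 0.1

y = 255 * u                            # scaled to Uniform(0, 255)
print("min/max of Y:", y.min(), y.max())
```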

Now see the Jacobian at work:

Let \( g(x) = L \cdot F_X(x) \) (with \( L = 255 \)).

Its derivative is \( g'(x) = L \cdot f_X(x) \), since the derivative of the CDF is the pdf.

Apply the change-of-variables formula, using \( \left| \frac{dx}{dy} \right| = \frac{1}{|g'(x)|} \) wherever \( f_X(x) > 0 \) (so that g is strictly increasing):

$$ f_Y(y) = \frac{f_X(x)}{|g'(x)|} = \frac{f_X(x)}{L f_X(x)} = \frac{1}{L}, \quad 0 \le y \le L. $$

The \( f_X(x) \) cancels perfectly, leaving a constant (uniform) density.

The Jacobian factor \( 1 / |g'(x)| \) automatically flattens the distribution: where the original density is high, g’ is large, so the axis is stretched and the density thins out; where it is low, the axis is compressed and the density piles up.

In discrete images, rounding makes it approximate, but the principle is the same.
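A minimal NumPy sketch of the discrete version, on a synthetic low-contrast image (the normally distributed pixel values and the seed are assumptions for illustration): build the histogram, take its cumulative sum as the empirical CDF, and use \( \mathrm{round}(255 \cdot F_X) \) as a lookup table. Library routines such as OpenCV’s `cv2.equalizeHist` implement a similar mapping, though their exact normalization may differ.

```python
import numpy as np

def equalize_hist(img):
    """Histogram-equalize an 8-bit grayscale image via its empirical CDF."""
    hist = np.bincount(img.ravel(), minlength=256)   # counts per intensity 0..255
    cdf = np.cumsum(hist) / img.size                 # empirical F_X at each level
    lut = np.round(255 * cdf).astype(np.uint8)       # Y = 255 * F_X(X), rounded
    return lut[img]                                  # apply the lookup table per pixel

# Synthetic low-contrast image: intensities bunched around 100
rng = np.random.default_rng(0)
img = np.clip(rng.normal(100, 15, size=(256, 256)), 0, 255).astype(np.uint8)
eq = equalize_hist(img)

for name, arr in [("original", img), ("equalized", eq)]:
    # For a Uniform(0, 255) result, each quarter of the range should hold ~25% of pixels
    quarters = np.histogram(arr, bins=4, range=(0, 256))[0] / arr.size
    print(f"{name:9s} min={int(arr.min()):3d} max={int(arr.max()):3d} "
          f"quarters={np.round(quarters, 3)}")
```

The original image crowds almost all pixels into one quarter of the range; after equalization each quarter holds roughly a quarter of the pixels, up to the rounding effects mentioned above.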

For a deeper dive into histogram equalization with examples, see my previous post: here.

In Conclusion

The Jacobian adjustment is one of those quiet pieces of mathematics that feels unnecessary, until you skip it and suddenly your probabilities don’t add up to 1 anymore. Whether you’re squaring waiting times, modeling energy from velocity, or flattening image histograms, the transformation changes not just the values but how probability is distributed across them. The factor \( \left| \frac{dx}{dy} \right| \) (or its multivariate cousin, the absolute value of the Jacobian determinant) is the precise compensation that keeps the total probability conserved while accounting for local stretching and compression.

Next time you transform a random variable, remember the sand on the rubber sheet: warp the axis all you want, but the total sand must stay the same. The Jacobian adjustment is the rule that makes it happen.
