Today’s model is Logistic Regression.

If you already know this model, here’s a question for you:

Is Logistic Regression a regressor or a classifier?

Well, this question is exactly like: Is a tomato a fruit or a vegetable?

From a botanist’s viewpoint, a tomato is a fruit, because they look at structure: seeds, flowers, plant biology.

From a cook’s viewpoint, a tomato is a vegetable, because they look at taste, how it’s used in a recipe, whether it goes in a salad or a dessert.

The same object, two valid answers, because the point of view is different.

Logistic Regression is exactly like that.

- In the Statistical / GLM perspective, it’s a regression. And there is no concept of “classification” in this framework anyway. There are gamma regression, logistic regression, Poisson regression…

- In the machine learning perspective, it’s used for classification. So it’s a classifier.

We’ll come back to this later.

For now, one thing is certain:

Logistic Regression is very well suited to cases where the target variable is binary, and usually y is coded as 0 or 1.

But…

What is a classifier for a weight-based model?

So, y can be 0 or 1.

0 or 1, they’re numbers, right?

So we can just consider y as continuous!

Yes, y = a x + b, with y = 0 or 1.

Why not?

Now, you may ask: why this question, now? Why was it not asked before?

Well, for distance-based and tree-based models, a categorical y is truly categorical.

When y is categorical, like red, blue, green, or simply 0 and 1:

- In K-NN, you classify by looking at the neighbors of each class.

- In centroid models, you compare with the centroid of each class.

- In a decision tree, you compute class proportions at each node.

In all these models:

Class labels are not numbers.

They are categories.

The algorithms never treat them as values.

So classification is natural and immediate.

But for weight-based models, things work differently.

In a weight-based model, we always compute something like:

y = a x + b

or, later, a more complex function with coefficients.

This means:

The model works with numbers everywhere.

So here is the key idea:

If the model does regression, then this same model can be used for binary classification.

Yes, we can use linear regression for binary classification!

Since binary labels are 0 and 1, they’re already numeric.

And in this special case, we can apply Ordinary Least Squares (OLS) directly on y = 0 and y = 1.

The model will fit a line, and we can use the same closed-form formula, as we can see below.

We can also run the same gradient descent, and it will work perfectly.

And then, to obtain the final class prediction, we simply choose a threshold.

It’s usually 0.5 (or 50 percent), but depending on how strict you want to be, you can pick another value.

- If the predicted y ≥ 0.5, predict class 1

- Otherwise, predict class 0

This is a classifier.

And because the model produces a numeric output, we can even identify the point where y = 0.5.

This value of x defines the decision frontier.

In the previous example, this happens at x = 9.

At this threshold, we already saw one misclassification.

But a problem appears as soon as we introduce a point with a large value of x.

For example, suppose we add a point with x = 50 and y = 1.

Because linear regression tries to fit a straight line through all the data, this single large value of x pulls the line upward.

The decision frontier shifts from x = 9 to roughly x = 12.

And now, with this new boundary, we end up with two misclassifications.

This illustrates the main issue:

A linear regression used as a classifier is extremely sensitive to extreme values of x. The decision frontier moves dramatically, and the classification becomes unstable.
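The effect described above can be reproduced with a few lines of code. This is only a sketch on a small made-up dataset (not the one from the spreadsheet), and the helper names `fit_ols`, `frontier` and `count_errors` are hypothetical. It fits the line with the closed-form OLS formula, then compares the decision frontier before and after adding the point x = 50, y = 1.

```python
def fit_ols(xs, ys):
    """Closed-form simple linear regression: returns slope a and intercept b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    b = mean_y - a * mean_x
    return a, b


def frontier(a, b, threshold=0.5):
    """Value of x where the fitted line a*x + b crosses the threshold."""
    return (threshold - b) / a


def count_errors(xs, ys, a, b, threshold=0.5):
    """Number of points whose thresholded prediction differs from the label."""
    return sum(int(a * x + b >= threshold) != y for x, y in zip(xs, ys))


# Hypothetical data: small x values are class 0, larger ones are class 1.
xs = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
ys = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]

a, b = fit_ols(xs, ys)
base_frontier = frontier(a, b)
base_errors = count_errors(xs, ys, a, b)

# Add one extreme point that clearly belongs to class 1.
xs_out = xs + [50]
ys_out = ys + [1]
a_out, b_out = fit_ols(xs_out, ys_out)
out_frontier = frontier(a_out, b_out)
out_errors = count_errors(xs_out, ys_out, a_out, b_out)

print("frontier without the extreme point:", round(base_frontier, 2), "errors:", base_errors)
print("frontier with the extreme point:   ", round(out_frontier, 2), "errors:", out_errors)
```

With this made-up data, the frontier moves from x = 5.5 to about x = 6.9, and the point at x = 6 becomes misclassified, even though the added point was perfectly consistent with class 1.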

This is one of the reasons we need a model that doesn’t behave linearly forever. A model that stays between 0 and 1, even when x becomes very large.

And this is exactly what the logistic function will give us.

How Logistic Regression works

We start with ax + b, just like linear regression.

Then we apply one function called the sigmoid, or logistic function.

As we can see in the screenshot below, the value of p is then between 0 and 1, so this is good.

- p(x) is the predicted probability that y = 1

- 1 − p(x) is the predicted probability that y = 0

For classification, we can simply say:

- If p(x) ≥ 0.5, predict class 1

- Otherwise, predict class 0
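As a minimal sketch of the two steps above (linear part, then sigmoid), assuming the standard logistic function p = 1 / (1 + exp(−(ax + b))): the coefficient values and the function names `predict_proba` and `predict_class` are chosen here only for illustration.

```python
import math


def sigmoid(z):
    """Logistic function: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(x, a, b):
    """Predicted probability that y = 1 for input x."""
    return sigmoid(a * x + b)


def predict_class(x, a, b, threshold=0.5):
    """Class 1 if p(x) reaches the threshold, otherwise class 0."""
    return 1 if predict_proba(x, a, b) >= threshold else 0


# Hypothetical coefficients, chosen only for illustration.
a, b = 1.0, -5.0

for x in [0, 5, 10, 50]:
    p = predict_proba(x, a, b)
    print(x, round(p, 4), predict_class(x, a, b))
```

Unlike the straight line, the output never leaves the range from 0 to 1, even for x = 50. With these coefficients, p(x) = 0.5 exactly at x = 5, which is where ax + b = 0.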

From likelihood to log-loss

Now, OLS Linear Regression tries to minimize the MSE (Mean Squared Error).

Logistic regression for a binary target uses the Bernoulli likelihood. For each observation i:

- If yᵢ = 1, the probability of the data point is pᵢ

- If yᵢ = 0, the probability of the data point is 1 − pᵢ

For the whole dataset, the likelihood is the product over all i. In practice, we take the logarithm, which turns the product into a sum.

In the GLM perspective, we try to maximize this log likelihood.

In the machine learning perspective, we define the loss as the negative log likelihood and we minimize it. This gives the usual log-loss.

And it’s equivalent. We will not do the demonstration here.
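Without giving a proof, the equivalence can at least be checked numerically. The sketch below uses made-up labels and predicted probabilities, computes the Bernoulli likelihood as a product, and compares its negative logarithm (divided by the number of observations) with the average log-loss computed as a sum.

```python
import math


def likelihood(ys, ps):
    """Bernoulli likelihood: product of p_i when y_i = 1 and (1 - p_i) when y_i = 0."""
    result = 1.0
    for y, p in zip(ys, ps):
        result *= p if y == 1 else 1.0 - p
    return result


def log_loss(ys, ps):
    """Average negative log likelihood, written as a sum of logs."""
    total = sum(y * math.log(p) + (1 - y) * math.log(1.0 - p) for y, p in zip(ys, ps))
    return -total / len(ys)


# Hypothetical labels and predicted probabilities.
ys = [0, 0, 1, 1]
ps = [0.1, 0.4, 0.7, 0.9]

lik = likelihood(ys, ps)
loss = log_loss(ys, ps)

print("likelihood:", lik)
print("log-loss:", loss)
print("-log(likelihood) / n:", -math.log(lik) / len(ys))
```

Maximizing the likelihood and minimizing the log-loss therefore point to the same parameters: a higher likelihood always means a lower loss.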

Gradient Descent for Logistic Regression

Principle

Just as we did for Linear Regression, we can also use Gradient Descent here. The idea is always the same:

- Start from some initial values of a and b.

- Compute the loss and its gradient (derivatives) with respect to a and b.

- Move a and b a little bit in the direction that reduces the loss.

- Repeat.

Nothing mysterious.

Just the same mechanical process as before.

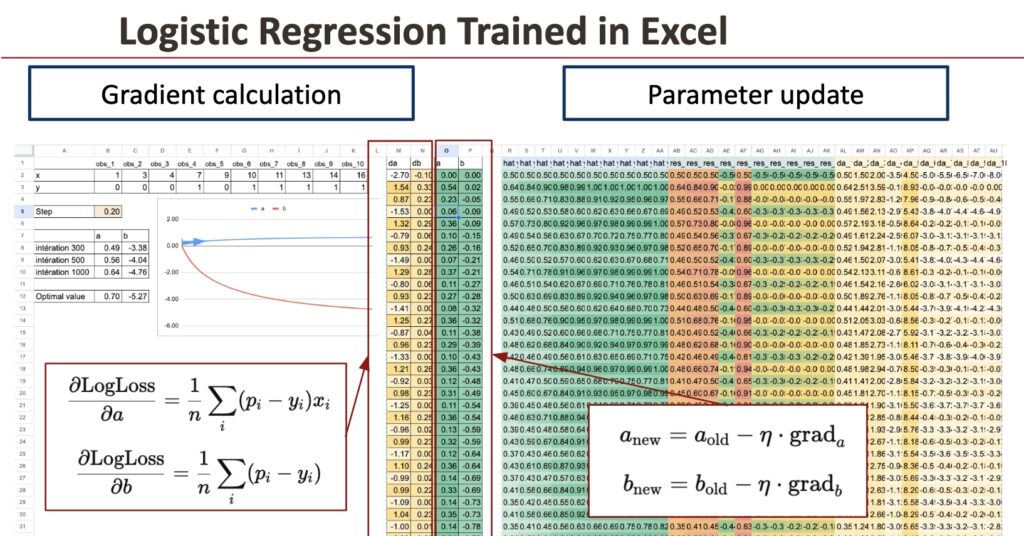

Step 1. Gradient Calculation

For logistic regression, the gradients of the average log-loss follow a very simple structure.

For b, the gradient is simply the average residual pᵢ − yᵢ. For a, it is the average of the residual multiplied by xᵢ.

We will just give the result below, in the form that we can implement in Excel. As you can see, it’s quite simple in the end, even if the log-loss formula can look complex at first glance.

Excel can compute these two quantities with simple SUMPRODUCT formulas.
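For readers who prefer code to spreadsheet formulas, here is a sketch of the same two quantities, assuming the model p = sigmoid(ax + b) and the average log-loss. The data and starting coefficients are made up. The result is compared against a numerical derivative to check that the "average residual" structure is correct.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(xs, ys, a, b):
    """Average log-loss of the model p = sigmoid(a*x + b)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(a * x + b)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(xs)


def gradients(xs, ys, a, b):
    """Gradients of the average log-loss with respect to a and b."""
    n = len(xs)
    residuals = [sigmoid(a * x + b) - y for x, y in zip(xs, ys)]
    grad_a = sum(r * x for r, x in zip(residuals, xs)) / n  # average of residual * x
    grad_b = sum(residuals) / n                             # average residual
    return grad_a, grad_b


# Hypothetical data and starting coefficients.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 1, 0, 1, 1]
a, b = 0.1, -0.2

grad_a, grad_b = gradients(xs, ys, a, b)

# Sanity check: central finite differences of the loss.
eps = 1e-6
num_a = (log_loss(xs, ys, a + eps, b) - log_loss(xs, ys, a - eps, b)) / (2 * eps)
num_b = (log_loss(xs, ys, a, b + eps) - log_loss(xs, ys, a, b - eps)) / (2 * eps)

print("analytic :", grad_a, grad_b)
print("numerical:", num_a, num_b)
```

The sum of residuals times x is the kind of computation that a single SUMPRODUCT over two columns performs in a spreadsheet.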

Step 2. Parameter Replace

Once the gradients are known, we update the parameters.

This update step is repeated at each iteration.

And iteration after iteration, the loss goes down, and the parameters converge to the optimal values.
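The full loop can be sketched as follows. The update rule assumed here is the usual one (each parameter minus the learning rate times its gradient). The data, the learning rate of 0.1 and the number of iterations are arbitrary choices for illustration, not values taken from the spreadsheet.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def log_loss(xs, ys, a, b):
    """Average log-loss of the model p = sigmoid(a*x + b)."""
    total = 0.0
    for x, y in zip(xs, ys):
        p = sigmoid(a * x + b)
        total += y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return -total / len(xs)


def gradient_step(xs, ys, a, b, learning_rate):
    """One iteration: compute both gradients, then move a and b against them."""
    n = len(xs)
    residuals = [sigmoid(a * x + b) - y for x, y in zip(xs, ys)]
    grad_a = sum(r * x for r, x in zip(residuals, xs)) / n
    grad_b = sum(residuals) / n
    return a - learning_rate * grad_a, b - learning_rate * grad_b


# Hypothetical data: the classes overlap, so the optimal coefficients are finite.
xs = [1, 2, 3, 4, 5, 6]
ys = [0, 0, 1, 0, 1, 1]

a, b = 0.0, 0.0          # initial values
learning_rate = 0.1      # arbitrary choice for this sketch
losses = [log_loss(xs, ys, a, b)]

for _ in range(2000):
    a, b = gradient_step(xs, ys, a, b, learning_rate)
    losses.append(log_loss(xs, ys, a, b))

print("a =", round(a, 4), "b =", round(b, 4))
print("first loss:", round(losses[0], 4), "last loss:", round(losses[-1], 4))
```

Each entry of `losses` plays the role of one row of the iteration table: the value should go down from one row to the next as long as the learning rate is small enough.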

We now have the whole picture.

You have seen the model, the loss, the gradients, and the parameter updates.

And with the detailed view of each iteration in Excel, you can actually play with the model: change a value, watch the curve move, and see the loss decrease step by step.

It’s surprisingly satisfying to observe how everything fits together so clearly.

What about multiclass classification?

For distance-based and tree-based models:

No issue at all.

They naturally handle multiple classes because they never interpret the labels as numbers.

But for weight-based models?

Here we hit a problem.

If we write numbers for the class: 1, 2, 3, etc.

Then the model will interpret these numbers as real numeric values.

Which leads to problems:

- the model thinks class 3 is “bigger” than class 1

- the midpoint between class 1 and class 3 is class 2

- distances between classes become meaningful

But none of this is true in classification.

So:

For weight-based models, we cannot just use y = 1, 2, 3 for multiclass classification.

This encoding is incorrect.
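A tiny experiment makes the midpoint problem concrete. In this sketch, the made-up dataset contains only class 1 and class 3, and a plain linear regression is fitted on the numeric labels (the helper `fit_ols` is a hypothetical name for the closed-form OLS formula).

```python
def fit_ols(xs, ys):
    """Closed-form simple linear regression: returns slope a and intercept b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    a = cov_xy / var_x
    return a, mean_y - a * mean_x


# Hypothetical data: class 1 at small x, class 3 at large x, and no class 2 at all.
xs = [1, 2, 3, 7, 8, 9]
ys = [1, 1, 1, 3, 3, 3]

a, b = fit_ols(xs, ys)
prediction_at_middle = a * 5 + b

print("prediction at x = 5:", prediction_at_middle)
```

The fitted line passes through the point of means (x = 5, y = 2), so at x = 5 the model outputs 2: a "class 2" that does not exist anywhere in the data.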

We’ll see later how to fix this.

Conclusion

Starting from a simple binary dataset, we saw how a weight-based model can act as a classifier, why linear regression quickly reaches its limits, and how the logistic function solves these problems by keeping predictions between 0 and 1.

Then, by expressing the model through likelihood and log-loss, we obtained a formulation that is both mathematically sound and easy to implement.

And once everything is placed in Excel, the entire learning process becomes visible: the probabilities, the loss, the gradients, the updates, and finally the convergence of the parameters.

With the detailed iteration table, you can actually see how the model improves step by step.

You can change a value, adjust the learning rate, or add a point, and instantly observe how the curve and the loss react.

This is the real value of doing machine learning in a spreadsheet: nothing is hidden, and every calculation is transparent.

By building logistic regression this way, you not only understand the model, you understand how it is trained.

And this intuition will stay with you as we move to more advanced models later in the Advent Calendar.