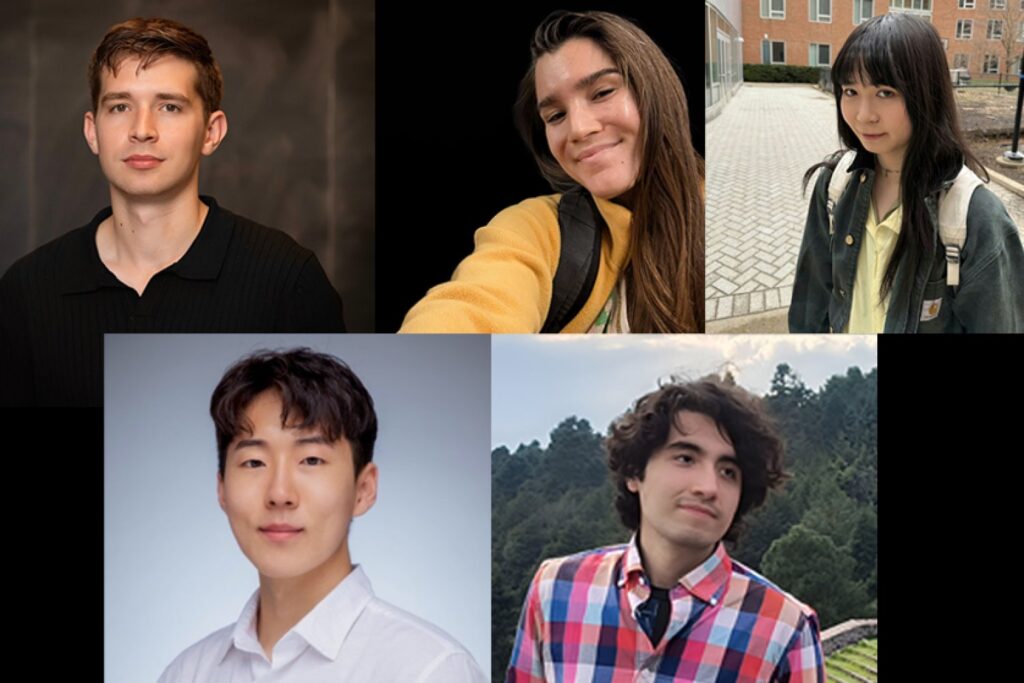

Adoption of new tools and technologies occurs when users largely perceive them as reliable, accessible, and an improvement over the available methods and workflows for the cost. Five PhD students from the inaugural class of the MIT-IBM Watson AI Lab Summer Program are using state-of-the-art resources, alleviating AI pain points, and creating new features and capabilities to promote AI usefulness and deployment, from learning when to trust a model that predicts another’s accuracy to reasoning more effectively over knowledge bases. Together, the efforts of the students and their mentors form a through-line, where practical and technically rigorous research leads to more dependable and valuable models across domains.

Building probes, routers, new attention mechanisms, synthetic datasets, and program-synthesis pipelines, the students’ work spans safety, inference efficiency, multimodal data, and knowledge-grounded reasoning. Their techniques emphasize scaling and integration, with impact always in sight.

Learning to trust, and when

MIT math graduate student Andrey Bryutkin’s research prioritizes the trustworthiness of models. He seeks out internal structures within problems, such as the equations governing a system and conservation laws, to understand how to leverage them to produce more dependable and robust solutions. Armed with this understanding and working with the lab, Bryutkin developed a method to peer into the nature of large language model (LLM) behaviors. Together with the lab’s Veronika Thost of IBM Research and Marzyeh Ghassemi, who is associate professor and the Germeshausen Career Development Professor in the MIT Department of Electrical Engineering and Computer Science (EECS) and a member of the Institute for Medical Engineering and Science and the Laboratory for Information and Decision Systems, Bryutkin explored the “uncertainty of uncertainty” of LLMs.

Classically, tiny feed-forward neural networks two to three layers deep, called probes, are trained alongside LLMs and employed to flag untrustworthy answers from the larger model to developers; however, these classifiers can also produce false negatives and only provide point estimates, which don’t offer much information about when the LLM is failing. Investigating safe/unsafe prompts and question-answer tasks, the MIT-IBM team used prompt-label pairs, as well as hidden states such as activation vectors and last tokens from an LLM, to measure gradient scores, sensitivity to prompts, and out-of-distribution data, in order to determine how reliable the probe was and to learn which areas of the data are difficult to predict. Their method also helps identify potential labeling noise. This is a critical function, as the trustworthiness of AI systems depends entirely on the quality and accuracy of the labeled data they are built upon. More accurate and consistent probes are especially important for domains with critical data in applications like IBM’s Granite Guardian family of models.
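To make the idea of a probe concrete, here is a minimal sketch of a two-layer feed-forward classifier scoring one hidden-state vector. The weights, the three-dimensional hidden state, and the 0.5 threshold are all hypothetical values chosen for illustration; this is not the team’s probe or its training procedure.

```python
import math

def linear(weights, bias, vector):
    """Dense layer: one output per weight row."""
    return [sum(w * x for w, x in zip(row, vector)) + b
            for row, b in zip(weights, bias)]

def relu(vector):
    return [max(0.0, x) for x in vector]

def probe_score(hidden_state, W1, b1, W2, b2):
    """Two-layer probe: hidden state -> probability that the answer is untrustworthy."""
    hidden = relu(linear(W1, b1, hidden_state))
    logit = linear(W2, b2, hidden)[0]
    return 1.0 / (1.0 + math.exp(-logit))

# Hypothetical hand-picked weights; a real probe would learn these from
# prompt-label pairs and the LLM's hidden states.
W1 = [[1.0, -1.0, 0.5], [-0.5, 1.0, 1.0]]
b1 = [0.0, 0.0]
W2 = [[2.0, -2.0]]
b2 = [0.0]

hidden_state = [1.0, 0.0, 0.0]   # stand-in for an LLM activation vector
score = probe_score(hidden_state, W1, b1, W2, b2)
flagged = score > 0.5            # assumed decision threshold
```

The output is a single number, a point estimate, with nothing to indicate how much that number itself can be trusted, which is the limitation the paragraph above describes.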

Another way to ensure trustworthy responses to queries from an LLM is to augment them with external, trusted knowledge bases to eliminate hallucinations. For structured data, such as social media connections, financial transactions, or corporate databases, knowledge graphs (KGs) are natural fits; however, communications between the LLM and KGs often use fixed, multi-agent pipelines that are computationally inefficient and expensive. Addressing this, physics graduate student Jinyeop Song, along with lab researchers Yada Zhu of IBM Research and EECS Associate Professor Julian Shun, created a single-agent, multi-turn, reinforcement learning framework that streamlines this process. Here, the group designed an API server hosting Freebase and Wikidata KGs, which consist of general web-based knowledge data, and an LLM agent that issues targeted retrieval actions to fetch pertinent information from the server. Then, through continuous back-and-forth, the agent appends the gathered data from the KGs to the context and responds to the query. Crucially, the system uses reinforcement learning to train itself to deliver answers that strike a balance between accuracy and completeness. The framework pairs an API server with a single reinforcement learning agent to orchestrate data-grounded reasoning with improved accuracy, transparency, efficiency, and transferability.
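The multi-turn retrieval loop described above can be sketched with a toy in-memory graph. Everything here is an assumption made for illustration: the `TOY_KG` facts, the `kg_server` function standing in for the API server, and the fixed follow-the-relation rule standing in for the agent. In the actual framework the retrieval decisions come from an LLM trained with reinforcement learning, which this sketch does not attempt to reproduce.

```python
# Toy knowledge graph: (head entity, relation) -> list of tail entities.
TOY_KG = {
    ("MIT", "located_in"): ["Cambridge"],
    ("Cambridge", "located_in"): ["Massachusetts"],
}

def kg_server(entity, relation):
    """Stand-in for the KG API server: answer one targeted retrieval action."""
    return TOY_KG.get((entity, relation), [])

def answer(question_entity, relation, max_turns=5):
    """Single agent, multiple turns: retrieve, append to context, repeat."""
    context = []                 # facts gathered so far
    current = question_entity
    for _ in range(max_turns):
        tails = kg_server(current, relation)
        if not tails:            # nothing more to retrieve: stop and answer
            break
        context.append((current, relation, tails[0]))
        current = tails[0]
    return current, context

final_answer, evidence = answer("MIT", "located_in")
```

The returned `evidence` list is the accumulated context, so the chain of facts behind `final_answer` can be inspected directly.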

Spending computation wisely

The timeliness and completeness of a model’s response matter about as much as its accuracy. This is especially true when handling long input texts and those in which elements, like the subject of a story, evolve over time, so EECS graduate student Songlin Yang is re-engineering what models can handle at each step of inference. Focusing on the limitations of transformers, like those in LLMs, the lab’s Rameswar Panda of IBM Research and Yoon Kim, the NBX Professor and associate professor in EECS, joined Yang to develop next-generation language model architectures beyond transformers.

Transformers face two key limitations: high computational complexity in long-sequence modeling due to the softmax attention mechanism, and limited expressivity resulting from the weak inductive bias of RoPE (rotary positional encoding). The first means that as the input length doubles, the computational cost quadruples. As for the second, RoPE allows transformers to understand the sequence order of tokens (i.e., words); however, it does not do a good job of capturing internal state changes over time, like variable values, and is limited to the sequence lengths seen during training.
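The quadrupling follows from softmax attention scoring every token against every other token. A small worked example, assuming full (non-causal) attention and counting only the query-key scores for a single head:

```python
def attention_score_count(seq_len):
    """Number of query-key scores full softmax attention computes for one head."""
    return seq_len * seq_len

short = attention_score_count(1024)
long = attention_score_count(2048)
ratio = long / short   # doubling the length multiplies the work by four
```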

To address this, the MIT-IBM team explored theoretically grounded yet hardware-efficient algorithms. As an alternative to softmax attention, they adopted linear attention, reducing the quadratic complexity that limits the feasible sequence length. They also investigated hybrid architectures that combine softmax and linear attention to strike a better balance between computational efficiency and performance.
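A minimal sketch of why linear attention avoids the quadratic cost: replacing the softmax score with a product of feature maps lets the sums over past tokens be folded into a fixed-size running state. The feature map `elu(x) + 1` is a common choice assumed here for illustration, and the tiny inputs are made up; the article does not specify the team’s formulation.

```python
import math

def phi(x):
    """Positive feature map elu(x) + 1, applied elementwise (an assumed choice)."""
    return [v + 1.0 if v > 0 else math.exp(v) for v in x]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def linear_attention_pairwise(Q, K, V):
    """Causal linear attention over all (t, s) pairs: quadratic in sequence length."""
    out = []
    for t in range(len(Q)):
        q = phi(Q[t])
        weights = [dot(q, phi(K[s])) for s in range(t + 1)]
        total = sum(weights)
        out.append([sum(w * V[s][j] for s, w in enumerate(weights)) / total
                    for j in range(len(V[0]))])
    return out

def linear_attention_recurrent(Q, K, V):
    """Same result from a running state: one fixed-size update per token."""
    d_k, d_v = len(K[0]), len(V[0])
    S = [[0.0] * d_v for _ in range(d_k)]   # running sum of phi(k) v^T
    z = [0.0] * d_k                         # running sum of phi(k)
    out = []
    for q_raw, k_raw, v in zip(Q, K, V):
        q, k = phi(q_raw), phi(k_raw)
        for i in range(d_k):
            z[i] += k[i]
            for j in range(d_v):
                S[i][j] += k[i] * v[j]
        denom = dot(q, z)
        out.append([sum(q[i] * S[i][j] for i in range(d_k)) / denom
                    for j in range(d_v)])
    return out

Q = [[0.1, -0.2], [0.3, 0.4], [-0.5, 0.6]]
K = [[0.2, 0.1], [-0.3, 0.5], [0.7, -0.1]]
V = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
pairwise = linear_attention_pairwise(Q, K, V)
recurrent = linear_attention_recurrent(Q, K, V)
```

Both functions produce the same outputs, but the recurrent form touches each token once, so its cost grows linearly with sequence length.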

To increase expressivity, they replaced RoPE with a dynamic reflective positional encoding based on the Householder transform. This approach enables richer positional interactions for a deeper understanding of sequential information, while maintaining fast and efficient computation. The MIT-IBM team’s advancement reduces the need for transformers to break problems into many steps, instead enabling them to handle more complex subproblems with fewer inference tokens.
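For readers unfamiliar with it, a Householder transform reflects a vector across the hyperplane orthogonal to a chosen direction `v`, computed as `x - 2 (v·x / v·v) v`. The sketch below shows only that basic reflection on made-up two-dimensional vectors; how the team builds a positional encoding from it is not described in the article and is not reproduced here.

```python
import math

def householder_reflect(x, v):
    """Reflect x across the hyperplane orthogonal to v: x - 2 (v.x / v.v) v."""
    scale = 2.0 * sum(a * b for a, b in zip(v, x)) / sum(a * a for a in v)
    return [xi - scale * vi for xi, vi in zip(x, v)]

def norm(x):
    return math.sqrt(sum(a * a for a in x))

x = [3.0, 4.0]
v = [1.0, 0.0]                                  # reflection direction
reflected = householder_reflect(x, v)           # flips the first coordinate
restored = householder_reflect(reflected, v)    # reflecting twice undoes it
```

Two properties make reflections attractive as building blocks: they preserve vector length, and applying the same reflection twice returns the original vector.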

Visions anew

Visual data contain multitudes that the human brain can quickly parse, internalize, and then imitate. Using vision-language models (VLMs), two graduate students are exploring ways to do this through code.

Over the past two summers and under the advisement of Aude Oliva, MIT director of the MIT-IBM Watson AI Lab and a senior research scientist in the Computer Science and Artificial Intelligence Laboratory; and IBM Research’s Rogerio Feris, Dan Gutfreund, and Leonid Karlinsky (now at Xero), Jovana Kondic of EECS has explored visual document understanding, specifically charts. These contain elements, such as data points, legends, and axis labels, that require optical character recognition and numerical reasoning, which models still struggle with. To improve performance on tasks such as these, Kondic’s group set out to create a large, open-source, synthetic chart dataset from code that could be used for training and benchmarking.

With their prototype, ChartGen, the researchers created a pipeline that passes seed chart images through a VLM, which is prompted to read the chart and generate a Python script that was likely used to create the chart in the first place. The LLM component of the framework then iteratively augments the code from many charts to ultimately produce over 200,000 unique pairs of charts and their code, spanning nearly 30 chart types, as well as supporting data and annotations like descriptions and question-answer pairs about the charts. The team is further expanding their dataset, helping to enable critical multimodal understanding of data visualizations for enterprise applications like financial and scientific reports, blogs, and more.
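The shape of such a pipeline (reconstruct a script from a seed chart, augment it, keep the unique results) can be sketched with stand-ins. This is a toy under loud assumptions: `vlm_reconstruct` and `llm_augment` are hypothetical placeholders that use a lookup table and simple rules where ChartGen uses models, and `draw_chart` is an invented name that appears only inside generated strings.

```python
# Stand-in for the VLM step: a real model would infer the chart spec from pixels.
SEED_SPECS = {
    "seed_bar.png": {"kind": "bar", "values": [3, 1, 2]},
}

def vlm_reconstruct(image_name):
    """Hypothetical placeholder: seed chart image -> chart specification."""
    return dict(SEED_SPECS[image_name])

def llm_augment(spec, kinds):
    """Hypothetical placeholder: vary the chart type and the data ordering."""
    for kind in kinds:
        yield {"kind": kind, "values": spec["values"]}
        yield {"kind": kind, "values": sorted(spec["values"])}

def render_script(spec):
    """Turn a spec into script text (the 'code' half of a chart-code pair)."""
    return f"draw_chart(kind={spec['kind']!r}, values={spec['values']!r})"

def build_dataset(image_names, kinds):
    scripts = set()                       # a set keeps only unique scripts
    for name in image_names:
        spec = vlm_reconstruct(name)
        for variant in llm_augment(spec, kinds):
            scripts.add(render_script(variant))
    return sorted(scripts)

dataset = build_dataset(["seed_bar.png"], ["bar", "line"])
```

One seed and two chart types yield four distinct scripts here; the same fan-out, driven by models and many seeds, is how a modest set of seed charts can grow into a large dataset.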

Instead of charts, EECS graduate student Leonardo Hernandez Cano has his eyes on digital design, specifically visual texture generation for CAD applications, with the goal of discovering efficient ways to enable these capabilities in VLMs. Teaming up with the lab groups led by Armando Solar-Lezama, EECS professor and Distinguished Professor of Computing in the MIT Schwarzman College of Computing, and IBM Research’s Nathan Fulton, Hernandez Cano created a program synthesis system that learns to refine code on its own. The system starts with a texture description given by a user in the form of an image. It then generates an initial Python program, which produces visual textures, and iteratively refines the code with the goal of finding a program that produces a texture that matches the target description, learning to search for new programs from the data that the system itself produces. Through these refinements, the resulting program can create visualizations with the desired luminosity, color, iridescence, etc., mimicking real materials.
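The generate-then-refine loop can be illustrated with a deliberately simple stand-in. Here a “program” is just a pair of parameters (stripe period and brightness), and refinement is a greedy local search that keeps whichever neighboring program renders closest to the target. Both are assumptions made for this sketch: the actual system refines Python programs and learns its search from data it generates, which a greedy search does not capture.

```python
def render(params, size=8):
    """Toy texture program: vertical stripes with a given period and brightness."""
    period, bright = params
    return [[bright if (x // period) % 2 == 0 else 0 for x in range(size)]
            for _ in range(size)]

def distance(a, b):
    """Sum of absolute pixel differences between two rendered textures."""
    return sum(abs(p - q) for row_a, row_b in zip(a, b)
               for p, q in zip(row_a, row_b))

def neighbors(params):
    """Programs one small edit away from the current one."""
    period, bright = params
    for dp, db in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        if period + dp >= 1 and 0 <= bright + db <= 9:
            yield (period + dp, bright + db)

def refine(target, start, max_steps=50):
    """Greedy refinement: move to the best neighbor until nothing improves."""
    current, best = start, distance(render(start), target)
    for _ in range(max_steps):
        candidate = min(neighbors(current),
                        key=lambda p: distance(render(p), target))
        score = distance(render(candidate), target)
        if score >= best:
            break
        current, best = candidate, score
    return current, best

target = render((2, 7))                       # stands in for the user's image
found, error = refine(target, start=(2, 3))   # (2, 3) is the initial program
```

Greedy search like this can stall in local minima on harder targets, which is one reason a learned search over programs is attractive.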

When viewed together, these projects, and the people behind them, are making a cohesive push toward more robust and practical artificial intelligence. By tackling the core challenges of reliability, efficiency, and multimodal reasoning, the work paves the way for AI systems that are not only more powerful, but also more reliable and cost-effective, for real-world enterprise and scientific applications.