If you missed Part 1: How to Evaluate Retrieval Quality in RAG Pipelines, check it out here

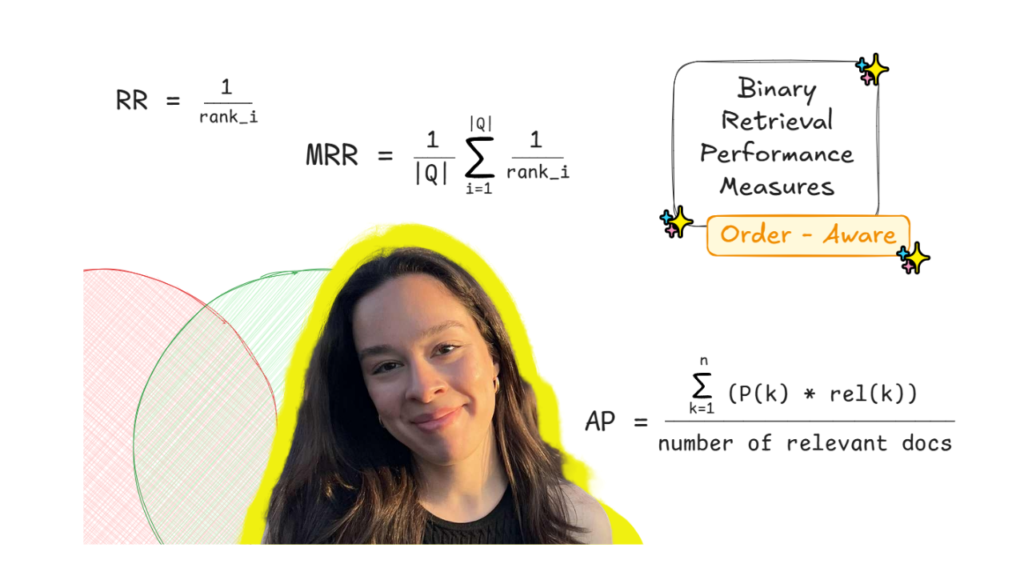

In my previous post, I took a look at how to evaluate the retrieval quality of a RAG pipeline, as well as some basic metrics for doing so. More specifically, that first part mainly focused on binary, order-unaware measures, essentially evaluating whether relevant results exist in the retrieved set or not. In this second part, we are going to further explore binary, order-aware measures. That is, measures that take into account the ranking with which each relevant result is retrieved, apart from evaluating whether it is retrieved or not. So, in this post, we are going to take a closer look at two commonly used binary, order-aware metrics: Mean Reciprocal Rank (MRR) and Average Precision (AP).

Why ranking matters in retrieval evaluation

Effective retrieval is really important in a RAG pipeline, given that a good retrieval mechanism is the very first step for generating valid answers, grounded in our documents. Otherwise, if the correct documents that contain the needed information can’t be identified in the first place, no AI magic can fix this and provide valid answers.

We can distinguish between two large categories of retrieval quality evaluation measures: binary and graded measures. More specifically, binary measures categorize a retrieved chunk either as relevant or irrelevant, with no in-between situations. On the flip side, when using graded measures, we consider that the relevance of a chunk to the user’s query is rather a spectrum, and in this way, a retrieved chunk can be more or less relevant.

Binary measures can be further divided into order-unaware and order-aware measures. Order-unaware measures evaluate whether a chunk exists in the retrieved set or not, regardless of the ranking with which it was retrieved. In my latest post, we took a detailed look at the most common binary, order-unaware measures, and ran an in-depth code example in Python. Specifically, we went over HitRate@K, Precision@K, Recall@K, and F1@K. In contrast, binary, order-aware measures, apart from considering whether chunks exist or not in the retrieved set, also take into account the ranking with which they are retrieved.

Thereby, in today’s post, we are going to have a more detailed look at the most commonly used binary, order-aware retrieval metrics, such as MRR and AP, and also look at how these can be calculated in Python.

I write 🍨DataCream, where I’m learning and experimenting with AI and data. Subscribe here to learn and explore with me.

Some order-aware, binary measures

So, binary, order-unaware measures like Precision@K or Recall@K tell us whether the correct documents are somewhere in the top k chunks or not, but don’t indicate if a document is scoring at the top or at the very bottom of those k chunks. And this exact information is what order-aware measures provide. Some very useful and commonly used order-aware measures are Mean Reciprocal Rank (MRR) and Average Precision (AP). But let’s see all these in some more detail.

🎯 Mean Reciprocal Rank (MRR)

A commonly used order-aware measure for evaluating retrieval is Mean Reciprocal Rank (MRR). Taking one step back, the Reciprocal Rank (RR) expresses at what rank the first truly relevant result is found, among the top k retrieved results. More precisely, it measures how high the first relevant result appears in the ranking. RR can be calculated as follows, with rank_i being the rank at which the first relevant result is found (if no relevant result is found in the top k, RR is taken to be 0):

$$\mathrm{RR} = \frac{1}{\mathrm{rank}_i}$$

We can also visually explore this calculation with the following example:
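As a small illustration (the relevance labels here are made up for the sake of the example, not taken from an actual retrieval run), suppose the top 5 retrieved chunks carry the following labels, where 1 marks a relevant chunk:

$$
\begin{array}{l|ccccc}
\text{rank} & 1 & 2 & 3 & 4 & 5 \\ \hline
\text{relevant?} & 0 & 0 & 1 & 0 & 1
\end{array}
\qquad \Rightarrow \qquad
\mathrm{rank}_i = 3, \quad \mathrm{RR} = \frac{1}{3} \approx 0.33
$$

Only the first relevant chunk (rank 3) counts towards RR; the second relevant chunk at rank 5 does not change the score.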

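We can now put together the Mean Reciprocal Rank (MRR). MRR is the average of the reciprocal ranks of the first relevant item across different result sets:

$$\mathrm{MRR} = \frac{1}{|Q|} \sum_{i=1}^{|Q|} \frac{1}{\mathrm{rank}_i}$$

Here, |Q| is the number of queries (result sets), and rank_i is the rank of the first relevant result for the i-th query.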

In this way, MRR can range from 0 to 1. That is, the higher the MRR, the higher in the ranking the first relevant document appears.
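To make the averaging concrete, here is a short worked calculation under assumed inputs: three hypothetical queries whose first relevant result appears at rank 1, at rank 3, and not at all (which contributes a reciprocal rank of 0):

$$
\mathrm{MRR} = \frac{1}{3}\left(\frac{1}{1} + \frac{1}{3} + 0\right) = \frac{4}{9} \approx 0.44
$$

A single query with no relevant result pulls the average down noticeably, which is why MRR is sensitive to complete retrieval misses.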

A real-life example where a metric like MRR can be useful for evaluating the retrieval step of a RAG pipeline would be any fast-paced environment, where quick decision-making is needed, and we need to make sure that a truly relevant result emerges at the top of the search. It works well for assessing systems where just one relevant result is enough, and important information is not scattered across multiple text chunks.

A good metaphor to further understand MRR as a retrieval evaluation metric is Google Search. We think of Google as a good search engine because you can find what you are looking for in the top results. If you had to scroll down to result 150 to actually find what you are looking for, you wouldn’t think of it as a good search engine. Similarly, a good vector search mechanism in a RAG pipeline should surface the relevant chunks at reasonably high ranks and thus score a reasonably high MRR.

🎯 Average Precision (AP)

In my previous post on binary, order-unaware retrieval measures, we took a look specifically at Precision@k. In particular, Precision@k indicates how many of the top k retrieved documents are indeed relevant. Precision@k can be calculated as follows:

$$\mathrm{Precision@}k = \frac{\text{number of relevant items in the top } k}{k}$$

Average Precision (AP) further builds on that idea. More specifically, to calculate AP, we first need to iteratively calculate Precision@k for each k at which a new relevant item appears. Then we can calculate AP by simply taking the average of those Precision@k scores.

But let’s see an illustrative example of this calculation. For this example set, we find that new relevant chunks are introduced in the retrieved set for k = 1 and k = 4.

Thus, we calculate Precision@1 and Precision@4, and then take their average. That will be (1/1 + 2/4) / 2 = (1 + 0.5) / 2 = 0.75.
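The same arithmetic can be traced step by step in a few lines of Python. This is only a sketch: the relevance list `[1, 0, 0, 1]` is an assumption chosen to match relevant hits at k = 1 and k = 4, and the variable names are illustrative.

```python
# Assumed relevance labels for the top 4 retrieved chunks (1 = relevant, 0 = not).
# Chosen for illustration so that relevant chunks appear at k = 1 and k = 4.
relevance = [1, 0, 0, 1]

hits = 0
precisions_at_hits = []
for k, rel in enumerate(relevance, start=1):
    if rel:
        hits += 1
        # Precision@k, recorded only at positions where a relevant chunk appears
        precisions_at_hits.append(hits / k)

ap = sum(precisions_at_hits) / len(precisions_at_hits)

print(precisions_at_hits)  # [1.0, 0.5]
print(ap)                  # 0.75
```

The irrelevant chunks at k = 2 and k = 3 never add a term of their own, but they lower Precision@4 from 1.0 to 0.5, which is how AP penalizes relevant results that show up late.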

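We can then generalize the calculation of AP as follows:

$$\mathrm{AP} = \frac{1}{R} \sum_{k=1}^{n} \mathrm{Precision@}k \cdot \mathrm{rel}(k)$$

Here, n is the number of retrieved chunks, rel(k) is 1 if the chunk at rank k is relevant and 0 otherwise, and R is the number of relevant chunks in the retrieved set.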

Again, AP can range from 0 to 1. More specifically, the higher the AP score, the more consistently our retrieval system ranks relevant documents towards the top. In other words, the more relevant documents are retrieved and the more they appear before the irrelevant ones.

Unlike MRR, which focuses only on the first relevant result, AP takes into account the ranking of all the retrieved relevant chunks. It essentially quantifies how much or how little garbage we get along the way while retrieving the truly relevant items, for various top k.

To get a better grip on AP and MRR, we can also think of them in the context of a Spotify playlist. Similarly to the Google Search example, a high MRR would mean that the first song of the playlist is our favorite song. On the flip side, a high AP would mean that the entire playlist is good, and many of our favorite songs appear frequently and towards the top of the playlist.

So, is our vector search any good?

Normally, I would continue this section with the War and Peace example, as I’ve done in my other RAG tutorials. However, the full retrieval code is getting quite large to include in every post. Instead, in this post, I’ll focus on showing how to calculate these metrics in Python, doing my best to keep the examples concise.

Anyway! Let’s see how MRR and AP can be calculated in practice for a RAG pipeline in Python. We can define functions for calculating the RR and MRR as follows:

    from typing import Iterable, Sequence

    # Reciprocal Rank (RR): 1 / rank of the first relevant result, or 0.0 if none is relevant
    def reciprocal_rank(relevance: Sequence[int]) -> float:
        for i, rel in enumerate(relevance, start=1):
            if rel:
                return 1.0 / i
        return 0.0

    # Mean Reciprocal Rank (MRR): average RR over all queries
    def mean_reciprocal_rank(all_relevance: Iterable[Sequence[int]]) -> float:
        vals = [reciprocal_rank(r) for r in all_relevance]
        return sum(vals) / len(vals) if vals else 0.0

We’ve already calculated Precision@k in the previous post as follows:

    # Precision@k: share of the top k results that are relevant
    def precision_at_k(relevance: Sequence[int], k: int) -> float:
        k = min(k, len(relevance))  # never look past the end of the list
        if k == 0:
            return 0.0
        return sum(relevance[:k]) / k

Building on that, we can define Average Precision (AP) as follows:

    # Average Precision (AP): mean of Precision@i over the ranks i that hold a relevant result
    def average_precision(relevance: Sequence[int]) -> float:
        if not relevance:
            return 0.0
        precisions = []
        hit_count = 0
        for i, rel in enumerate(relevance, start=1):
            if rel:
                hit_count += 1
                precisions.append(hit_count / i)  # Precision@i
        return sum(precisions) / hit_count if hit_count else 0.0

Each of these functions takes as input a list of binary relevance labels, where 1 means a retrieved chunk is relevant to the query, and 0 means it’s not. In practice, these labels are generated by comparing the retrieved results with the ground truth set, exactly as we did in Part 1 when calculating Precision@K and Recall@K. In this way, for each query (for instance, “Who is Anna Pávlovna?”), we generate a binary relevance list based on whether each retrieved chunk contains the answer text. From there, we can calculate all the metrics using the functions shown above.
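One possible way to build such a relevance list is sketched below. The helper name `relevance_labels`, the case-insensitive substring match, and the sample chunks are all assumptions made for illustration; they are not the exact code or data from Part 1.

```python
from typing import List, Sequence

def relevance_labels(retrieved_chunks: Sequence[str], answer_text: str) -> List[int]:
    """Label each retrieved chunk 1 if it contains the answer text, else 0.

    Hypothetical helper: uses a simple case-insensitive substring match.
    """
    needle = answer_text.lower()
    return [1 if needle in chunk.lower() else 0 for chunk in retrieved_chunks]

# Made-up chunks, in the order the retriever returned them (illustration only).
chunks = [
    "chunk about the weather",
    "chunk that mentions the expected answer",
    "unrelated chunk",
]

labels = relevance_labels(chunks, "expected answer")
print(labels)  # [0, 1, 0]
```

Passing a list like `[0, 1, 0]` to `reciprocal_rank` and `average_precision` gives 0.5 in both cases, since the single relevant chunk sits at rank 2. A plain substring match is a deliberately simple choice; paraphrased answers would need a looser matching rule.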

Another useful order-aware metric we can calculate is Mean Average Precision (MAP). As you can imagine, MAP is the mean of the APs for different retrieved sets. For example, if we calculate AP for three different test questions in our RAG pipeline, the MAP score tells us the overall ranking quality across all of them.
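A minimal sketch of MAP could look like the following. The function name `mean_average_precision` and the three relevance lists are assumptions for illustration; `average_precision` is repeated in compact form only so that the snippet runs on its own.

```python
from typing import Iterable, Sequence

def average_precision(relevance: Sequence[int]) -> float:
    # Same logic as the AP function above, repeated so this snippet is self-contained.
    hits, total = 0, 0.0
    for i, rel in enumerate(relevance, start=1):
        if rel:
            hits += 1
            total += hits / i  # Precision@i at each relevant position
    return total / hits if hits else 0.0

def mean_average_precision(all_relevance: Iterable[Sequence[int]]) -> float:
    # MAP: mean of the per-query AP scores (0.0 when there are no queries)
    aps = [average_precision(r) for r in all_relevance]
    return sum(aps) / len(aps) if aps else 0.0

# Hypothetical relevance lists for three test questions.
queries = [
    [1, 0, 0, 1],  # AP = 0.75
    [0, 1, 0, 0],  # AP = 0.5
    [0, 0, 0, 0],  # AP = 0.0 (nothing relevant retrieved)
]

map_score = mean_average_precision(queries)
print(round(map_score, 4))  # 0.4167
```

Because every query contributes equally, one question with no relevant chunks retrieved lowers the MAP as much as any other.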

On my mind

Binary, order-unaware measures that we saw in the first part of this series, such as HitRate@k, Precision@k, Recall@k, and F1@k, can provide us with valuable information for evaluating the retrieval performance of a RAG pipeline. However, such measures only tell us whether a relevant document is present in the retrieved set or not.

Binary, order-aware measures reviewed in this post, like Mean Reciprocal Rank (MRR) and Average Precision (AP), can provide further insight, as they not only tell us whether the relevant documents exist in the retrieved results, but also how well they are ranked. In this way, we can get a better overview of how well the retrieval mechanism of our RAG pipeline performs, depending on the task and type of documents we are using.

Stay tuned for the next and final part of this retrieval evaluation series, where I’ll be discussing graded retrieval evaluation measures for RAG pipelines.
