I stored the same dataset in both a graph database and a SQL database, then used various large language models (LLMs) to answer questions about the data through a retrieval-augmented generation (RAG) approach. By using the same dataset and questions across both systems, I evaluated which database paradigm delivers more accurate and insightful results.

Retrieval-Augmented Generation (RAG) is an AI framework that enhances large language models (LLMs) by letting them retrieve relevant external information before generating an answer. Instead of relying solely on what the model was trained on, RAG dynamically queries a knowledge source (in this article a SQL or graph database) and integrates those results into its response. An introduction to RAG can be found here.

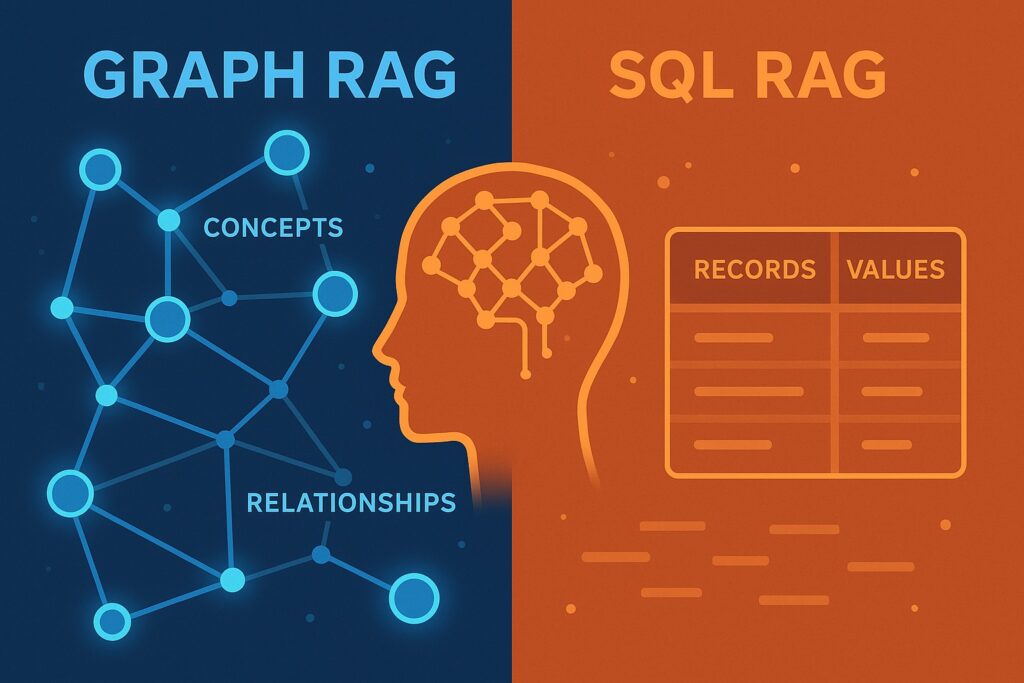

SQL databases organize data into tables made up of rows and columns. Each row represents a record, and each column represents an attribute. Relationships between tables are defined using keys and joins, and all data follows a fixed schema. SQL databases are ideal for structured, transactional data where consistency and precision are important, for example finance, inventory, or patient records.

Graph databases store data as nodes (entities) and edges (relationships), with optional properties attached to both. Instead of joining tables, they represent relationships directly, allowing for fast traversal across connected data. Graph databases are ideal for modelling networks and relationships (such as social graphs, knowledge graphs, or molecular interaction maps) where connections are as important as the entities themselves.

Data

The dataset I used to compare the performance of RAGs contains Formula 1 results from 1950 to 2024. It includes detailed results at races of drivers and constructors (teams) covering qualifying, sprint race, main race, and even lap times and pit stop times. The standings of the drivers' and constructors' championships after every race are also included.

SQL Schema

This dataset is already structured in tables with keys, so a SQL database can be set up easily. The database's schema is shown below:

Races is the central table, which is linked with all types of results as well as additional information such as seasons and circuits. The results tables are also linked with the Drivers and Constructors tables to record their result at each race. The championship standings after each race are stored in the Driver_standings and Constructor_standings tables.

Graph Schema

The schema of the graph database is shown below:

Because graph databases can store information in both nodes and relationships, the graph model requires only six node types, compared to 14 tables in the SQL database. The Car node is an intermediate node that models the fact that a driver drove a car of a constructor at a particular race. Since driver-constructor pairings change over time, this relationship needs to be defined for each race. The race results are stored in the relationships, e.g. :RACED between Car and Race, while the :STOOD_AFTER relationships contain the driver and constructor championship standings after each race.
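To make the role of the intermediate Car node more concrete, here is a minimal in-memory sketch in plain Python. It is not a graph-database API and not the schema used in this article: only the `:RACED` relationship is named above, so the `DROVE` and `BUILT` relationship names, the `position` property, and the node keys are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Node:
    label: str  # "Driver", "Constructor", "Car" or "Race"
    key: str    # illustrative identifier, not a real database id


@dataclass
class Graph:
    # Each edge is (start node, relationship type, end node, properties).
    edges: list = field(default_factory=list)

    def relate(self, start, rel_type, end, **props):
        self.edges.append((start, rel_type, end, props))

    def sources(self, end, rel_type):
        """Return (start node, properties) for edges of rel_type pointing at end."""
        return [(s, p) for s, r, e, p in self.edges if e == end and r == rel_type]


def race_winner(graph, race):
    """Follow Race <-RACED- Car <-DROVE- Driver for the car that finished first."""
    for car, props in graph.sources(race, "RACED"):
        if props.get("position") == 1:
            drivers = graph.sources(car, "DROVE")
            return drivers[0][0].key if drivers else None
    return None


g = Graph()
race = Node("Race", "1992 Belgian Grand Prix")
for driver, team, position in [
    ("Michael Schumacher", "Benetton", 1),
    ("Nigel Mansell", "Williams", 2),
]:
    car = Node("Car", f"{driver} @ {race.key}")        # one Car per driver per race
    g.relate(Node("Driver", driver), "DROVE", car)     # hypothetical relationship name
    g.relate(Node("Constructor", team), "BUILT", car)  # hypothetical relationship name
    g.relate(car, "RACED", race, position=position)    # the result lives on the edge

print(race_winner(g, race))
```

Because the pairing is attached to a per-race Car node, a driver who changes teams simply gets a Car linked to a different Constructor in a later race.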

Querying the Database

I used LangChain to build a RAG chain for both database types that generates a query based on a user question, runs the query, and converts the query result into an answer for the user. The code can be found in this repo. I defined a generic system prompt that could be used to generate queries for any SQL or graph database. The only data-specific information was included by inserting the auto-generated database schema into the prompt. The system prompts can be found here.

Here is an example of how to initialize the model chain and ask the question: “What driver won the 92 Grand Prix in Belgium?”
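The three steps of such a chain (write a query, execute it, phrase an answer) can be sketched without any framework. The snippet below is a simplified stand-in, not the code from the repo: the two LLM calls are replaced by hard-coded functions, and the `winners` table is a hypothetical one-table database created only for this demo.

```python
import sqlite3


def run_rag_chain(question, conn, write_query, generate_answer):
    """Run the three RAG steps and return the intermediate state."""
    query = write_query(question)             # step 1: an LLM would write the query
    rows = conn.execute(query).fetchall()     # step 2: run it against the database
    answer = generate_answer(question, rows)  # step 3: an LLM would phrase the answer
    return {"query": query, "result": rows, "answer": answer}


# Hard-coded stand-ins for the two LLM calls (assumptions for the demo).
def fake_write_query(question):
    return "SELECT forename, surname FROM winners WHERE year = 1992"


def fake_generate_answer(question, rows):
    return " ".join(rows[0]) if rows else "I cannot answer this from the data."


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE winners (year INTEGER, forename TEXT, surname TEXT)")
conn.execute("INSERT INTO winners VALUES (1992, 'Michael', 'Schumacher')")

state = run_rag_chain(
    "What driver won the 92 Grand Prix in Belgium?",
    conn,
    fake_write_query,
    fake_generate_answer,
)
print(state["answer"])
```

Returning the intermediate query and result alongside the answer makes it possible to check afterwards whether an answer actually came from the data.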

```python
from langchain_community.utilities import SQLDatabase
from langchain_openai import ChatOpenAI

from qa_chain import GraphQAChain
from config import DATABASE_PATH

# connect to the database
connection_string = f"sqlite:///{DATABASE_PATH}"
db = SQLDatabase.from_uri(connection_string)

# initialize the LLM
llm = ChatOpenAI(temperature=0, model="gpt-5")

# initialize the QA chain
chain = GraphQAChain(llm, db, db_type='SQL', verbose=True)

# ask a question
chain.invoke("What driver won the 92 Grand Prix in Belgium?")
```

Which returns:

```
{'write_query': {'query': "SELECT d.forename, d.surname
FROM results r
JOIN races ra ON ra.raceId = r.raceId
JOIN drivers d ON d.driverId = r.driverId
WHERE ra.year = 1992
AND ra.name = 'Belgian Grand Prix'
AND r.positionOrder = 1
LIMIT 10;"}}
{'execute_query': {'result': "[('Michael', 'Schumacher')]"}}
{'generate_answer': {'answer': 'Michael Schumacher'}}
```

The SQL query joins the Results, Races, and Drivers tables, selects the 1992 Belgian Grand Prix, and returns the driver who finished first. The LLM converted the year 92 to 1992 and the race name from “Grand Prix in Belgium” to “Belgian Grand Prix”. It derived these conversions from the database schema, which included three sample rows of each table. The query result is “Michael Schumacher”, which the LLM returned as the answer.
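To illustrate how a schema description with sample rows can be generated automatically, here is a rough approximation using only Python's `sqlite3` module. It is not the code that produced the prompt in this article; the `describe_schema` helper and the small `races` table are assumptions for the demo.

```python
import sqlite3


def describe_schema(conn, sample_rows=3):
    """Build a text block with each table's CREATE statement and a few rows."""
    parts = []
    tables = conn.execute(
        "SELECT name, sql FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for name, create_sql in tables:
        rows = conn.execute(
            f'SELECT * FROM "{name}" LIMIT {int(sample_rows)}'
        ).fetchall()
        parts.append(create_sql)
        parts.append(f"-- {len(rows)} sample rows from {name}:")
        parts.extend(f"-- {row}" for row in rows)
    return "\n".join(parts)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE races (raceId INTEGER PRIMARY KEY, year INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO races (year, name) VALUES (?, ?)",
    [
        (1992, "Belgian Grand Prix"),
        (1992, "Italian Grand Prix"),
        (1992, "Portuguese Grand Prix"),
        (1992, "Japanese Grand Prix"),
    ],
)

schema_text = describe_schema(conn)
print(schema_text)
```

Seeing a value such as 'Belgian Grand Prix' in the sample rows is what lets a model map a loosely phrased question onto the exact strings stored in the database.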

Analysis

Now the question I want to answer is whether an LLM is better at querying the SQL or the graph database. I defined three difficulty levels (easy, medium, and hard): easy questions could be answered by querying data from only one table or node, medium questions required one or two links among tables or nodes, and hard questions required more links or subqueries. For each difficulty level I defined five questions. Additionally, I defined five questions that could not be answered with data from the database.

I answered each question with three LLM models (GPT-5, GPT-4, and GPT-3.5-turbo) to analyze whether the most advanced models are needed or whether older and cheaper models could also produce satisfactory results. If a model gave the correct answer, it got 1 point; if it replied that it could not answer the question, it got 0 points; and if it gave a wrong answer, it got -1 point. All questions and answers are listed here. Below are the scores of all models and database types:

| Model | Graph DB | SQL DB |
| --- | --- | --- |
| GPT-3.5-turbo | -2 | 4 |
| GPT-4 | 7 | 9 |
| GPT-5 | 18 | 18 |

It is remarkable how more advanced models outperform simpler models: GPT-3.5-turbo got about half of the questions wrong, GPT-4 got 2 to 3 questions wrong but could not answer 6 to 7 questions, and GPT-5 got all except one question right. Simpler models seem to perform better with a SQL database than with a graph database, while GPT-5 achieved the same score with either database.
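The scoring rule is simple enough to write down as a few lines of Python. The outcome labels and the `score` function are names chosen for this sketch; the example run reproduces the GPT-5 case, where 19 of the 20 questions were answered correctly and one was answered wrongly.

```python
# Points per outcome, as described above.
POINTS = {"correct": 1, "declined": 0, "wrong": -1}


def score(outcomes):
    """Sum the points over a list of per-question outcomes."""
    return sum(POINTS[outcome] for outcome in outcomes)


# 20 questions in total: 3 difficulty levels x 5 questions,
# plus 5 questions that the data cannot answer.
gpt5_outcomes = ["correct"] * 19 + ["wrong"]
print(score(gpt5_outcomes))  # 18
```

Because a wrong answer costs a point while declining costs nothing, a model that often declines can still end up ahead of one that answers more questions but gets many of them wrong.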

The one question GPT-5 got wrong using the SQL database was “Which driver won the most world championships?”. The answer “Lewis Hamilton, with 7 world championships” is not correct because Lewis Hamilton and Michael Schumacher have both won 7 world championships. The generated SQL query aggregated the number of championships by driver, sorted them in descending order, and selected only the first row, even though the driver in the second row had the same number of championships.

Using the graph database, the one question GPT-5 got wrong was “Who won the Formula 2 championship in 2017?”, which was answered with “Lewis Hamilton” (Lewis Hamilton won the Formula 1 but not the Formula 2 championship that year). This is a tricky question because the database contains only Formula 1 results, not Formula 2 results. The expected answer would have been that this question cannot be answered from the provided data. However, considering that the system prompt did not contain any specific information about the dataset, it is understandable that this question was not answered correctly.

Interestingly, using the SQL database GPT-5 gave the correct answer “Charles Leclerc” to the same question. The generated SQL query only searched the drivers table for the name “Charles Leclerc”. Here the LLM must have recognized that the database does not contain Formula 2 results and answered the question from its general knowledge. Although this led to the correct answer in this case, it can be dangerous when the LLM does not use the provided data to answer questions. One way to reduce this risk could be to explicitly state in the system prompt that the database must be the only source for answering questions.
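The tie problem is easy to reproduce with a small example. The sketch below uses a hypothetical `titles` table with one row per championship (not a table from the actual database) and compares a query that keeps only the first row with one that keeps every driver sharing the maximum.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE titles (driver TEXT)")  # hypothetical: one row per title

title_counts = {"Lewis Hamilton": 7, "Michael Schumacher": 7, "Juan Manuel Fangio": 5}
for driver, count in title_counts.items():
    conn.executemany("INSERT INTO titles VALUES (?)", [(driver,)] * count)

# Keeps a single row, so one of the two tied drivers is silently dropped.
top_one = conn.execute(
    "SELECT driver, COUNT(*) AS n FROM titles GROUP BY driver ORDER BY n DESC LIMIT 1"
).fetchall()

# Keeps every driver whose count equals the maximum count.
tie_aware = conn.execute(
    """
    SELECT driver, COUNT(*) AS n
    FROM titles
    GROUP BY driver
    HAVING COUNT(*) = (
        SELECT MAX(n) FROM (SELECT COUNT(*) AS n FROM titles GROUP BY driver)
    )
    ORDER BY driver
    """
).fetchall()

print(top_one)
print(tie_aware)
```

Which of the two tied drivers the first query returns depends on the database's row ordering, so the answer can look confident while being incomplete.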
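One possible way to add such a restriction is sketched below. The wording of the rule and the `build_system_prompt` helper are assumptions for illustration; they are not the prompts used in this comparison, and the effect of such a rule was not tested here.

```python
# Hypothetical rule text; the exact wording is an assumption.
DATA_ONLY_RULE = (
    "Answer only from the query result. If the database does not contain the "
    "information needed, reply that the question cannot be answered from the data."
)


def build_system_prompt(base_prompt, schema, data_only=True):
    """Combine a generic prompt, the database schema, and an optional data-only rule."""
    parts = [base_prompt.strip(), "Database schema:", schema.strip()]
    if data_only:
        parts.append(DATA_ONLY_RULE)
    return "\n\n".join(parts)


prompt = build_system_prompt(
    "You write queries for the database described below.",
    "CREATE TABLE drivers (driverId INTEGER, forename TEXT, surname TEXT)",
)
print(prompt)
```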

Conclusion

This comparison of RAG performance using a Formula 1 results dataset shows that the latest LLMs perform exceptionally well, producing highly accurate and contextually aware answers without any additional prompt engineering. While simpler models struggle, newer ones like GPT-5 handle complex queries with near-perfect precision. Importantly, there was no significant difference in performance between the graph and SQL database approaches, so users can simply choose the database paradigm that best fits the structure of their data.

The dataset used here serves only as an illustrative example; results may differ when using other datasets, especially those that require specialized domain knowledge or access to private data sources. Overall, these findings highlight how far retrieval-augmented LLMs have advanced in integrating structured data with natural language reasoning.

Unless stated otherwise, all images were created by the author.