Who Should Read This Document?

This document is for machine learning practitioners, researchers, and engineers interested in exploring custom aggregation schemes in federated learning. It is especially useful for those who want to design, test, and analyze new aggregation methods in real, distributed environments.

Introduction

Machine Learning (ML) continues to drive innovation across domains such as healthcare, finance, and defense. However, ML models rely heavily on large volumes of centralized, high-quality data, and concerns around data privacy, ownership, and regulatory compliance remain significant barriers.

Federated Learning (FL) addresses these challenges by enabling model training across distributed datasets. Data remains decentralized while still contributing to a shared global model. This decentralized approach makes FL especially valuable in sensitive domains where centralizing data is impractical or restricted.

To fully leverage FL, it is important to understand and design custom aggregation schemes. Effective aggregation influences model accuracy and robustness. It also addresses issues such as data heterogeneity, client reliability, and adversarial behavior. Analyzing and building tailored aggregation methods is therefore a key step toward optimizing performance and ensuring fairness in federated settings.

In my previous article, we explored the cyberattack landscape in federated learning and how it differs from traditional centralized ML. That discussion introduced Scaleout's open-source attack simulation toolkit. The toolkit helps researchers and practitioners analyze the impact of various attack types and evaluate the limitations of existing mitigation schemes, especially under challenging conditions such as extreme data imbalance, non-IID distributions, and the presence of stragglers or late joiners.

However, that first article did not cover how to design, implement, and test your own schemes in live, distributed environments.

This may sound complex, but this article offers a quick, step-by-step guide covering the basic concepts needed to design and develop custom aggregation schemes for real-world federated settings.

What Are Server Functions?

Custom aggregators are functions that run on the server side of a federated learning setup. They define how model updates from clients are processed (for example, through aggregation, validation, or filtering) before the next global model is produced. Server Function is the term the Scaleout Edge AI platform uses for custom aggregators.
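To make the idea concrete, here is a minimal, hypothetical aggregator in plain Python. The class and method names (`MyServerFunction`, `client_selection`, `aggregate`) are illustrative assumptions. They loosely mirror the client-selection and aggregation requests visible in the logs later in this post. For the platform's actual base class and signatures, see the Server Function Guide. A model is represented as a list of parameter lists. Each client update carries a metadata dict with `num_examples`, as in the FedAvg step shown later.

```python
from typing import Dict, List, Tuple

# Hypothetical stand-ins: a model is a list of layers, each a flat list of floats.
Params = List[List[float]]
Update = Tuple[Params, dict]  # (client parameters, metadata)


class MyServerFunction:
    """Hypothetical custom aggregator; hook names are illustrative only."""

    def client_selection(self, client_ids: List[str]) -> List[str]:
        # Select every available client for the next round.
        return list(client_ids)

    def aggregate(self, previous_global: Params,
                  client_updates: Dict[str, Update]) -> Params:
        # Weighted FedAvg: each client counts in proportion to num_examples.
        total = 0
        summed = [[0.0] * len(layer) for layer in previous_global]
        for params, metadata in client_updates.values():
            weight = metadata.get("num_examples", 1)
            total += weight
            for k, layer in enumerate(params):
                for m, value in enumerate(layer):
                    summed[k][m] += weight * value
        if total == 0:
            return previous_global  # nothing to aggregate, keep the old model
        return [[v / total for v in layer] for layer in summed]
```

Any filtering, validation, or robust-aggregation rule would replace or wrap the body of `aggregate`. This is the layer the cosine-similarity scheme below customizes.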

Beyond simple aggregation, Server Functions let researchers and developers design new schemes that address privacy preservation, fairness, robustness, and convergence efficiency. By customizing this layer, one can also experiment with innovative defense mechanisms, adaptive aggregation rules, or optimization methods tailored to specific data and deployment scenarios.

Server Functions are the mechanism in the Scaleout Edge AI platform for implementing custom aggregation schemes. In addition to this flexibility, the platform includes four built-in aggregators: FedAvg (default), FedAdam (FedOpt), FedYogi (FedOpt), and FedAdaGrad (FedOpt). The FedOpt-family methods are implemented following the approach described in the original FedOpt paper. For more details, please refer to the platform's documentation.
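The FedOpt family replaces plain averaging with a server-side optimizer applied to a pseudo-gradient. The sketch below shows one FedAdam server step on a flat parameter vector. It follows the update rule from the FedOpt paper (Reddi et al., *Adaptive Federated Optimization*):

- the pseudo-gradient is the average client model minus the current global model;
- the moments are updated Adam-style, without bias correction.

This is an illustration only, not Scaleout's implementation. The function name and default hyperparameters are assumptions.

```python
import math
from typing import List, Tuple


def fedadam_step(x: List[float], avg_client: List[float],
                 m: List[float], v: List[float],
                 lr: float = 0.01, beta1: float = 0.9,
                 beta2: float = 0.99, tau: float = 1e-3
                 ) -> Tuple[List[float], List[float], List[float]]:
    """One FedAdam server step (sketch). Returns (new_global, new_m, new_v)."""
    new_x, new_m, new_v = [], [], []
    for xi, ci, mi, vi in zip(x, avg_client, m, v):
        delta = ci - xi                              # pseudo-gradient: avg client minus global
        mi = beta1 * mi + (1 - beta1) * delta        # first-moment estimate
        vi = beta2 * vi + (1 - beta2) * delta ** 2   # second-moment estimate
        new_x.append(xi + lr * mi / (math.sqrt(vi) + tau))  # adaptive server update
        new_m.append(mi)
        new_v.append(vi)
    return new_x, new_m, new_v
```

The server keeps `m` and `v` across rounds. FedYogi and FedAdaGrad differ only in how the second moment `v` is updated.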

Building a Custom Aggregation Scheme

In this blog post, I'll demonstrate how you can build and test your own attack mitigation scheme using Scaleout's Edge AI platform. One of the key enablers for this is the platform's Server Function feature.

This post focuses on how to develop and execute a custom aggregation process in a geographically distributed setting. I'll skip the basic setup and assume you are already familiar with the Scaleout platform. If you're just getting started, here are some useful resources:

Quickstart FL Example: https://docs.scaleoutsystems.com/en/stable/quickstart.html

Framework Architecture: https://docs.scaleoutsystems.com/en/stable/architecture.html

Client API Reference: https://docs.scaleoutsystems.com/en/stable/apiclient.html

Server Function Guide: https://docs.scaleoutsystems.com/en/stable/serverfunctions.html

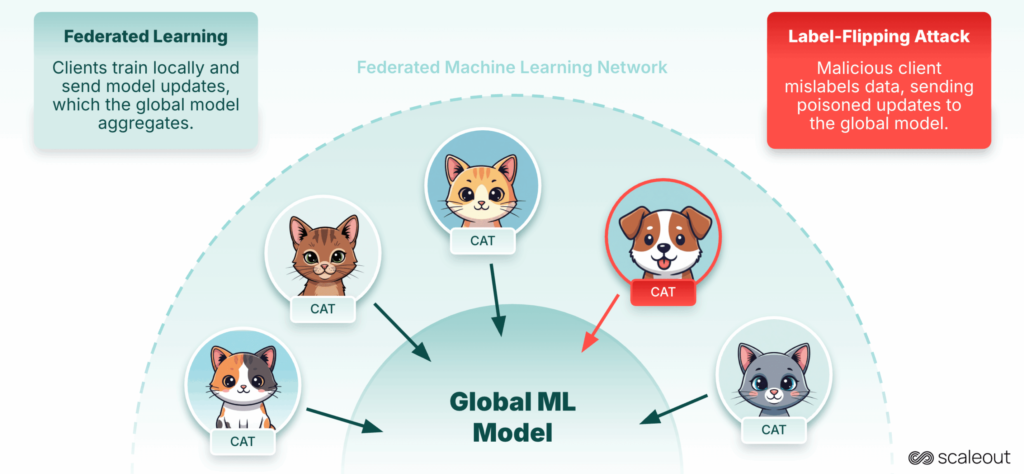

Example: Label-Flipping Attack

Let's consider a concrete attack: label flipping. In this scenario, a malicious client intentionally alters the labels in its local dataset (for example, flipping "cat" to "dog"). When these poisoned updates are sent to the server, the global model learns incorrect patterns, which reduces its accuracy and reliability.
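The article does not specify exactly how client-6's labels were flipped. As an illustration only, the hypothetical helpers below show two common variants on integer labels:

- a full flip that maps each MNIST digit y to 9 - y;
- a targeted flip that relabels one source class as another, analogous to turning "cat" into "dog".

```python
from typing import List


def flip_labels(labels: List[int], num_classes: int = 10) -> List[int]:
    """Hypothetical full flip: map class y to (num_classes - 1 - y)."""
    return [num_classes - 1 - y for y in labels]


def flip_pair(labels: List[int], source: int, target: int) -> List[int]:
    """Hypothetical targeted flip: relabel every `source` example as `target`."""
    return [target if y == source else y for y in labels]
```

The images themselves are unchanged. Only the supervision signal is corrupted, which is why the attack shows up in the direction of the model update rather than in the raw data.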

For this experiment, we used the open-source MNIST dataset, divided into six partitions, each assigned to a separate client. client-6 acts as a malicious participant: it flips the labels in its local data, trains on that data, and sends the resulting local model to the aggregator.
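The exact partitioning code isn't shown in the article. The sketch below assumes an equal, shuffled (roughly IID) split of the 60,000 MNIST training images across the six clients. The function name and seed are hypothetical.

```python
import random
from typing import List


def partition_indices(num_samples: int, num_clients: int = 6, seed: int = 0) -> List[List[int]]:
    """Shuffle sample indices with a fixed seed, then split them into near-equal parts."""
    indices = list(range(num_samples))
    random.Random(seed).shuffle(indices)  # deterministic shuffle for reproducibility
    base, extra = divmod(num_samples, num_clients)
    parts, start = [], 0
    for c in range(num_clients):
        size = base + (1 if c < extra else 0)  # spread any remainder over the first clients
        parts.append(indices[start:start + size])
        start += size
    return parts
```

With this split, client-6 would receive `parts[5]` and apply the label flip to its own partition only. A non-IID experiment would replace the shuffle with, for example, a label-sorted split.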

| Malicious Client | ID |
|---|---|
| client-6 | 07505d04-08a5-4453-ad55-d541e9e4ef57 |

Here you can see an example of a data point (a grayscale image) from client-6, where the labels have been intentionally flipped. By altering the labels, this client attempts to corrupt the global model during the aggregation process.

Task

Prevent client-6 from interfering with the development of the global model.

Mitigation with Cosine Similarity

To counter this, we use a cosine similarity-based approach as an example. The procedure has five steps:

- Step 1: compute each client's update (delta) relative to the previous global model.
- Steps 2–3: measure how closely each update aligns with the other clients' updates using pairwise cosine similarity, and average the scores per client.
- Step 4: if a client's average similarity score falls below a predefined threshold (s̄ᵢ < 𝓣), treat the update as likely malicious and exclude that client.
- Step 5: aggregate the remaining updates with weighted federated averaging (FedAvg).
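Written out, the score and exclusion rule are as follows. Here $w_i$ denotes client $i$'s flattened parameters, $w_g$ the previous global model, and $N$ the number of participating clients. These symbols are introduced for illustration; $\bar{s}_i$ and $\mathcal{T}$ are the score and threshold named above. Mirroring the implementation below, a pair involving a zero-norm delta is assigned similarity 0.

$$
\Delta_i = w_i - w_g, \qquad
s_{ij} = \frac{\langle \Delta_i, \Delta_j \rangle}{\lVert \Delta_i \rVert \, \lVert \Delta_j \rVert}, \qquad
\bar{s}_i = \frac{1}{N-1} \sum_{j \neq i} s_{ij}, \qquad
\text{exclude client } i \text{ if } \bar{s}_i < \mathcal{T}.
$$

When benign updates point in roughly the same direction, their $\bar{s}_i$ values sit close together and near the top of the $[-1, 1]$ range. An update pushed in a different direction, as a label-flipped one can be, drags its own average down toward or below zero.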

Note: This is a simple attack mitigation scheme, intended primarily to demonstrate the platform's flexibility in supporting custom aggregation in realistic federated learning scenarios. The same scheme may not be effective in highly challenging cases, such as those with imbalanced datasets or non-IID conditions.

The Python implementation below illustrates the custom aggregator workflow:

- computing each client's update delta (Step 1);
- computing cosine-similarity scores (Steps 2–3);
- excluding the malicious client (Step 4);
- performing weighted federated averaging (Step 5).

Step 1

```python
# --- Compute deltas (client - previous_global) ---
prev_flat = self._flatten_params(previous_global)
flat_deltas, norms = [], []
for params in client_params:
    flat = self._flatten_params(params)
    delta = flat - prev_flat           # direction this client moved the model
    flat_deltas.append(delta)
    norms.append(np.linalg.norm(delta))
```

Steps 2 and 3

```python
# --- Cosine similarity matrix (symmetric, zero diagonal) ---
similarity_matrix = np.zeros((num_clients, num_clients), dtype=float)
for i in range(num_clients):
    for j in range(i + 1, num_clients):
        denom = norms[i] * norms[j]
        sim = float(np.dot(flat_deltas[i], flat_deltas[j]) / denom) if denom > 0 else 0.0
        similarity_matrix[i, j] = sim
        similarity_matrix[j, i] = sim

# --- Average similarity per client (diagonal is 0, so divide by the other n - 1 clients) ---
avg_sim = np.zeros(num_clients, dtype=float)
if num_clients > 1:
    avg_sim = np.sum(similarity_matrix, axis=1) / (num_clients - 1)

for cid, s in zip(client_ids, avg_sim):
    logger.info(f"Round {self.round}: Avg delta-cosine similarity for {cid}: {s:.4f}")
```

Step 4

```python
# --- Mark suspicious clients ---
suspicious_indices = [i for i, s in enumerate(avg_sim) if s < self.similarity_threshold]
suspicious_clients = [client_ids[i] for i in suspicious_indices]
if suspicious_clients:
    logger.warning(f"Round {self.round}: Excluding suspicious clients: {suspicious_clients}")

# --- Keep only non-suspicious clients ---
keep_indices = [i for i in range(num_clients) if i not in suspicious_indices]
if len(keep_indices) < 3:  # safeguard: never aggregate over too few clients
    logger.warning("Too many exclusions, falling back to ALL clients.")
    keep_indices = list(range(num_clients))

kept_client_ids = [client_ids[i] for i in keep_indices]
logger.info(f"Round {self.round}: Clients used for FedAvg: {kept_client_ids}")
```

Step 5

```python
# === Weighted FedAvg ===
weighted_sum = [np.zeros_like(param) for param in previous_global]
total_weight = 0
for i in keep_indices:
    client_id = client_ids[i]
    client_parameters, metadata = client_updates[client_id]
    num_examples = metadata.get("num_examples", 1)  # weight by local dataset size
    total_weight += num_examples
    for j, param in enumerate(client_parameters):
        weighted_sum[j] += param * num_examples

if total_weight == 0:
    logger.error("Aggregation failed: total_weight = 0.")
    return previous_global

new_global = [param / total_weight for param in weighted_sum]
logger.info("Models aggregated with FedAvg + cosine similarity filtering")
return new_global
```

The full code is available on GitHub: https://github.com/sztoor/server_functions_cosine_similarity_example.git
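The fragments above depend on the class context of the full implementation (for example `self._flatten_params`, `self.similarity_threshold`, and `self.round`). For experimenting outside the platform, the following is a self-contained sketch of the same five steps in plain Python, using flat lists instead of NumPy arrays. The function name, its signature, and the `min_clients` parameter are hypothetical. This is not the code from the repository.

```python
import math
from typing import Dict, List, Tuple

# A model is a flat list of floats; an update is (parameters, metadata).
Update = Tuple[List[float], dict]


def cosine_filtered_fedavg(previous_global: List[float],
                           client_updates: Dict[str, Update],
                           threshold: float,
                           min_clients: int = 3) -> Tuple[List[float], List[str]]:
    """Drop clients whose average delta-cosine similarity is below `threshold`,
    then return (weighted FedAvg of the rest, IDs of the clients kept)."""
    ids = list(client_updates)
    n = len(ids)

    # Step 1: each client's delta from the previous global model, plus its L2 norm.
    deltas = [[p - g for p, g in zip(client_updates[c][0], previous_global)] for c in ids]
    norms = [math.sqrt(sum(d * d for d in delta)) for delta in deltas]

    # Steps 2-3: pairwise cosine similarity, averaged over the other n - 1 clients.
    avg_sim = [0.0] * n
    for i in range(n):
        for j in range(i + 1, n):
            denom = norms[i] * norms[j]
            dot = sum(a * b for a, b in zip(deltas[i], deltas[j]))
            sim = dot / denom if denom > 0 else 0.0
            avg_sim[i] += sim
            avg_sim[j] += sim
    if n > 1:
        avg_sim = [s / (n - 1) for s in avg_sim]

    # Step 4: keep clients at or above the threshold; fall back to all if too few remain.
    keep = [i for i in range(n) if avg_sim[i] >= threshold]
    if len(keep) < min_clients:
        keep = list(range(n))
    kept_ids = [ids[i] for i in keep]

    # Step 5: FedAvg weighted by each client's num_examples (default 1).
    weights = {c: client_updates[c][1].get("num_examples", 1) for c in kept_ids}
    total = sum(weights.values())
    if total == 0:
        return list(previous_global), kept_ids
    new_global = [0.0] * len(previous_global)
    for c in kept_ids:
        for k, value in enumerate(client_updates[c][0]):
            new_global[k] += weights[c] * value / total
    return new_global, kept_ids
```

Consider four clients whose updates point in nearly the same direction and a fifth that points the opposite way. A threshold of 0.0 excludes only the fifth client, which is the same pattern as client-6 in the run below.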

Step-by-Step Activation of Server Features

1. Log in to Scaleout Studio and create a new project.

2. Add the Compute Package and Seed Model to the project.

3. Connect clients to Studio by installing Scaleout's client-side libraries via pip (`pip install fedn`). I connected six clients.

Note: For Steps 1–3, please refer to the Quick Start Guide for detailed instructions.

4. Clone the GitHub repository from the link provided above. This gives you two Python files:

   - server_functions.py – contains the cosine similarity–based aggregation scheme.

   - scaleout_start_session.py – connects to Studio, pushes the local server function, and starts a training session based on predefined settings (e.g., 5 rounds, 180-second round timeout).

5. Execute the scaleout_start_session.py script.

For full details about these steps, refer to the official Server Functions Guide.

Results and Discussion

The steps involved in running scaleout_start_session.py are outlined below.

In this setup, six clients were connected to Studio, with client-6 using a deliberately flipped dataset to simulate malicious behavior. The logs from Scaleout Studio provide a detailed view of the Server Function execution and of how the system responds during aggregation.

- Part 1 shows that the server successfully received models from all clients, confirming that communication and model upload were functioning as expected.
- Part 2 presents the similarity score for each client. These scores quantify how closely each client's update aligns with the others, providing a metric for detecting anomalies.

Part 1

```text
2025-10-20 11:35:08 [INFO] Received client selection request.
2025-10-20 11:35:08 [INFO] Clients selected: ['eef3e17f-d498-474c-aafe-f7fa7203e9a9', 'e578482e-86b0-42fc-8e56-e4499e6ca553', '7b4b5238-ff67-4f03-9561-4e16ccd9eee7', '69f6c936-c784-4ab9-afb2-f8ccffe15733', '6ca55527-0fec-4c98-be94-ef3ffb09c872', '07505d04-08a5-4453-ad55-d541e9e4ef57']
2025-10-20 11:35:14 [INFO] Received previous global model
2025-10-20 11:35:14 [INFO] Received metadata
2025-10-20 11:35:14 [INFO] Received client model from client eef3e17f-d498-474c-aafe-f7fa7203e9a9
2025-10-20 11:35:14 [INFO] Received metadata
2025-10-20 11:35:15 [INFO] Received client model from client e578482e-86b0-42fc-8e56-e4499e6ca553
2025-10-20 11:35:15 [INFO] Received metadata
2025-10-20 11:35:15 [INFO] Received client model from client 07505d04-08a5-4453-ad55-d541e9e4ef57
2025-10-20 11:35:15 [INFO] Received metadata
2025-10-20 11:35:15 [INFO] Received client model from client 69f6c936-c784-4ab9-afb2-f8ccffe15733
2025-10-20 11:35:15 [INFO] Received metadata
2025-10-20 11:35:15 [INFO] Received client model from client 6ca55527-0fec-4c98-be94-ef3ffb09c872
2025-10-20 11:35:16 [INFO] Received metadata
2025-10-20 11:35:16 [INFO] Received client model from client 7b4b5238-ff67-4f03-9561-4e16ccd9eee7
```

Part 2

```text
2025-10-20 11:35:16 [INFO] Receieved aggregation request: aggregate
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for eef3e17f-d498-474c-aafe-f7fa7203e9a9: 0.7498
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for e578482e-86b0-42fc-8e56-e4499e6ca553: 0.7531
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for 07505d04-08a5-4453-ad55-d541e9e4ef57: -0.1346
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for 69f6c936-c784-4ab9-afb2-f8ccffe15733: 0.7528
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for 6ca55527-0fec-4c98-be94-ef3ffb09c872: 0.7475
2025-10-20 11:35:16 [INFO] Round 0: Avg delta-cosine similarity for 7b4b5238-ff67-4f03-9561-4e16ccd9eee7: 0.7460
```

Part 3

```text
2025-10-20 11:35:16 ⚠️ [WARNING] Round 0: Excluding suspicious clients: ['07505d04-08a5-4453-ad55-d541e9e4ef57']
```

Part 4

```text
2025-10-20 11:35:16 [INFO] Round 0: Clients used for FedAvg: ['eef3e17f-d498-474c-aafe-f7fa7203e9a9', 'e578482e-86b0-42fc-8e56-e4499e6ca553', '69f6c936-c784-4ab9-afb2-f8ccffe15733', '6ca55527-0fec-4c98-be94-ef3ffb09c872', '7b4b5238-ff67-4f03-9561-4e16ccd9eee7']
2025-10-20 11:35:16 [INFO] Models aggregated with FedAvg + cosine similarity filtering
```

Part 3 shows that client-6 (ID …e4ef57) was identified as malicious, and the warning message indicates that this client is excluded from the aggregation process. The exclusion follows from its average similarity score of -0.1346, by far the lowest among all clients and below the threshold (𝓣) set in the server function. By removing client-6, the aggregation step keeps the global model from being adversely affected by outlier updates. Part 4 then lists the client IDs included in the aggregation, confirming that only the trusted clients contributed to the updated global model.
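The exclusion decision can also be reproduced offline from the similarity log lines. Below is a hypothetical helper, not part of the Scaleout platform, that parses those lines and flags scores below a threshold. The regular expression assumes the `Round {round}: Avg delta-cosine similarity for {client_id}: {score}` message format used by the logger in the code above. The article does not state the value of 𝓣, so any threshold passed in is an assumption.

```python
import re
from typing import Dict, List

# Matches e.g. "Round 0: Avg delta-cosine similarity for <client-id>: 0.7498"
SCORE_RE = re.compile(r"Round (\d+): Avg delta-cosine similarity for ([\w-]+): (-?\d+\.\d+)")


def parse_scores(log_text: str) -> Dict[str, float]:
    """Map each client ID to its logged average similarity score."""
    return {m.group(2): float(m.group(3)) for m in SCORE_RE.finditer(log_text)}


def flag_below(scores: Dict[str, float], threshold: float) -> List[str]:
    """Return the client IDs whose score falls below the (assumed) threshold."""
    return [cid for cid, s in scores.items() if s < threshold]
```

Applied to the six Round 0 lines with an illustrative threshold of 0.5, only `07505d04-08a5-4453-ad55-d541e9e4ef57` (client-6) is flagged. The benign clients all score about 0.75, so any threshold between -0.1346 and 0.746 gives the same result on this run.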

Task Completed

client-6 has been successfully excluded from the aggregation process using the custom aggregation scheme.

In addition to the log messages, the platform provides rich per-client insights into client behavior. These include per-client training loss and accuracy, global model performance metrics, and training times for each client. Another key feature is the model trail, which tracks model updates over time and enables detailed analysis of how individual clients affect the global model. Together, the log sections and supplementary data give a comprehensive view of client contributions. They clearly distinguish the normal clients from the malicious client-6 (ID …e4ef57) and show that the server function mitigates the attack.

(Image created by the author.)

The client-side training accuracy and loss plots show the performance of each client and reveal that client-6 deviates significantly from the others. Including it in the aggregation process would harm the global model's performance.

Summary

This blog post highlights how custom aggregators can address specific needs and enable the development of robust, efficient, and highly accurate models in federated machine learning settings. It also emphasizes the value of leveraging existing building blocks rather than starting entirely from scratch and spending significant time on components that are not central to the core idea.

Federated Learning offers a powerful way to unlock datasets that are difficult or even impossible to centralize in a single location. Studies like this show that while the approach is highly promising, it also requires a careful understanding of the underlying systems. Fortunately, ready-to-use platforms make it easier to explore real-world use cases, experiment with different aggregation schemes, and design solutions tailored to specific needs.


Author's Details:

Salman Toor

CTO and Co-founder, Scaleout.

Associate Professor

Department of Information Technology,

Uppsala University, Sweden

LinkedIn Google Scholar