Microsoft’s GraphRAG implementation was one of the first such systems and introduced many innovative features. It combines an indexing phase, where entities, relationships, and hierarchical communities are extracted and summarized, with advanced query-time capabilities. This approach allows the system to answer broad, thematic questions by leveraging pre-computed entity, relationship, and community summaries, going beyond the document-retrieval limitations of standard RAG systems.

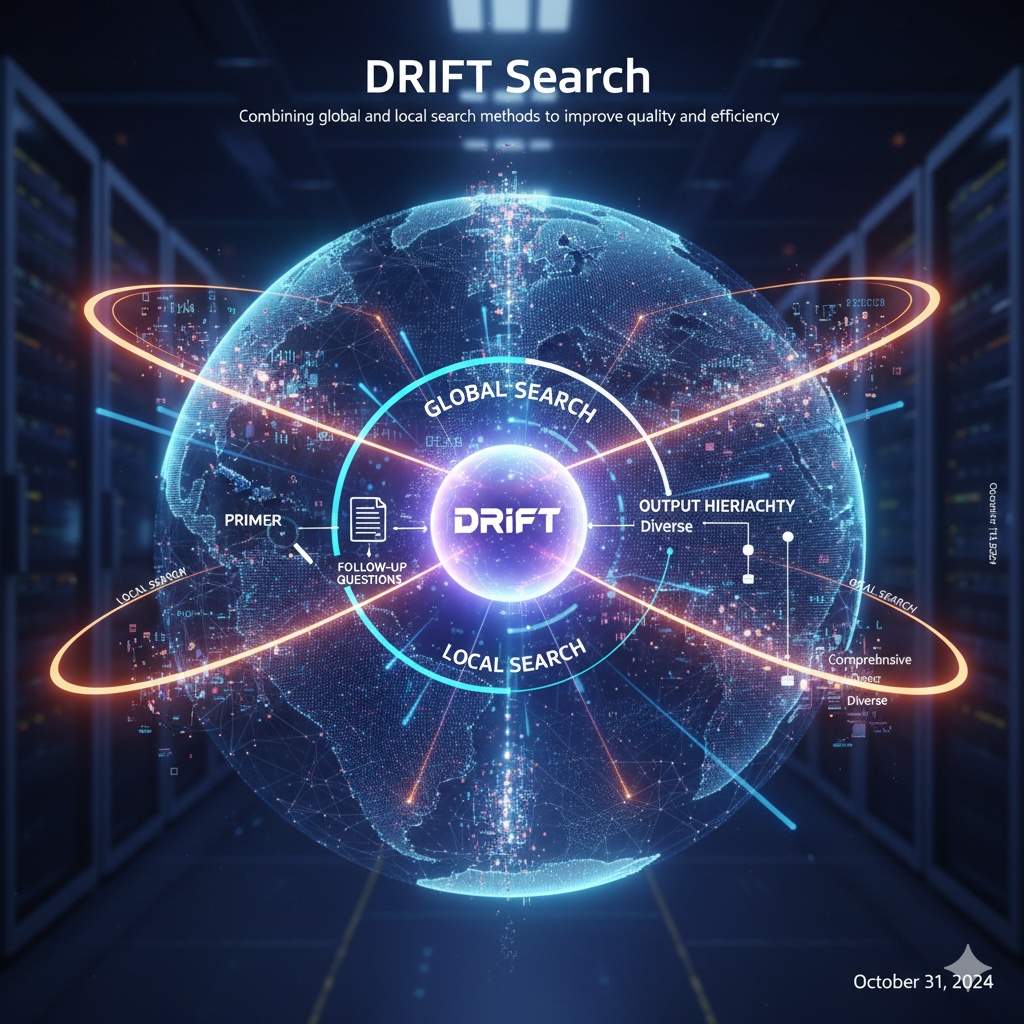

I’ve covered the indexing phase along with the global and local search mechanisms in previous blog posts (here and here), so we’ll skip those details in this discussion. However, we haven’t yet explored DRIFT search, which is the focus of this blog post. DRIFT is a newer approach that combines characteristics of both global and local search. It starts by running a vector search over community information to establish a broad starting point for the query, then uses these community insights to refine the original question into detailed follow-up queries. This allows DRIFT to dynamically traverse the knowledge graph to retrieve specific information about entities, relationships, and other localized details, balancing computational efficiency with comprehensive answer quality.

The implementation uses LlamaIndex workflows to orchestrate the DRIFT search process through several key steps. It begins with HyDE generation, creating a hypothetical answer based on a sample community report to improve the query representation.

The community search step then uses vector similarity to identify the most relevant community reports, providing broad context for the query. The system analyzes these results to generate an initial intermediate answer and a set of follow-up queries for deeper investigation.

These follow-up queries are executed in parallel during the local search phase, retrieving targeted information including text chunks, entities, relationships, and additional community reports from the knowledge graph. This process can iterate up to a maximum depth, with each round potentially spawning new follow-up queries.

Finally, the answer generation step synthesizes all intermediate answers collected throughout the process, combining broad community-level insights with detailed local findings to produce a comprehensive response. This approach balances breadth and depth, starting wide with community context and progressively drilling down into specifics.
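The steps above can be sketched as a small control loop. This is a minimal illustration rather than the actual workflow: `StepResult`, `drift_search`, and the `fake_*` functions are hypothetical names, the LLM-backed steps are replaced by plain callables, and the local searches run sequentially here instead of in parallel.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class StepResult:
    """One intermediate answer plus the follow-up queries it suggests."""
    answer: str
    follow_ups: list[str] = field(default_factory=list)


def drift_search(
    query: str,
    primer: Callable[[str], StepResult],
    local: Callable[[str, str], StepResult],
    reduce: Callable[[str, list[str]], str],
    max_depth: int = 2,
) -> str:
    # Phase 1: broad answer from community reports, plus follow-up queries
    first = primer(query)
    answers = [first.answer]
    pending = first.follow_ups
    # Phase 2: run local searches round by round, up to max_depth rounds
    for _ in range(max_depth):
        if not pending:
            break
        results = [local(query, follow_up) for follow_up in pending]
        answers.extend(r.answer for r in results)
        pending = [q for r in results for q in r.follow_ups]
    # Phase 3: synthesize everything collected so far
    return reduce(query, answers)


# Hypothetical stand-ins for the LLM-backed steps
def fake_primer(query: str) -> StepResult:
    return StepResult("broad", ["q1", "q2"])


def fake_local(query: str, follow_up: str) -> StepResult:
    # Only first-round queries (two characters long) spawn another follow-up
    more = [follow_up + "a"] if len(follow_up) == 2 else []
    return StepResult(f"local:{follow_up}", more)


def fake_reduce(query: str, answers: list[str]) -> str:
    return " | ".join(answers)


print(drift_search("Who is Alice?", fake_primer, fake_local, fake_reduce))
```

Swapping the fakes for real community search, local search, and reduce calls gives the same shape as the workflow described in the rest of this post.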

This is my implementation of DRIFT search, adapted for LlamaIndex workflows and Neo4j. I reverse-engineered the approach by analyzing Microsoft’s GraphRAG code, so there may be some differences from the original implementation.

The code is available on GitHub.

Dataset

For this blog post, we’ll use Alice’s Adventures in Wonderland by Lewis Carroll, a classic text that’s freely available from Project Gutenberg. This richly narrative dataset, with its interconnected characters, locations, and events, is an excellent choice for demonstrating GraphRAG’s capabilities.

Ingestion

For the ingestion process, we’ll reuse the Microsoft GraphRAG indexing implementation I developed for a previous blog post, adapted into a LlamaIndex workflow.

The ingestion pipeline follows the standard GraphRAG approach with three main stages:

    class MSGraphRAGIngestion(Workflow):
        @step
        async def entity_extraction(self, ev: StartEvent) -> EntitySummarization:
            # Split the source text into chunks and extract entities and relationships
            chunks = splitter.split_text(ev.text)
            await ms_graph.extract_nodes_and_rels(chunks, ev.allowed_entities)
            return EntitySummarization()

        @step
        async def entity_summarization(
            self, ev: EntitySummarization
        ) -> CommunitySummarization:
            # Summarize the extracted nodes and relationships
            await ms_graph.summarize_nodes_and_rels()
            return CommunitySummarization()

        @step
        async def community_summarization(
            self, ev: CommunitySummarization
        ) -> CommunityEmbeddings:
            # Build hierarchical community summaries
            await ms_graph.summarize_communities()
            return CommunityEmbeddings()

The workflow extracts entities and relationships from text chunks, generates summaries for both nodes and relationships, and then creates hierarchical community summaries.

After summarization, we generate vector embeddings for both communities and entities to enable similarity search. Here’s the community embedding step:

    @step
    async def community_embeddings(self, ev: CommunityEmbeddings) -> EntityEmbeddings:
        # Fetch all communities from the graph database
        communities = ms_graph.query(
            """
            MATCH (c:__Community__)
            WHERE c.summary IS NOT NULL AND c.rating > $min_community_rating
            RETURN coalesce(c.title, "") + " " + c.summary AS community_description, c.id AS community_id
            """,
            params={"min_community_rating": MIN_COMMUNITY_RATING},
        )
        if communities:
            # Generate vector embeddings from community descriptions
            response = await client.embeddings.create(
                input=[c["community_description"] for c in communities],
                model=TEXT_EMBEDDING_MODEL,
            )
            # Store embeddings in the graph and create vector index
            embeds = [
                {
                    "community_id": community["community_id"],
                    "embedding": embedding.embedding,
                }
                for community, embedding in zip(communities, response.data)
            ]
            ms_graph.query(
                """UNWIND $data as row
                MATCH (c:__Community__ {id: row.community_id})
                CALL db.create.setNodeVectorProperty(c, 'embedding', row.embedding)""",
                params={"data": embeds},
            )
            ms_graph.query(
                "CREATE VECTOR INDEX community IF NOT EXISTS FOR (c:__Community__) ON c.embedding"
            )
        return EntityEmbeddings()

The same process is applied to entity embeddings, creating the vector indexes needed for DRIFT search’s similarity-based retrieval.
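The step above sends every community description in a single embedding request. For larger graphs it may be safer to embed in batches, since embedding APIs typically cap how many inputs one request can carry. Here is a minimal sketch of that idea; `batched`, `embed_rows`, `fake_embed`, and the default batch size are all hypothetical and not part of the original code.

```python
from typing import Callable, Iterator, Sequence


def batched(items: Sequence, size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def embed_rows(
    communities: list[dict],
    embed: Callable[[list[str]], list[list[float]]],
    batch_size: int = 100,  # arbitrary default, tune for your provider
) -> list[dict]:
    """Pair each community id with its embedding, one batch at a time."""
    rows = []
    for batch in batched(communities, batch_size):
        vectors = embed([c["community_description"] for c in batch])
        if len(vectors) != len(batch):
            raise ValueError("embedding count does not match batch size")
        rows.extend(
            {"community_id": c["community_id"], "embedding": v}
            for c, v in zip(batch, vectors)
        )
    return rows


# Stand-in for a real embedding call: one-dimensional "vectors"
def fake_embed(texts: list[str]) -> list[list[float]]:
    return [[float(len(t))] for t in texts]


communities = [
    {"community_id": i, "community_description": "x" * i} for i in range(5)
]
rows = embed_rows(communities, fake_embed, batch_size=2)
print(len(rows), rows[3])
```

The resulting `rows` list has the same shape as the `embeds` payload passed to the `UNWIND $data` query.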

DRIFT search

DRIFT search is an intuitive approach to information retrieval: start by understanding the big picture, then drill down into specifics where needed. Rather than immediately searching for exact matches at the document or entity level, DRIFT first consults community summaries, which are high-level overviews that capture the main themes and topics within the knowledge graph.

Once DRIFT identifies relevant higher-level information, it generates follow-up queries to retrieve precise information about specific entities, relationships, and source documents. This two-phase approach mirrors how humans naturally seek information: we first get oriented with a general overview, then ask targeted questions to fill in the details. By combining the comprehensive coverage of global search with the precision of local search, DRIFT achieves both breadth and depth without the computational expense of processing every community report or document.

Let’s walk through each stage of the implementation.


Community search

DRIFT uses HyDE (Hypothetical Document Embeddings) to improve vector search accuracy. Instead of embedding the user’s query directly, HyDE generates a hypothetical answer first, then uses that for similarity search. This works because hypothetical answers are semantically closer to actual community summaries than raw queries are.
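To make the intuition concrete, here is a toy comparison. It is only a sketch: a bag-of-words cosine similarity stands in for a real embedding model, and the three strings are invented for illustration rather than taken from the dataset or from any LLM output.

```python
import math
from collections import Counter


def bow(text: str) -> Counter:
    """Very rough 'embedding': lowercase word counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Invented examples: a community summary, a raw question, and a
# hypothetical answer of the kind HyDE would ask the LLM to write
summary = "alice follows the white rabbit down the rabbit hole into wonderland"
query = "how does alice get to wonderland"
hypothetical = "alice follows the white rabbit down a hole and arrives in wonderland"

query_sim = cosine(bow(query), bow(summary))
hyde_sim = cosine(bow(hypothetical), bow(summary))
print(f"raw query: {query_sim:.2f}, hypothetical answer: {hyde_sim:.2f}")
```

The hypothetical answer shares far more vocabulary with the summary than the question does, so it scores higher. Real embedding models capture meaning rather than word overlap, but the same effect is what HyDE relies on.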

    @step
    async def hyde_generation(self, ev: StartEvent) -> CommunitySearch:
        # Fetch a community report to use as a template for HyDE generation
        # (the first row returned is used)
        random_community_report = driver.execute_query(
            """
            MATCH (c:__Community__)
            WHERE c.summary IS NOT NULL
            RETURN coalesce(c.title, "") + " " + c.summary AS community_description""",
            result_transformer_=lambda r: r.data(),
        )
        # Generate a hypothetical answer to improve query representation
        hyde = HYDE_PROMPT.format(
            query=ev.query, template=random_community_report[0]["community_description"]
        )
        hyde_response = await client.responses.create(
            model="gpt-5-mini",
            input=[{"role": "user", "content": hyde}],
            reasoning={"effort": "low"},
        )
        return CommunitySearch(query=ev.query, hyde_query=hyde_response.output_text)

Next, we embed the HyDE query and retrieve the top 5 most relevant community reports via vector similarity. We then prompt the LLM to generate an intermediate answer from these reports and identify follow-up queries for deeper investigation. The intermediate answer is stored, and all follow-up queries are dispatched in parallel for the local search phase.

    @step
    async def community_search(self, ctx: Context, ev: CommunitySearch) -> LocalSearch | None:
        # Create embedding from the HyDE-enhanced query
        embedding_response = await client.embeddings.create(
            input=ev.hyde_query, model=TEXT_EMBEDDING_MODEL
        )
        embedding = embedding_response.data[0].embedding
        # Find top 5 most relevant community reports via vector similarity
        community_reports = driver.execute_query(
            """
            CALL db.index.vector.queryNodes('community', 5, $embedding) YIELD node, score
            RETURN 'community-' + node.id AS source_id, node.summary AS community_summary
            """,
            result_transformer_=lambda r: r.data(),
            embedding=embedding,
        )
        # Generate initial answer and identify what more information is needed
        initial_prompt = DRIFT_PRIMER_PROMPT.format(
            query=ev.query, community_reports=community_reports
        )
        initial_response = await client.responses.create(
            model="gpt-5-mini",
            input=[{"role": "user", "content": initial_prompt}],
            reasoning={"effort": "low"},
        )
        response_json = json_repair.loads(initial_response.output_text)
        print(f"Initial intermediate response: {response_json['intermediate_answer']}")
        # Store the initial answer and prepare for parallel local searches
        async with ctx.store.edit_state() as ctx_state:
            ctx_state["intermediate_answers"] = [
                {
                    "intermediate_answer": response_json["intermediate_answer"],
                    "score": response_json["score"],
                }
            ]
            ctx_state["local_search_num"] = len(response_json["follow_up_queries"])
        # Dispatch follow-up queries to run in parallel
        for local_query in response_json["follow_up_queries"]:
            ctx.send_event(LocalSearch(query=ev.query, local_query=local_query))
        return None

This establishes DRIFT’s core approach: start broad with HyDE-enhanced community search, then drill down with targeted follow-up queries.
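The community search step trusts the LLM to return well-formed JSON with `intermediate_answer`, `score`, and `follow_up_queries` keys. A defensive parser can make that assumption explicit. The sketch below uses the standard library’s `json` module as a stand-in for `json_repair`, and `parse_step_response` and `max_follow_ups` are hypothetical names, not part of the original code.

```python
import json


def parse_step_response(raw: str, max_follow_ups: int = 3) -> dict:
    """Normalize an LLM response into the three fields the workflow reads."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        payload = {}
    if not isinstance(payload, dict):
        payload = {}

    answer = payload.get("intermediate_answer")
    answer = answer if isinstance(answer, str) else ""

    try:
        score = float(payload.get("score", 0))
    except (TypeError, ValueError):
        score = 0.0

    follow_ups = payload.get("follow_up_queries")
    if not isinstance(follow_ups, list):
        follow_ups = []
    # Keep only non-empty strings, then cap the list
    follow_ups = [q.strip() for q in follow_ups if isinstance(q, str) and q.strip()]

    return {
        "intermediate_answer": answer,
        "score": score,
        "follow_up_queries": follow_ups[:max_follow_ups],
    }


good = parse_step_response(
    '{"intermediate_answer": "A", "score": "80", '
    '"follow_up_queries": ["q1", " ", 5, "q2", "q3", "q4"]}'
)
bad = parse_step_response("not json")
print(good)
print(bad)
```

An empty `follow_up_queries` list is worth handling explicitly in the workflow, because in that case no `LocalSearch` events are dispatched at all.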

Local search

The local search phase executes follow-up queries in parallel to drill down into specific details. Each query retrieves targeted context through entity-based vector search, then generates an intermediate answer and potentially more follow-up queries.

    @step(num_workers=5)
    async def local_search(self, ev: LocalSearch) -> LocalSearchResults:
        print(f"Running local query: {ev.local_query}")
        # Create embedding for the local query
        response = await client.embeddings.create(
            input=ev.local_query, model=TEXT_EMBEDDING_MODEL
        )
        embedding = response.data[0].embedding
        # Retrieve relevant entities and gather their associated context:
        # - Text chunks where entities are mentioned
        # - Community reports the entities belong to
        # - Relationships between the retrieved entities
        # - Entity descriptions
        local_reports = driver.execute_query(
            """
            CALL db.index.vector.queryNodes('entity', 5, $embedding) YIELD node, score
            WITH collect(node) AS nodes
            WITH
              collect {
                UNWIND nodes as n
                MATCH (n)<-[:MENTIONS]->(c:__Chunk__)
                WITH c, count(distinct n) as freq
                RETURN {chunkText: c.text, source_id: 'chunk-' + c.id}
                ORDER BY freq DESC
                LIMIT 3
              } AS text_mapping,
              collect {
                UNWIND nodes as n
                MATCH (n)-[:IN_COMMUNITY*]->(c:__Community__)
                WHERE c.summary IS NOT NULL
                WITH c, c.rating as rank
                RETURN {summary: c.summary, source_id: 'community-' + c.id}
                ORDER BY rank DESC
                LIMIT 3
              } AS report_mapping,
              collect {
                UNWIND nodes as n
                MATCH (n)-[r:SUMMARIZED_RELATIONSHIP]-(m)
                WHERE m IN nodes
                RETURN {descriptionText: r.summary, source_id: 'relationship-' + n.title + '-' + m.title}
                LIMIT 3
              } as insideRels,
              collect {
                UNWIND nodes as n
                RETURN {descriptionText: n.summary, source_id: 'node-' + n.title}
              } as entities
            RETURN {Chunks: text_mapping, Reports: report_mapping,
                    Relationships: insideRels,
                    Entities: entities} AS output
            """,
            result_transformer_=lambda r: r.data(),
            embedding=embedding,
        )
        # Generate answer based on the retrieved context
        local_prompt = DRIFT_LOCAL_SYSTEM_PROMPT.format(
            response_type=DEFAULT_RESPONSE_TYPE,
            context_data=local_reports,
            global_query=ev.query,
        )
        local_response = await client.responses.create(
            model="gpt-5-mini",
            input=[{"role": "user", "content": local_prompt}],
            reasoning={"effort": "low"},
        )
        response_json = json_repair.loads(local_response.output_text)
        # Limit follow-up queries to prevent exponential growth
        response_json["follow_up_queries"] = response_json["follow_up_queries"][:LOCAL_TOP_K]
        return LocalSearchResults(results=response_json, query=ev.query)

The next step orchestrates the iterative deepening process. It waits for all parallel searches to complete using collect_events, then decides whether to continue drilling down. If the current depth hasn’t reached the maximum (we use max depth=2), it extracts follow-up queries from all results, stores the intermediate answers, and dispatches the next round of parallel searches.
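The waiting behavior can be pictured as a small fan-in buffer: each arriving result is stored, and nothing is released until the expected number has been seen. The class below is a sketch of that pattern only. It is not how LlamaIndex implements `collect_events`, and `FanIn` is a hypothetical name.

```python
from typing import Any, Optional


class FanIn:
    """Buffer items until `expected` of them have arrived."""

    def __init__(self, expected: int) -> None:
        if expected < 1:
            raise ValueError("expected must be at least 1")
        self.expected = expected
        self._buffer: list[Any] = []

    def collect(self, item: Any) -> Optional[list[Any]]:
        # Store the new item; release the whole batch only when it is complete
        self._buffer.append(item)
        if len(self._buffer) < self.expected:
            return None
        ready, self._buffer = self._buffer, []
        return ready


fan_in = FanIn(expected=3)
first = fan_in.collect("result-1")   # still waiting
second = fan_in.collect("result-2")  # still waiting
third = fan_in.collect("result-3")   # batch complete
print(first, second, third)
```

This is why the step returns `None` for most invocations: only the call that completes the batch gets to decide what happens next.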

    @step
    async def local_search_results(
        self, ctx: Context, ev: LocalSearchResults
    ) -> LocalSearch | FinalAnswer | None:
        local_search_num = await ctx.store.get("local_search_num")
        # Wait for all parallel searches to complete
        results = ctx.collect_events(ev, [LocalSearchResults] * local_search_num)
        if results is None:
            return None
        intermediate_results = [
            {
                "intermediate_answer": event.results["response"],
                "score": event.results["score"],
            }
            for event in results
        ]
        current_depth = await ctx.store.get("local_search_depth", default=1)
        query = results[0].query
        follow_up_queries = [
            follow_up
            for event in results
            for follow_up in event.results["follow_up_queries"]
        ]
        # Store this round's answers before branching, so the final round
        # is not dropped when max depth is reached
        async with ctx.store.edit_state() as ctx_state:
            ctx_state["intermediate_answers"].extend(intermediate_results)
            ctx_state["local_search_num"] = len(follow_up_queries)
        # Continue drilling down if we haven't reached max depth
        # and there are follow-up queries left to run
        if current_depth < MAX_LOCAL_SEARCH_DEPTH and follow_up_queries:
            await ctx.store.set("local_search_depth", current_depth + 1)
            # Dispatch next round of searches
            for local_query in follow_up_queries:
                ctx.send_event(LocalSearch(query=query, local_query=local_query))
            return None
        return FinalAnswer(query=query)

This creates an iterative refinement loop where each depth level builds on previous findings. Once max depth is reached, it triggers final answer generation.
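Because every local search can spawn up to `LOCAL_TOP_K` follow-ups, it helps to know the worst case before raising the depth. A rough upper bound, assuming each search returns the maximum number of follow-ups every round, is the initial count times a geometric sum. The function name below is hypothetical and the numbers are illustrative.

```python
def max_local_searches(initial_follow_ups: int, top_k: int, max_depth: int) -> int:
    """Upper bound on local searches across all rounds.

    Round 1 runs `initial_follow_ups` searches; each later round runs at most
    `top_k` times as many searches as the round before it.
    """
    if min(initial_follow_ups, top_k, max_depth) < 0:
        raise ValueError("arguments must be non-negative")
    return initial_follow_ups * sum(top_k ** d for d in range(max_depth))


# Three follow-ups from community search, two follow-ups per local search
print(max_local_searches(3, top_k=2, max_depth=2))
print(max_local_searches(3, top_k=2, max_depth=3))
```

Each extra level multiplies the size of the last round by `top_k`, which is why both the follow-up cap and the depth limit matter for cost.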

Final answer

The final step synthesizes all intermediate answers collected throughout the DRIFT search process into a comprehensive response. This includes the initial answer from community search and all answers generated during the local search iterations.

    @step
    async def final_answer_generation(self, ctx: Context, ev: FinalAnswer) -> StopEvent:
        # Retrieve all intermediate answers collected throughout the search process
        intermediate_answers = await ctx.store.get("intermediate_answers")
        # Synthesize all findings into a comprehensive final response
        answer_prompt = DRIFT_REDUCE_PROMPT.format(
            response_type=DEFAULT_RESPONSE_TYPE,
            context_data=intermediate_answers,
            global_query=ev.query,
        )
        answer_response = await client.responses.create(
            model="gpt-5-mini",
            input=[
                {"role": "developer", "content": answer_prompt},
                {"role": "user", "content": ev.query},
            ],
            reasoning={"effort": "low"},
        )
        return StopEvent(result=answer_response.output_text)

Summary

DRIFT search presents an interesting strategy for balancing the breadth of global search with the precision of local search. By starting with community-level context and progressively drilling down through iterative follow-up queries, it avoids the computational overhead of processing all community reports while still maintaining comprehensive coverage.

However, there’s room for several improvements. The current implementation treats all intermediate answers equally, but filtering based on their confidence scores could improve final answer quality and reduce noise. Similarly, follow-up queries could be ranked by relevance or potential information gain before execution, ensuring the most promising leads are pursued first.
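Score-based filtering could look something like the sketch below. The function name, the threshold, and the sample scores are hypothetical, and the right cutoff depends on the scale your prompts ask the LLM to use.

```python
from typing import Optional


def select_answers(
    intermediate_answers: list[dict],
    min_score: float = 20.0,  # arbitrary cutoff, for illustration only
    limit: Optional[int] = None,
) -> list[dict]:
    """Drop low-scoring answers and return the rest, best first."""
    kept = [
        a for a in intermediate_answers
        if float(a.get("score", 0)) >= min_score
    ]
    kept.sort(key=lambda a: float(a["score"]), reverse=True)
    return kept if limit is None else kept[:limit]


answers = [
    {"intermediate_answer": "a", "score": 10},
    {"intermediate_answer": "b", "score": 90},
    {"intermediate_answer": "c", "score": 40},
]
print([a["intermediate_answer"] for a in select_answers(answers)])
```

The filtered list could then be passed as `context_data` to the reduce prompt in place of the full list.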

Another promising improvement would be introducing a query refinement step that uses an LLM to analyze all generated follow-up queries, grouping similar ones to avoid redundant searches and filtering out queries unlikely to yield useful information. This could significantly reduce the number of local searches while maintaining answer quality.
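As a cheap first pass before involving an LLM, near-duplicate follow-ups can be dropped with a simple lexical check. The sketch below uses Jaccard overlap on word sets, which is a much cruder stand-in for the LLM-based grouping described above. The function names, threshold, and example queries are all hypothetical.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercased alphanumeric word set."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def dedupe_queries(queries: list[str], threshold: float = 0.6) -> list[str]:
    """Keep a query only if it is not too similar to one already kept."""
    kept: list[tuple[str, set[str]]] = []
    for query in queries:
        current = tokens(query)
        if not current:
            continue
        # Jaccard similarity: shared words divided by all words
        if any(len(current & seen) / len(current | seen) >= threshold for _, seen in kept):
            continue
        kept.append((query, current))
    return [query for query, _ in kept]


follow_ups = [
    "Who is the White Rabbit?",
    "who is the white rabbit",
    "What does the Queen of Hearts want?",
    "Who is the White Rabbit really?",
]
print(dedupe_queries(follow_ups))
```

Word overlap misses paraphrases that share few words, so this would complement rather than replace an embedding-based or LLM-based refinement step.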

The full implementation is available on GitHub for those interested in experimenting with these improvements or adapting DRIFT for their own use cases.