OpenAI hasn’t launched an open-weight language mannequin since GPT-2 again in 2019. Six years later, they stunned everybody with two: gpt-oss-120b and the smaller gpt-oss-20b.

Naturally, we wished to know — how do they really carry out?

To search out out, we ran each fashions by our open-source workflow optimization framework, syftr. It evaluates fashions throughout completely different configurations — quick vs. low cost, excessive vs. low accuracy — and contains assist for OpenAI’s new “pondering effort” setting.

In concept, extra pondering ought to imply higher solutions. In follow? Not at all times.

We additionally use syftr to discover questions like “is LLM-as-a-Judge actually working?” and “what workflows perform well across many datasets?”.

Our first outcomes with GPT-OSS would possibly shock you: the very best performer wasn’t the most important mannequin or the deepest thinker.

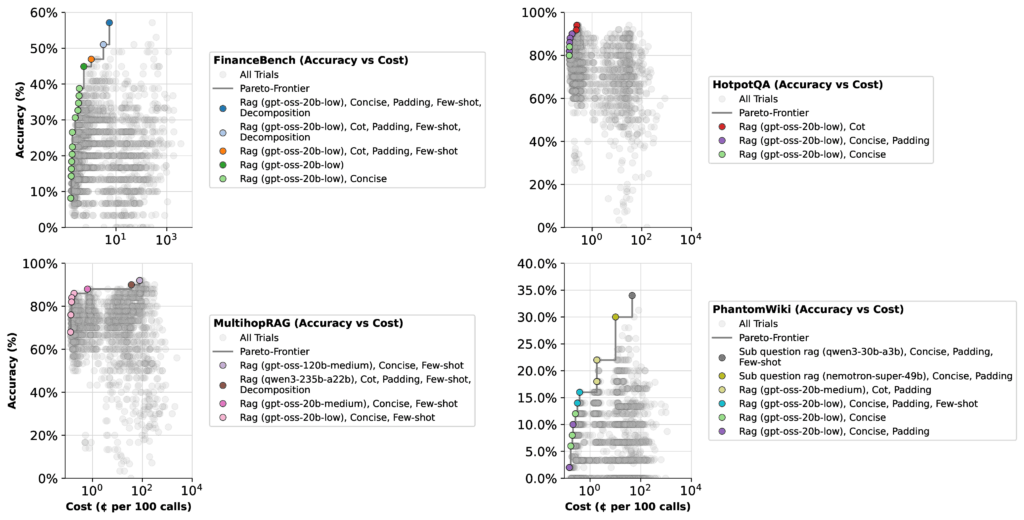

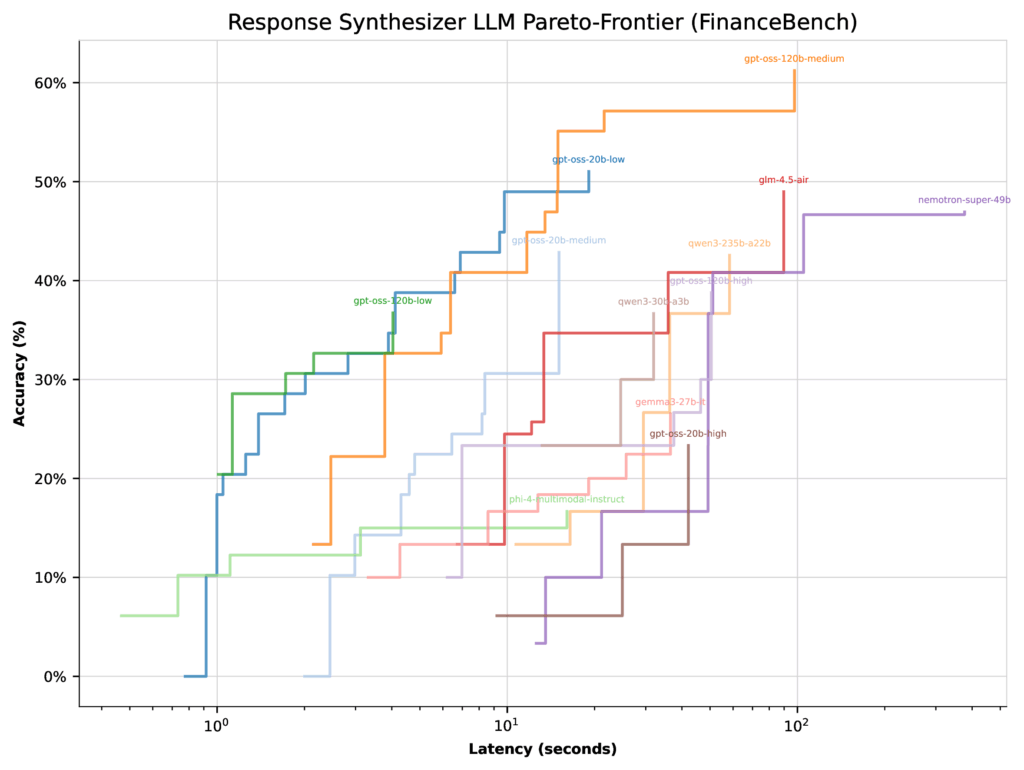

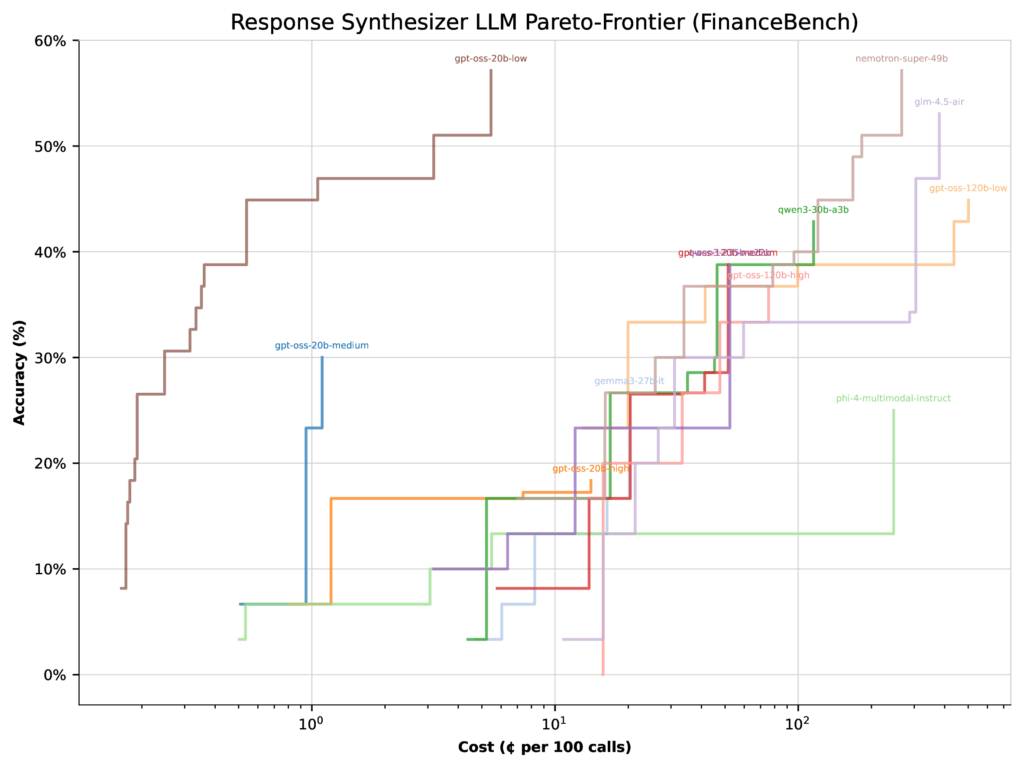

As an alternative, the 20b mannequin with low pondering effort persistently landed on the Pareto frontier, even rivaling the 120b medium configuration on benchmarks like FinanceBench, HotpotQA, and MultihopRAG. In the meantime, excessive pondering effort not often mattered in any respect.

How we arrange our experiments

We didn’t simply pit GPT-OSS in opposition to itself. As an alternative, we wished to see the way it stacked up in opposition to different robust open-weight fashions. So we in contrast gpt-oss-20b and gpt-oss-120b with:

- qwen3-235b-a22b

- glm-4.5-air

- nemotron-super-49b

- qwen3-30b-a3b

- gemma3-27b-it

- phi-4-multimodal-instruct

To check OpenAI’s new “pondering effort” function, we ran every GPT-OSS mannequin in three modes: low, medium, and excessive pondering effort. That gave us six configurations in complete:

- gpt-oss-120b-low / -medium / -high

- gpt-oss-20b-low / -medium / -high

For analysis, we forged a large web: 5 RAG and agent modes, 16 embedding fashions, and a variety of stream configuration choices. To guage mannequin responses, we used GPT-4o-mini and in contrast solutions in opposition to recognized floor reality.

Lastly, we examined throughout 4 datasets:

- FinanceBench (monetary reasoning)

- HotpotQA (multi-hop QA)

- MultihopRAG (retrieval-augmented reasoning)

- PhantomWiki (artificial Q&A pairs)

We optimized workflows twice: as soon as for accuracy + latency, and as soon as for accuracy + value—capturing the tradeoffs that matter most in real-world deployments.

Optimizing for latency, value, and accuracy

Once we optimized the GPT-OSS fashions, we checked out two tradeoffs: accuracy vs. latency and accuracy vs. value. The outcomes have been extra shocking than we anticipated:

- GPT-OSS 20b (low pondering effort):

Quick, cheap, and persistently correct. This setup appeared on the Pareto frontier repeatedly, making it the very best default alternative for many non-scientific duties. In follow, which means faster responses and decrease payments in comparison with increased pondering efforts. - GPT-OSS 120b (medium pondering effort):

Greatest suited to duties that demand deeper reasoning, like monetary benchmarks. Use this when accuracy on advanced issues issues greater than value. - GPT-OSS 120b (excessive pondering effort):

Costly and normally pointless. Hold it in your again pocket for edge circumstances the place different fashions fall brief. For our benchmarks, it didn’t add worth.

Studying the outcomes extra fastidiously

At first look, the outcomes look easy. However there’s an necessary nuance: an LLM’s prime accuracy rating relies upon not simply on the mannequin itself, however on how the optimizer weighs it in opposition to different fashions within the combine. As an instance, let’s have a look at FinanceBench.

When optimizing for latency, all GPT-OSS fashions (besides excessive pondering effort) landed with related Pareto-frontiers. On this case, the optimizer had little cause to focus on the 20b low pondering configuration—its prime accuracy was solely 51%.

When optimizing for value, the image shifts dramatically. The identical 20b low pondering configuration jumps to 57% accuracy, whereas the 120b medium configuration really drops 22%. Why? As a result of the 20b mannequin is much cheaper, so the optimizer shifts extra weight towards it.

The takeaway: Efficiency will depend on context. Optimizers will favor completely different fashions relying on whether or not you’re prioritizing pace, value, or accuracy. And given the massive search house of potential configurations, there could also be even higher setups past those we examined.

Discovering agentic workflows that work properly in your setup

The brand new GPT-OSS fashions carried out strongly in our checks — particularly the 20b with low pondering effort, which regularly outpaced dearer opponents. The larger lesson? Extra mannequin and extra effort doesn’t at all times imply extra accuracy. Typically, paying extra simply will get you much less.

That is precisely why we constructed syftr and made it open-source. Each use case is completely different, and the very best workflow for you will depend on the tradeoffs you care about most. Need decrease prices? Quicker responses? Most accuracy?

Run your own experiments and discover the Pareto candy spot that balances these priorities to your setup.