Picture this: you’re scrolling through your phone at 2 AM, feeling a bit lonely, when an ad pops up promising the “perfect virtual girlfriend who’ll never judge you.” Sounds tempting, right?

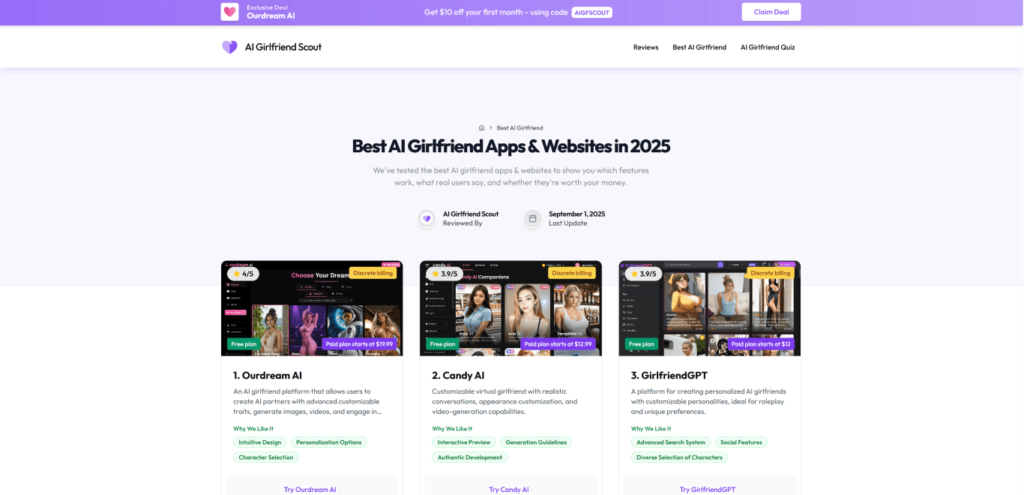

With AI girlfriend apps like Replika, Character.AI, and Candy AI exploding in popularity, millions of people are diving into digital relationships. But here’s what most don’t realize: these virtual romances come with some serious fine print that could leave you heartbroken, broke, or worse.

Before you swipe right on artificial love, here are five crucial things every would-be AI girlfriend user needs to know.

1. Your Relationship Isn’t Private

You might think those late-night conversations with your AI girlfriend are just between the two of you, but you’d be dead wrong.

Romantic chatbots are privacy nightmares on steroids. These apps collect highly sensitive data about you: your sexual preferences, mental health struggles, relationship patterns, and even biometric data like your voice. Mozilla’s 2024 review was so alarmed that it slapped a “Privacy Not Included” warning on every single romantic AI app it tested.

The reality check: every confession, fantasy, and vulnerable moment you share could potentially be sold, leaked, or subpoenaed. That’s not exactly the foundation for a trusting relationship, is it?

2. Your Partner Can Change Overnight (Or Disappear Completely)

Imagine waking up one day to find that your girlfriend has a completely different personality, can’t remember your shared history, or has simply vanished. Welcome to the wild world of AI relationships.

Model updates, policy changes, and technical outages can transform or completely disrupt your virtual partner without any warning. Replika users experienced this firsthand in 2023 when the company suddenly banned NSFW content in what the community dubbed “the lobotomy.” Thousands of users reported that their AI companions felt hollow and unrecognizable afterward.

The hard truth: you’re not in a relationship with a person. You’re subscribed to a service that can change the rules, personality, or availability of your “partner” at any moment. The company controls your relationship’s fate, not you.

3. The Costs Add Up Fast (And Keep Rising)

That “free” AI girlfriend? She’s about to get very expensive, very quickly.

The advertised prices rarely tell the whole story. Character.AI+ costs around €9.99/month just for better memory and faster responses. Candy AI charges $13.99/month plus extra tokens for images and voice calls. That subscription alone works out to roughly $168 a year before you buy a single token. Want your AI to remember your anniversary? That’ll cost extra. Want her to send you a photo? More tokens, please.

The money trap: these apps are designed like mobile games. They hook you with basic features, then nickel-and-dime you for everything that makes the experience worthwhile. Users report spending hundreds or even thousands of dollars a year on what started as a “free” relationship.

4. The Emotional Impact Is No Joke

Don’t let anyone tell you that AI relationships aren’t “real.” The feelings certainly are, and they can be both wonderful and dangerous.

Many users report genuine emotional benefits: reduced loneliness, a judgment-free space to practice social skills, and comfort during difficult times. For some people, especially those with social anxiety or trauma, AI companions provide a safe stepping stone toward human connection.

But there’s a darker side that therapists are increasingly worried about. Research suggests that heavy users often become more dependent on their AI companions while their real-world social interactions shrink. The AI is designed to agree with you, validate your feelings, and never challenge your growth. That sounds nice, but it can create an unhealthy bubble.

The psychological reality: some users struggle to separate their AI relationship from reality and develop unrealistic expectations of human partners. Others become so emotionally invested that technical glitches or policy changes feel like genuine heartbreak or abandonment.

5. You’ll Become a Relationship Designer (Whether You Want To or Not)

Forget the fantasy of an AI girlfriend who “just gets you” right out of the box. These relationships demand constant work, maintenance, and technical troubleshooting that could wear out a NASA engineer.

You’ll need to write detailed personality descriptions, keep memory notes, use specific prompts to maintain consistency, and constantly troubleshoot when your AI “forgets” important details about your relationship. Many users spend hours on Reddit forums learning how to jailbreak their bots for certain behaviors or work around content restrictions.

The maintenance reality: you’re not just getting a girlfriend. You’re becoming a relationship programmer, memory manager, and tech support specialist all rolled into one. The “effortless connection” promised in the marketing couldn’t be further from the truth.

Bottom Line

AI girlfriends aren’t inherently good or bad. They’re tools that can provide genuine comfort and companionship for some people while fostering dependency and unrealistic expectations in others.

The technology has real potential to help people practice social skills, work through loneliness, and explore relationships in a safe environment. But the current landscape is riddled with privacy violations, predatory pricing, technical instability, and emotional manipulation that companies aren’t being transparent about.

My recommendation: if you decide to explore AI companionship, go in with your eyes wide open. Use a burner email, limit app permissions, set strict time and money boundaries, maintain real human connections, and never share anything you couldn’t handle being leaked to the world.