Introduction

In any data pipeline, data sources, particularly databases, are the backbone. To simulate a realistic pipeline, I needed a secure, reliable database environment to serve as the source of truth for downstream ETL jobs.

Rather than provisioning this manually, I chose to automate everything using Terraform, in line with modern data engineering and DevOps best practices. This not only saved time but also ensured the environment could be easily recreated, scaled, or destroyed with a single command, just like in production. If you’re working on the AWS Free Tier, this matters even more: automation lets you clean up everything without forgetting a resource that might generate costs.

Prerequisites

To follow along with this project, you’ll need the following tools and setup:

- Terraform installed: https://developer.hashicorp.com/terraform/install

- AWS CLI & IAM setup

  - Install the AWS CLI

  - Create an IAM user with programmatic access, then:

    - Attach the policy AdministratorAccess (or create a custom policy with limited permissions to create all the resources included)

    - Download the Access Key ID and Secret Access Key

  - Configure the AWS CLI

- An AWS Key Pair

  Required to SSH into the bastion host. You can create one in the AWS Console under EC2 > Key Pairs.

- A Unix-based environment (Linux/macOS, or WSL for Windows)

  This ensures compatibility with the shell script and Terraform commands.
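Before starting, it can help to confirm that the tools listed above are actually on your PATH. The following is a minimal sketch rather than part of the project itself; the function name `missing_tools` is hypothetical.

```shell
# Print the name of every given command that cannot be found on PATH.
# The function name is illustrative only and is not part of the project.
missing_tools() {
  local tool
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || echo "$tool"
  done
}

# Usage: prints nothing when every tool is installed.
# missing_tools terraform aws ssh
```

If the call prints any names, install those tools before running the Terraform commands later in this article.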

Getting Started: What We’re Building

Let’s walk through how to build a secure and automated AWS database setup using Terraform.

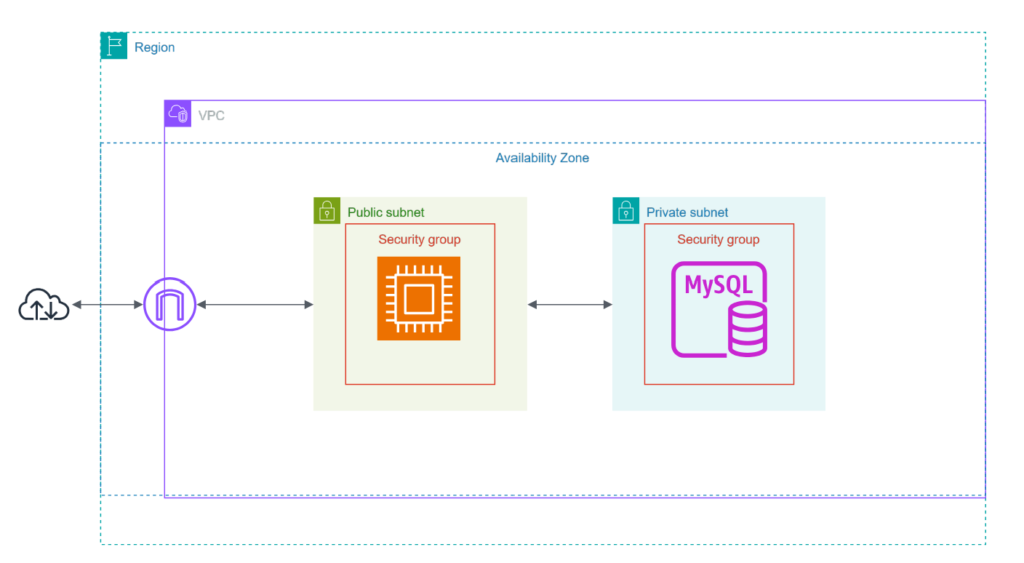

Infrastructure Overview

This project provisions a complete, production-style AWS environment using Terraform. The following resources will be created:

Networking

- A custom VPC with a CIDR block (10.0.0.0/16)

- Two private subnets in different Availability Zones (for the RDS instance)

- One public subnet (for the bastion host)

- Internet Gateway and Route Tables for public subnet routing

- A DB Subnet Group for multi-AZ RDS deployment

Compute

- A bastion EC2 instance in the public subnet

  - Used to SSH into the private subnets and access the database securely

  - Provisioned with a custom security group allowing only port 22 (SSH) access

Database

- A MySQL RDS instance

  - Deployed in private subnets (not accessible from the public internet)

  - Configured with a dedicated security group that allows access only from the bastion host

Security

- Security groups:

  - Bastion SG: allows inbound SSH (port 22) from your IP

  - RDS SG: allows inbound MySQL (port 3306) from the bastion’s SG
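Restricting SSH to "your IP" means the bastion rule needs your current public address as a single-host CIDR block. One way to derive it is sketched below. This is an illustration under assumptions, not the project's own method: the function names and the variable name `TF_VAR_allowed_ssh_cidr` are hypothetical, and the lookup relies on the public `checkip.amazonaws.com` endpoint.

```shell
# Turn a bare IPv4 address into a single-host CIDR block for a security group rule.
to_host_cidr() {
  printf '%s/32\n' "$1"
}

# Look up the public IP of this machine; the endpoint returns it as plain text.
current_public_ip() {
  curl -s https://checkip.amazonaws.com
}

# Usage (TF_VAR_allowed_ssh_cidr is a hypothetical variable name):
# export TF_VAR_allowed_ssh_cidr="$(to_host_cidr "$(current_public_ip)")"
```

If your IP address changes, the rule has to be updated and re-applied before you can SSH into the bastion again.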

Automation

- A setup script (setup.sh) that:

  - Exports Terraform variables

Modular Design With Terraform

I broke the infrastructure into modules: network, bastion, and rds. This lets me reuse, scale, and test different components independently.

The following diagram illustrates how Terraform understands and structures the dependencies between different parts of the infrastructure, where each node represents a resource or module.

This visualization helps confirm that:

- Resources are properly linked (e.g., the RDS instance depends on private subnets),

- Modules are isolated yet interoperable (e.g., network, bastion, and rds),

- There are no circular dependencies.
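A diagram like this can be generated with Terraform's built-in `graph` command, which emits the dependency graph in DOT format. The sketch below assumes Graphviz (the `dot` command) is installed; the function name `render_graph` and the default file name are hypothetical.

```shell
# Render Terraform's dependency graph to an SVG file.
# Requires Graphviz (the `dot` command); run it from the root configuration directory.
render_graph() {
  local out="${1:-graph.svg}"
  terraform graph | dot -Tsvg > "$out"
}

# Usage:
# cd terraform/rds-bastion && render_graph graph.svg
```

Terraform refuses to build a graph that contains a cycle, so a successful render already confirms the last point in the list.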

To maintain the modular configuration described above, I structured the project as follows, with a short explanation of each component’s role within the setup.

.
├── data
│   └── mysqlsampledatabase.sql   # Sample dataset to be imported into the RDS database
├── scripts
│   └── setup.sh                  # Bash script to export environment variables (TF_VAR_*), fetch dynamic values, and upload Glue scripts (optional)
└── terraform
    ├── modules                   # Reusable infrastructure modules
    │   ├── bastion
    │   │   ├── compute.tf        # Defines EC2 instance configuration for the Bastion host
    │   │   ├── network.tf        # Uses data sources to reference existing public subnet and VPC (does not create them)
    │   │   ├── outputs.tf        # Outputs Bastion host public IP address
    │   │   └── variables.tf      # Input variables required by the Bastion module (AMI ID, key pair name, etc.)
    │   ├── network
    │   │   ├── network.tf        # Provisions VPC, public/private subnets, Internet gateway, and route tables
    │   │   ├── outputs.tf        # Exposes VPC ID, subnet IDs, and route table IDs for downstream modules
    │   │   └── variables.tf      # Input variables like CIDR blocks and availability zones
    │   └── rds
    │       ├── network.tf        # Defines DB subnet group using private subnet IDs
    │       ├── outputs.tf        # Outputs RDS endpoint and security group for other modules to consume
    │       ├── rds.tf            # Provisions a MySQL RDS instance inside private subnets
    │       └── variables.tf      # Input variables such as DB name, username, password, and instance size
    └── rds-bastion               # Root Terraform configuration
        ├── backend.tf            # Configures the Terraform backend (e.g., local or remote state file location)
        ├── main.tf               # Top-level orchestrator file that connects and wires up all modules
        ├── outputs.tf            # Consolidates and re-exports outputs from the modules (e.g., Bastion IP, DB endpoint)
        ├── provider.tf           # Defines the AWS provider and required version
        └── variables.tf          # Project-wide variables passed to modules and referenced across files

With the modular structure in place, the main.tf file located in the rds-bastion directory acts as the orchestrator. It ties together the core components: the network, the RDS database, and the bastion host. Each module is invoked with its required inputs, most of which are defined in variables.tf or passed via environment variables (TF_VAR_*).

# Network layer: VPC, subnets, Internet gateway, and route tables
module "network" {
  source                = "../modules/network"
  region                = var.region
  project_name          = var.project_name
  availability_zone_1   = var.availability_zone_1
  availability_zone_2   = var.availability_zone_2
  vpc_cidr              = var.vpc_cidr
  public_subnet_cidr    = var.public_subnet_cidr
  private_subnet_cidr_1 = var.private_subnet_cidr_1
  private_subnet_cidr_2 = var.private_subnet_cidr_2
}

# Bastion host in the public subnet, wired to the network module's outputs
module "bastion" {
  source              = "../modules/bastion"
  region              = var.region
  vpc_id              = module.network.vpc_id
  public_subnet_1     = module.network.public_subnet_id
  availability_zone_1 = var.availability_zone_1
  project_name        = var.project_name
  instance_type       = var.instance_type
  key_name            = var.key_name
  ami_id              = var.ami_id
}

# MySQL RDS instance in the private subnets, reachable only from the bastion's security group
module "rds" {
  source              = "../modules/rds"
  region              = var.region
  project_name        = var.project_name
  vpc_id              = module.network.vpc_id
  private_subnet_1    = module.network.private_subnet_id_1
  private_subnet_2    = module.network.private_subnet_id_2
  availability_zone_1 = var.availability_zone_1
  availability_zone_2 = var.availability_zone_2
  db_name             = var.db_name
  db_username         = var.db_username
  db_password         = var.db_password
  bastion_sg_id       = module.bastion.bastion_sg_id
}

In this modular setup, each infrastructure component is loosely coupled but connected through well-defined inputs and outputs.

For example, after provisioning the VPC and subnets in the network module, I retrieve their IDs through its outputs and pass them as input variables to other modules such as rds and bastion. This avoids hardcoding and lets Terraform resolve dependencies dynamically and build the dependency graph internally.

In some cases (such as within the bastion module), I also use data sources to reference existing resources created by earlier modules, instead of recreating or duplicating them.

The dependency between modules relies on outputs from previously created modules being correctly defined and exposed. These outputs are then passed as input variables to dependent modules, which is how Terraform builds its internal dependency graph and works out the correct creation order.

For example, the network module exposes the VPC ID and subnet IDs in outputs.tf. These values are then consumed by downstream modules such as rds and bastion through the main.tf file of the root configuration.

Below is how this works in practice:

Inside modules/network/outputs.tf:

output "vpc_id" {
  description = "ID of the VPC"
  value       = aws_vpc.main.id
}

Inside modules/bastion/variables.tf:

variable "vpc_id" {
  description = "ID of the VPC"
  type        = string
}

Inside modules/bastion/network.tf:

data "aws_vpc" "main" {
  id = var.vpc_id
}

To provision the RDS instance, I created two private subnets in different Availability Zones, as AWS requires at least two subnets in separate AZs to set up a DB subnet group.

Although I met this requirement for a valid configuration, I disabled Multi-AZ deployment during RDS creation to stay within the AWS Free Tier limits and avoid extra costs. This setup still simulates a production-grade network layout while remaining cost-effective for development and testing.
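Once the instance exists, both settings (Multi-AZ disabled, not publicly accessible) can be checked with the AWS CLI. This is a minimal sketch and not part of the project's repository: the function name is hypothetical, and it assumes the AWS CLI is configured for the region the instance was created in.

```shell
# Print one line per RDS instance in the current region:
# the instance identifier, the Multi-AZ flag, and the publicly-accessible flag.
rds_deployment_summary() {
  aws rds describe-db-instances \
    --query 'DBInstances[].[DBInstanceIdentifier,MultiAZ,PubliclyAccessible]' \
    --output text
}

# Usage:
# rds_deployment_summary
```

For the setup described here, both flags should be reported as false.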

Deployment Workflow

With all the modules properly wired through inputs and outputs, and the infrastructure logic encapsulated in reusable blocks, the next step is to automate the provisioning process. Instead of passing variables manually every time, a helper script, setup.sh, exports the necessary environment variables (TF_VAR_*).

Once the setup script is sourced, deploying the infrastructure becomes as simple as running a few Terraform commands.

source scripts/setup.sh
cd terraform/rds-bastion
terraform init
terraform plan
terraform apply

To streamline the Terraform deployment process, I created a helper script (setup.sh) that automatically exports the required environment variables using the TF_VAR_ naming convention. Terraform automatically picks up variables prefixed with TF_VAR_, so this approach avoids hardcoding values in .tf files or requiring manual input every time.

#!/bin/bash
set -e

# Fill in the project name and region before sourcing this script
export de_project=""
export AWS_DEFAULT_REGION=""

# Define the variables to manage (associative arrays need bash 4 or newer)
declare -A TF_VARS=(
  ["TF_VAR_project_name"]="$de_project"
  ["TF_VAR_region"]="$AWS_DEFAULT_REGION"
  ["TF_VAR_availability_zone_1"]="us-east-1a"
  ["TF_VAR_availability_zone_2"]="us-east-1b"
  ["TF_VAR_ami_id"]=""
  ["TF_VAR_key_name"]=""
  ["TF_VAR_db_username"]=""
  ["TF_VAR_db_password"]=""
  ["TF_VAR_db_name"]=""
)

# Update each export line in ~/.bashrc if it exists, otherwise append it.
# Values are quoted so that spaces do not break the export line.
for var in "${!TF_VARS[@]}"; do
  value="${TF_VARS[$var]}"
  if grep -q "^export $var=" "$HOME/.bashrc"; then
    sed -i "s|^export $var=.*|export $var=\"$value\"|" "$HOME/.bashrc"
  else
    echo "export $var=\"$value\"" >> "$HOME/.bashrc"
  fi
done

# Source updated .bashrc to make changes available immediately in this shell
source "$HOME/.bashrc"
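Several values in the script are left as empty placeholders, so it is worth checking that none of them is still empty before running `terraform plan`. The helper below is a hedged sketch, not part of setup.sh; the function name `empty_tf_vars` is hypothetical. It relies only on the TF_VAR_ convention described above, where the environment variable `TF_VAR_<name>` supplies the Terraform input variable `<name>`.

```shell
# Print each listed TF_VAR_* variable that is unset or empty.
# Arguments are Terraform variable names without the TF_VAR_ prefix.
empty_tf_vars() {
  local name value
  for name in "$@"; do
    eval "value=\${TF_VAR_$name:-}"
    [ -n "$value" ] || echo "TF_VAR_$name"
  done
}

# Usage: prints nothing when every variable has a value.
# empty_tf_vars project_name region ami_id key_name db_username db_password db_name
```

Because the script writes the database password to ~/.bashrc in plain text, this approach is best kept to throwaway development environments.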

After you run terraform apply, Terraform provisions all the defined resources: VPC, subnets, route tables, RDS instance, and Bastion host. Once the process completes successfully, you’ll see output values similar to the following:

Apply complete! Resources: 12 added, 0 changed, 0 destroyed.

Outputs:

bastion_public_ip = "<Bastion EC2 Public IP>"
bastion_sg_id = "<Security Group ID for Bastion Host>"
db_endpoint = "<RDS Endpoint>:3306"
instance_public_dns = "<EC2 Public DNS>"
rds_db_name = "<Database Name>"
vpc_id = "<VPC ID>"
vpc_name = "<VPC Name>"

These outputs are defined in the outputs.tf files of your modules and re-exported in the root module (rds-bastion/outputs.tf). They’re essential for:

- SSH-ing into the Bastion Host

- Connecting securely to the private RDS instance

- Validating resource creation
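Individual outputs can also be read back later with `terraform output`, which avoids copying values by hand. The sketch below builds the SSH command for the bastion from the `bastion_public_ip` output; the function name is hypothetical and the key path is a placeholder.

```shell
# Build the SSH command for the bastion from the bastion_public_ip output.
# Run from terraform/rds-bastion; the key path argument is a placeholder.
bastion_ssh_command() {
  local key_path="$1"
  local ip
  ip="$(terraform output -raw bastion_public_ip)"
  printf 'ssh -i %s ec2-user@%s\n' "$key_path" "$ip"
}

# Usage:
# bastion_ssh_command your-key.pem
```

The `-raw` flag prints the value without quotes, which makes it safe to embed in other commands.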

Connecting to the RDS through Bastion Host and Seeding the Database

Now that the infrastructure is provisioned, the next step is to seed the MySQL database hosted on the RDS instance. Since the database is inside a private subnet, we cannot access it directly from our local machine. Instead, we’ll use the Bastion EC2 instance as a jump host to:

- Transfer the sample dataset (mysqlsampledatabase.sql) to the Bastion.

- Connect from the Bastion to the RDS instance.

- Import the SQL data to initialize the database.

Move two directories up from the Terraform root directory, then read the local SQL file in the data directory and stream its contents to the remote EC2 instance (Bastion) over SSH.

cd ../..
cat data/mysqlsampledatabase.sql | ssh -i your-key.pem ec2-user@<BASTION_PUBLIC_IP> 'cat > ~/mysqlsampledatabase.sql'

Once the dataset is copied to the Bastion EC2 instance, the next step is to SSH into the remote machine:

ssh -i ~/.ssh/new-key.pem ec2-user@<BASTION_PUBLIC_IP>

After connecting, you can use the MySQL client (already installed if you used mariadb105 in your EC2 setup) to import the SQL file into your RDS database:

mysql -h <DATABASE_ENDPOINT> -P 3306 -u <DATABASE_USERNAME> -p < mysqlsampledatabase.sql

Enter the password when prompted.

Once the import is complete, you can connect to the RDS MySQL database again to verify that the database and its tables were successfully created.

Run the following command from within the Bastion host:

mysql -h <DATABASE_ENDPOINT> -P 3306 -u <DATABASE_USERNAME> -p

After entering your password, you can list the available databases and tables.

To ensure the dataset was properly imported into the RDS instance, I ran a simple query against the customers table.

This returned a row from the customers table, confirming that:

- The database and tables were created successfully

- The sample dataset was seeded into the RDS instance

- The Bastion host and private RDS setup are working as intended
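The exact listing and query commands are not reproduced above, so the following is only a sketch of what those verification steps might look like when run non-interactively from the Bastion. The helper name `run_sql` is hypothetical, and `classicmodels` is an assumption about the schema name the sample file creates; check the output of SHOW DATABASES for the actual name.

```shell
# Run one SQL statement against the RDS endpoint from the Bastion host.
# -p makes the client prompt for the password; all arguments are placeholders.
run_sql() {
  local endpoint="$1" user="$2" sql="$3"
  mysql -h "$endpoint" -P 3306 -u "$user" -p -e "$sql"
}

# Usage (classicmodels is an assumed schema name):
# run_sql <DATABASE_ENDPOINT> <DATABASE_USERNAME> 'SHOW DATABASES;'
# run_sql <DATABASE_ENDPOINT> <DATABASE_USERNAME> 'SHOW TABLES FROM classicmodels;'
# run_sql <DATABASE_ENDPOINT> <DATABASE_USERNAME> 'SELECT * FROM classicmodels.customers LIMIT 1;'
```

The same statements can be typed directly at the interactive mysql prompt instead.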

This completes the infrastructure setup and data import process.

Destroying the Infrastructure

Once you’re done testing or demonstrating your setup, it’s important to destroy the AWS resources to avoid unnecessary charges.

Since everything was provisioned using Terraform, tearing down the entire infrastructure is as simple as running one command after navigating to your root configuration directory:

cd terraform/rds-bastion
terraform destroy

Conclusion

In this project, I demonstrated how to provision a secure, production-like database infrastructure using Terraform on AWS. Rather than exposing the database to the public internet, I followed best practices by placing the RDS instance in private subnets, accessible only via a bastion host in a public subnet.

By structuring the project with modular Terraform configurations, I ensured each component (network, database, and bastion host) was loosely coupled, reusable, and easy to manage. I also showed how Terraform’s internal dependency graph handles the orchestration and sequencing of resource creation.

Thanks to infrastructure as code (IaC), the entire environment can be brought up or torn down with a single command, making it ideal for ETL prototyping, data engineering practice, or proof-of-concept pipelines. Most importantly, this automation helps avoid unexpected costs by letting you destroy all resources cleanly once you’re done.

You can find the complete source code, Terraform configuration, and setup scripts in my GitHub repository:

https://github.com/YagmurGULEC/rds-ec2-terraform.git

Feel free to explore the code, clone the repo, and adapt it to your own AWS projects. Contributions, feedback, and stars are always welcome!

What’s Next?

You can extend this setup by:

- Connecting an AWS Glue job to the RDS instance for ETL processing.

- Adding monitoring for your RDS database and EC2 instance.