instruments like dbt make developing SQL information pipelines simple and systematic. However even with the added construction and clearly outlined information fashions, pipelines can nonetheless turn out to be complicated, which makes debugging points and validating adjustments to information fashions troublesome.

The rising complexity of information transformation logic provides rise to the next points:

- Conventional code evaluation processes solely have a look at code adjustments and exclude the information impression of these adjustments.

- Information impression ensuing from code adjustments is tough to hint. In sprawling DAGs with nested dependencies, discovering how and the place information impression happens is extraordinarily time-consuming, or close to unimaginable.

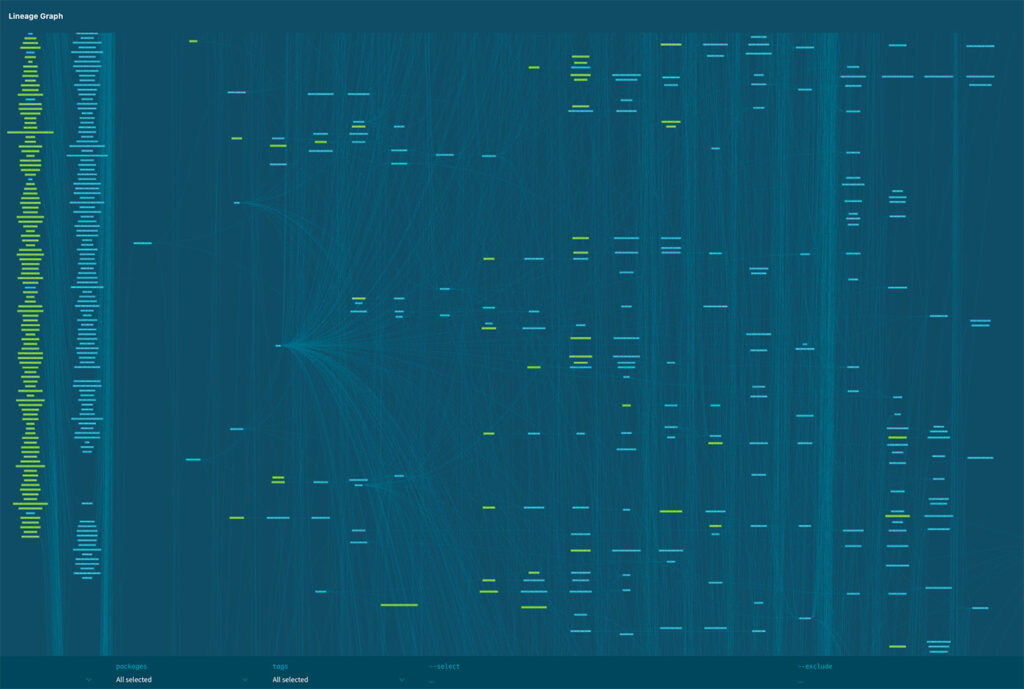

Gitlab’s dbt DAG (proven within the featured picture above) is the proper instance of an information challenge that’s already a house-of-cards. Think about making an attempt to comply with a easy SQL logic change to a column by this complete lineage DAG. Reviewing an information mannequin replace could be a frightening process.

How would you method one of these evaluation?

What’s information validation?

Information validation refers back to the course of used to find out that the information is appropriate by way of real-world necessities. This implies making certain that the SQL logic in an information mannequin behaves as meant by verifying that the information is appropriate. Validation is normally carried out after modifying an information mannequin, akin to accommodating new necessities, or as a part of a refactor.

A singular evaluation problem

Information has states and is straight affected by the transformation used to generate it. That is why reviewing information mannequin adjustments is a novel problem, as a result of each the code and the information must be reviewed.

Because of this, information mannequin updates must be reviewed not just for completeness, but in addition context. In different phrases, that the information is appropriate and present information and metrics weren’t unintentionally altered.

Two extremes of information validation

In most information groups, the individual making the change depends on institutional information, instinct, or previous expertise to evaluate the impression and validate the change.

“I’ve made a change to X, I believe I do know what the impression must be. I’ll test it by operating Y”

The validation technique normally falls into one in every of two extremes, neither of which is good:

- Spot-checking with queries and a few high-level checks like row depend and schema. It’s quick however dangers lacking precise impression. Important and silent errors can go unnoticed.

- Exhaustive checking of each single downstream mannequin. It’s sluggish and useful resource intensive, and will be pricey because the pipeline grows.

This leads to an information evaluation course of that’s unstructured, arduous to repeat, and sometimes introduces silent errors. A brand new technique is required that helps the engineer to carry out exact and focused information validation.

A greater method by understanding information mannequin dependencies

To validate a change to a knowledge challenge, it’s essential to know the connection between fashions and the way information flows by the challenge. These dependencies between fashions inform us how information is handed and remodeled from one mannequin to a different.

Analyze the connection between fashions

As we’ve seen, information challenge DAGs will be large, however an information mannequin change solely impacts a subset of fashions. By isolating this subset after which analyzing the connection between the fashions, you possibly can peel again the layers of complexity and focus simply on the fashions that really want validating, given a particular SQL logic change.

The forms of dependencies in an information challenge are:

Mannequin-to mannequin

A structural dependency wherein columns are chosen from an upstream mannequin.

--- downstream_model

choose

a,

b

from {{ ref("upstream_model") }}Column-to-column

A projection dependency that selects, renames, or transforms an upstream column.

--- downstream_model

choose

a,

b as b2

from {{ ref("upstream_model") }}Mannequin-to-column

A filter dependency wherein a downstream mannequin makes use of an upstream mannequin in a the place, be a part of, or different conditional clause.

-- downstream_model

choose

a

from {{ ref("upstream_model") }}

the place b > 0Understanding the dependencies between fashions helps us to outline the impression radius of an information mannequin logic change.

Establish the impression radius

When making adjustments to a knowledge mannequin’s SQL, it’s essential to know which different fashions could be affected (the fashions you need to test). On the excessive degree, that is executed by model-to-model relationships. This subset of DAG nodes is named the impression radius.

Within the DAG beneath, the impression radius contains nodes B (the modified mannequin) and D (the downstream mannequin). In dbt, these fashions will be recognized utilizing the modified+ selector.

Figuring out modified nodes and downstream is a superb begin, and by isolating adjustments like this you’ll cut back the potential information validation space. Nonetheless, this might nonetheless lead to a lot of downstream fashions.

Classifying the varieties of SQL adjustments can additional aid you to prioritize which fashions really require validation by understanding the severity of the change, eliminating branches with adjustments which might be recognized to be protected.

Classify the SQL change

Not all SQL adjustments carry the identical degree of threat to downstream information, and so must be categorized accordingly. By classifying SQL adjustments this manner, you possibly can add a scientific method to your information evaluation course of.

A SQL change to a knowledge mannequin will be labeled as one of many following:

Non-breaking change

Modifications that don’t impression the information in downstream fashions akin to including new columns, changes to SQL formatting, or including feedback and so forth.

-- Non-breaking change: New column added

choose

id,

class,

created_at,

-- new column

now() as ingestion_time

from {{ ref('a') }}Partial-breaking change

Modifications that solely impression downstream fashions that reference sure columns akin to eradicating or renaming a column; or modifying a column definition.

-- Partial breaking change: `class` column renamed

choose

id,

created_at,

class as event_category

from {{ ref('a') }}Breaking change

Modifications that impression all downstream fashions akin to filtering, sorting, or in any other case altering the construction or which means of the remodeled information.

-- Breaking change: Filtered to exclude information

choose

id,

class,

created_at

from {{ ref('a') }}

the place class != 'inside'Apply classification to cut back scope

After making use of these classifications the impression radius, and the variety of fashions that must be validated, will be considerably diminished.

Within the above DAG, nodes B, C and F have been modified, leading to probably 7 nodes that must be validated (C to E). Nonetheless, not every department accommodates SQL adjustments that really require validation. Let’s check out every department:

Node C: Non-breaking change

C is classed as a non-breaking change. Subsequently each C and H don’t must be checked, they are often eradicated.

Node B: Partial-breaking change

B is classed as a partial-breaking change as a consequence of change to the column B.C1. Subsequently, D and E must be checked solely in the event that they reference column B.C1.

Node F: Breaking change

The modification to mannequin F is classed as a breaking-change. Subsequently, all downstream nodes (G and E) must be checked for impression. As an example, mannequin g may combination information from the modified upstream column

The preliminary 7 nodes have already been diminished to five that must be checked for information impression (B, D, E, F, G). Now, by inspecting the SQL adjustments on the column degree, we will cut back that quantity even additional.

Narrowing the scope additional with column-level lineage

Breaking and non-breaking adjustments are simple to categorise however, in terms of inspecting partial-breaking adjustments, the fashions must be analyzed on the column degree.

Let’s take a more in-depth have a look at the partial-breaking change in mannequin B, wherein the logic of column c1 has been modified. This modification may probably lead to 4 impacted downstream nodes: D, E, Ok, and J. After monitoring column utilization downstream, this subset will be additional diminished.

Following column B.c1 downstream we will see that:

- B.c1 → D.c1 is a column-to-column (projection) dependency.

- D.c1 → E is a model-to-column dependency.

- D → Ok is a model-to-model dependency. Nonetheless, as D.c1 isn’t utilized in Ok, this mannequin will be eradicated.

Subsequently, the fashions that must be validated on this department are B, D, and E. Along with the breaking change F and downstream G, the overall fashions to be validated on this diagram are F, G, B, D, and E, or simply 5 out of a complete of 9 probably impacted fashions.

Conclusion

Information validation after a mannequin change is troublesome, particularly in giant and sophisticated DAGs. It’s simple to overlook silent errors and performing validation turns into a frightening process, with information fashions usually feeling like black packing containers in terms of downstream impression.

A structured and repeatable course of

Through the use of this change-aware information validation method, you possibly can carry construction and precision to the evaluation course of, making it systematic and repeatable. This reduces the variety of fashions that must be checked, simplifies the evaluation course of, and lowers prices by solely validating fashions that really require it.

Earlier than you go…

Dave is a senior technical advocate at Recce, the place we’re constructing a toolkit to allow superior information validation workflows. He’s at all times joyful to speak about SQL, information engineering, or serving to groups navigate their information validation challenges. Join with Dave on LinkedIn.

Analysis for this text was made doable by my colleague Chen En Lu (Popcorny).