I will share how to build an AI journal with LlamaIndex. We will cover one key function of this AI journal: asking for advice. We will start with the most basic implementation and iterate from there. We will see significant improvements for this function when we apply design patterns like Agentic RAG and multi-agent workflows.

You can find the source code of this AI journal in my GitHub repo here.

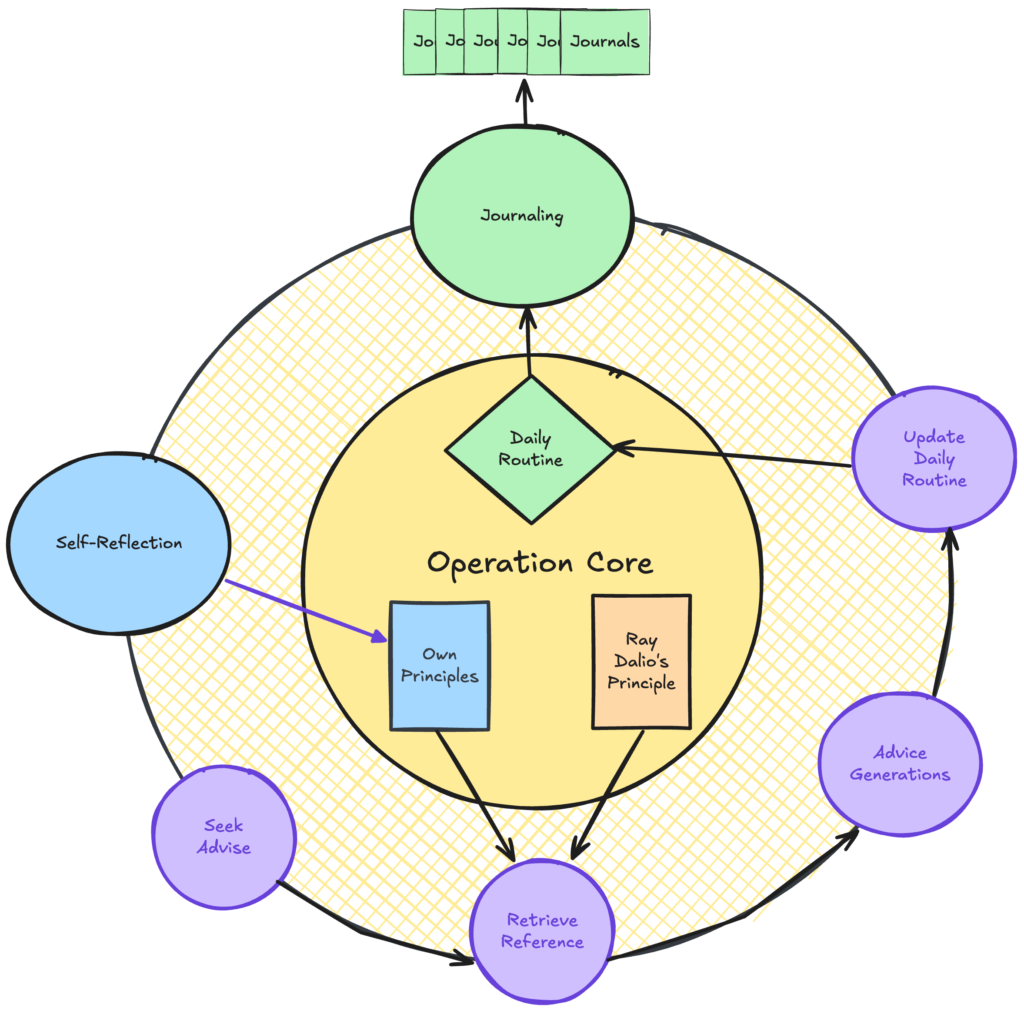

Overview of AI Journal

I want to build my principles by following Ray Dalio’s practice. An AI journal will help me self-reflect, track my improvement, and even give me advice. The overall function of such an AI journal looks like this:

Today, we’ll only cover the implementation of the seek-advice flow, which is represented by several purple circles in the above diagram.

Simplest Form: LLM with Large Context

In the most straightforward implementation, we can pass all the relevant content into the context and attach the question we want to ask. We can do that in LlamaIndex with a few lines of code.

````python
import pymupdf
from llama_index.llms.openai import OpenAI

path_to_pdf_book = './path/to/pdf/book.pdf'


def load_book_content() -> str:
    text = ""
    with pymupdf.open(path_to_pdf_book) as pdf:
        for page in pdf:
            # get_text() already returns a str, so no encode/str() round-trip is needed
            text += page.get_text()
    return text


system_prompt_template = """You are an AI assistant that provides thoughtful, practical, and *deeply personalized* suggestions by combining:
- The user's personal profile and principles
- Insights retrieved from *Principles* by Ray Dalio

Book Content:
```
{book_content}
```

User profile:
```
{user_profile}
```

User's question:
```
{user_question}
```
"""


def get_system_prompt(book_content: str, user_profile: str, user_question: str) -> str:
    return system_prompt_template.format(
        book_content=book_content,
        user_profile=user_profile,
        user_question=user_question,
    )


def chat() -> str:
    # The model name is an example; use whichever OpenAI model you prefer
    llm = OpenAI(model="gpt-4o")
    user_profile = input(">>Tell me about yourself: ").strip()
    user_question = input(">>What do you want to ask: ").strip()
    book_content = load_book_content()
    # The whole book, the profile, and the question go into a single completion call
    response = llm.complete(get_system_prompt(book_content, user_profile, user_question))
    return response.text
````

This approach has downsides:

- Low Precision: Loading the entire book into the context might cause the LLM to lose focus on the user’s question.

- High Cost: Sending significant-sized content in every LLM call means high cost and poor performance.
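To make the cost point concrete, here is a back-of-the-envelope sketch in Python. It assumes the common rule of thumb of roughly four characters per token for English text and takes the price per million input tokens as a parameter; the book length and price used below are hypothetical placeholders, not measurements.

```python
import math


def estimate_tokens(text_length: int, chars_per_token: float = 4.0) -> int:
    """Rough token count from a character count (heuristic, not a real tokenizer)."""
    return math.ceil(text_length / chars_per_token)


def prompt_cost(book_chars: int, question_chars: int, price_per_million_tokens: float) -> float:
    """Estimated input cost of one call that sends the whole book plus the question."""
    tokens = estimate_tokens(book_chars) + estimate_tokens(question_chars)
    return tokens * price_per_million_tokens / 1_000_000


# Hypothetical inputs: a 1,200,000-character book, a 40-character question,
# and a placeholder price of 2.50 per million input tokens.
per_call = prompt_cost(1_200_000, 40, 2.50)
print(f"tokens per call: {estimate_tokens(1_200_000) + estimate_tokens(40)}")
print(f"cost per call: {per_call:.4f}, cost for 100 calls: {per_call * 100:.2f}")
```

Whatever the real numbers are, the book dominates: the question adds only a handful of tokens, while the book’s share is paid again on every call.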

With this approach, if you pass the whole content of Ray Dalio’s Principles book, responses to questions like “How to handle stress?” become very general. Such responses, which did not relate to my question, made me feel that the AI was not listening to me. Even though they cover many important concepts like embracing reality, the 5-step process to get what you want, and being radically open-minded, I would like the advice I get to be more targeted to the question I raised. Let’s see how we can improve it with RAG.

Enhanced Form: Agentic RAG

So, what’s Agentic RAG? Agentic RAG combines dynamic decision-making with data retrieval. In our AI journal, the Agentic RAG flow looks like this:

- Question Evaluation: Poorly framed questions lead to poor query results. The agent evaluates the user’s query and asks clarifying questions if it believes that is necessary.

- Question Rewrite: Rewrite the user’s enquiry to project it onto the indexed content in the semantic space. I found this step essential for improving precision during retrieval. For example, if your knowledge base consists of Q/A pairs and you index the question part to search for answers, rewriting the user’s statement into a proper question will help you find the most relevant content.

- Query Vector Index: Many parameters can be tuned when building such an index, including chunk size, overlap, or a different index type. For simplicity, we are using VectorStoreIndex here, which has a default chunking strategy.

- Filter & Synthesize: Instead of a complex re-ranking process, I explicitly instruct the LLM in the prompt to filter and find the relevant content. I see the LLM picking up the most relevant content, even when that content sometimes has a lower similarity score than others.
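The four steps above can be sketched end to end in plain Python. This is a toy stand-in rather than the LlamaIndex implementation: the passages, stopword list, and helper functions are hypothetical, the “rewrite” is a fixed template instead of an LLM call, and retrieval scores passages by word overlap instead of embeddings. It only shows how evaluation, rewriting, retrieval, and filtering chain together.

```python
from typing import List

# Hypothetical stand-ins for indexed book chunks.
PASSAGES = [
    "Pain plus reflection equals progress.",
    "Embrace reality and deal with it.",
    "Use the five-step process to get what you want in life.",
    "Be radically open-minded when you make decisions.",
]
STOPWORDS = {"a", "an", "and", "at", "be", "for", "how", "i", "in", "it",
             "of", "the", "to", "what", "when", "with", "you", ""}


def words(text: str) -> set:
    """Lower-cased words with basic punctuation and stopwords removed."""
    return {w.strip(".,?!").lower() for w in text.split()} - STOPWORDS


def needs_clarification(question: str) -> bool:
    # Step 1 (evaluation): fewer than two content words is treated as too vague.
    return len(words(question)) < 2


def rewrite_question(question: str) -> List[str]:
    # Step 2 (rewrite): an LLM would write several statements; here we fake two.
    return [question, f"principles for how to {question}"]


def retrieve(statement: str, top_k: int = 2) -> List[str]:
    # Step 3 (query): rank passages by word overlap with the statement.
    ranked = sorted(PASSAGES, key=lambda p: len(words(p) & words(statement)), reverse=True)
    return ranked[:top_k]


def filter_relevant(question: str, candidates: List[str]) -> List[str]:
    # Step 4 (filter): keep unique candidates that share a content word with the question.
    kept: List[str] = []
    for c in candidates:
        if words(c) & words(question) and c not in kept:
            kept.append(c)
    return kept


def agentic_rag(question: str, clarification: str = "") -> List[str]:
    if needs_clarification(question) and not clarification:
        return ["CLARIFY: could you describe the situation in more detail?"]
    full_question = f"{question} {clarification}".strip()
    candidates: List[str] = []
    for statement in rewrite_question(full_question):
        candidates.extend(retrieve(statement))
    return filter_relevant(full_question, candidates)


print(agentic_rag("Stress?"))
print(agentic_rag("Stress?", "pain and reflection"))
print(agentic_rag("How to deal with reality when stressed?"))
```

In the real flow an LLM replaces each helper; the structure (clarify first, search with several rewritten statements, then filter the pooled results) stays the same.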

With this Agentic RAG flow, you can retrieve content that is highly relevant to the user’s questions, producing more targeted advice.

Let’s examine the implementation. With the LlamaIndex SDK, creating and persisting an index in your local directory is straightforward.

```python
from llama_index.core import Document, Settings, StorageContext, VectorStoreIndex, load_index_from_storage
from llama_index.embeddings.openai import OpenAIEmbedding

Settings.embed_model = OpenAIEmbedding(api_key="ak-xxxx")

PERSISTED_INDEX_PATH = "/path/to/the/directory/persist/index/locally"


def create_index(content: str):
    # Wrap the raw text in a Document; VectorStoreIndex chunks and embeds it
    documents = [Document(text=content)]
    vector_index = VectorStoreIndex.from_documents(documents)
    # Write the index to disk so it can be reloaded without re-embedding
    vector_index.storage_context.persist(persist_dir=PERSISTED_INDEX_PATH)


def load_index():
    storage_context = StorageContext.from_defaults(persist_dir=PERSISTED_INDEX_PATH)
    index = load_index_from_storage(storage_context)
    return index
```

Once we have an index, we can create a query engine on top of it. The query engine is a powerful abstraction that allows you to adjust the parameters used during the query (e.g., TOP K) and the synthesis behaviour after content retrieval. In my implementation, I override the response_mode with NO_TEXT because the agent will process the book content returned by the function call and synthesize the final result. Having the query engine synthesize the result before passing it to the agent would be redundant.
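To illustrate what similarity_top_k and ResponseMode.NO_TEXT amount to, here is a dependency-free sketch: split text into overlapping word chunks, score each chunk against the query with cosine similarity over word counts, and return the raw text of the top-k chunks without any synthesis. The chunker, the bag-of-words scoring, and the default values are illustrative assumptions; LlamaIndex scores with embedding vectors and has its own default chunking.

```python
import math
from collections import Counter
from typing import List


def chunk_words(text: str, chunk_size: int = 8, overlap: int = 2) -> List[str]:
    """Split text into chunks of chunk_size words; neighbouring chunks share overlap words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_top_k(chunks: List[str], query: str, top_k: int = 2) -> List[str]:
    """Return the raw text of the top_k most similar chunks; no LLM synthesis step."""
    q = Counter(query.lower().split())
    ranked = sorted(chunks, key=lambda c: cosine(Counter(c.lower().split()), q), reverse=True)
    return ranked[:top_k]


book = "Pain plus reflection equals progress. Embrace reality and deal with it."
chunks = chunk_words(book, chunk_size=5, overlap=1)
print(retrieve_top_k(chunks, "deal with reality", top_k=1))
```

Returning chunk text instead of an answer matches the reasoning above: the agent reads the retrieved passages itself and writes the final answer.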

```python
from llama_index.core import VectorStoreIndex, get_response_synthesizer
from llama_index.core.query_engine import RetrieverQueryEngine
from llama_index.core.response_synthesizers import ResponseMode
from llama_index.core.retrievers import VectorIndexRetriever

TOP_K = 5  # example value; tune it for your content


def _create_query_engine_from_index(index: VectorStoreIndex):
    # configure retriever
    retriever = VectorIndexRetriever(
        index=index,
        similarity_top_k=TOP_K,
    )
    # return the original content without using the LLM to synthesize; the agent evaluates it later
    response_synthesizer = get_response_synthesizer(response_mode=ResponseMode.NO_TEXT)
    # assemble query engine
    query_engine = RetrieverQueryEngine(
        retriever=retriever,
        response_synthesizer=response_synthesizer,
    )
    return query_engine
```

The prompt looks like the following:

```
You are an assistant that helps reframe user questions into clear, concept-driven statements that match
the style and topics of Principles by Ray Dalio, and performs lookups in the Principles book for relevant content.

Background:
Principles teaches structured thinking about life and work decisions.
The key ideas are:
* Radical truth and radical transparency
* Decision-making frameworks
* Embracing mistakes as learning

Task:
- Task 1: Clarify the user's question if needed. Ask follow-up questions to ensure you understand the user's intent.
- Task 2: Rewrite a user's question into a statement that would match how Ray Dalio frames ideas in Principles. Use a formal, logical, neutral tone.
- Task 3: Look up the Principles book with the given rewritten statements. You should provide at least {REWRITE_FACTOR} rewritten versions.
- Task 4: Find the most relevant content from the book as your final answer.
```

Finally, we can build the agent with these functions defined.

```python
from typing import List

from llama_index.core.agent.workflow import FunctionAgent
from llama_index.core.tools import FunctionTool


def get_principle_rag_agent():
    index = load_index()
    query_engine = _create_query_engine_from_index(index)

    def look_up_principle_book(original_question: str, rewrote_statement: List[str]) -> List[str]:
        # original_question is intentionally unused (see the first observation below)
        result = []
        for q in rewrote_statement:
            response = query_engine.query(q)
            # NO_TEXT mode: keep only the raw text of the retrieved nodes
            content = [n.get_content() for n in response.source_nodes]
            result.extend(content)
        return result

    def clarify_question(original_question: str, your_questions_to_user: List[str]) -> str:
        """
        Clarify the user's question if needed. Ask follow-up questions to ensure you understand the user's intent.
        """
        response = ""
        for q in your_questions_to_user:
            print(f"Question: {q}")
            r = input("Response:")
            response += f"Question: {q}\nResponse: {r}\n"
        return response

    tools = [
        FunctionTool.from_defaults(
            fn=look_up_principle_book,
            name="look_up_principle_book",
            description="Look up the Principles book with rewritten queries to get suggestions from Principles by Ray Dalio.",
        ),
        FunctionTool.from_defaults(
            fn=clarify_question,
            name="clarify_question",
            description="Clarify the user's question if needed. Ask follow-up questions to ensure you understand the user's intent.",
        ),
    ]

    agent = FunctionAgent(
        name="principle_reference_loader",
        description="You are a helpful agent that looks up the most relevant content in the Principles book based on the user's question.",
        # QUESTION_REWRITE_PROMPT is the prompt shown above, with {REWRITE_FACTOR} filled in
        system_prompt=QUESTION_REWRITE_PROMPT,
        tools=tools,
    )
    return agent


rag_agent = get_principle_rag_agent()
# Run inside an async function (or a notebook); chat_history holds the conversation so far
response = await rag_agent.run(chat_history=chat_history)
```

There are several observations I made during the implementation:

- One interesting fact I found is that providing an unused parameter, original_question, in the function signature helps. When I did not have such a parameter, the LLM sometimes did not follow the rewrite instruction and passed the original question in the rewrote_statement parameter. Having the original_question parameter somehow emphasizes the rewriting task to the LLM.

- Different LLMs behave quite differently given the same prompt. I found DeepSeek V3 much more reluctant to trigger function calls than models from other providers. This doesn’t necessarily mean it isn’t usable: if a function call should be initiated 90% of the time, it should be part of the workflow instead of being registered as a function call. Also, compared to OpenAI’s models, I found Gemini good at citing the source in the book when it synthesizes the results.

- The more content you load into the context window, the more inference capability the model needs. A smaller model with less inference power is more likely to get lost in a large context.
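One plausible explanation for the original_question effect (a guess, not something verified here) is that tool-calling frameworks describe a function to the model through its signature, so every parameter name appears in the schema the LLM reads. The sketch below builds a simplified, OpenAI-style function schema with Python’s inspect and typing modules; it is an illustration only, not how LlamaIndex’s FunctionTool actually generates its schema.

```python
import inspect
import json
from typing import List, get_args, get_origin, get_type_hints

# Minimal mapping from Python types to JSON-schema type names (illustrative subset).
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


def json_type(annotation) -> dict:
    if get_origin(annotation) is list:
        (item,) = get_args(annotation)
        return {"type": "array", "items": json_type(item)}
    return {"type": _JSON_TYPES.get(annotation, "string")}


def function_schema(fn, description: str) -> dict:
    """Build a simplified function-calling schema from a Python signature."""
    hints = get_type_hints(fn)
    params = inspect.signature(fn).parameters
    properties = {name: json_type(hints.get(name, str)) for name in params}
    required = [name for name, p in params.items() if p.default is inspect.Parameter.empty]
    return {
        "name": fn.__name__,
        "description": description,
        "parameters": {"type": "object", "properties": properties, "required": required},
    }


def look_up_principle_book(original_question: str, rewrote_statement: List[str]) -> List[str]:
    return []  # the body is irrelevant here; only the signature is inspected


print(json.dumps(function_schema(look_up_principle_book, "Look up the Principles book"), indent=2))
```

The schema lists original_question and rewrote_statement side by side as required fields, which may nudge the model to treat them as two different values.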

However, to complete the seek-advice function, you’ll need multiple agents working together instead of a single agent. Let’s talk about how to chain your agents together into workflows.

Final Form: Agent Workflow

Before we start, I recommend this article by Anthropic, Building Effective Agents. The one-line summary of the article is that you should always prioritise building a workflow over a dynamic agent when possible. In LlamaIndex, you can do both: you can create an agent workflow with more automatic routing, or a customized workflow with more explicit control over the transitions between steps. I’ll show an example of each.

Let’s take a look at how you can build a dynamic workflow. Here is a code example.

```python
from llama_index.core.agent.workflow import AgentWorkflow, FunctionAgent

# _interviewer_prompt, _retriever_prompt, _advisor_prompt and tools are defined elsewhere
interviewer = FunctionAgent(
    name="interviewer",
    description="Useful agent to clarify the user's questions.",
    system_prompt=_interviewer_prompt,
    can_handoff_to=["retriever"],
    tools=tools,
)

retriever = FunctionAgent(
    name="retriever",
    description="Useful agent to retrieve the Principles book's content.",
    system_prompt=_retriever_prompt,
    can_handoff_to=["advisor"],
    tools=tools,
)

advisor = FunctionAgent(
    name="advisor",
    description="Useful agent to advise the user.",
    system_prompt=_advisor_prompt,
    can_handoff_to=[],
    tools=tools,
)

workflow = AgentWorkflow(
    agents=[interviewer, advisor, retriever],
    root_agent="interviewer",
)

# run() returns a handler; awaiting it (inside an async function) yields the final result
handler = workflow.run(user_msg="How to handle stress?")
result = await handler
```

It’s dynamic because the agent transition is based on the LLM’s function calls. Under the hood, the LlamaIndex workflow provides the agent descriptions to the LLM as functions. When the LLM triggers such an “agent function call”, LlamaIndex routes to the corresponding next agent for the following step. The previous agent’s output is added to the workflow’s internal state, and the next agent picks up that state as part of the context in its call to the LLM. You can also leverage the state and memory components to manage the workflow’s internal state or load external data (reference the doc here).
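The handoff routing can be imitated with a small loop, which may help when reasoning about how can_handoff_to constrains the flow. In this hypothetical sketch, each agent is a plain Python function that reads a shared state dict and returns its output plus the name of the agent to hand off to, and the loop rejects handoffs outside the agent’s can_handoff_to list. In LlamaIndex the LLM makes the handoff decision through a function call; here the choices are hard-coded.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# An agent reads the shared state and returns (its output, next agent name or None to stop).
AgentFn = Callable[[Dict[str, str]], Tuple[str, Optional[str]]]


@dataclass
class Agent:
    name: str
    run: AgentFn
    can_handoff_to: List[str] = field(default_factory=list)


def run_workflow(agents: List[Agent], root_agent: str, user_msg: str, max_steps: int = 10) -> Dict[str, str]:
    registry = {agent.name: agent for agent in agents}
    state: Dict[str, str] = {"user_msg": user_msg}
    current: Optional[str] = root_agent
    steps = 0
    while current is not None:
        if steps >= max_steps:
            raise RuntimeError("workflow did not finish within max_steps")
        agent = registry[current]
        output, next_agent = agent.run(state)
        # Later agents see earlier outputs through the shared state.
        state[agent.name] = output
        if next_agent is not None and next_agent not in agent.can_handoff_to:
            raise ValueError(f"{agent.name} cannot hand off to {next_agent}")
        current = next_agent
        steps += 1
    return state


agents = [
    Agent("interviewer", lambda s: (f"clarified: {s['user_msg']}", "retriever"), ["retriever"]),
    Agent("retriever", lambda s: ("Embrace reality and deal with it.", "advisor"), ["advisor"]),
    Agent("advisor", lambda s: (f"Advice based on: {s['retriever']}", None)),
]
state = run_workflow(agents, root_agent="interviewer", user_msg="How to handle stress?")
print(state["advisor"])
```

Keeping the allowed handoffs explicit is what stops a confused model from, say, jumping from the advisor back to the interviewer.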

However, as I suggested, you can explicitly control the steps in your workflow to gain more control. With LlamaIndex, this can be done by extending the Workflow class. For example:

```python
from typing import List, Optional

from llama_index.core.workflow import Context, Event, StartEvent, StopEvent, Workflow, step


class ReferenceRetrivalEvent(Event):
    question: str


class Advice(Event):
    principles: List[str]
    profile: dict
    question: str
    book_content: str


class AdviceWorkFlow(Workflow):
    def __init__(self, verbose: bool = False, session_id: Optional[str] = None):
        super().__init__(timeout=None, verbose=verbose)
        # get_workflow_state, get_interviewer_agent, get_principle_rag_agent,
        # get_adviser_agent and _run_agent are helpers from the project repo
        state = get_workflow_state(session_id)
        self.principles = state.load_principle_from_cases()
        self.profile = state.load_profile()
        self.verbose = verbose

    @step
    async def interview(self, ctx: Context, ev: StartEvent) -> ReferenceRetrivalEvent:
        # Step 1: Interviewer agent asks the user questions
        interviewer = get_interviewer_agent()
        question = await _run_agent(interviewer, question=ev.user_msg, verbose=self.verbose)
        return ReferenceRetrivalEvent(question=question)

    @step
    async def retrieve(self, ctx: Context, ev: ReferenceRetrivalEvent) -> Advice:
        # Step 2: RAG agent retrieves relevant content from the book
        rag_agent = get_principle_rag_agent()
        book_content = await _run_agent(rag_agent, question=ev.question, verbose=self.verbose)
        return Advice(principles=self.principles, profile=self.profile,
                      question=ev.question, book_content=book_content)

    @step
    async def advice(self, ctx: Context, ev: Advice) -> StopEvent:
        # Step 3: Adviser agent gives advice based on the user's profile, principles, and book content
        advisor = get_adviser_agent(ev.profile, ev.principles, ev.book_content)
        advice = await _run_agent(advisor, question=ev.question, verbose=self.verbose)
        return StopEvent(result=advice)
```

The specific event type each step returns controls the workflow’s step transitions. For instance, the retrieve step returns an Advice event, which triggers the execution of the advice step. You can also use the Advice event to pass along the information the next step needs.
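The idea that the returned event type picks the next step can be shown without LlamaIndex. This hypothetical mini-engine is not the Workflow internals: it maps each event class to the step that accepts it and keeps dispatching until a StopEvent comes back. The event classes are local stand-ins with fewer fields than the ones above, and the agent calls are replaced with fixed strings.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Tuple, Type


@dataclass
class StartEvent:
    user_msg: str


@dataclass
class ReferenceRetrivalEvent:
    question: str


@dataclass
class Advice:
    question: str
    book_content: str


@dataclass
class StopEvent:
    result: Any


def interview(ev: StartEvent) -> ReferenceRetrivalEvent:
    return ReferenceRetrivalEvent(question=ev.user_msg.strip())


def retrieve(ev: ReferenceRetrivalEvent) -> Advice:
    return Advice(question=ev.question, book_content="Pain plus reflection equals progress.")


def advice(ev: Advice) -> StopEvent:
    return StopEvent(result=f"For '{ev.question}': {ev.book_content}")


# Routing table: the type of the incoming event decides which step runs next.
STEPS: Dict[Type, Callable[[Any], Any]] = {
    StartEvent: interview,
    ReferenceRetrivalEvent: retrieve,
    Advice: advice,
}


def run(user_msg: str) -> Tuple[Any, List[str]]:
    event: Any = StartEvent(user_msg=user_msg)
    trace: List[str] = []
    while not isinstance(event, StopEvent):
        step = STEPS[type(event)]
        trace.append(step.__name__)
        event = step(event)
    return event.result, trace


result, trace = run("How to handle stress?")
print(trace)
print(result)
```

Because routing is driven only by event types, adding a step means adding one event class and one entry in the table, and the order of steps is visible in the type signatures.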

During implementation, if you are annoyed by having to start the workflow over to debug a step in the middle, the context object is essential for failing over the workflow execution. You can store your state in a serialised format and recover your workflow by deserialising it into a context object. Your workflow will then continue executing based on the state instead of starting over.
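The failover idea can also be reduced to plain Python: record which steps have finished and what they produced, serialise that record to JSON, and on restart skip the steps that already have results. This is a hypothetical sketch of the concept, with a checkpoint dict standing in for LlamaIndex’s Context; it does not reproduce the Context serialisation format.

```python
import json
from typing import Callable, Dict, List, Tuple

Step = Tuple[str, Callable[[Dict[str, str]], str]]


def run_with_checkpoint(steps: List[Step], checkpoint: Dict[str, str]) -> Dict[str, str]:
    """Run steps in order, skipping any step whose result is already in the checkpoint.

    The checkpoint is updated in place, so after a failure it still holds
    every result produced before the failing step.
    """
    for name, fn in steps:
        if name in checkpoint:
            continue  # finished in an earlier run; do not repeat it
        checkpoint[name] = fn(checkpoint)
    return checkpoint


# Hypothetical steps; retrieve fails on its first call to simulate a crash.
calls: List[str] = []
retrieve_should_fail = [True]


def interview(state: Dict[str, str]) -> str:
    calls.append("interview")
    return "clarified question"


def retrieve(state: Dict[str, str]) -> str:
    calls.append("retrieve")
    if retrieve_should_fail[0]:
        retrieve_should_fail[0] = False
        raise RuntimeError("retriever timed out")
    return "book passages"


def advise(state: Dict[str, str]) -> str:
    calls.append("advise")
    return f"advice using {state['retrieve']}"


steps: List[Step] = [("interview", interview), ("retrieve", retrieve), ("advise", advise)]
checkpoint: Dict[str, str] = {}
try:
    run_with_checkpoint(steps, checkpoint)
except RuntimeError as err:
    print(f"Error during initial run: {err}")
saved = json.dumps(checkpoint)  # in practice, write this string to a file

# Later: restore the checkpoint and resume; interview is not run again.
result = run_with_checkpoint(steps, json.loads(saved))
print(result["advise"])
print(calls)
```

The interview step runs only once across both attempts, which is the property that saves you from re-answering the interviewer while debugging a later step.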

```python
from llama_index.core.agent.workflow import AgentWorkflow
from llama_index.core.workflow import Context, JsonSerializer

workflow = AgentWorkflow(
    agents=[interviewer, advisor, retriever],
    root_agent="interviewer",
)

# Run inside an async function
handler = workflow.run(user_msg="How to handle stress?")
try:
    result = await handler
except Exception as e:
    print(f"Error during initial run: {e}")

    # Optional: serialise and save the context for debugging
    # (json_dump_and_save and load_failed_dict are helpers that write/read a JSON file)
    ctx_dict = handler.ctx.to_dict(serializer=JsonSerializer())
    json_dump_and_save(ctx_dict)

    # Resume from the same context
    ctx_dict = load_failed_dict()
    restored_ctx = Context.from_dict(workflow, ctx_dict, serializer=JsonSerializer())
    handler = workflow.run(ctx=restored_ctx)
    result = await handler
```

Summary

In this post, we have discussed how to use LlamaIndex to implement an AI journal’s core function. The key learnings include:

- Using Agentic RAG to leverage the LLM’s ability to dynamically rewrite the original query and synthesize the result.

- Using a customized workflow to gain more explicit control over step transitions, and building dynamic agents when necessary.

The source code of this AI journal is in my GitHub repo here. I hope you enjoy this article and the small app I built. Cheers!