Models have undeniably revolutionized how many of us approach coding, but they’re often more like a super-powered intern than a seasoned architect. Errors, bugs and hallucinations happen all the time, and it might even happen that the code runs well but… it’s not doing exactly what we wanted.

Now, imagine an AI that doesn’t just write code based on what it’s seen, but actively evolves it. At first glance, this means you increase the chances of getting the right code written; however, it goes far beyond that: Google showed that it can also use this methodology to discover new algorithms that are faster, more efficient, and sometimes entirely new.

I’m talking about AlphaEvolve, the recent bombshell from Google DeepMind. Let me say it again: it isn’t just another code generator, but rather a system that generates and evolves code, allowing it to discover new algorithms. Powered by Google’s formidable Gemini models (which I intend to cover soon, because I’m amazed at their power!), AlphaEvolve could revolutionize how we approach coding, mathematics, algorithm design, and, why not, data analysis itself.

How Does AlphaEvolve ‘Evolve’ Code?

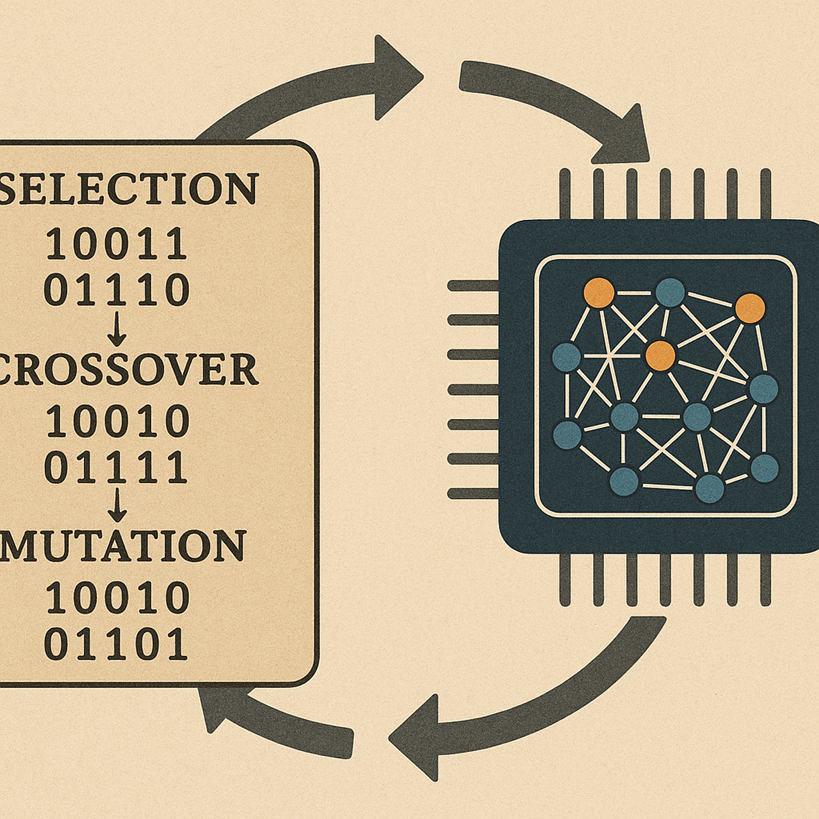

Think of it like natural selection, but for software. That is, think about genetic algorithms, which have existed in data science, numerical methods and computational mathematics for decades. In short, instead of starting from scratch every time, AlphaEvolve takes an initial piece of code (possibly a “skeleton” provided by a human, with specific areas marked for improvement) and then runs an iterative process of refinement on it.
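To make the “skeleton” idea concrete, here is a minimal sketch of a program with one region marked as open to change, plus helpers to pull that region out and swap in a new version. The marker comments (`# EVOLVE-START`, `# EVOLVE-END`) and the function names are my own illustrative assumptions; the text above only says that areas are marked for improvement, not how.

```python
# Hypothetical marker comments, chosen only for this illustration.
START, END = "# EVOLVE-START", "# EVOLVE-END"

# A human-written skeleton: only the lines between the markers may change.
SKELETON = '''\
def rank_items(items):
    # EVOLVE-START
    ranked = sorted(items)
    # EVOLVE-END
    return ranked
'''

def split_skeleton(source):
    """Split source lines into (before, block, after) around the markers."""
    lines = source.splitlines()
    start = next(i for i, ln in enumerate(lines) if ln.strip() == START)
    end = next(i for i, ln in enumerate(lines) if ln.strip() == END)
    return lines[:start + 1], lines[start + 1:end], lines[end:]

def replace_block(source, new_lines):
    """Return a new program with the marked block replaced by new_lines."""
    before, _, after = split_skeleton(source)
    return "\n".join(before + new_lines + after) + "\n"

# A "mutation" of the marked region; everything else stays untouched.
variant = replace_block(SKELETON, ["    ranked = sorted(items, reverse=True)"])
print(variant)
```

The point of the design is that the fixed parts (function signature, return value) stay stable, so every variant can be run and scored in the same way.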

Let me summarize here the procedure detailed in DeepMind’s white paper:

Intelligent prompting: AlphaEvolve is “smart” enough to craft its own prompts for the underlying Gemini LLM. These prompts instruct Gemini to act like a world-class expert in a specific domain, armed with context from previous attempts, including the points that seemed to work correctly and those that were clear failures. This is where the massive context windows of models like Gemini (you can even run up to a million tokens in Google’s AI Studio) come into play.

Creative mutation: The LLM then generates a diverse pool of “candidate” solutions, that is, variations and mutations of the original code that explore different approaches to solving the given problem. This closely parallels the inner workings of regular genetic algorithms.

Survival of the fittest: Again as in genetic algorithms, candidate solutions are automatically compiled, run, and rigorously evaluated against predefined metrics.

Breeding of the top programs: The best-performing solutions are selected and become the “parents” for the next generation, just like in genetic algorithms. The successful traits of the parent programs are fed back into the prompting mechanism.

Repeat (to evolve): This cycle (generate, test, select, learn) repeats, and with each iteration AlphaEvolve explores the vast search space of potential programs, gradually homing in on solutions that are better and better while purging those that fail. The longer you let it run (what the researchers call “test-time compute”), the more refined and optimized the solutions can become.
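The cycle above can be sketched as a toy evolutionary loop. This is only an illustration under strong assumptions, not AlphaEvolve’s actual implementation: a “program” here is just a pair of coefficients `(a, b)` for `f(x) = a*x + b`, the metric is the error against a made-up target line, and `propose_variants` uses random perturbation as a stand-in for the LLM (no Gemini API is called). All function names and parameters are hypothetical.

```python
import random

def evaluate(candidate):
    """Score a candidate; higher is better (negative squared error).

    Assumption for this toy: the metric compares a*x + b with a made-up
    target, 3*x + 2. A real system would run actual programs here.
    """
    a, b = candidate
    return -sum((a * x + b - (3 * x + 2)) ** 2 for x in range(-5, 6))

def propose_variants(parents, rng, n_children):
    """Stand-in for the LLM: randomly perturb randomly chosen parents."""
    children = []
    for _ in range(n_children):
        a, b = rng.choice(parents)
        children.append((a + rng.gauss(0, 0.5), b + rng.gauss(0, 0.5)))
    return children

def evolve(generations=200, n_parents=5, n_children=20, seed=0):
    """Generate, test, select, repeat; return the best candidate found."""
    rng = random.Random(seed)
    population = [(0.0, 0.0)]  # the initial "skeleton" solution
    for _ in range(generations):
        # Generate: parents stay in the pool, so the best is never lost.
        candidates = population + propose_variants(population, rng, n_children)
        # Test: rank every candidate by the predefined metric.
        candidates.sort(key=evaluate, reverse=True)
        # Select: the top performers become the next generation's parents.
        population = candidates[:n_parents]
    return population[0]

best = evolve()
print("best candidate:", best, "score:", evaluate(best))
```

Running more generations plays the role of spending more “test-time compute”: because parents are kept in the pool, the best score can only stay the same or improve from one generation to the next.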

Building on Previous Attempts

AlphaEvolve is the successor to earlier Google projects like AlphaCode (which tackled competitive programming) and, more directly, FunSearch. FunSearch was a fascinating proof of concept that showed how LLMs could discover new mathematical insights by evolving small Python functions.

AlphaEvolve took that concept and “injected it with steroids”. I mean this for several reasons…

First, thanks to Gemini’s huge token window, AlphaEvolve can grapple with entire codebases, hundreds of lines long, not just tiny functions as in early tests like FunSearch. Second, like other LLMs, Gemini has seen vast amounts of code in dozens of programming languages; hence it has covered a wider variety of tasks (as different languages are typically used more in some domains than in others) and has become a kind of polyglot programmer.

Note that with smarter LLMs as engines, AlphaEvolve can itself evolve to become faster and more efficient in its search for solutions and optimal programs.

AlphaEvolve’s Mind-Blowing Results on Real-World Problems

Here are the most interesting applications presented in the white paper:

- Optimizing efficiency at Google’s data centers: AlphaEvolve discovered a new scheduling heuristic that squeezed out a 0.7% saving in Google’s computing resources. This may look small, but at Google’s scale it means a substantial ecological and economic saving!

- Designing better AI chips: AlphaEvolve could simplify some of the complex circuits within Google’s TPUs, specifically for the matrix multiplication operations that are the lifeblood of modern AI. This improves calculation speeds and again contributes to lower ecological and economic costs.

- Faster AI training: AlphaEvolve even turned its optimization gaze inward, by accelerating a matrix multiplication library used in training the very Gemini models that power it! This means a modest but meaningful reduction in AI training times and, again, lower ecological and economic costs!

- Numerical methods: In a kind of validation test, AlphaEvolve was set loose on over 50 notoriously tricky open problems in mathematics. In around 75% of them, it independently rediscovered the best-known human solutions!

Towards Self-Improving AI?

One of the most profound implications of tools like AlphaEvolve is the “virtuous cycle” by which AI could improve AI models themselves. Moreover, more efficient models and hardware make AlphaEvolve itself more powerful, enabling it to discover even deeper optimizations. That’s a feedback loop that could dramatically accelerate AI progress, and lead who knows where. In a sense, this is using AI to make AI better, faster, and smarter: a genuine step on the path towards more powerful and perhaps general artificial intelligence.

Leaving aside this reflection, which quickly gets close to the realm of science fiction, the point is that for a vast class of problems in science, engineering, and computation, AlphaEvolve could represent a paradigm shift. As a computational chemist and biologist, I myself use tools based on LLMs and reasoning AI systems to assist my work, write and debug programs, test them, analyze data more rapidly, and more. With what DeepMind has now presented, it becomes even clearer that we are approaching a future where AI doesn’t just execute human instructions but becomes a creative partner in discovery and innovation.

For some months now we’ve been moving from AI that completes our code to AI that creates it almost entirely, and tools like AlphaEvolve will push us towards a time when AI simply sits down to crack problems with (or for!) us, writing and evolving code to reach optimal and possibly entirely unexpected solutions. No doubt the next few years are going to be wild.

References and Related Reads

www.lucianoabriata.com I write about everything that lies in my broad sphere of interests: nature, science, technology, programming, etc. Subscribe to get my new stories by email.